Provision

What Will You Do

In this part, you will provision an Amazon EKS cluster using Custom Networking.

Update Cluster Specification

- Open a suitable YAML editor and copy/paste the example EKS cluster specification provided below.

- Save the file, for example as "custom-networking-demo.yaml".

Cluster Spec Explained

In the example cluster spec provided,

- The name of the EKS cluster will be "custom-networking-demo" and it is configured to be provisioned in the "defaultproject" in your Org.

- The EKS cluster is configured with one managed node group.

- Three ENIConfigs will be created, one for each availability zone (AZ) in use.
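
To make the "one ENIConfig per AZ" point concrete, here is a minimal Python sketch of what those three resources might look like, using the AZ-to-subnet pairs from the example spec. The `ENIConfig` kind and its `crd.k8s.amazonaws.com/v1alpha1` API group come from the AWS VPC CNI. Naming each resource after its AZ is a common AWS convention and is an assumption here, as are the helper name `eni_config` and the omission of security groups. The resources the controller actually creates may differ.

```python
import json

# AZ-to-subnet pairs taken from the example cluster spec.
AZ_SUBNETS = {
    "us-west-2a": "subnet-081ff5e370607fafa",
    "us-west-2c": "subnet-0d336d3350d55a986",
    "us-west-2d": "subnet-0a4548dabae4b34cb",
}


def eni_config(az, subnet_id):
    """Build one ENIConfig manifest as a plain dict (hypothetical helper, illustrative only)."""
    return {
        "apiVersion": "crd.k8s.amazonaws.com/v1alpha1",
        "kind": "ENIConfig",
        # Assumption: the resource is named after the AZ it serves.
        "metadata": {"name": az},
        "spec": {"subnet": subnet_id},
    }


manifests = [eni_config(az, subnet) for az, subnet in AZ_SUBNETS.items()]

# Show the first manifest as JSON; the other two differ only in name and subnet.
print(json.dumps(manifests[0], indent=2))
```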

The following items in the declarative cluster specification will need to be updated/customized for your environment.

- cluster name: "custom-networking-demo"

- project: "defaultproject"

- cloudCredentials: "my-cloud-credential"

- region: "us-west-2"

- Subnet IDs

- AWS Tags as required in your AWS account

```yaml
apiVersion: infra.k8smgmt.io/v3
kind: Cluster
metadata:
  # The name of the cluster
  name: custom-networking-demo
  # The name of the project the cluster will be created in
  project: defaultproject
spec:
  blueprintConfig:
    # The name of the blueprint the cluster will use
    name: minimal
    # The version of the blueprint the cluster will use
    version: latest
  # The name of the cloud credential that will be used to create the cluster
  cloudCredentials: my-cloud-credential
  config:
    # The EKS addons that will be applied to the cluster
    addons:
    - name: kube-proxy
      version: latest
    - name: vpc-cni
      version: latest
    - name: coredns
      version: latest
    managedNodeGroups:
      # The AWS AMI family type the nodes will use
    - amiFamily: AmazonLinux2
      # The desired number of nodes that can run in the node group
      desiredCapacity: 1
      iam:
        withAddonPolicies:
          # Enables the IAM policy for cluster autoscaler
          autoScaler: true
      # The AWS EC2 instance type that will be used for the nodes
      instanceType: t3.large
      # The maximum number of nodes that can run in the node group
      maxSize: 6
      # The minimum number of nodes that can run in the node group
      minSize: 1
      # The name of the node group that will be created in AWS
      name: my-ng
      # Enable private networking for the nodegroup
      privateNetworking: true
      # The size in gigabytes of the volume attached to each node
      volumeSize: 80
      # The type of disk backing the node volume. Valid variants are: "gp2" (General Purpose SSD), "gp3" (General Purpose SSD that can be optimised for high throughput; the default), "io1" (Provisioned IOPS SSD), "sc1" (Cold HDD), and "st1" (Throughput Optimized HDD).
      volumeType: gp3
    metadata:
      # The name of the cluster
      name: custom-networking-demo
      # The AWS region the cluster will be created in
      region: us-west-2
      # The tags that will be applied to the AWS cluster resources
      tags:
        email: [email protected]
        env: qa
      # The Kubernetes version that will be installed on the cluster
      version: latest
    network:
      cni:
        name: aws-cni
        params:
          # Set the existing VPC subnets the AWS CNI will use
          customCniCrdSpec:
            us-west-2a:
            - subnet: subnet-081ff5e370607fafa
            us-west-2c:
            - subnet: subnet-0d336d3350d55a986
            us-west-2d:
            - subnet: subnet-0a4548dabae4b34cb
    vpc:
      clusterEndpoints:
        # Enables private access to the Kubernetes API server endpoints
        privateAccess: true
        # Enables public access to the Kubernetes API server endpoints
        publicAccess: false
      subnets:
        private:
          # The existing private subnets in the VPC the cluster will use
          subnet-083bf5944d5ecb3dd:
            id: subnet-083bf5944d5ecb3dd
          subnet-0bce0fb4a1f682e13:
            id: subnet-0bce0fb4a1f682e13
          subnet-0f4534f41b98dd7be:
            id: subnet-0f4534f41b98dd7be
        # The existing public subnets in the VPC the cluster will use
        public:
          subnet-0238aec96d29bc809:
            id: subnet-0238aec96d29bc809
          subnet-0ad39284a3ed57cfe:
            id: subnet-0ad39284a3ed57cfe
          subnet-0fb450e17506bd15d:
            id: subnet-0fb450e17506bd15d
  proxyConfig: {}
  type: aws-eks
```
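
Because the region and subnet IDs must be customized by hand, a quick sanity check before applying the spec can catch copy/paste slips. The sketch below is not part of rctl. The function name `check_custom_cni` is hypothetical, and the validation rules are assumptions: a standard AZ name is taken to be the region name plus one lowercase letter, and a subnet ID is taken to be "subnet-" followed by 8 or 17 hexadecimal characters. It checks a plain dict that mirrors the `customCniCrdSpec` section rather than parsing the YAML file.

```python
import re

# Assumed ID shape: "subnet-" followed by 8 or 17 hexadecimal characters.
SUBNET_ID = re.compile(r"subnet-(?:[0-9a-f]{8}|[0-9a-f]{17})")


def check_custom_cni(region, custom_cni_crd_spec):
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    for az, entries in custom_cni_crd_spec.items():
        # Assumption: a standard AZ name is the region name plus one lowercase letter.
        suffix = az[len(region):]
        if not (az.startswith(region) and len(suffix) == 1 and suffix.islower()):
            problems.append(f"{az}: not an availability zone of {region}")
        for entry in entries:
            subnet = entry.get("subnet", "")
            if not SUBNET_ID.fullmatch(subnet):
                problems.append(f"{az}: malformed subnet ID {subnet!r}")
    return problems


# Values copied from the customCniCrdSpec section of the example spec.
example = {
    "us-west-2a": [{"subnet": "subnet-081ff5e370607fafa"}],
    "us-west-2c": [{"subnet": "subnet-0d336d3350d55a986"}],
    "us-west-2d": [{"subnet": "subnet-0a4548dabae4b34cb"}],
}

print(check_custom_cni("us-west-2", example))  # prints []
```

A check like this only validates the shape of the values. It cannot confirm that the subnets exist in your VPC or actually sit in the listed AZs.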

Provision EKS Cluster

- Type the command below to provision the EKS cluster:

```
rctl apply -f custom-networking-demo.yaml
```

If there are no errors, the command returns a "Task ID" (the "taskset_id" field in the example response below) that you can use to check progress and status. This step creates infrastructure in your AWS account and can take approximately 20-30 minutes to complete.

```json
{
  "taskset_id": "pkvgygk",
  "operations": [
    {
      "operation": "ClusterCreation",
      "resource_name": "custom-networking-demo",
      "status": "PROVISION_TASK_STATUS_PENDING"
    },
    {
      "operation": "NodegroupCreation",
      "resource_name": "my-ng",
      "status": "PROVISION_TASK_STATUS_PENDING"
    },
    {
      "operation": "BlueprintSync",
      "resource_name": "custom-networking-demo",
      "status": "PROVISION_TASK_STATUS_PENDING"
    }
  ],
  "comments": "The status of the operations can be fetched using taskset_id",
  "status": "PROVISION_TASKSET_STATUS_PENDING"
}
```
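
If you capture this response in a script, it can be condensed into a one-line-per-operation summary. The sketch below is a minimal illustration using only Python's standard `json` module. The helper name `summarize` is hypothetical, the field names are the ones shown in the example response, and only the pending status values seen above are used; no other status values are assumed.

```python
import json

# Response text copied from the example output above.
RESPONSE_TEXT = """
{
  "taskset_id": "pkvgygk",
  "operations": [
    {"operation": "ClusterCreation", "resource_name": "custom-networking-demo",
     "status": "PROVISION_TASK_STATUS_PENDING"},
    {"operation": "NodegroupCreation", "resource_name": "my-ng",
     "status": "PROVISION_TASK_STATUS_PENDING"},
    {"operation": "BlueprintSync", "resource_name": "custom-networking-demo",
     "status": "PROVISION_TASK_STATUS_PENDING"}
  ],
  "comments": "The status of the operations can be fetched using taskset_id",
  "status": "PROVISION_TASKSET_STATUS_PENDING"
}
"""


def summarize(taskset):
    """Return one header line for the task set plus one line per operation."""
    lines = [f"taskset {taskset['taskset_id']}: {taskset['status']}"]
    for op in taskset["operations"]:
        lines.append(f"  {op['operation']} ({op['resource_name']}): {op['status']}")
    return lines


for line in summarize(json.loads(RESPONSE_TEXT)):
    print(line)
```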

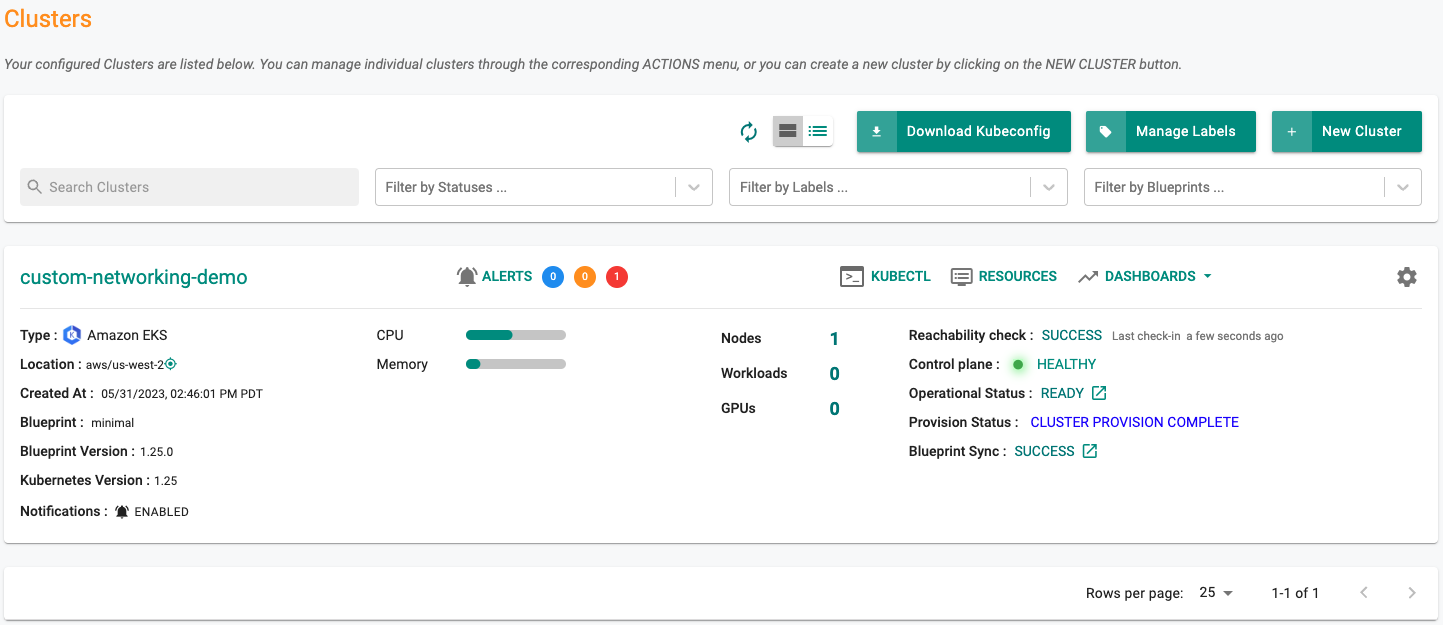

- Navigate to the specified "project" in your Org

- Click on Infrastructure -> Clusters.

Provisioning can take approximately 30 minutes to complete. Once it finishes, you should see a healthy cluster in the project in your Org.