Release - April 2024

v2.5 - Self Hosted

30 Apr, 2024

This release introduces:

- Fresh controller installation for EKS, GKE, and airgapped controllers.

- Upgrade path from version 2.4 to 2.5 for GKE, EKS, and airgapped controllers.

The section below provides a brief description of the new functionality and enhancements in the v2.5 controller release.

Amazon EKS

Enhanced Cloud Error Messaging for Cluster Provisioning

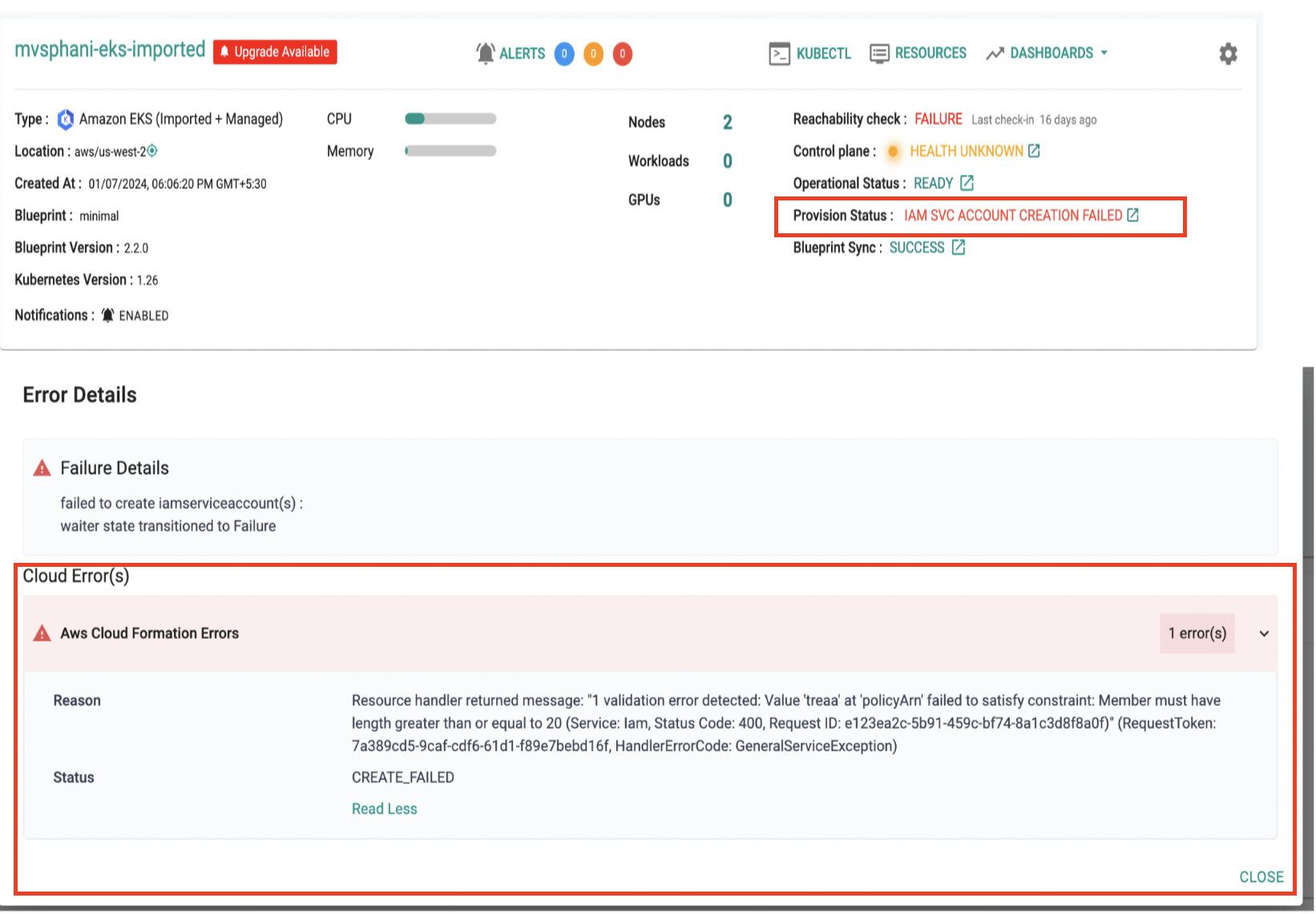

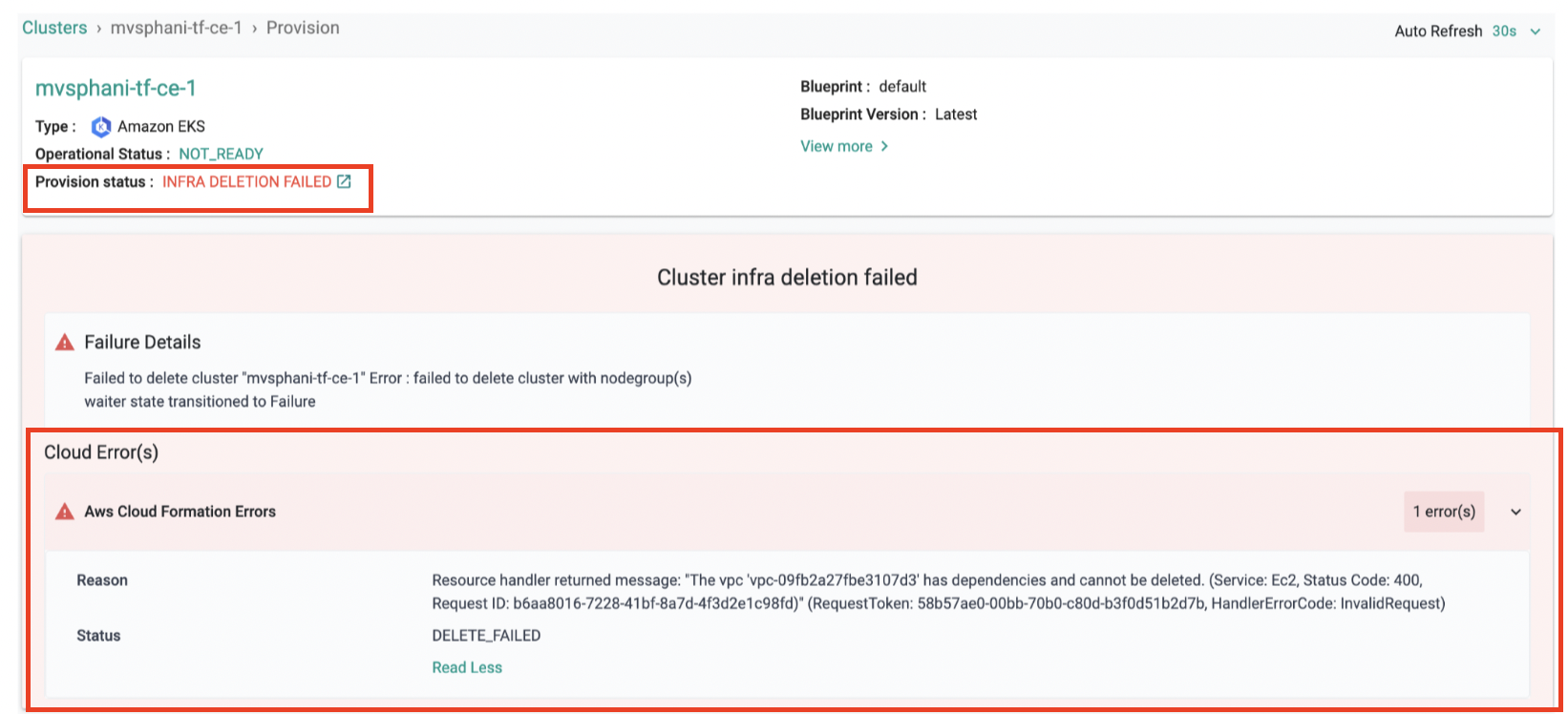

We have enhanced the cluster lifecycle management (LCM) experience by providing more detailed error messages. If a failure occurs during provisioning, you will receive real-time feedback from both CloudFormation and cluster events. This improved visibility pinpoints the exact source of the issue, allowing you to troubleshoot and resolve problems more efficiently.

Examples

- When an invalid policy ARN is configured during the creation of an IAM Service Account, here is how the failure will be displayed

- If a cluster deletion fails due to a specific reason, this is how it will be displayed

Migrating EKS Add-ons to Managed Add-ons

This enhancement streamlines add-on management. Here's what you need to know:

- The kube-proxy, coredns, and vpc-cni add-ons are now mandatory during cluster provisioning

- For existing clusters, creating add-ons (Day 2) will automatically convert existing self-managed add-ons to managed add-ons

- During cluster upgrades, any essential self-managed add-ons that are not already converted to managed add-ons will be automatically migrated

Considerations for RCTL and Terraform Users:

RCTL and Terraform users, please take note of the following steps:

- RCTL Users:

    - After this managed add-on migration, the cluster configuration file will include the managed add-on configuration. To ensure that your cluster configuration reflects these changes, please download the latest configuration using either the user interface (UI) or the RCTL tool.

- Terraform Users:

    - Execute the command `terraform apply --refresh-only` to update the state, ensuring it reflects the correct state post migration with managed add-on information. This will synchronize the state file.

    - Use `terraform show` to output the resource file, ensuring it aligns with the new state file with managed add-on information.

Important

- Users must ensure that the action `DescribeAddonConfiguration` is included in the IAM role policy used in the EKS cluster as we migrate self-managed add-ons. This action is necessary to retrieve add-on configurations and is required to compare and port configurations during migration.

- Add-ons will only be updated if set to the latest version; no action will take place if they are pinned to a specific version during a cluster upgrade.
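As an illustration of the IAM requirement above, the following minimal sketch builds a policy statement that allows the `DescribeAddonConfiguration` action. The `eks:` service prefix, the `Sid`, the `"Resource": "*"` scope, and the helper name `addon_migration_policy` are assumptions made for this example; adapt them to the role policy actually used by your cluster.

```python
import json


def addon_migration_policy() -> dict:
    """Return an IAM policy document allowing DescribeAddonConfiguration.

    Assumptions (not from the release notes): the "eks:" action prefix,
    the Sid value, and the wildcard resource scope.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowDescribeAddonConfiguration",
                "Effect": "Allow",
                "Action": ["eks:DescribeAddonConfiguration"],
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON so it can be reviewed before being merged
    # into an existing role policy.
    print(json.dumps(addon_migration_policy(), indent=2))
```

The statement is meant to be merged into the existing role policy rather than to replace it.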

Azure AKS

Applicable for controllers with signed certificates only

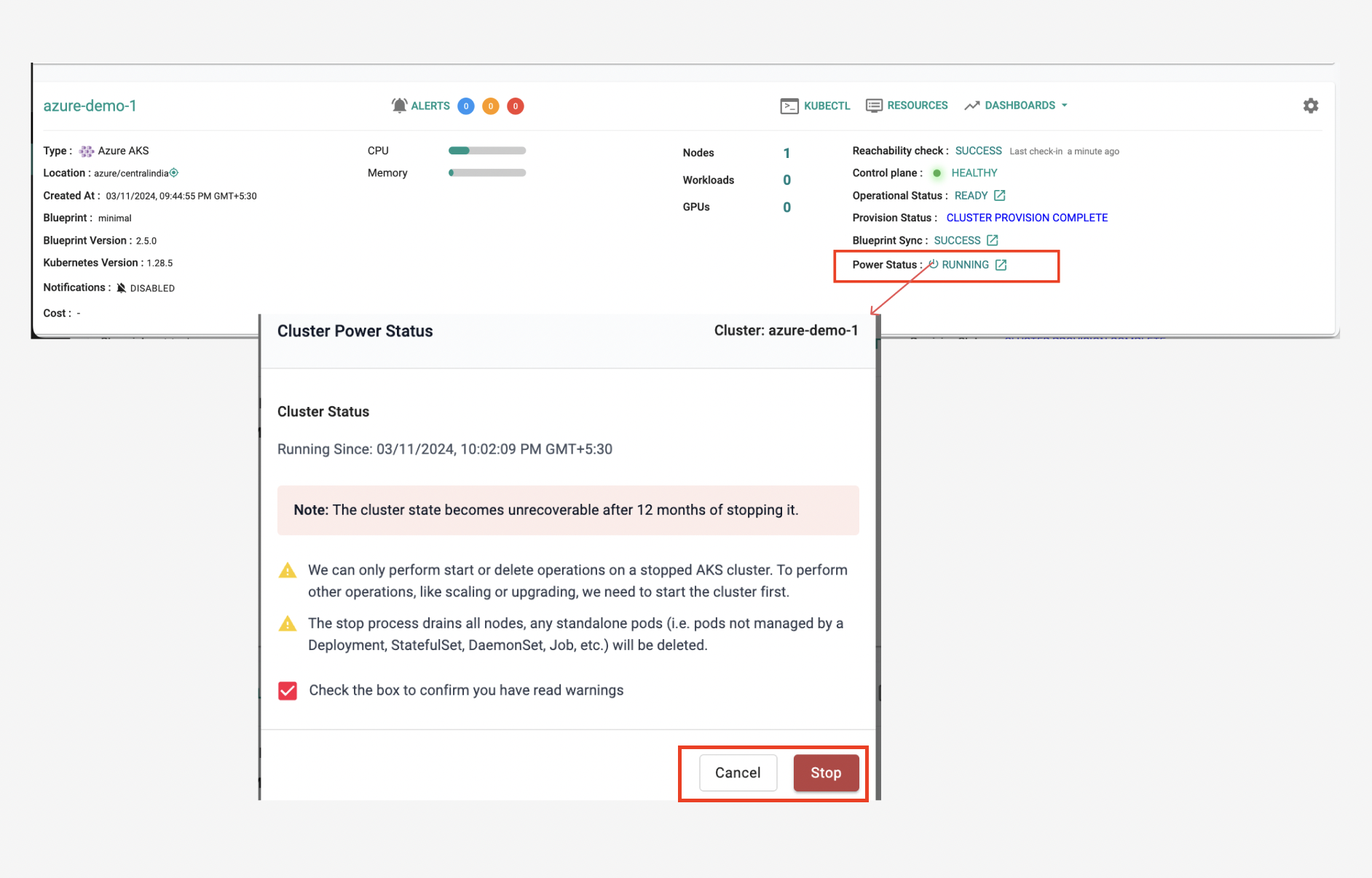

Stop and Start AKS Cluster

You can now start and stop your AKS clusters directly from the Rafay platform, without switching between consoles. Stopping idle clusters minimizes resource consumption and helps optimize your cloud spending, all through the familiar Rafay interface.

For more information, please refer to the AKS documentation

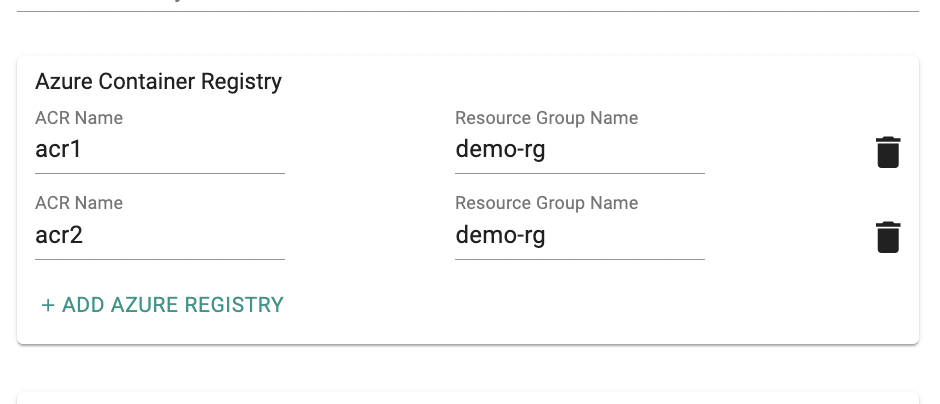

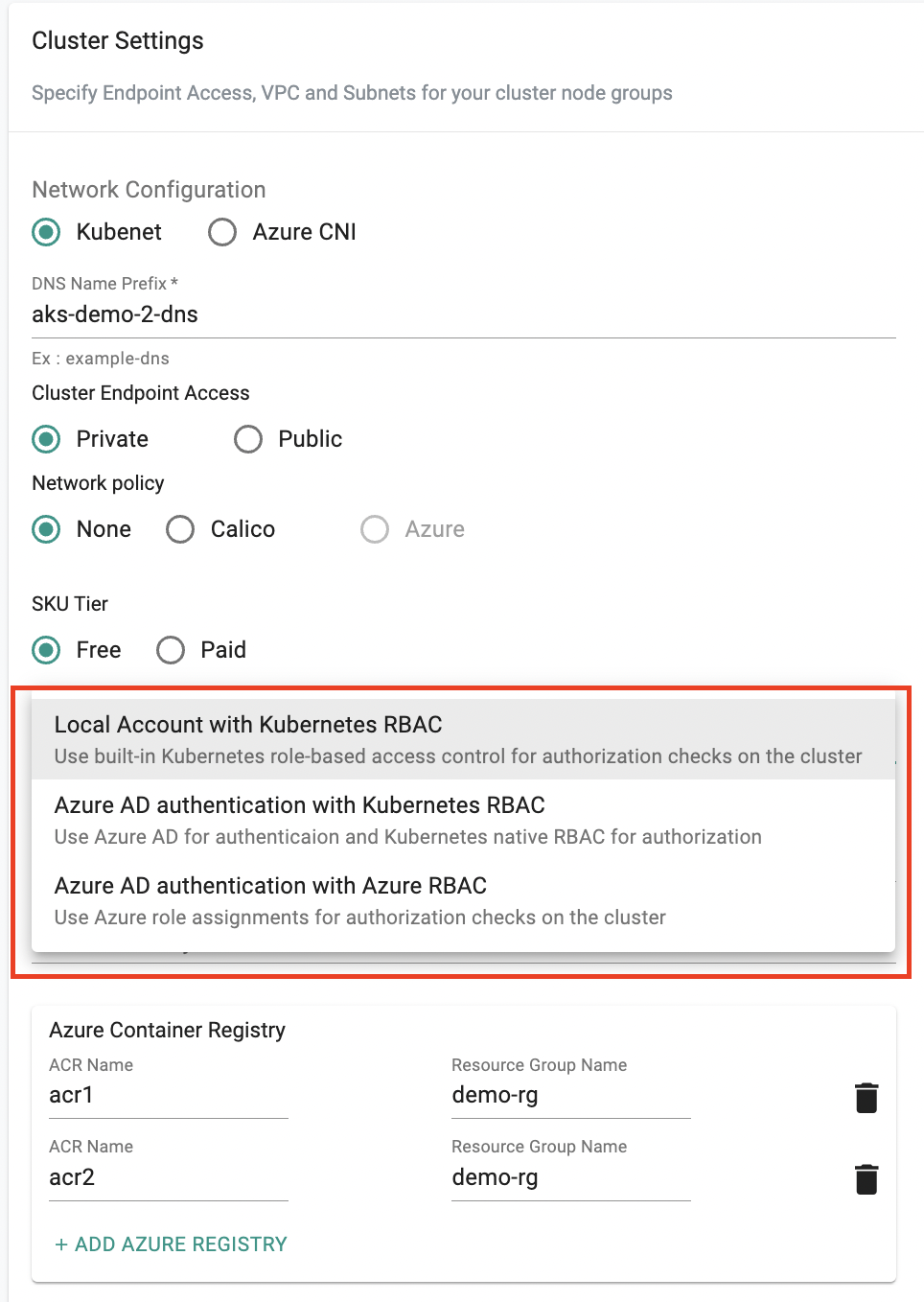

Multiple ACR Support

This release adds support for specifying multiple Azure Container Registry (ACR) profiles in the cluster configuration when creating an AKS cluster. This offers greater flexibility in configuring multiple container registries and simplifies customizing an AKS cluster to suit your specific requirements.

Configurable Azure AKS Authentication & Authorization

Instead of switching between consoles, you can now configure authentication and authorization when configuring the cluster using the Rafay Controller. For authentication, choose between local accounts for a streamlined setup and Azure AD for enhanced security through centralized identity management. For authorization, opt for Azure RBAC to manage access at the Azure resource level, or Kubernetes RBAC for precise control.

AKS UI Enhancements

- You can now create an AKS Node pool in a subnet separate from the cluster's subnet.

- We have added UI support in AKS to specify a User Assigned Identity and Kubelet Identity for a managed cluster identity.

Google GKE

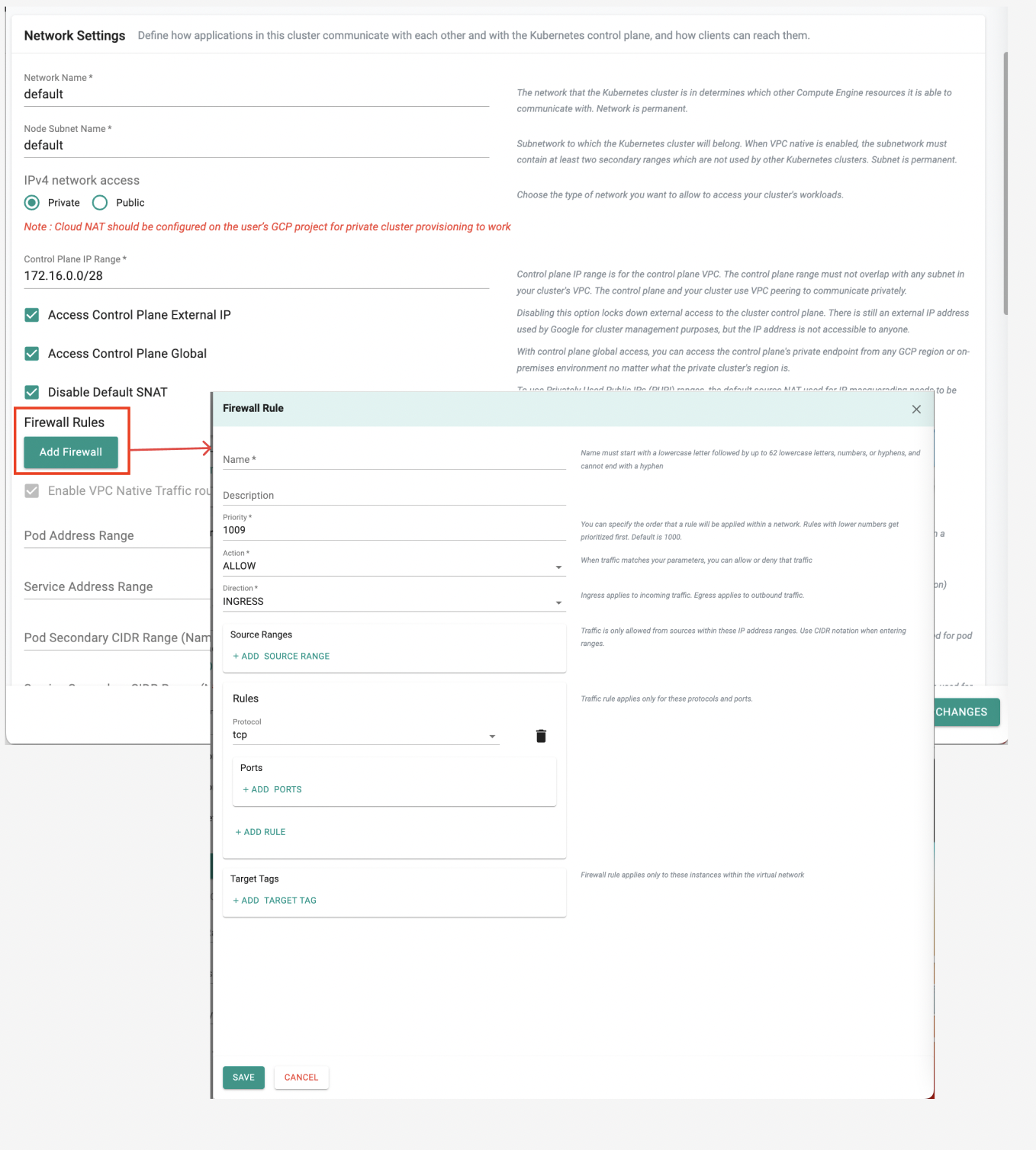

Private Cluster Firewall Configuration Customization

Use Case Scenario

Previously, users had to navigate to Google Console or use gcloud commands to manually open additional ports on the firewall for their GKE private cluster. This process often led to inconvenience and added complexity during cluster setup, requiring users to navigate between two different consoles.

With this release, we have added a new cluster configuration option directly in the controller. Users can now specify the additional ports they need opened as part of the firewall configuration while creating their cluster, streamlining the management of GKE private clusters.

For more information, please refer to the GKE documentation

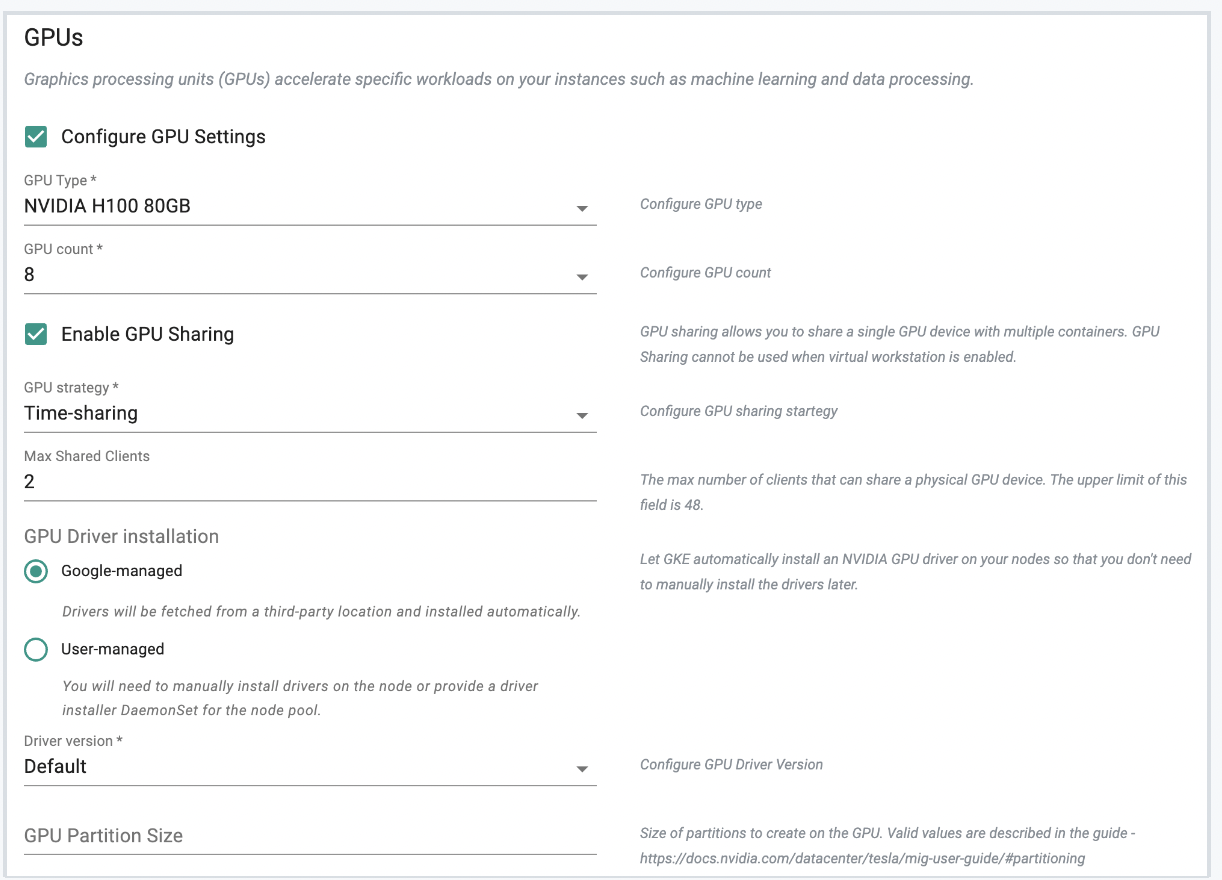

GPU Support for Node Pools

In this release, users can add GPU-based node pools. This enhancement enables users to incorporate GPU resources into their node pools on both day 0 and day 2. Support for GPU-based node pools is available across the UI, RCTL, Swagger API, and Terraform.

NodePool Configuration

For more information, please refer to the GKE documentation

Important

- To understand the limitations, please refer to GPU support limitations.

Upstream Kubernetes

Improved preflight checks

We have enhanced the existing preflight checks for provisioning or scaling Rafay managed upstream Kubernetes clusters to cover the following scenarios:

- Communication with NTP server: Does the node have connectivity to an NTP server?

- Time skew over 10 seconds: Is the clock on the node out of sync with NTP?

- DNS lookup verification: Is the node able to resolve names using the configured DNS server?

- Firewall validation: Does the node have a firewall that will block provisioning?

If any of these checks fail, the installer will abort and exit. Once the requirements are addressed by the administrator, they can attempt provisioning again.
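To make the checks above concrete, here is a minimal sketch of how a time-skew check and a DNS lookup check could be expressed. This is not the installer's actual implementation: the function names, the use of the system resolver, and the way results are aggregated are all assumptions for illustration. Only the 10-second threshold comes from the list above.

```python
import socket
from datetime import datetime, timedelta, timezone

# Threshold taken from the "Time skew over 10 seconds" check described above.
MAX_SKEW = timedelta(seconds=10)


def skew_exceeds_limit(node_time: datetime, reference_time: datetime,
                       limit: timedelta = MAX_SKEW) -> bool:
    """Return True when the node clock differs from the reference by more than limit."""
    return abs(node_time - reference_time) > limit


def can_resolve(hostname: str) -> bool:
    """Return True when the system resolver returns at least one address."""
    try:
        return len(socket.getaddrinfo(hostname, None)) > 0
    except OSError:  # socket.gaierror is a subclass of OSError
        return False


def failed_checks(results: dict[str, bool]) -> list[str]:
    """Return the names of checks that did not pass, in insertion order."""
    return [name for name, passed in results.items() if not passed]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    results = {
        # Hypothetical reference time; a real check would query an NTP server.
        "time-skew": not skew_exceeds_limit(now, now + timedelta(seconds=3)),
        "dns-lookup": can_resolve("localhost"),
    }
    failures = failed_checks(results)
    if failures:
        raise SystemExit(f"preflight failed: {', '.join(failures)}")
    print("preflight passed")
```

Collecting all failures before exiting, rather than stopping at the first one, lets an administrator address every unmet requirement before retrying.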

RCTL Users

As part of this release, we have enhanced RCTL. When creating an upstream cluster with `rctl apply`, the full summary is displayed at the end.

Important

Download the latest RCTL to access this functionality.

Policy Management

OPA Gatekeeper

Support for OPA Gatekeeper version v3.14.0 has been introduced with this release.

Blueprint

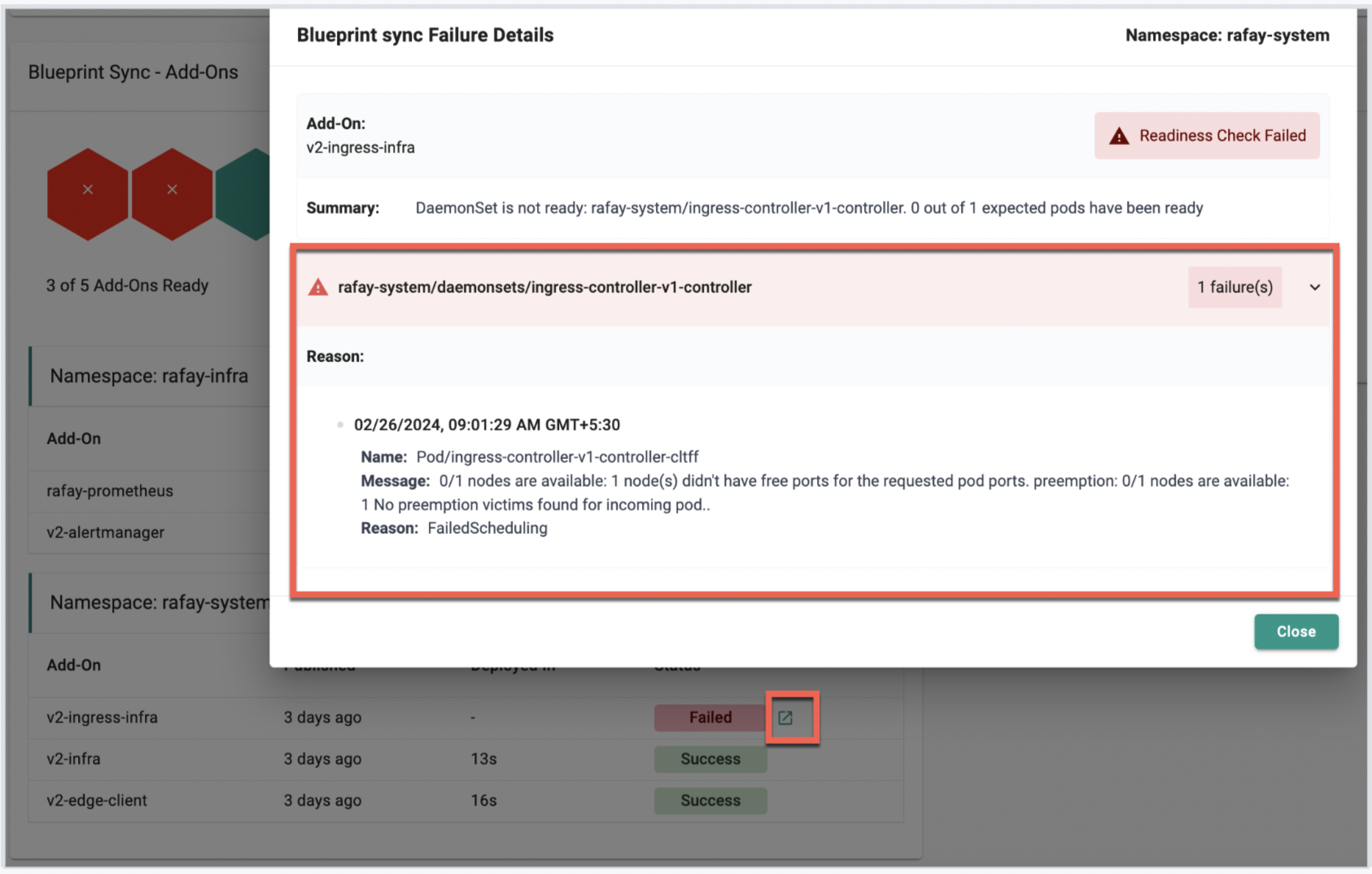

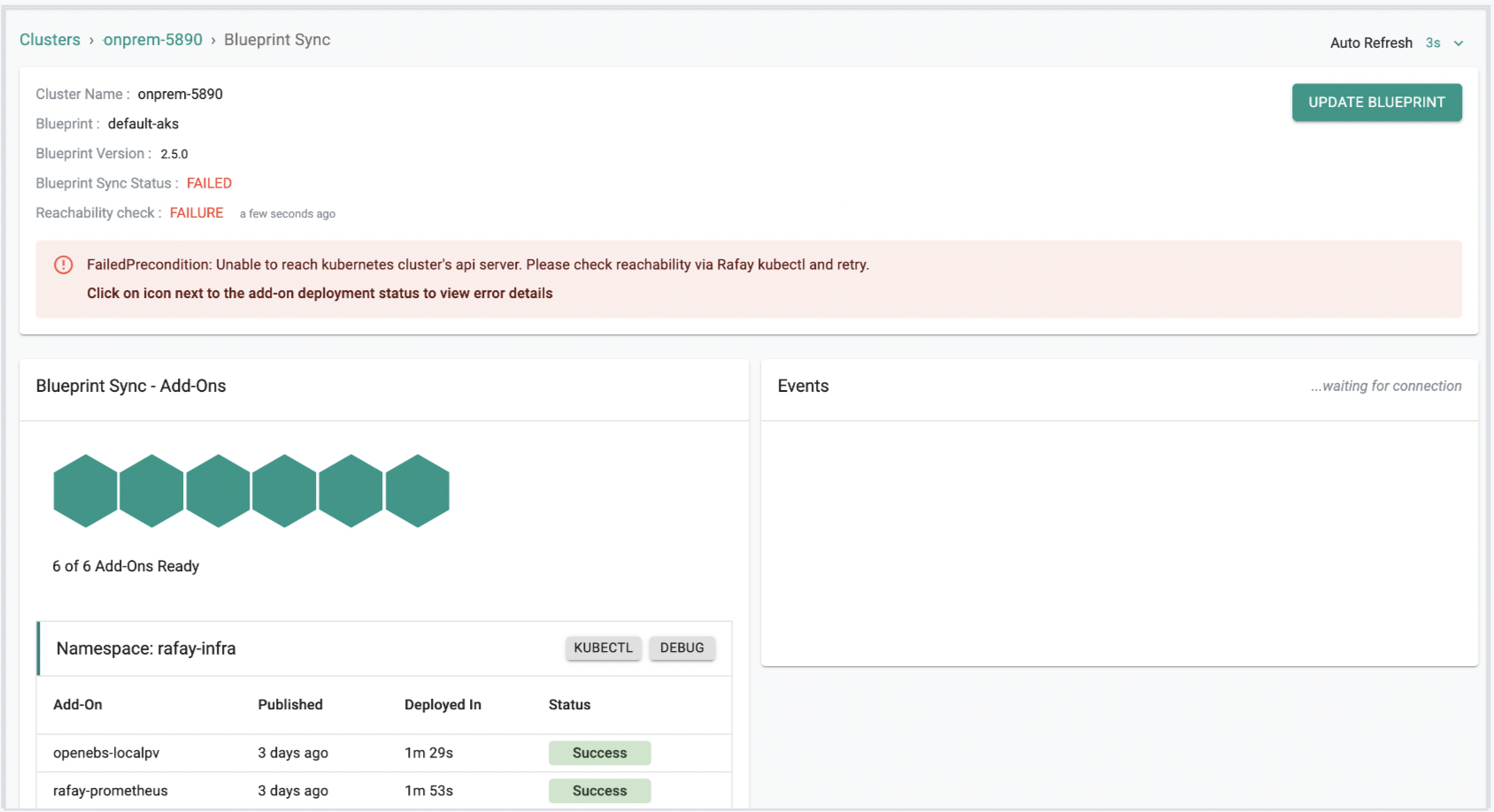

Error Handling and Reporting

In this release, we have added a number of improvements that make it easier to troubleshoot blueprint and add-on failures. These include correlating K8s events to expose more meaningful error messages from the cluster, which makes it easier to pinpoint the root cause of an add-on deployment failure.

Examples

Here are some scenarios where blueprint updates or add-on additions failed as part of the blueprint. This is how they will be shown with more details.

Addon Failure

Blueprint Failure

Namespace

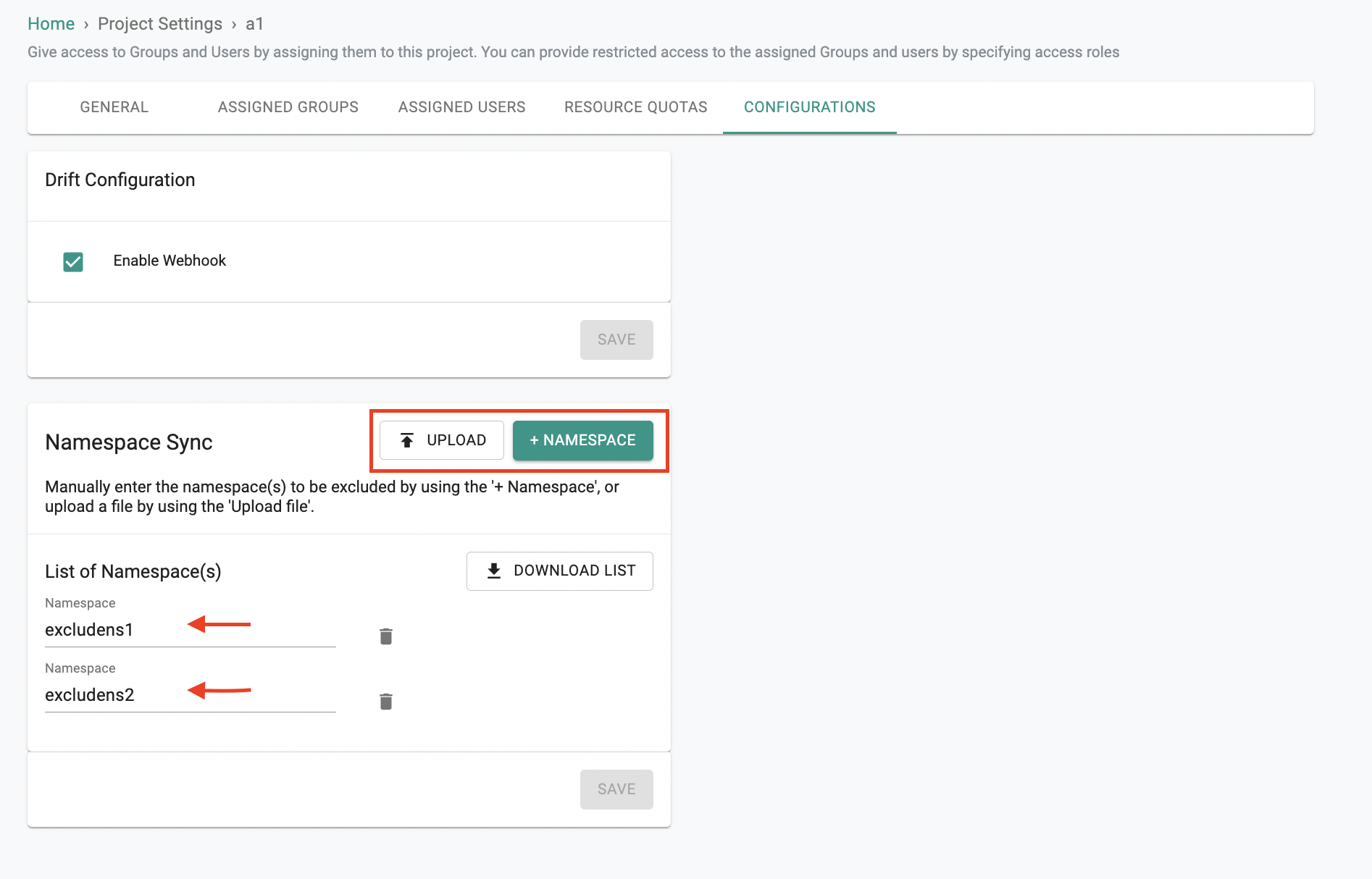

Exclusions for Namespace Sync

In previous releases, if namespace sync was enabled, all namespaces created outside the controller (out-of-band) were automatically synced back. Now, you can use the new "exclude namespaces" feature at the project level to define specific namespaces that should not be synced. This gives you granular control over which namespaces are synchronized.

Important

If a namespace is removed from the exclusion list and namespace sync is enabled, the namespace will be synchronized to the controller in the next reconciliation loop.

Namespaces that are already synchronized remain unchanged. The exclusion list only affects namespaces that have not yet been synced.
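The rules above can be summarized in a small sketch. This models only the behavior described in this section and is not the controller's actual reconciliation code; the function name `reconcile` and the set-based representation are assumptions for illustration, and namespace deletion is not modeled.

```python
def reconcile(cluster_namespaces: set[str], synced: set[str],
              excluded: set[str]) -> set[str]:
    """Return the set of synced namespaces after one reconciliation loop.

    - Namespaces that are already synced stay synced, even if later excluded.
    - Out-of-band namespaces are synced unless they are on the exclusion list.
    """
    newly_synced = {
        ns for ns in cluster_namespaces
        if ns not in synced and ns not in excluded
    }
    return synced | newly_synced


if __name__ == "__main__":
    cluster = {"team-a", "team-b", "scratch"}  # hypothetical namespace names
    synced = {"team-a"}

    # "scratch" is excluded, so only "team-b" is picked up.
    synced = reconcile(cluster, synced, excluded={"scratch", "team-a"})
    print(sorted(synced))

    # Once "scratch" is removed from the exclusion list, the next loop syncs it.
    synced = reconcile(cluster, synced, excluded={"team-a"})
    print(sorted(synced))
```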

v2.5 Self Hosted Bug Fixes

| Bug ID | Description |
|---|---|
| RC-28999 | EKS: Coredns issue with permission to endpointslices after upgrade EKS cluster to k8s version |
| RC-30965 | Blueprint update failing with network policy enabled as cilium pods are stuck in pending state |
| RC-29363 | EKS: Node Instance Role ARN is not being detected when converting to the managed cluster |
| RC-33393 | Cluster Template: Provisioning of EKS cluster using cluster template failing with error 'unknown field "instanceRolePermissionsBoundary"' |
| RC-29353 | Error while doing rctl apply to the v3 spec of AKS convert to managed cluster |
| RC-32310 | EKS: Not able to update an existing CloudWatch log group retention |
| RC-32263 | UI: Backup Policy must mandate location 'Control Plane Backup Location' and also 'Volume Backup Location' when selected so |
| RC-32686 | Get Add-on version API using master creds rather than target account |
| RC-31491 | MKS: Cluster nodes fail to provision at times due to silent connection drops |