Multi-tenancy: Best practices for shared Kubernetes clusters

Platform teams have to answer a few key questions very early in their Kubernetes (K8s) journey:

- How many clusters should I have? What is the right number for my organization?

- Should I set up dedicated or shared clusters for my application teams?

- What are the governance controls that need to be in place?

The model that customers are increasingly adopting is to standardize on shared clusters as the default and create a dedicated cluster only when certain considerations are met.

graph LR

A[Request for compute from application teams] --> B[Evaluate against list of considerations] --> C[Dedicated or shared cluster];

A few example scenarios in which platform teams often end up setting up dedicated clusters are:

- Application has low latency requirements (target SLA/SLO is significantly different from others)

- Application has specific requirements that are unique to it (e.g. GPU worker nodes, CNI plugin)

- Environment type: 'Prod' gets a dedicated cluster, while 'Dev' and 'Test' environments use shared clusters
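The evaluation step in the flow above can be sketched as a small decision function. This is only an illustration of the three example scenarios listed here. The `ComputeRequest` type, its field names, and `choose_cluster_model` are hypothetical and not part of any Rafay API. A real evaluation would likely weigh more considerations than these.

```python
from dataclasses import dataclass


@dataclass
class ComputeRequest:
    """Hypothetical description of an application team's request for compute."""
    app_name: str
    environment: str                    # e.g. "prod", "dev", "test"
    needs_distinct_slo: bool = False    # latency/SLO target differs markedly from other tenants
    needs_special_infra: bool = False   # e.g. GPU worker nodes or a specific CNI plugin


def choose_cluster_model(req: ComputeRequest) -> str:
    """Return "dedicated" if any listed consideration applies, else the default "shared"."""
    if req.needs_distinct_slo:
        return "dedicated"
    if req.needs_special_infra:
        return "dedicated"
    if req.environment.lower() == "prod":
        return "dedicated"
    return "shared"
```

Keeping "shared" as the fall-through result mirrors the model described above: shared is the default, and a dedicated cluster is the exception that has to be justified.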

With shared clusters (the most cost-efficient and therefore the default model in most customer environments), platform teams have to solve specific challenges around security and operational efficiency.

How does Rafay help?

Rafay’s Kubernetes Operations Platform includes out-of-the-box tooling that platform teams can use at scale to address the challenges of shared clusters.

Examples include:

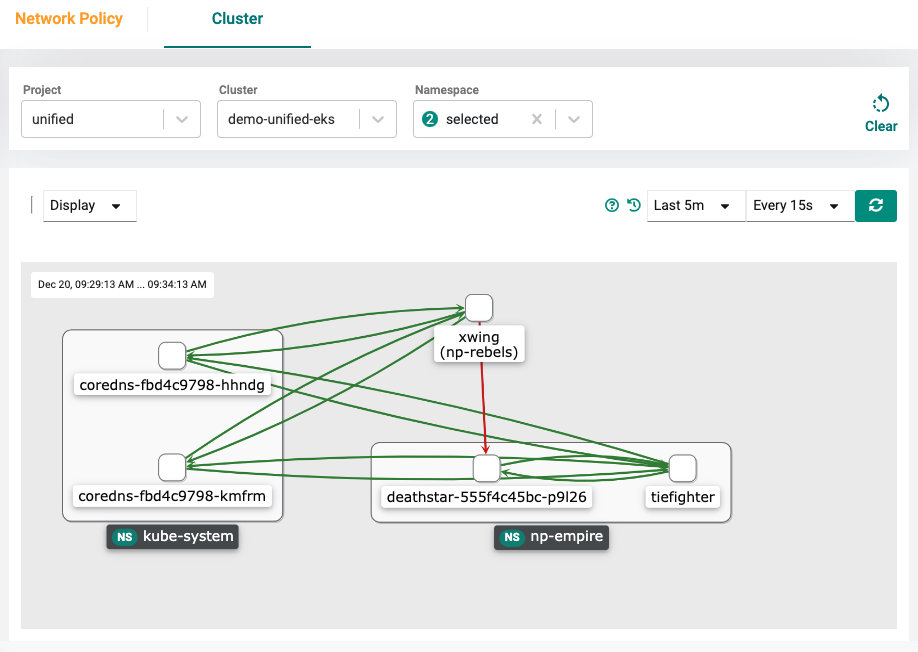

Network Policy Manager

By default, Kubernetes does not isolate namespaces from one another at the network level: pods in one namespace can reach pods in any other. Rafay's Kubernetes Operations Platform provides an easy way to configure and enforce Network Policies for “hard” namespace isolation.

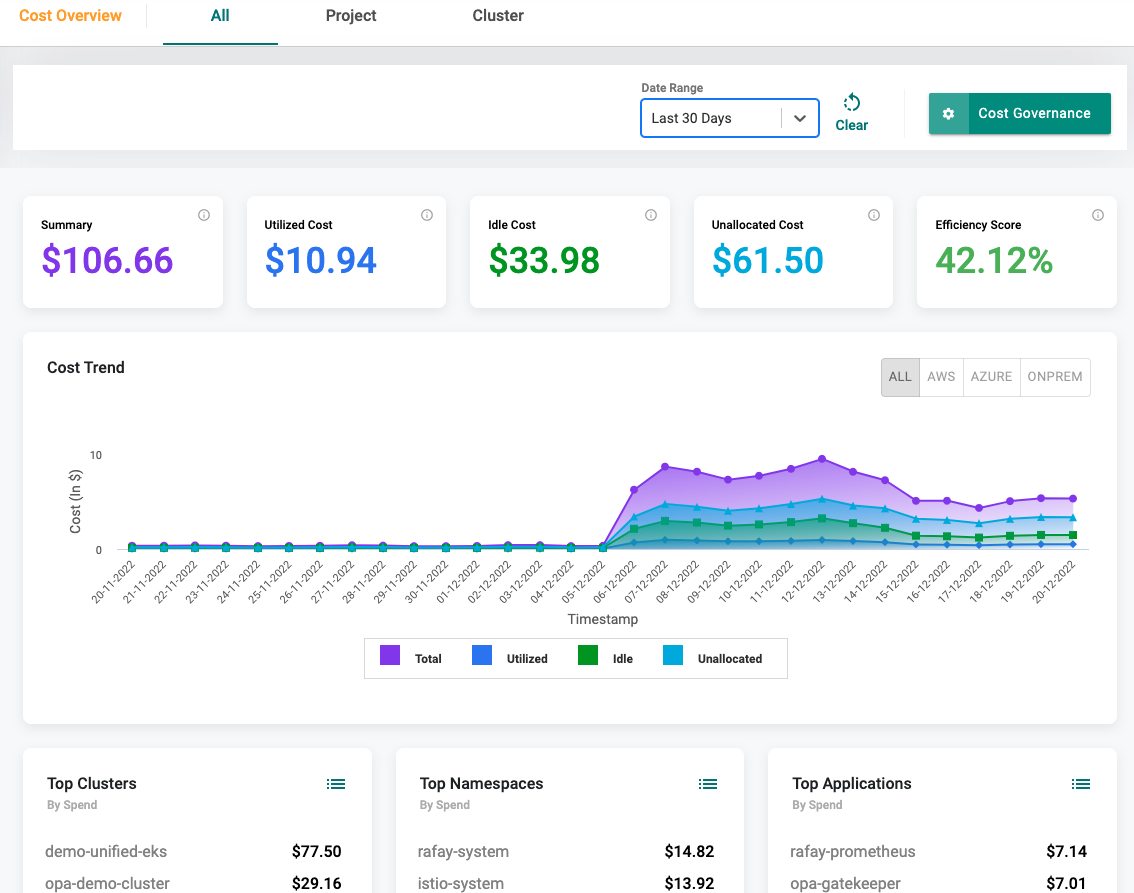
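To make namespace isolation concrete, here is a minimal sketch of the underlying upstream Kubernetes object: a `NetworkPolicy` that admits ingress only from pods in the same namespace. It does not show how Rafay generates or manages policies. The function name, policy name, and the `team-a` namespace are illustrative assumptions. Enforcement also assumes the cluster's CNI plugin supports NetworkPolicy.

```python
import json


def namespace_isolation_policy(namespace: str) -> dict:
    """Build a NetworkPolicy that admits ingress only from pods in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace-only", "namespace": namespace},
        "spec": {
            "podSelector": {},           # empty selector: applies to every pod in the namespace
            "policyTypes": ["Ingress"],
            "ingress": [
                # A bare podSelector peer matches pods in the policy's own namespace only.
                {"from": [{"podSelector": {}}]}
            ],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests, so the output can be piped to `kubectl apply -f -`.
    print(json.dumps(namespace_isolation_policy("team-a"), indent=2))
```

Once any policy selects a pod for ingress, traffic that no rule allows is dropped, so this single object is enough to block cross-namespace ingress for the namespace it is applied to.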

Cost Management

Granular visibility into resource utilization and cost metrics by namespace, label, and workload helps platform teams enable showback/chargeback models or drive cost optimization exercises.

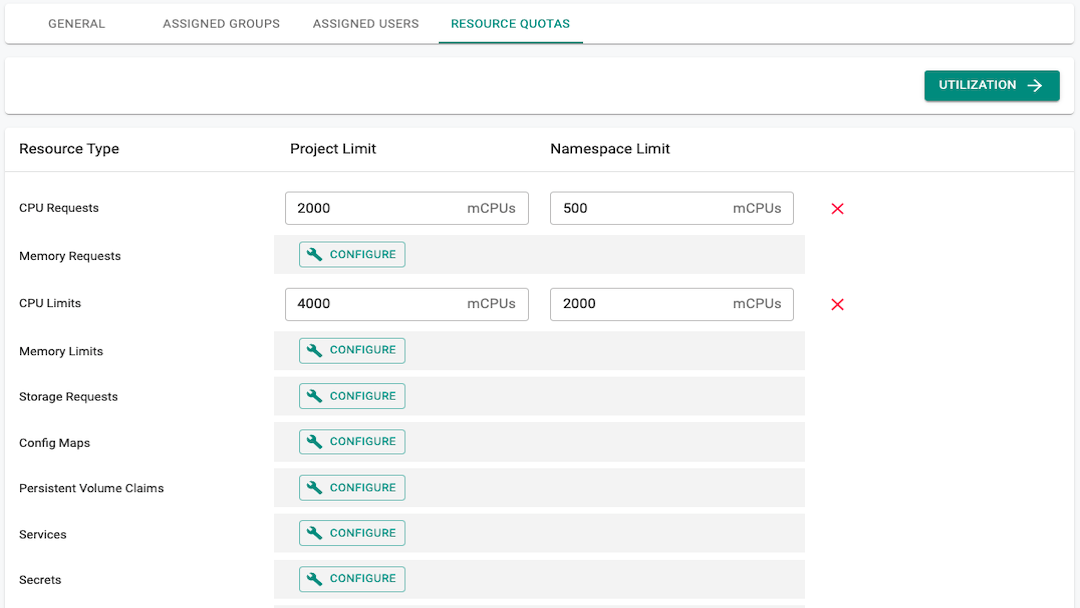
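The arithmetic behind a per-namespace showback report can be sketched in a few lines. This is an assumption-laden illustration, not Rafay's cost model: the record shape `(namespace, cpu_core_hours, memory_gib_hours)`, the function name, and the hourly rates are all hypothetical.

```python
from collections import defaultdict


def showback_by_namespace(usage, cpu_hour_rate, gib_hour_rate):
    """Sum cost per namespace from (namespace, cpu_core_hours, memory_gib_hours) records."""
    totals = defaultdict(float)
    for namespace, cpu_core_hours, memory_gib_hours in usage:
        totals[namespace] += (
            cpu_core_hours * cpu_hour_rate + memory_gib_hours * gib_hour_rate
        )
    return dict(totals)


if __name__ == "__main__":
    # Made-up usage records and rates, for illustration only.
    sample_usage = [("team-a", 10, 20), ("team-b", 5, 0), ("team-a", 2, 4)]
    print(showback_by_namespace(sample_usage, cpu_hour_rate=0.5, gib_hour_rate=0.25))
```

Grouping by label or workload instead of namespace would only change the key used for `totals`.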

Workspace

Platform teams can create projects/workspaces and assign resource quotas to application teams to enable a self-service model. This allows application teams to create/manage namespaces (without cluster-wide privileges) within assigned quotas and create pipelines to deploy applications.

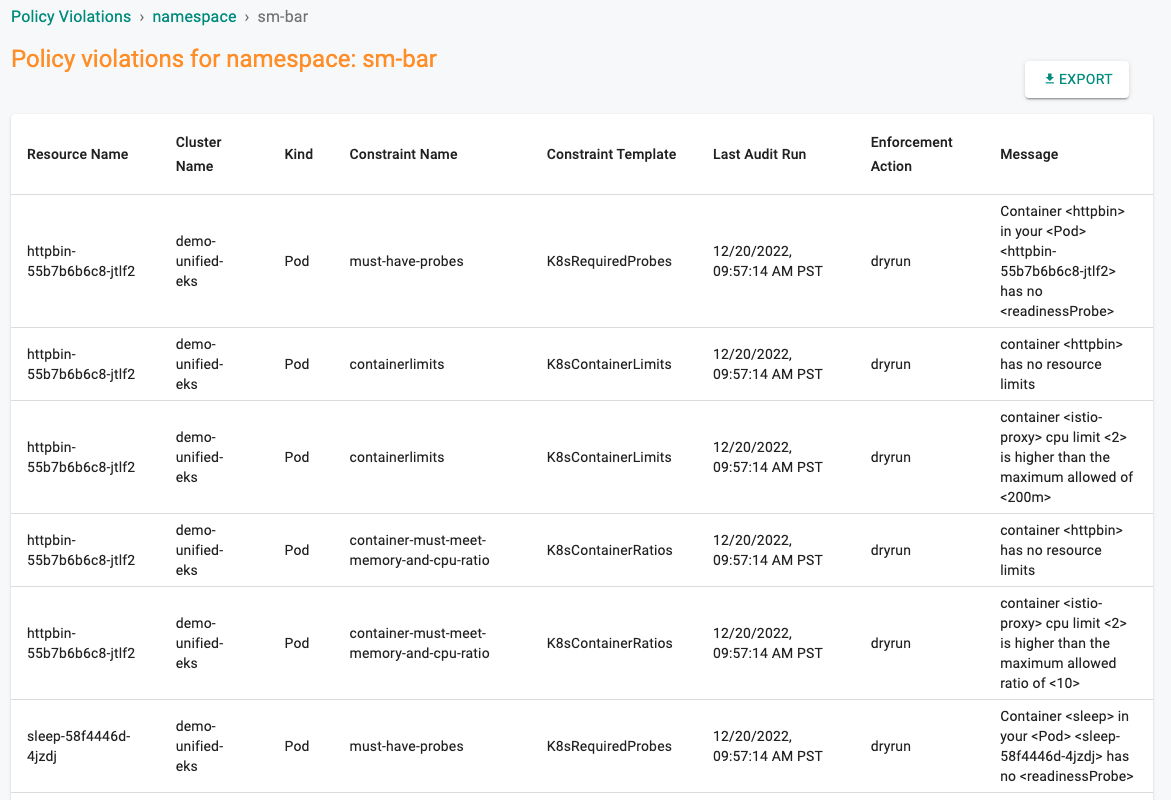
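For reference, the upstream Kubernetes building block for capping a tenant's consumption is the per-namespace `ResourceQuota`. The sketch below builds one as a plain manifest. It does not show how Rafay projects or workspaces assign quotas across namespaces. The function name, the `team-quota` object name, and the example values are assumptions.

```python
def resource_quota(namespace: str, cpu: str, memory: str, max_pods: int) -> dict:
    """Build a ResourceQuota manifest capping CPU/memory requests and pod count."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "team-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,         # e.g. "8" cores across all pods in the namespace
                "requests.memory": memory,   # e.g. "16Gi"
                "pods": str(max_pods),       # quantities are serialized as strings
            }
        },
    }


if __name__ == "__main__":
    import json
    print(json.dumps(resource_quota("team-a", "8", "16Gi", 20), indent=2))
```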

Policy Management

The platform provides tooling to configure and enforce namespace-specific OPA Gatekeeper policies, and gives application teams centralized visibility into policy violations for the resources that they own.

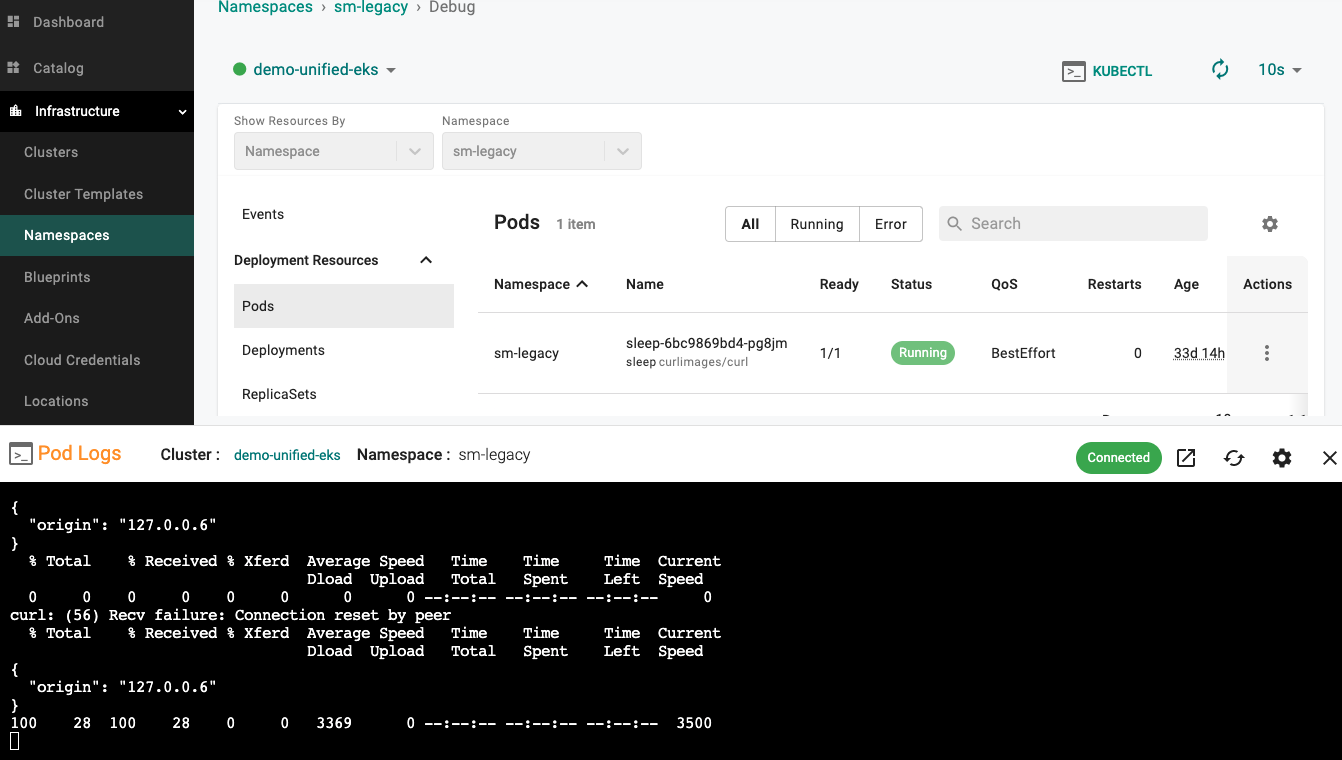
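A namespace-specific Gatekeeper policy is typically expressed as a constraint whose `spec.match.namespaces` field limits where it applies. The following is a rough sketch of such a constraint. It assumes a `K8sRequiredLabels` ConstraintTemplate is already installed in the cluster and that its parameters take a plain list of label names. Both depend on the template in use, and the helper name is hypothetical.

```python
def required_labels_constraint(name: str, namespaces, labels) -> dict:
    """Build a Gatekeeper constraint requiring labels on Deployments in given namespaces."""
    return {
        "apiVersion": "constraints.gatekeeper.sh/v1beta1",
        "kind": "K8sRequiredLabels",   # assumed: matches an installed ConstraintTemplate
        "metadata": {"name": name},
        "spec": {
            "match": {
                "kinds": [{"apiGroups": ["apps"], "kinds": ["Deployment"]}],
                "namespaces": list(namespaces),   # scope the policy to these namespaces
            },
            # Parameter schema is defined by the ConstraintTemplate; this shape is an assumption.
            "parameters": {"labels": list(labels)},
        },
    }


if __name__ == "__main__":
    import json
    print(json.dumps(required_labels_constraint("team-a-owner-label", ["team-a"], ["owner"]), indent=2))
```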

Zero Trust Kubectl Access

Platform teams can enable application teams to debug and run kubectl commands securely against only the resources that they own.
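The source does not describe how this access is implemented, so the sketch below shows only the upstream Kubernetes RBAC idea of scoping debug access to a single namespace with a `Role` and a `RoleBinding`. The function name, the object names, and the `team-a-devs` group are hypothetical.

```python
RBAC_GROUP = "rbac.authorization.k8s.io"


def namespaced_debug_access(namespace: str, group: str):
    """Build a Role and RoleBinding granting a group debug access in one namespace only."""
    role = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "app-debugger", "namespace": namespace},
        "rules": [
            # Read pods and their logs.
            {"apiGroups": [""], "resources": ["pods", "pods/log"],
             "verbs": ["get", "list", "watch"]},
            # Open exec sessions into pods.
            {"apiGroups": [""], "resources": ["pods/exec"], "verbs": ["create", "get"]},
        ],
    }
    binding = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "app-debugger-binding", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": "app-debugger", "apiGroup": RBAC_GROUP},
    }
    return role, binding


if __name__ == "__main__":
    import json
    for manifest in namespaced_debug_access("team-a", "team-a-devs"):
        print(json.dumps(manifest, indent=2))
```

Because both objects are namespaced, members of the group get no access outside `team-a`, which is the property that matters on a shared cluster.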

Learn More?

Sign up here for a free trial and try it out yourself. Get Started includes a number of hands-on exercises that will help you get familiar with the capabilities of Rafay's Kubernetes Operations Platform.