Part 3: Blueprint

What Will You Do

In this part of the self-paced exercise, you will use declarative specifications to create a custom cluster blueprint that includes Nvidia's GPU Operator.

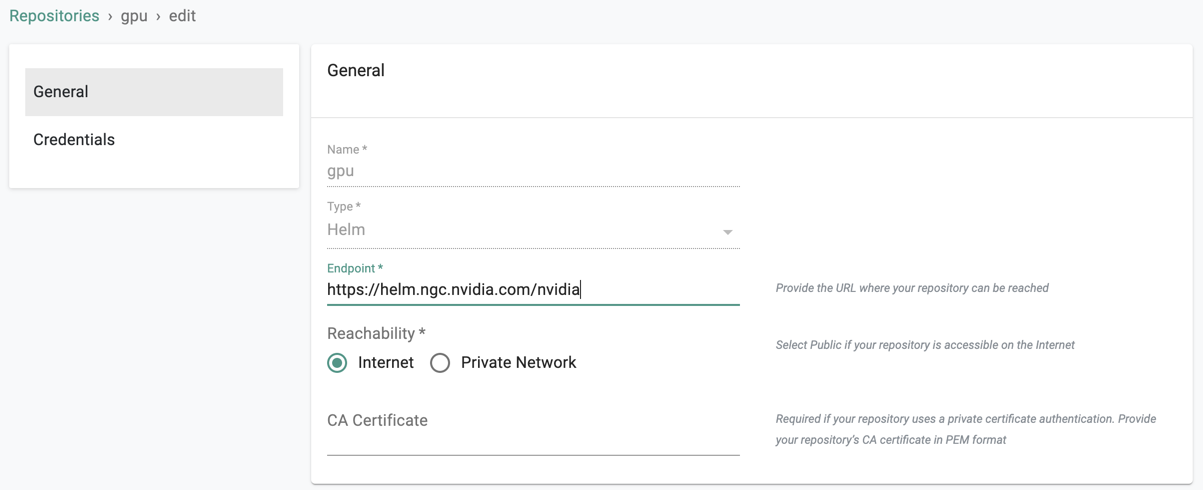

Step 1: GPU Operator Repository

Nvidia distributes its GPU Operator software through its official Helm repository. In this step, you will create a repository in your project so that the controller can retrieve the Helm chart automatically.

- Open Terminal (on macOS/Linux) or Command Prompt (Windows) and navigate to the folder where you cloned your fork of the Git repository

- Navigate to the folder "/getstarted/gpuaks/addon"

The "repository.yaml" file contains the declarative specification for the repository. In this case, the repository is of type "HelmRepository" and its "endpoint" points to Nvidia's official Helm repository.

apiVersion: config.rafay.dev/v2
kind: Repository
metadata:
  name: gpu
spec:
  repositoryType: HelmRepository
  endpoint: https://helm.ngc.nvidia.com/nvidia
  credentialType: CredentialTypeNotSet

Type the command below

rctl create repository -f repository.yaml
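If you want to script this step and the later rctl steps, a small wrapper can turn a failed command into an exception that carries rctl's error text. The sketch below is a hypothetical helper (`run_rctl` is not part of rctl); it assumes `rctl` is installed, on your PATH, and already configured for your Org and project, and it only inspects the exit code, not the content of rctl's output.

```python
import subprocess
from typing import Callable, Sequence


def run_rctl(args: Sequence[str],
             runner: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> str:
    """Run `rctl <args>` and return its stdout; raise RuntimeError on a non-zero exit.

    `runner` defaults to subprocess.run; it is a parameter only so the helper
    can be exercised without a real rctl binary.
    """
    cmd = ["rctl", *args]
    result = runner(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        # Surface rctl's own error text rather than a bare exit code.
        raise RuntimeError(
            f"{' '.join(cmd)} failed with exit code {result.returncode}: "
            f"{result.stderr.strip()}"
        )
    return result.stdout
```

For example, `run_rctl(["create", "repository", "-f", "repository.yaml"])` runs the command above from the current folder.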

If you did not encounter any errors, you can optionally verify on the controller that everything was created correctly.

- Navigate to your Org and Project

- Select Integrations -> Repositories and click on gpu

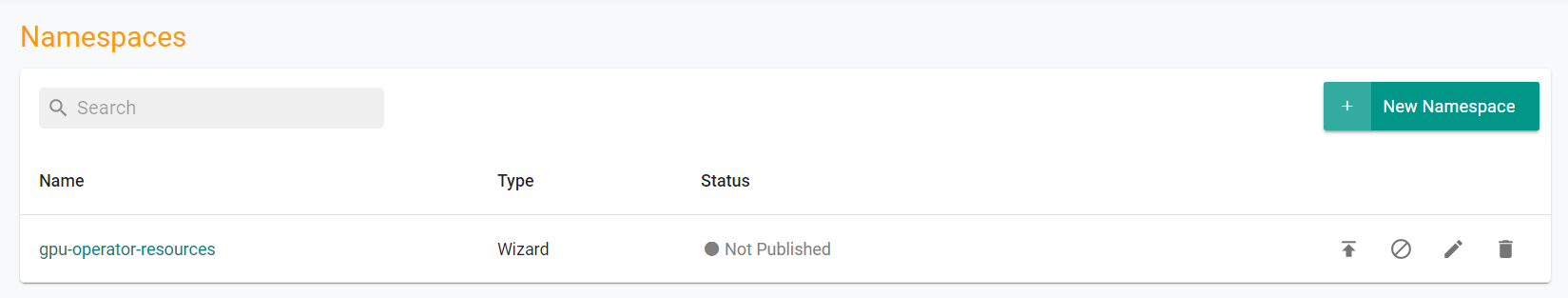

Step 2: Create Namespace

In this step, you will create a namespace for the Nvidia GPU Operator. The "namespace.yaml" file contains the declarative specification.

If you used a different name for your cluster, update the following item in the file to match:

- value: demo-gpu-aks

kind: ManagedNamespace
apiVersion: config.rafay.dev/v2
metadata:
  name: gpu-operator-resources
  description: namespace for gpu-operator
  labels:
  annotations:
spec:
  type: RafayWizard
  resourceQuota:
  placement:
    placementType: ClusterSpecific
    clusterLabels:
    - key: rafay.dev/clusterName
      value: demo-gpu-aks
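Because the cluster name is the only value in namespace.yaml tied to this exercise's naming, you can regenerate the file for another cluster instead of editing it by hand. This is a minimal sketch using Python's `string.Template`; `render_namespace_spec` and `write_namespace_spec` are hypothetical helper names, and the template simply mirrors the specification above with the cluster name as a placeholder.

```python
from string import Template

# Mirrors namespace.yaml from this step; only the cluster name is parameterized.
NAMESPACE_TEMPLATE = Template("""\
kind: ManagedNamespace
apiVersion: config.rafay.dev/v2
metadata:
  name: gpu-operator-resources
  description: namespace for gpu-operator
  labels:
  annotations:
spec:
  type: RafayWizard
  resourceQuota:
  placement:
    placementType: ClusterSpecific
    clusterLabels:
    - key: rafay.dev/clusterName
      value: $cluster_name
""")


def render_namespace_spec(cluster_name: str) -> str:
    """Return namespace.yaml text whose placement targets `cluster_name`."""
    if not cluster_name:
        raise ValueError("cluster_name must be non-empty")
    return NAMESPACE_TEMPLATE.substitute(cluster_name=cluster_name)


def write_namespace_spec(cluster_name: str, path: str = "namespace.yaml") -> None:
    """Write the rendered spec to `path`, overwriting any existing file."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(render_namespace_spec(cluster_name))
```

Run `write_namespace_spec("<your-cluster-name>")` in the addon folder before the create command; note that it replaces the existing file.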

- Open Terminal (on macOS/Linux) or Command Prompt (Windows) and navigate to the folder where you cloned your fork of the Git repository

- Navigate to the folder "/getstarted/gpuaks/addon"

- Type the command below

rctl create namespace -f namespace.yaml

If you did not encounter any errors, you can optionally verify on the controller that everything was created correctly.

- In your project, select Infrastructure -> Namespaces

- You should see a namespace called gpu-operator-resources

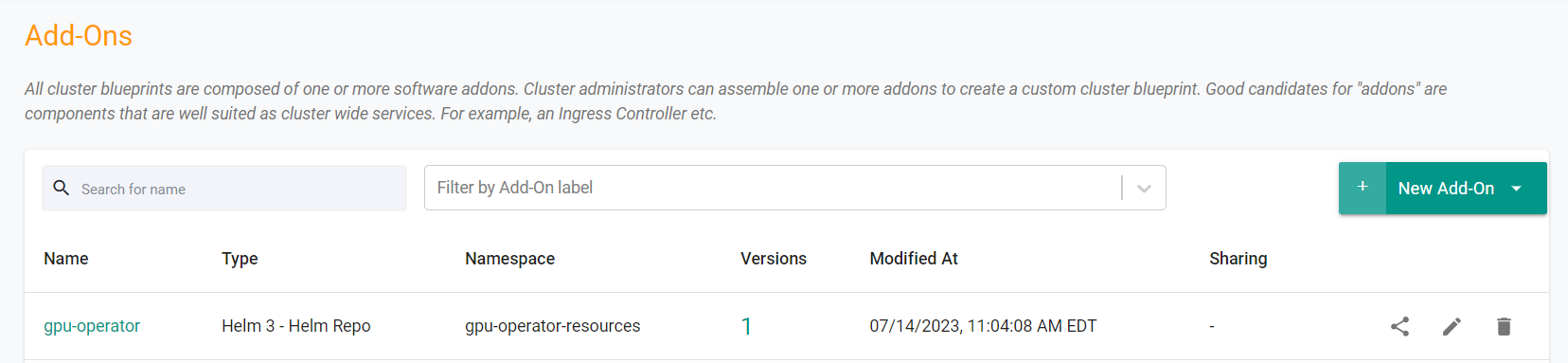

Step 3: Create Addon

In this step, you will create a custom addon for the Nvidia GPU Operator. The "addon.yaml" file contains the declarative specification. Note the following:

- The addon version is named "v1" because this is the first version

- The addon is named "gpu-operator"

- The addon will be deployed to the namespace "gpu-operator-resources"

- You will be using version "v23.3.1" of the Nvidia GPU Operator Helm chart

- You will be using a custom "values.yaml" file as an override

kind: AddonVersion
metadata:
  name: v1
  project: defaultproject
spec:
  addon: gpu-operator
  namespace: gpu-operator-resources
  template:
    type: Helm3
    valuesFile: values.yaml
    repository_ref: gpu
    repo_artifact_meta:
      helm:
        tag: v23.3.1
        chartName: gpu-operator
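The addon spec refers by name to objects created in the previous two steps: `repository_ref` must match the repository's name (gpu) and `namespace` must match the managed namespace (gpu-operator-resources). A mismatch is easy to introduce when renaming things. The sketch below checks these references; it assumes the YAML files have already been loaded into Python dictionaries (for example with the third-party PyYAML package's `yaml.safe_load`), and `check_addon_refs` is a hypothetical helper.

```python
from typing import Any, Dict, List


def check_addon_refs(addon: Dict[str, Any],
                     repository: Dict[str, Any],
                     namespace: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the references line up."""
    problems: List[str] = []
    spec = addon.get("spec", {})
    template = spec.get("template", {})

    # repository_ref must name the Repository created in Step 1.
    repo_name = repository.get("metadata", {}).get("name")
    if template.get("repository_ref") != repo_name:
        problems.append(
            f"repository_ref {template.get('repository_ref')!r} != repository {repo_name!r}")

    # spec.namespace must name the ManagedNamespace created in Step 2.
    ns_name = namespace.get("metadata", {}).get("name")
    if spec.get("namespace") != ns_name:
        problems.append(
            f"namespace {spec.get('namespace')!r} != managed namespace {ns_name!r}")

    # The Helm chart is identified by chart name plus tag.
    helm = template.get("repo_artifact_meta", {}).get("helm", {})
    if not helm.get("chartName") or not helm.get("tag"):
        problems.append("helm chartName and tag must both be set")
    return problems
```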

Type the command below

rctl create addon version -f addon.yaml

If you did not encounter any errors, you can optionally verify on the controller that everything was created correctly.

- In your project, select Infrastructure -> Add-Ons

- You should see an addon called gpu-operator

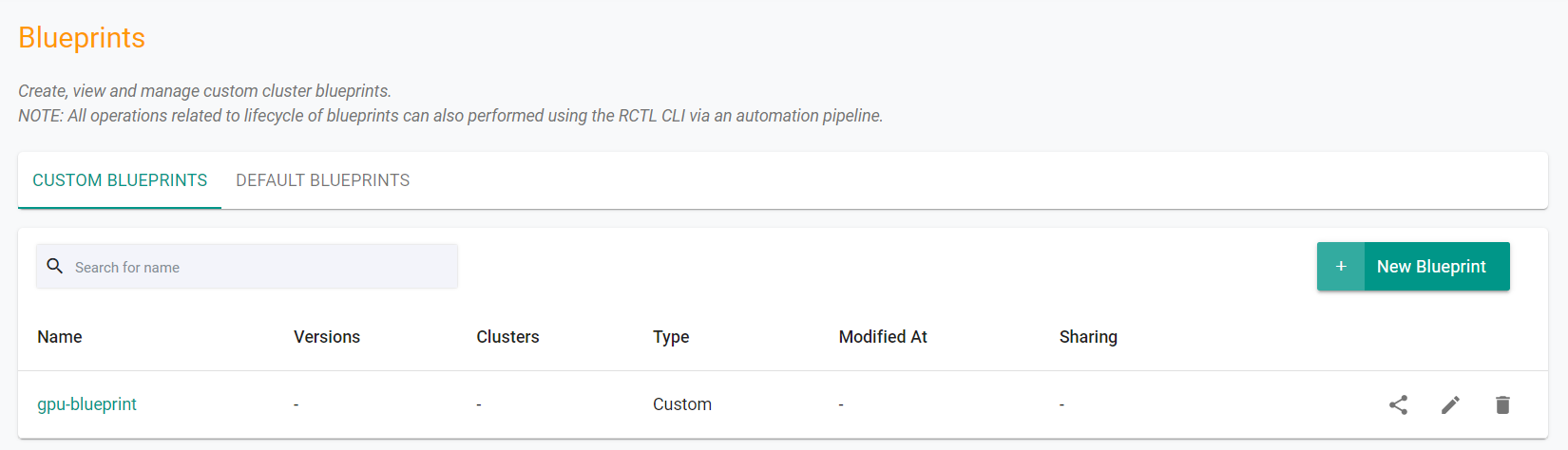

Step 4: Create Blueprint

In this step, you will create a custom cluster blueprint with the Nvidia GPU Operator and a number of other system addons. The "blueprint.yaml" file contains the declarative specification.

- Open Terminal (on macOS/Linux) or Command Prompt (Windows) and navigate to the folder where you cloned your fork of the Git repository

- Navigate to the folder "/getstarted/gpuaks/blueprint"

apiVersion: infra.k8smgmt.io/v3
kind: Blueprint
metadata:
  name: gpu-blueprint
  project: defaultproject
spec:
  base:
    name: default-aks
  customAddons:
  - name: gpu-operator
    version: v1
  defaultAddons:
    enableIngress: false
    enableLogging: true
    enableMonitoring: true
    enableVM: false
    monitoring:
      helmExporter:
        discovery: {}
        enabled: true
      kubeStateMetrics:
        discovery: {}
        enabled: true
      metricsServer:
        enabled: false
      nodeExporter:
        discovery: {}
        enabled: true
      prometheusAdapter:
        enabled: false
      resources: {}
  drift:
    enabled: true
  sharing:
    enabled: false
  version: v1
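Each entry under `customAddons` must name an addon and version that already exist in the project, here gpu-operator at v1 from Step 3. This minimal sketch, again assuming the spec has already been loaded into a dictionary, lists any unresolved references and reports which of the `enable*` default addons the blueprint turns on; both helper names are hypothetical.

```python
from typing import Any, Dict, Iterable, List, Tuple


def missing_custom_addons(blueprint: Dict[str, Any],
                          available: Iterable[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return (addon, version) pairs listed under spec.customAddons that are not in `available`."""
    known = set(available)
    wanted = [(a["name"], a["version"])
              for a in blueprint.get("spec", {}).get("customAddons", [])]
    return [pair for pair in wanted if pair not in known]


def enabled_default_addons(blueprint: Dict[str, Any]) -> List[str]:
    """Return the enable* flags under spec.defaultAddons that are set to true, sorted."""
    defaults = blueprint.get("spec", {}).get("defaultAddons", {})
    return sorted(k for k, v in defaults.items() if k.startswith("enable") and v is True)
```

For the specification above, `enabled_default_addons` would report `enableLogging` and `enableMonitoring`, since ingress and VM support are disabled.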

- Type the command below

rctl apply -f blueprint.yaml

If you did not encounter any errors, you can optionally verify on the controller that everything was created correctly.

- In your project, select Infrastructure -> Blueprint

- You should see a blueprint called gpu-blueprint

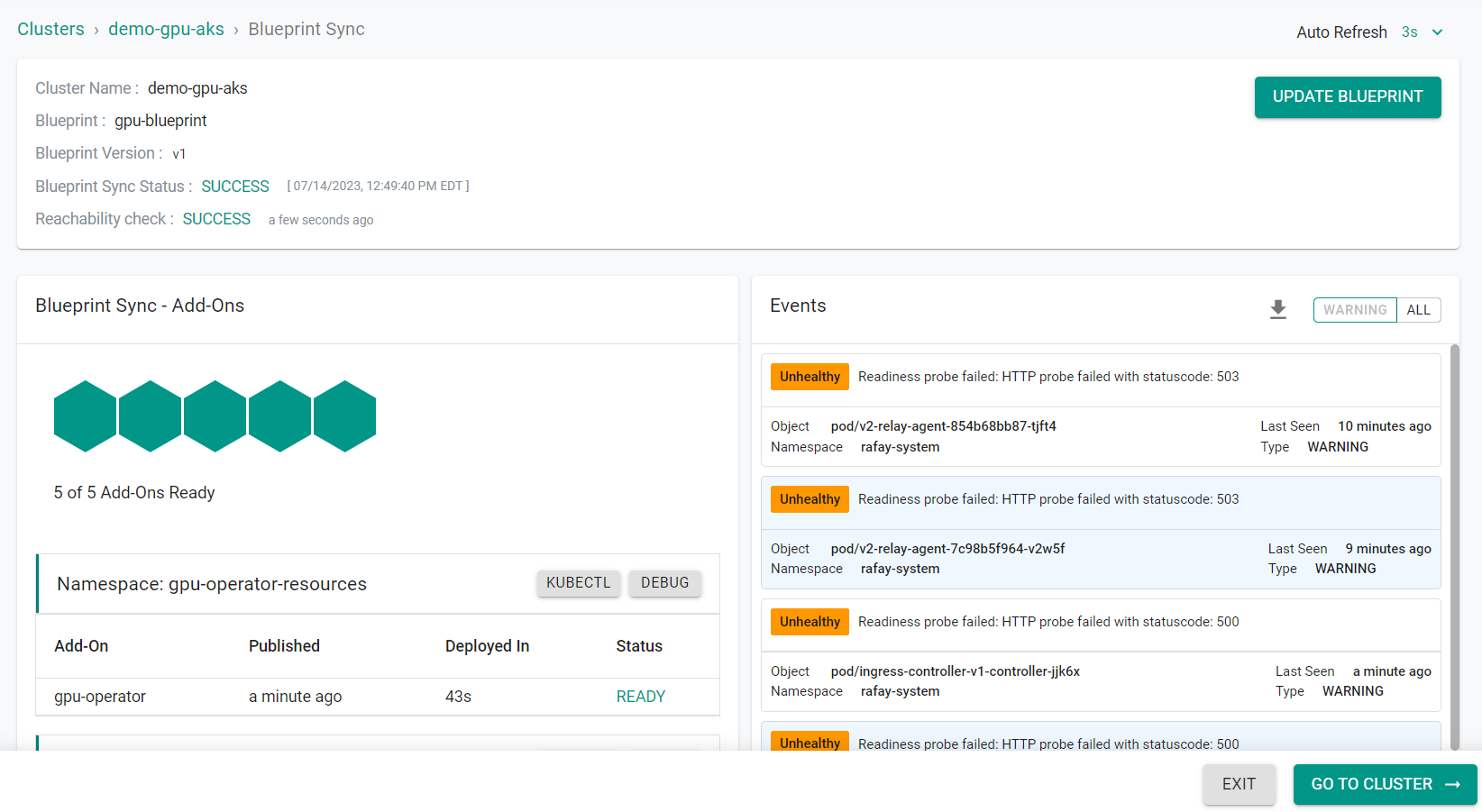

Next, we will update the cluster to use the newly created blueprint.

- Type the command below. If you used different names, replace the cluster name (demo-gpu-aks) and the blueprint name (gpu-blueprint) with the names of your resources

rctl update cluster demo-gpu-aks --blueprint gpu-blueprint --blueprint-version v1
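If you manage several clusters, building this command from parameters avoids editing it by hand for each one. The sketch below is a hypothetical helper that assembles the same `rctl update cluster` arguments shown above as a list (so no shell quoting is involved) and runs it with `check=True`, which makes `subprocess.run` raise `subprocess.CalledProcessError` on a non-zero exit. It assumes `rctl` is on your PATH and configured for your project.

```python
import subprocess
from typing import Callable, List


def update_cluster_blueprint_cmd(cluster: str, blueprint: str, version: str) -> List[str]:
    """Build the rctl command from this step as an argument list."""
    return ["rctl", "update", "cluster", cluster,
            "--blueprint", blueprint, "--blueprint-version", version]


def update_cluster_blueprint(cluster: str, blueprint: str, version: str,
                             runner: Callable[..., object] = subprocess.run) -> None:
    """Apply `blueprint` at `version` to `cluster`; `runner` is injectable for testing."""
    cmd = update_cluster_blueprint_cmd(cluster, blueprint, version)
    # With subprocess.run, check=True raises CalledProcessError if rctl fails.
    runner(cmd, check=True)
```

For example, `update_cluster_blueprint("demo-gpu-aks", "gpu-blueprint", "v1")` is equivalent to the command above.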

Step 5: Verify GPU Operator

Now, let us verify that the Nvidia GPU Operator's resources are operational on the AKS cluster.

- Click on the kubectl link and type the following command

kubectl get po -n gpu-operator-resources

You should see output similar to the following. Note that it can take about 6 minutes for all of the pods to reach the Running state (or, for the validator pods, the Completed state).

NAME                                                          READY   STATUS      RESTARTS   AGE
gpu-feature-discovery-qfdq5                                   1/1     Running     0          4m2s
gpu-operator-5dbf58b465-zcd6w                                 1/1     Running     0          4m16s
gpu-operator-node-feature-discovery-master-84ff8d974c-6mv5j   1/1     Running     0          4m16s
gpu-operator-node-feature-discovery-worker-952mw              1/1     Running     0          4m16s
nvidia-container-toolkit-daemonset-82l6b                      1/1     Running     0          4m2s
nvidia-cuda-validator-6n72x                                   0/1     Completed   0          3m3s
nvidia-dcgm-exporter-x6zkf                                    1/1     Running     0          4m2s
nvidia-device-plugin-daemonset-df88q                          1/1     Running     0          4m2s
nvidia-device-plugin-validator-kd6gh                          0/1     Completed   0          2m7s
nvidia-operator-validator-4t9fh                               1/1     Running     0          4m2s
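Rather than re-running the command until the output looks right, you can poll it and check each row. The sketch below treats a pod as settled when it is Running with all containers ready, or Completed (as the validator pods are in the sample above). It parses the default column layout of `kubectl get po` and assumes the header row is present; `unhealthy_pods` and `wait_for_pods` are hypothetical names, and the 600-second default timeout is an arbitrary margin over the roughly 6 minutes mentioned above.

```python
import time
from typing import Callable, Dict

# Long-running pods should be Running; the validator pods run once and end as Completed.
SETTLED_STATUSES = {"Running", "Completed"}


def unhealthy_pods(kubectl_output: str) -> Dict[str, str]:
    """Map pod name -> reason for each pod in `kubectl get po` output that has not settled."""
    lines = [ln for ln in kubectl_output.splitlines() if ln.strip()]
    if len(lines) < 2:
        # Only a header (or nothing at all): the operator's pods have not appeared yet.
        return {"<none>": "no pods listed"}
    problems: Dict[str, str] = {}
    for line in lines[1:]:  # lines[0] is the NAME READY STATUS RESTARTS AGE header
        name, ready, status = line.split()[:3]
        ready_now, ready_want = (int(x) for x in ready.split("/"))
        if status not in SETTLED_STATUSES:
            problems[name] = status
        elif status == "Running" and ready_now != ready_want:
            problems[name] = f"Running but only {ready} ready"
    return problems


def wait_for_pods(get_output: Callable[[], str],
                  timeout_s: float = 600, interval_s: float = 15) -> Dict[str, str]:
    """Poll until all pods have settled or the timeout expires; return any remaining problems."""
    deadline = time.monotonic() + timeout_s
    while True:
        problems = unhealthy_pods(get_output())
        if not problems or time.monotonic() >= deadline:
            return problems
        time.sleep(interval_s)
```

To poll a real cluster from your own machine, pass a function such as `lambda: subprocess.run(["kubectl", "get", "po", "-n", "gpu-operator-resources"], capture_output=True, text=True, check=True).stdout`. This assumes a locally installed kubectl with access to the cluster, which is separate from the browser-based kubectl link used above.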

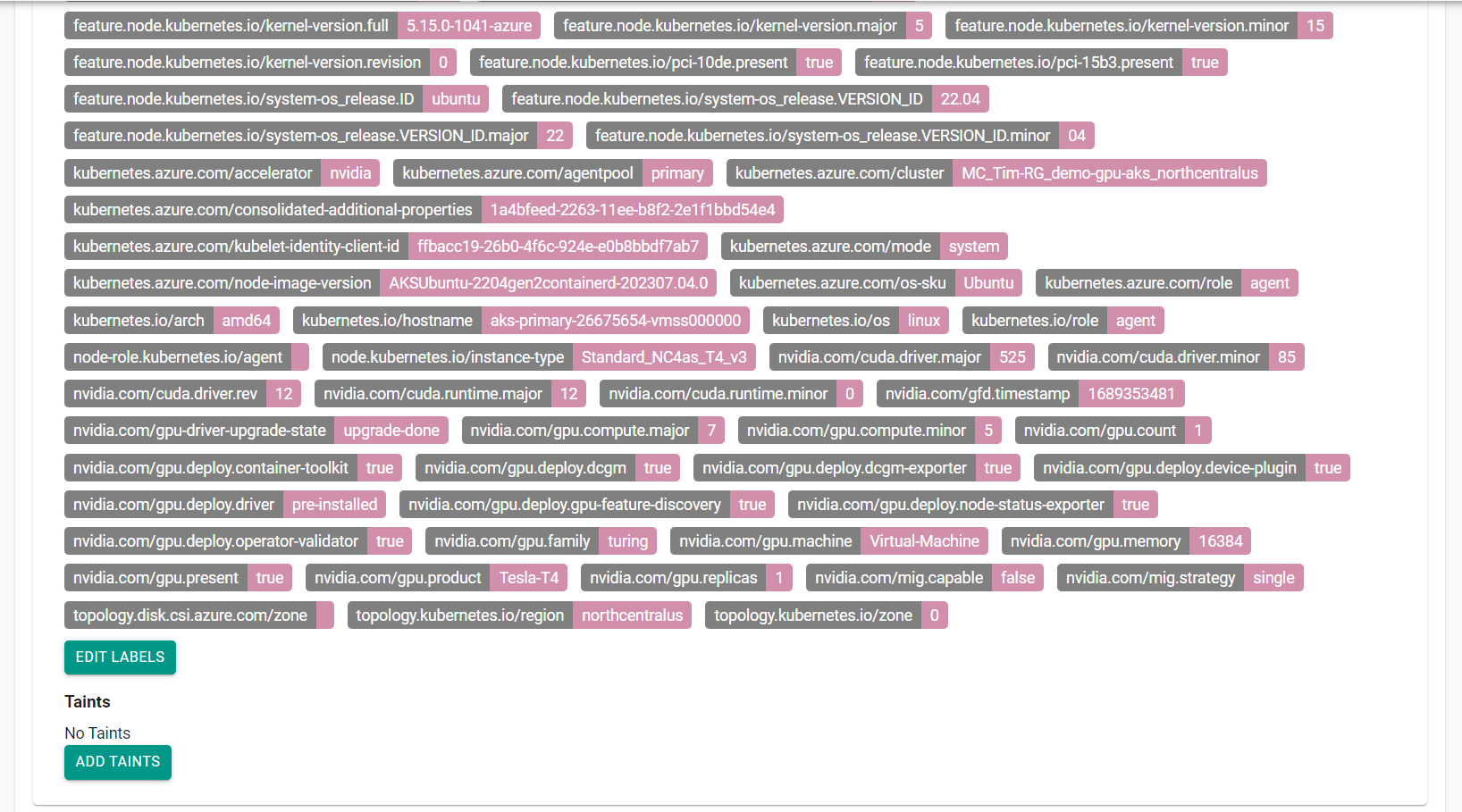

The GPU Operator automatically adds the required labels to the GPU-enabled worker nodes. To view these labels:

- Click on nodes and expand the node that belongs to the "gpu" node group
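You can also list these labels from the command line by filtering the output of `kubectl get nodes -o json`. This is a minimal sketch that keeps only labels under a given prefix; the default `nvidia.com/` prefix is an assumption based on the labels NVIDIA's GPU Operator and GPU Feature Discovery typically apply (such as `nvidia.com/gpu.present` and `nvidia.com/gpu.product`), so compare it with what the console shows for your node. `gpu_labels_by_node` is a hypothetical name.

```python
import json
from typing import Dict


def gpu_labels_by_node(nodes_json: str, prefix: str = "nvidia.com/") -> Dict[str, Dict[str, str]]:
    """Return {node name: {label: value}} for labels starting with `prefix`.

    `nodes_json` is the text printed by `kubectl get nodes -o json`.
    Nodes without any matching label are omitted.
    """
    doc = json.loads(nodes_json)
    result: Dict[str, Dict[str, str]] = {}
    for item in doc.get("items", []):
        meta = item.get("metadata", {})
        labels = {k: v for k, v in meta.get("labels", {}).items() if k.startswith(prefix)}
        if labels:
            result[meta.get("name", "?")] = labels
    return result
```

If the function returns an empty dictionary after all pods have settled, the GPU nodes have not been labeled yet, or the prefix does not match the labels on your nodes.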

Recap

At this point, you have created a cluster blueprint with the GPU Operator as one of its addons and applied it to your cluster.

You are now ready to move on to the next step, where you will deploy a "GPU Workload" and review the integrated "GPU Dashboards".

You can also reuse this cluster blueprint for as many clusters as you need in this project, and share it with other projects.