2022

v1.21¶

09 Dec, 2022

Important

Customers must update their cluster blueprints to the latest base blueprint version (v1.21) to use many of the new features described below. Customers must also upgrade to the latest version of the RCTL CLI to use the latest functionality.

Amazon EKS¶

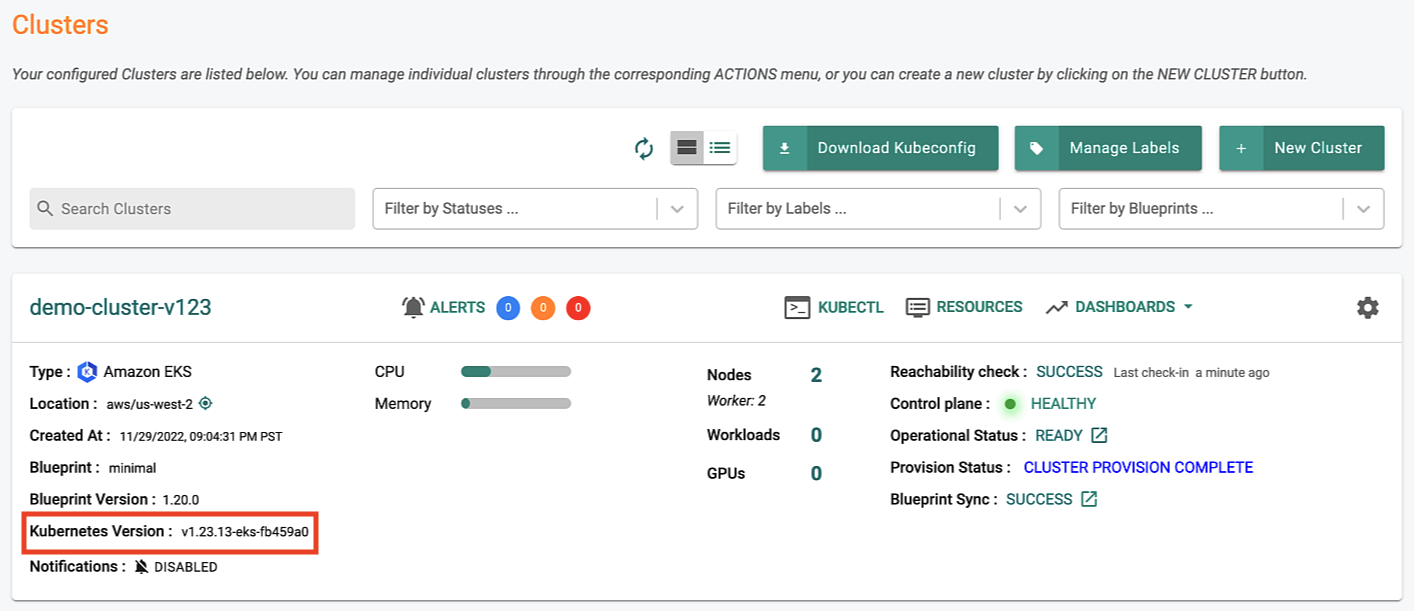

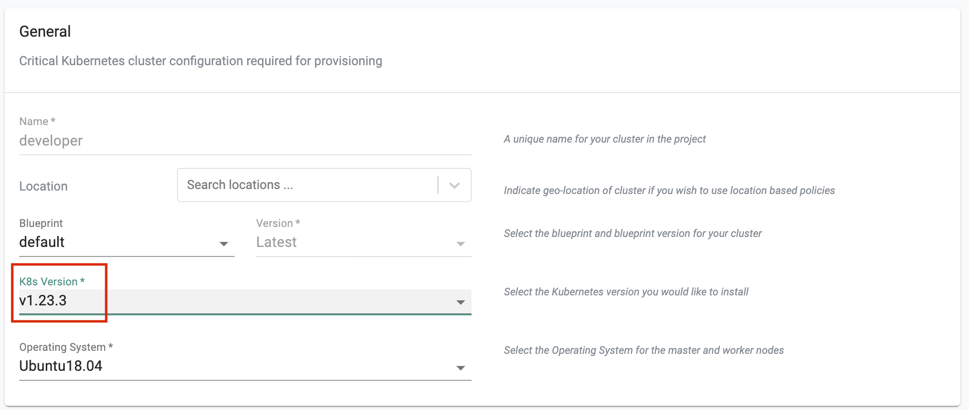

New v1.23 Clusters¶

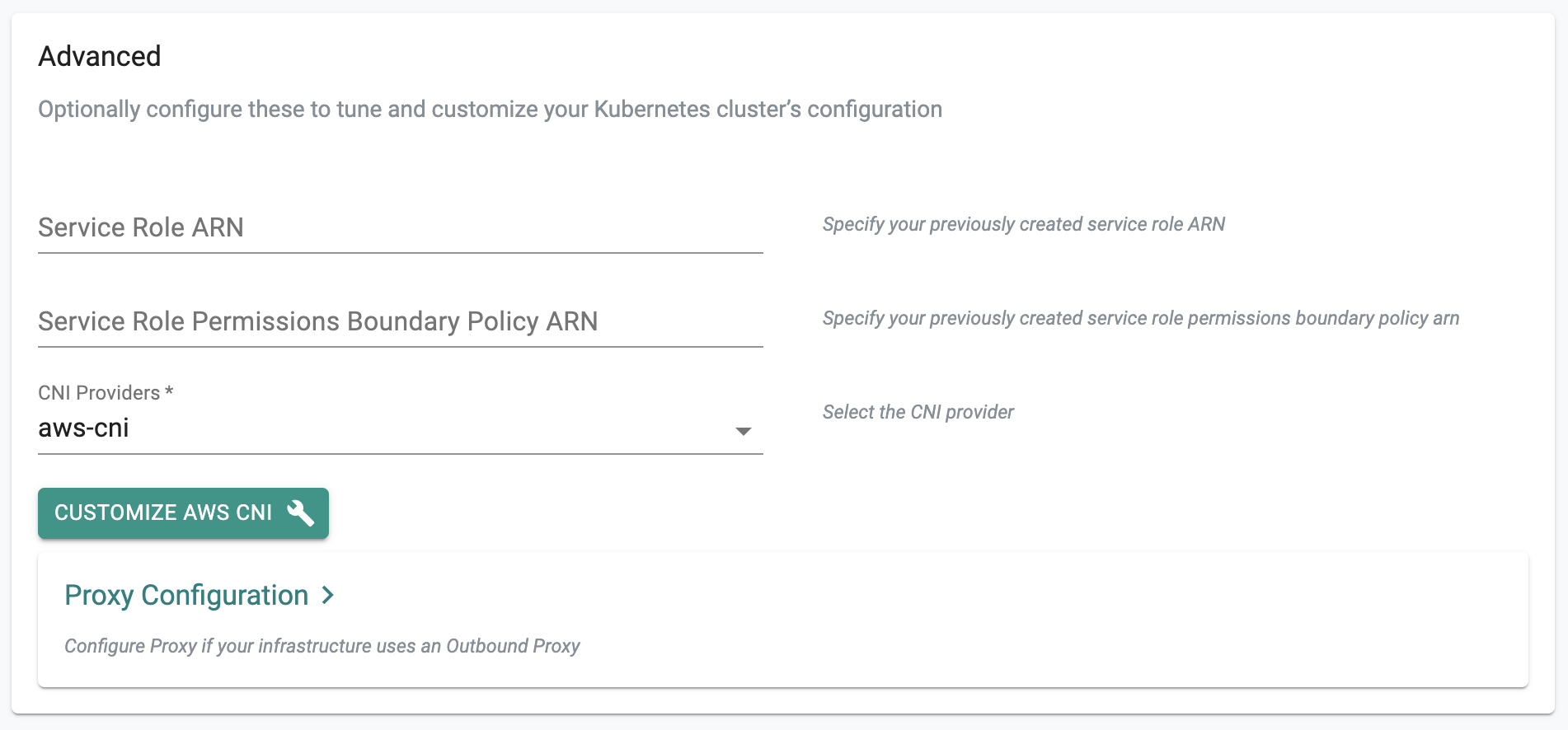

New Amazon EKS clusters can now be provisioned based on Kubernetes v1.23. Provisioning automatically deploys the AWS-recommended versions of the "EBS CSI driver" add-on for storage and of the critical add-ons the EKS cluster needs to operate properly (aws-node, kube-proxy and core-dns).

Users can optionally "override" the default versions of the "critical addons" on Day-1 (during cluster provisioning) or on Day-2 (after the cluster is provisioned).

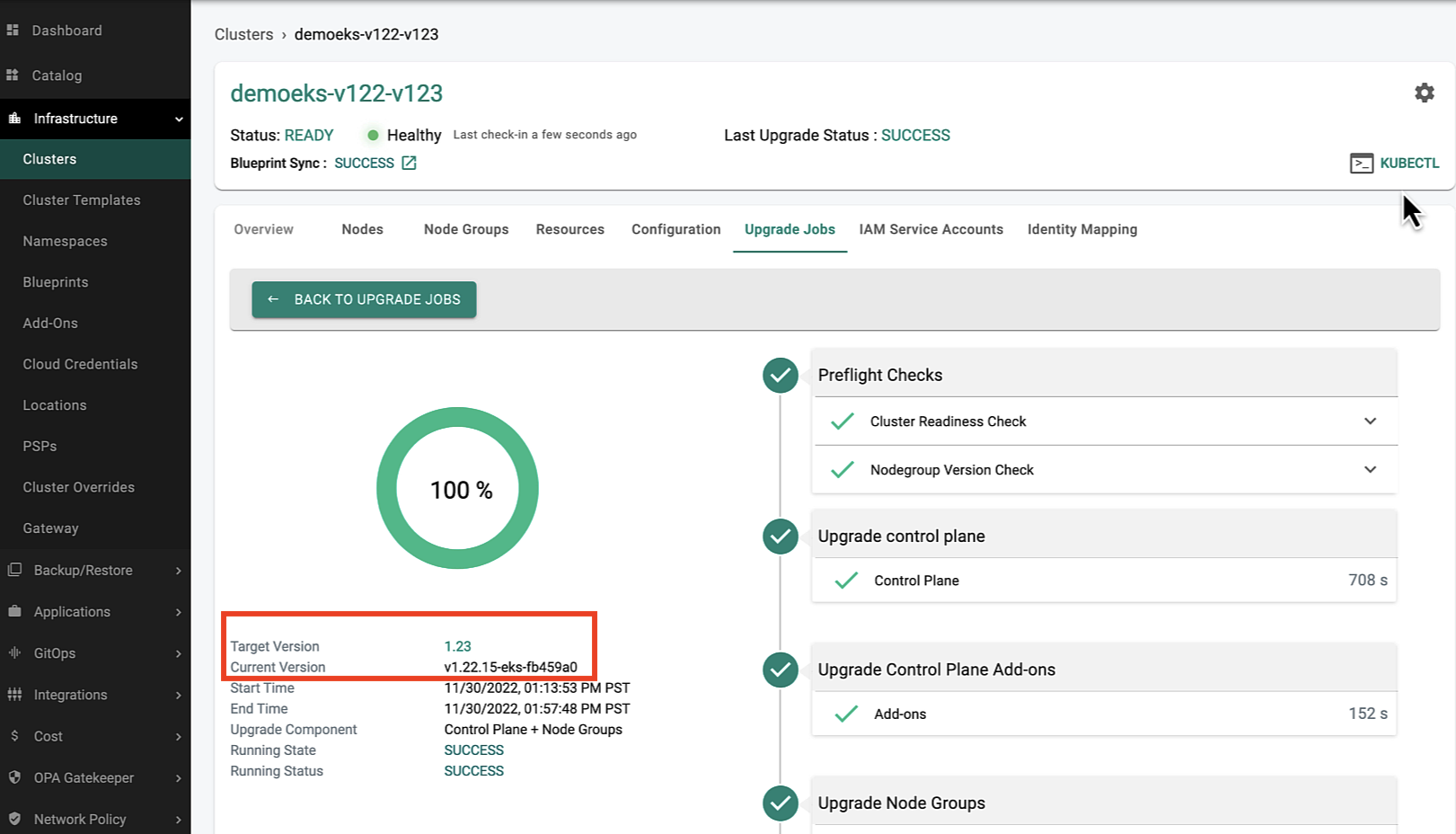

In Place Upgrades¶

Existing EKS clusters provisioned and managed by the controller can now be upgraded "in-place" to Kubernetes v1.23.

The in-place upgrade process will automatically install the EBS CSI driver add-on before initiating the upgrade to ensure there is no disruption to applications using storage.

Important

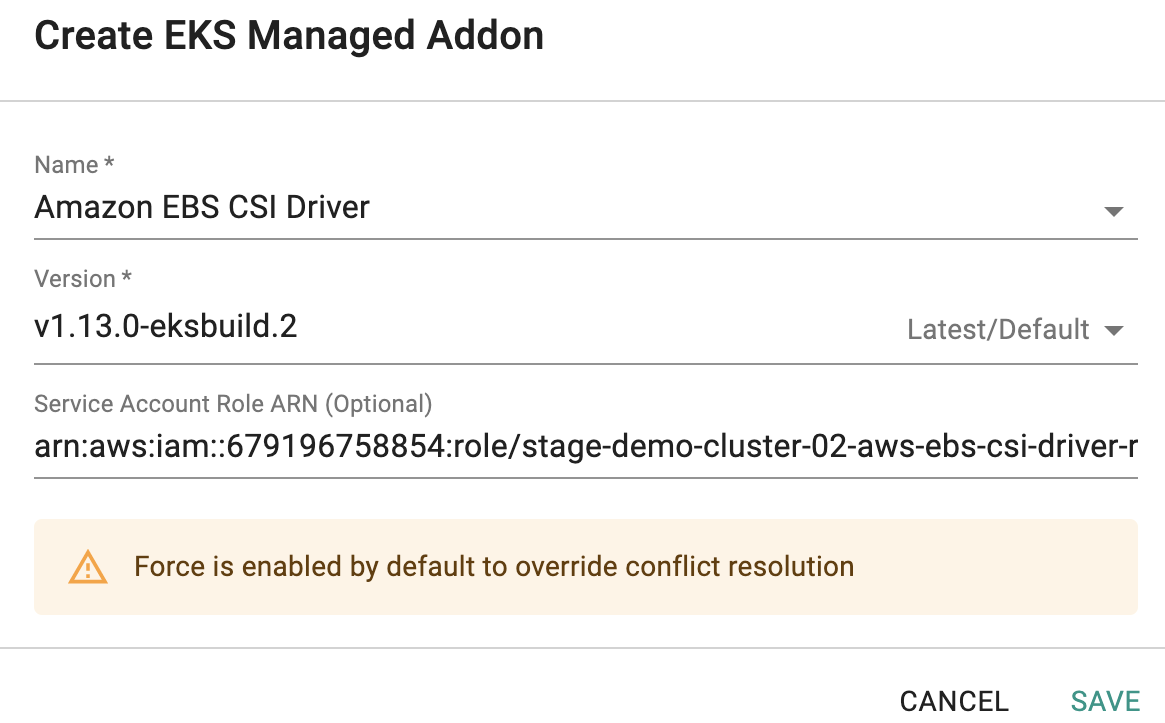

If the cluster was provisioned with cloud credentials based on "minimal permissions", add-on creation will fail because those credentials lack the permissions needed to create the IRSA (IAM Roles for Service Accounts) for the EBS CSI driver. In this case, users can create a new service account role ARN with all the necessary policies and use it to trigger add-on creation as a Day-2 operation.

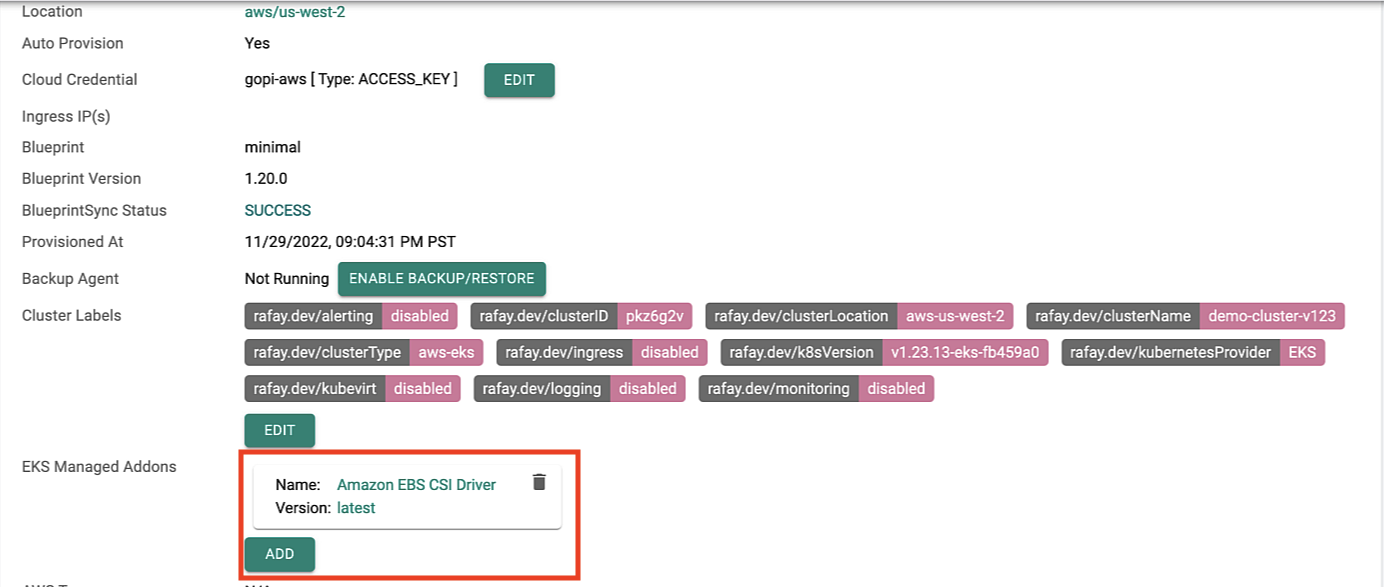

Managed Addons¶

Users can now override the "default" versions of the critical cluster add-ons (EBS CSI driver, aws-node, kube-proxy and core-dns) either Day-1 (before cluster provisioning) or Day-2 (after cluster provisioning).

The details and the versions of the critical add-ons are clearly identified and reported to the user as part of the cluster configuration.

Google GKE¶

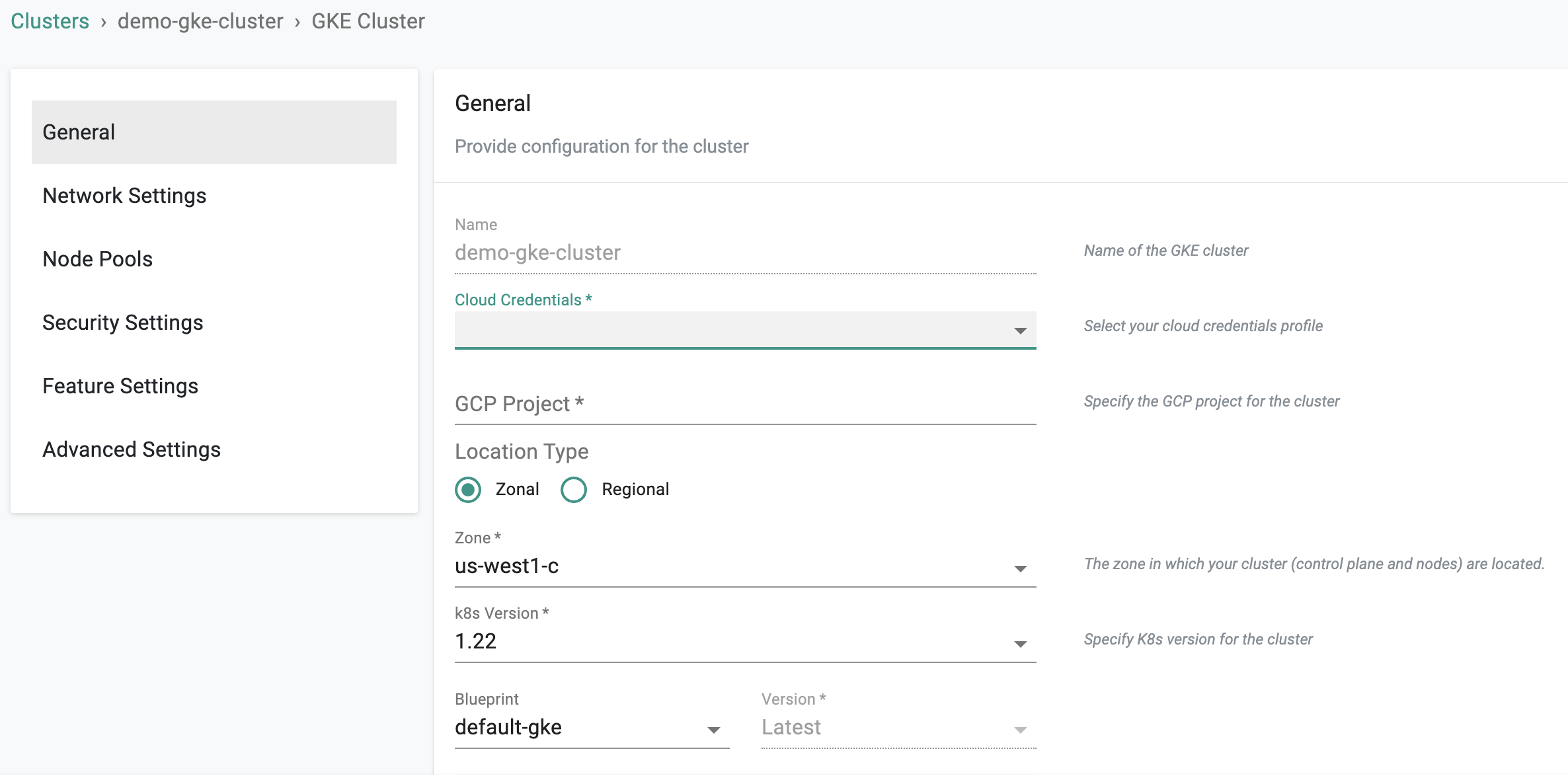

A number of UI enhancements have been made to streamline the cluster configuration workflows for GKE clusters.

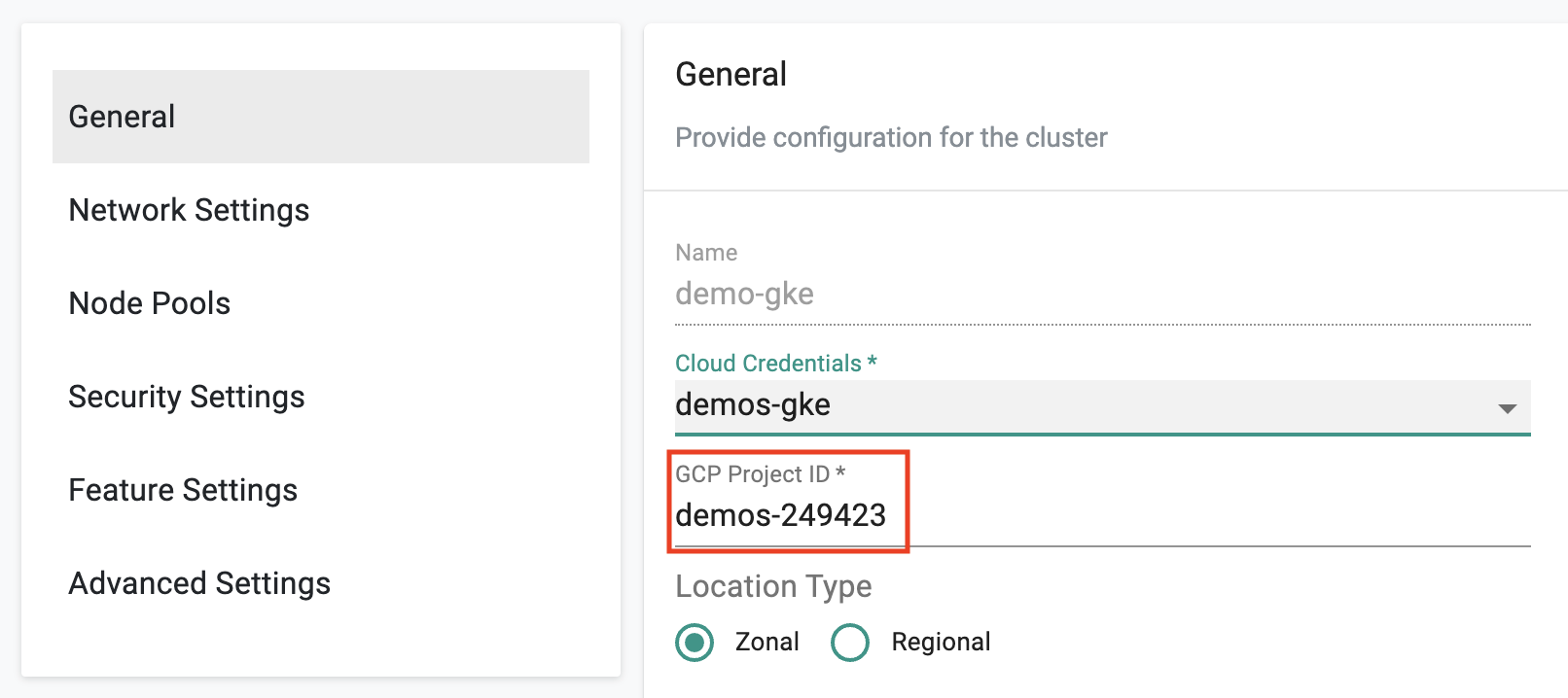

Auto Fill GCP Project¶

Once the user selects the cloud credential, the associated "GCP Project ID" is detected and auto-completed in the console, so the user does not have to log in to the GCP console just to retrieve it.

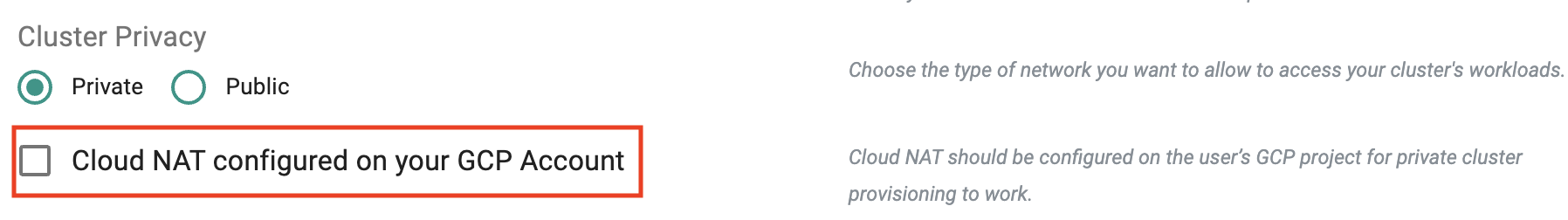

Cloud NAT¶

For "private" GKE clusters (i.e., the API server is not accessible from the Internet), users are prompted to acknowledge that they have configured Cloud NAT so that cluster provisioning can complete successfully.

Azure AKS¶

Day-2 Enhancements¶

For AKS clusters provisioned and managed by the controller, admins can now perform additional Day-2 operations such as adding or updating taints, node labels, tags and add-on profiles. These operations can be performed using the RCTL CLI, the web console or the Terraform provider (v1.12 and above).

Important

Azure only allows either CriticalAddonsOnly=true or a taint with the PreferNoSchedule effect to be added as a custom taint on a System node pool. For more information, visit here.
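As a rough illustration of that rule, the Go sketch below checks a proposed System node pool taint before it is submitted. The `Taint` type and the `validateSystemPoolTaint` function are hypothetical and are not part of the RCTL CLI, the Terraform provider or any Azure SDK; Azure itself remains the authority on which taints it accepts.

```go
package main

import "fmt"

// Taint is a simplified, hypothetical model of a Kubernetes node taint.
type Taint struct {
	Key    string
	Value  string
	Effect string // NoSchedule, PreferNoSchedule or NoExecute
}

// validateSystemPoolTaint encodes the restriction described above: a custom
// taint on an AKS System node pool must either be CriticalAddonsOnly=true or
// use the PreferNoSchedule effect. Any other taint is rejected.
func validateSystemPoolTaint(t Taint) error {
	if t.Key == "CriticalAddonsOnly" && t.Value == "true" {
		return nil
	}
	if t.Effect == "PreferNoSchedule" {
		return nil
	}
	return fmt.Errorf("taint %s=%s:%s is not allowed on a System node pool",
		t.Key, t.Value, t.Effect)
}

func main() {
	for _, t := range []Taint{
		{Key: "CriticalAddonsOnly", Value: "true", Effect: "NoSchedule"},
		{Key: "dedicated", Value: "infra", Effect: "PreferNoSchedule"},
		{Key: "dedicated", Value: "infra", Effect: "NoSchedule"},
	} {
		fmt.Printf("%+v -> %v\n", t, validateSystemPoolTaint(t))
	}
}
```

Running a check like this client-side simply surfaces the restriction earlier; the same taint would otherwise be rejected when the node pool update reaches Azure.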

Secrets Manager Integration¶

Self Service¶

Organizations can now offload the administration of the "SecretProviderClass" for each namespace to the application team. Workspace admins can now create and manage "SecretProviderClass" resources for the namespaces they administer and manage.

Different Regions¶

The SecretProviderClass wizard now supports scenarios where the EKS cluster and AWS Secrets Manager are in different AWS regions. This enables "secure" global application deployments spanning multiple EKS clusters.

GitOps & Workloads¶

Pipeline Job Visibility¶

Users now have fine-grained visibility into Pipeline Jobs that are in the queue.

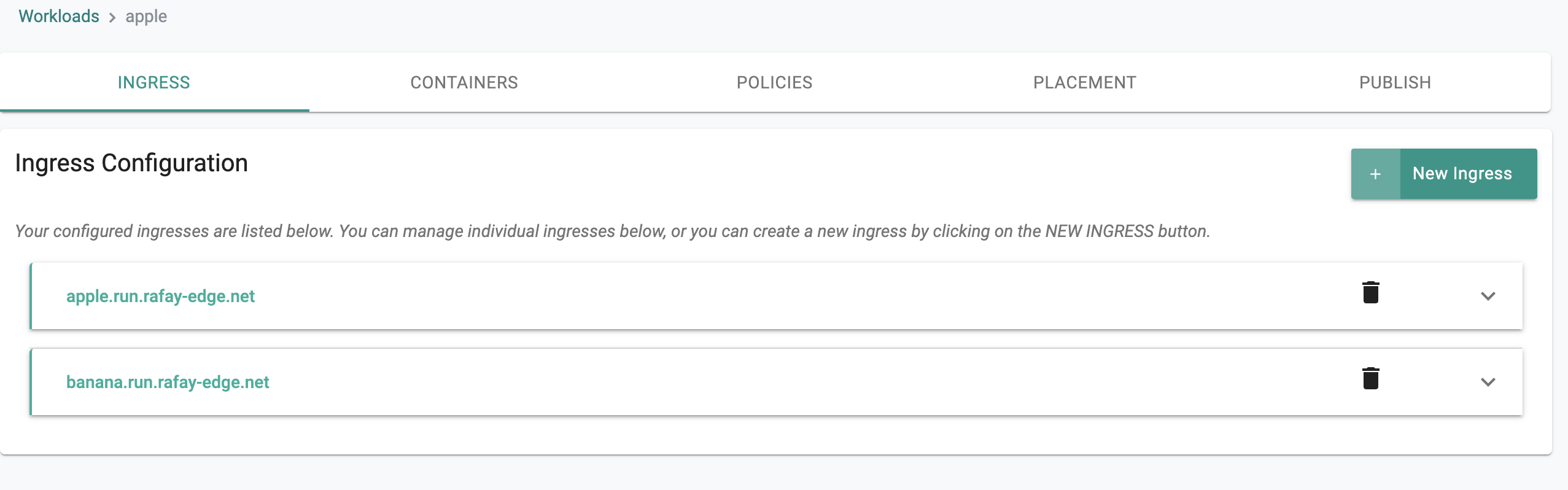

Multiple Hostnames in Single Ingress¶

The workload wizard has been enhanced to support the use of multiple hostnames in a single Ingress object.

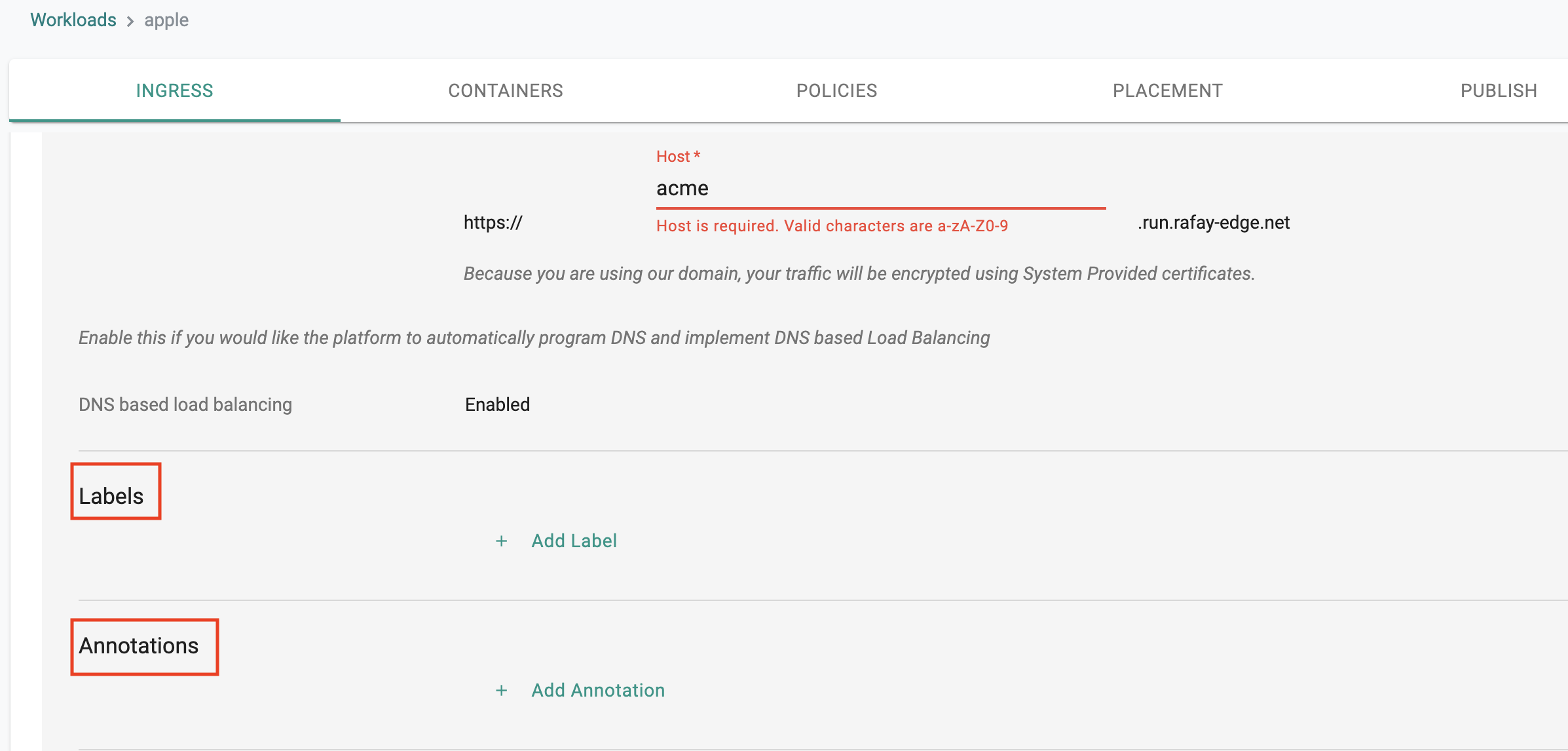

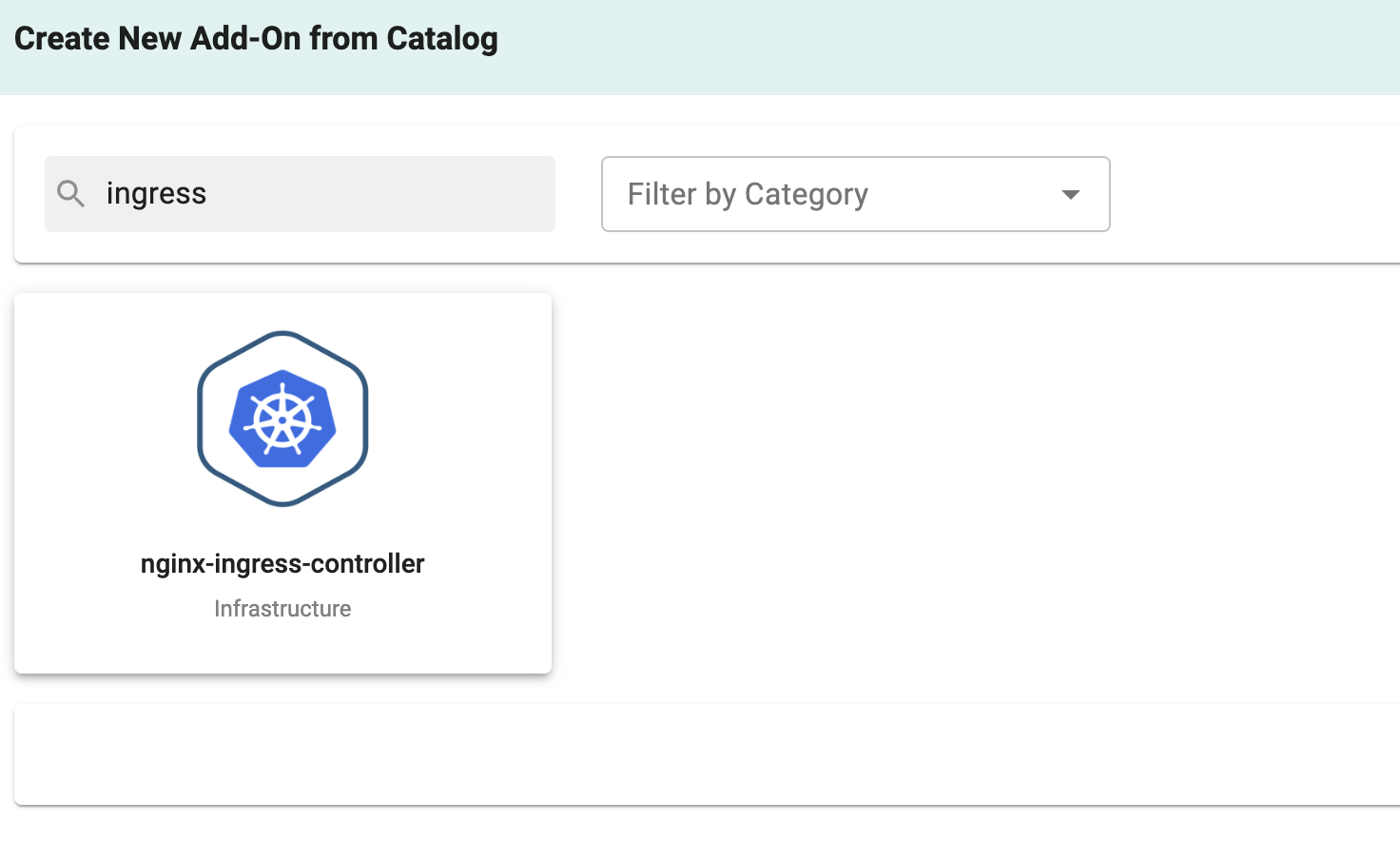

Ingress Annotations¶

The workload wizard has been enhanced to support the use of Ingress annotations.

Cluster Blueprints¶

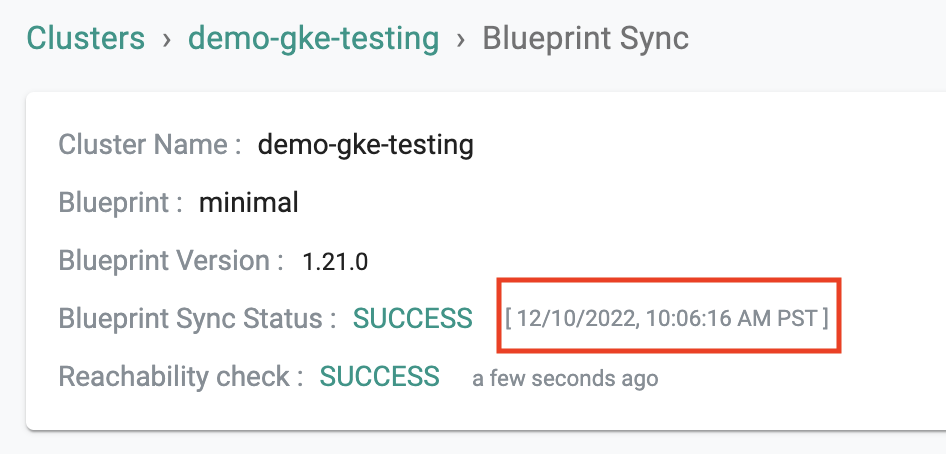

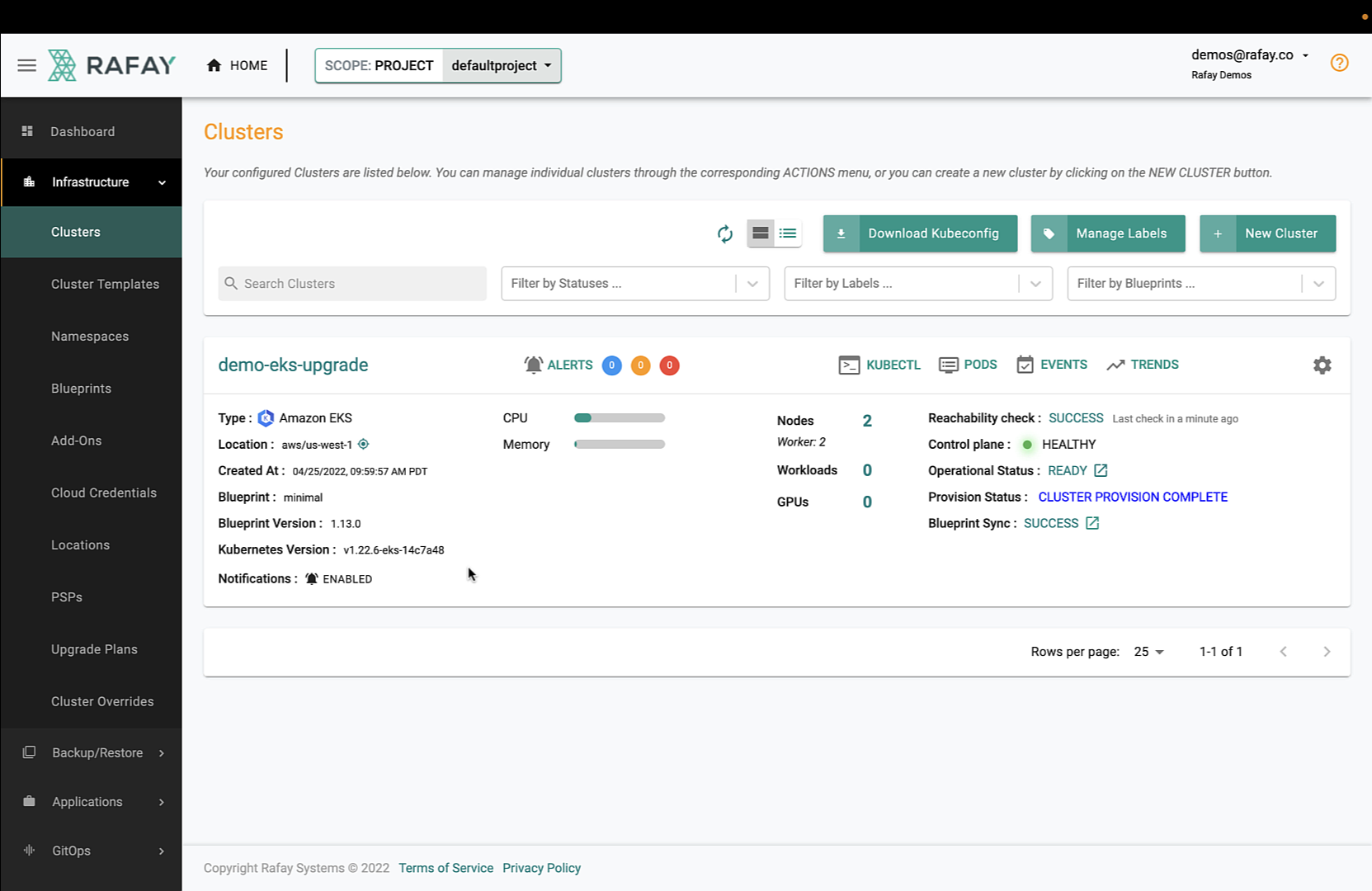

Sync Complete Timestamp¶

Users can now present the blueprint "sync complete" timestamp to auditors as evidence of compliance. This timestamp identifies the exact time when the cluster blueprint sync was deemed complete, that is, when all k8s resources associated with the cluster blueprint had been deployed on the cluster.

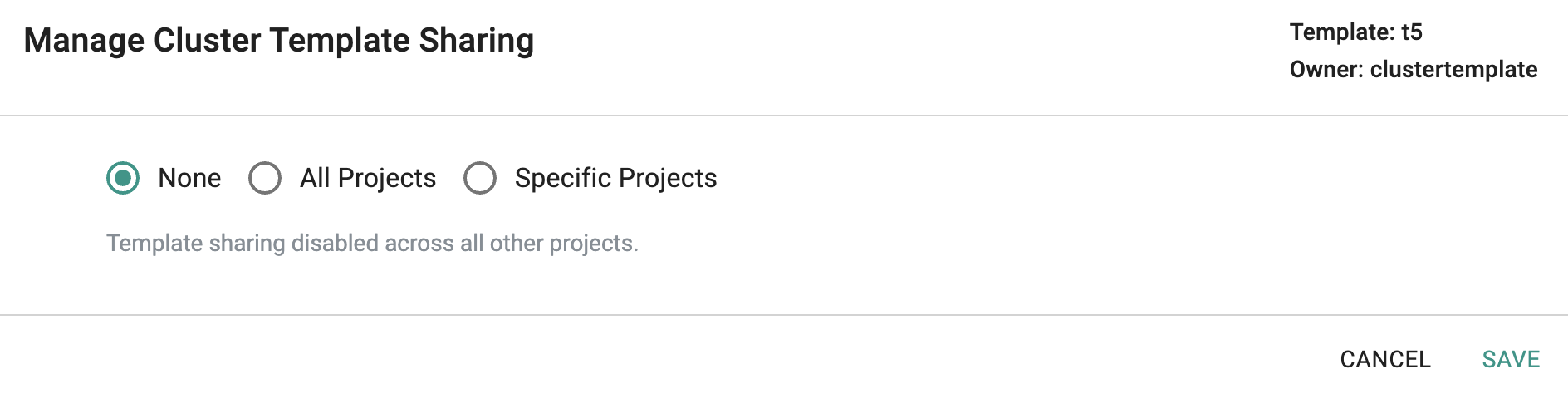

Sharing¶

Cluster blueprints configured with "fleet labels" can now be shared with other projects. This allows organizations to update their cluster blueprints across the entire Org quickly and efficiently.

SSO¶

Union of Local and IdP Groups¶

Organizations that use a combination of "local" and "IdP groups" can now configure and use a "union of groups" for role-based access control.

Dashboards¶

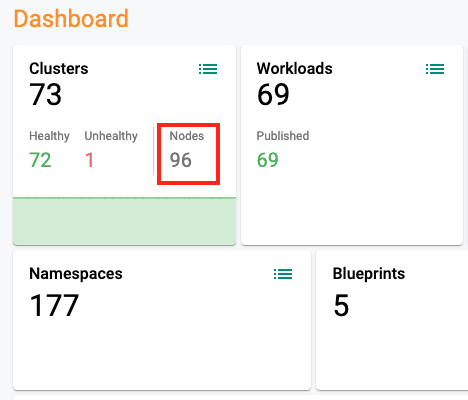

Active Nodes under Management¶

Org and Project dashboards now show the total number of active nodes under management.

Network Policy Manager¶

Time Period¶

Users can now select a time period to view metrics.

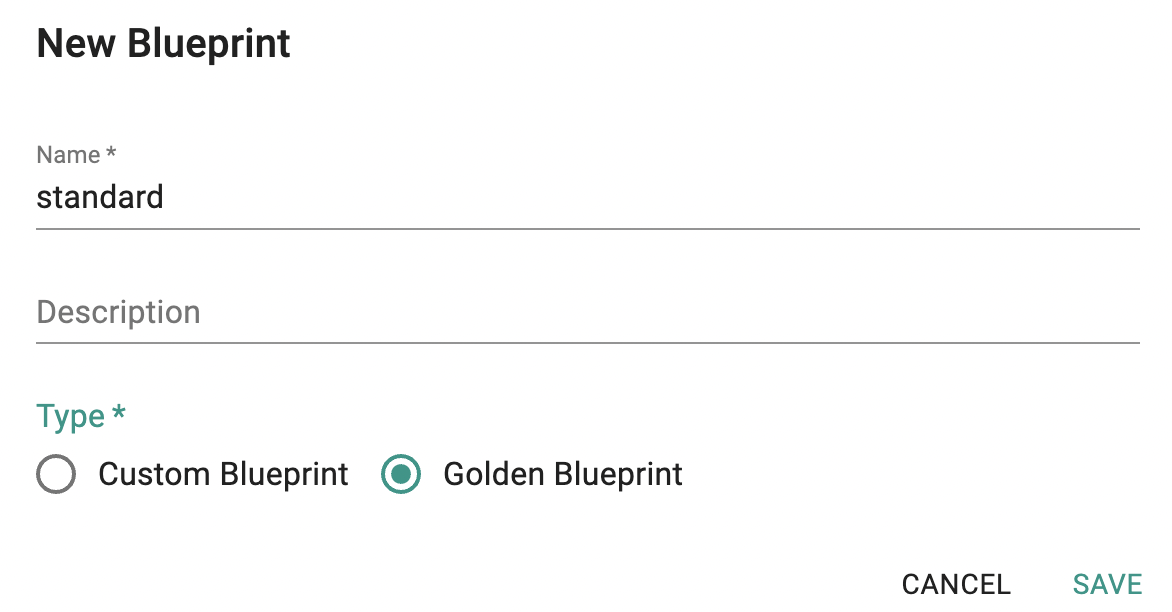

Golden Blueprints¶

Users can now configure Network Policies with Golden Blueprints to ensure that certain baseline policies are applied by default across clusters.

Terraform Provider¶

The Terraform provider has been updated and enhanced with support for additional IaC scenarios and resources. See documentation for v1.12 of the provider for additional details.

Bug Fixes¶

| Bug ID | Description |

|---|---|

| RC-21179 | Git-to-System Sync fails for an EKS Cluster with “withLocal“ and “withShared“ variables under Security Group & “sharedNodeSecurityGroup“ and “manageSharedNodeSecurityGroupRules“ variables defined under VPC |

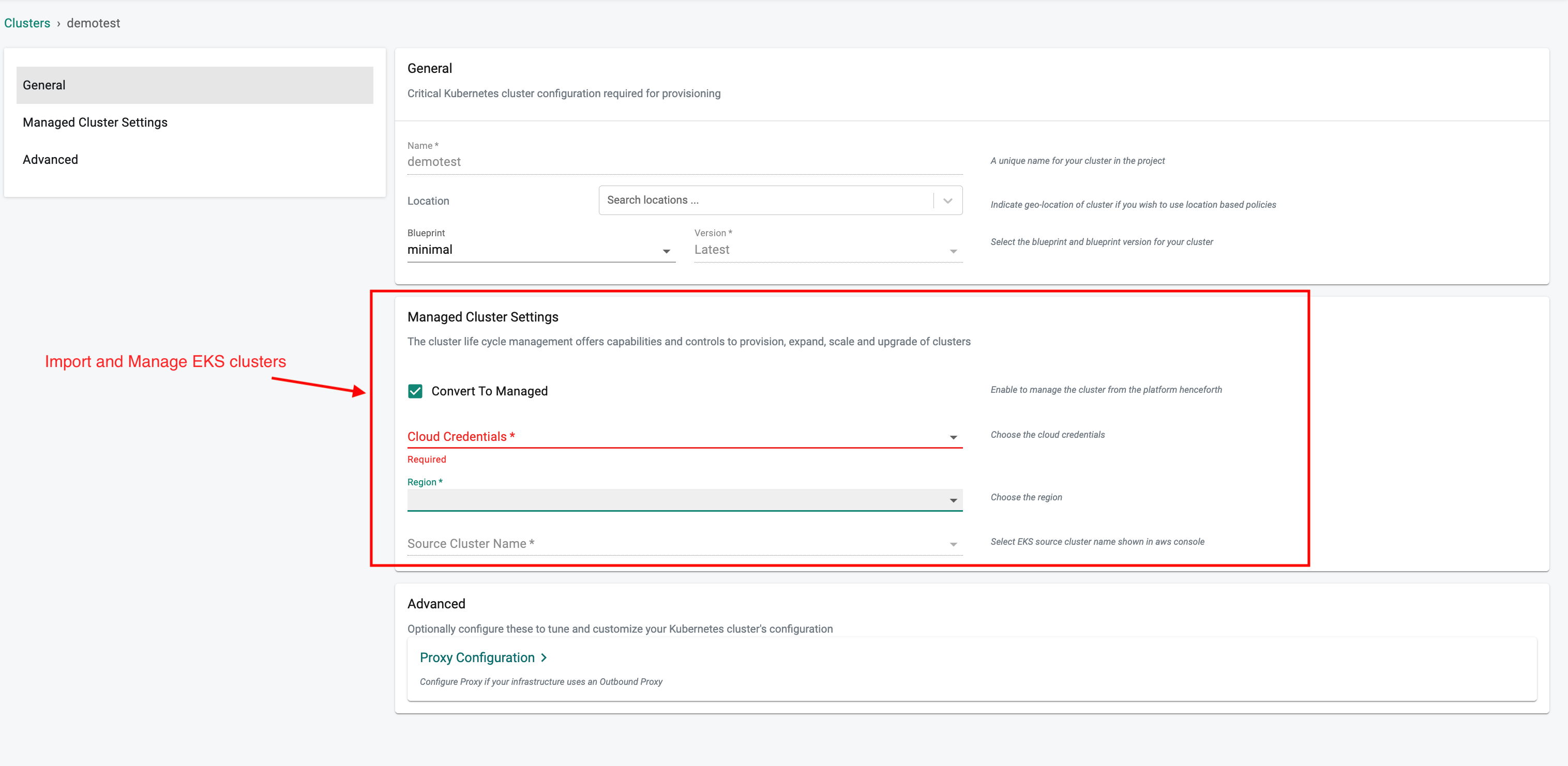

| RC-21080 | Nodegroup labels getting incorrectly set intermittently during the Convert To Managed operation for EKS clusters |

| RC-20822 | No validation to allow publishing the workload only when there is a cluster in placement |

| RC-21112 | During ConvertToManaged, all subnets of VPC are getting synced instead of Cluster/Nodegroup subnets |

| RC-20612 | Workload deployment fails when unpublish/delete is in progress with the same name |

| RC-19937 | RCTL: rctl get workload |

| RC-21148 | After an imported EKS cluster is converted to managed, nodegroup upgrade fails if edge-client is running on an imported self-managed nodegroup |

v1.20¶

18 Nov, 2022

Important

Customers must update their cluster blueprints to the latest base blueprint version (v1.20) to use many of the new features described below.

GKE Lifecycle¶

Lifecycle management for GKE clusters has been significantly enhanced with a newly developed Cluster API based provider for GKE.

Self-Service UI¶

Users can now configure and provision GKE clusters using an intuitive, self-service wizard.

Declarative Cluster Spec¶

Users can use a declarative cluster specification to specify "Desired State" for their GKE clusters.

RCTL CLI¶

Users can use the RCTL CLI with declarative cluster specifications to create, scale, upgrade and delete GKE clusters.

```shell
rctl apply -f gke_cluster_spec.yaml
```

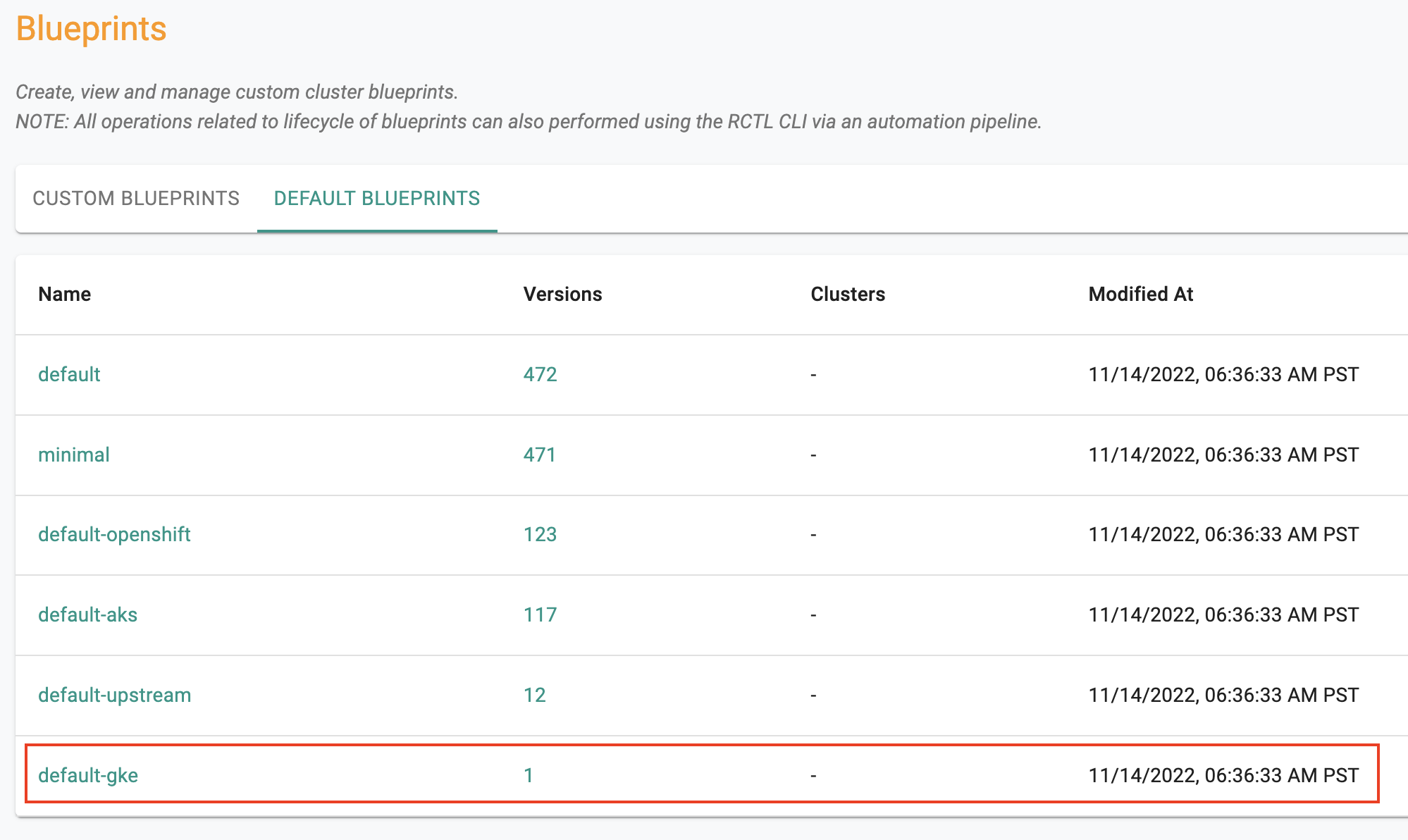

Default GKE Blueprint¶

A turnkey "default blueprint" for GKE clusters is now available that users can optionally use as a baseline.

Info

Learn more about GKE Lifecycle Management here.

Kubernetes on vSphere¶

Taints and Labels¶

Admins can now edit taints and labels on cluster nodes.

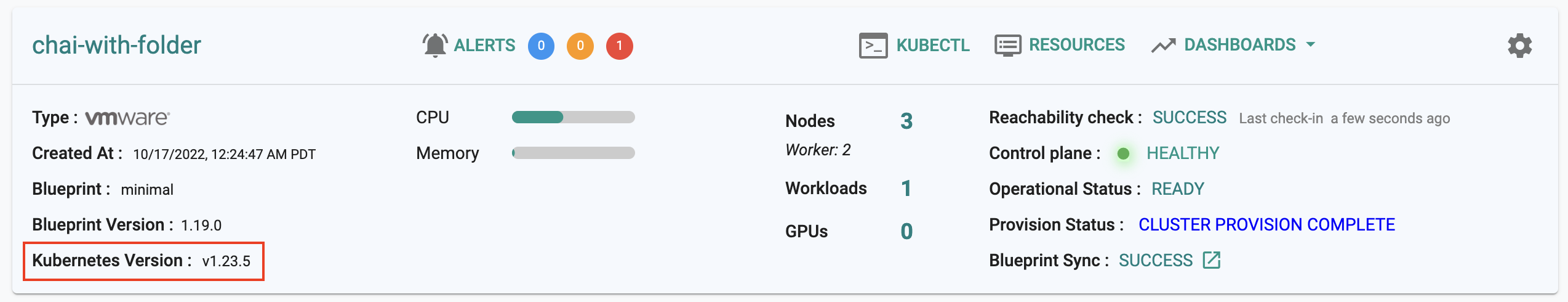

Display Kubernetes Version¶

The cluster card now displays the Kubernetes version of the cluster.

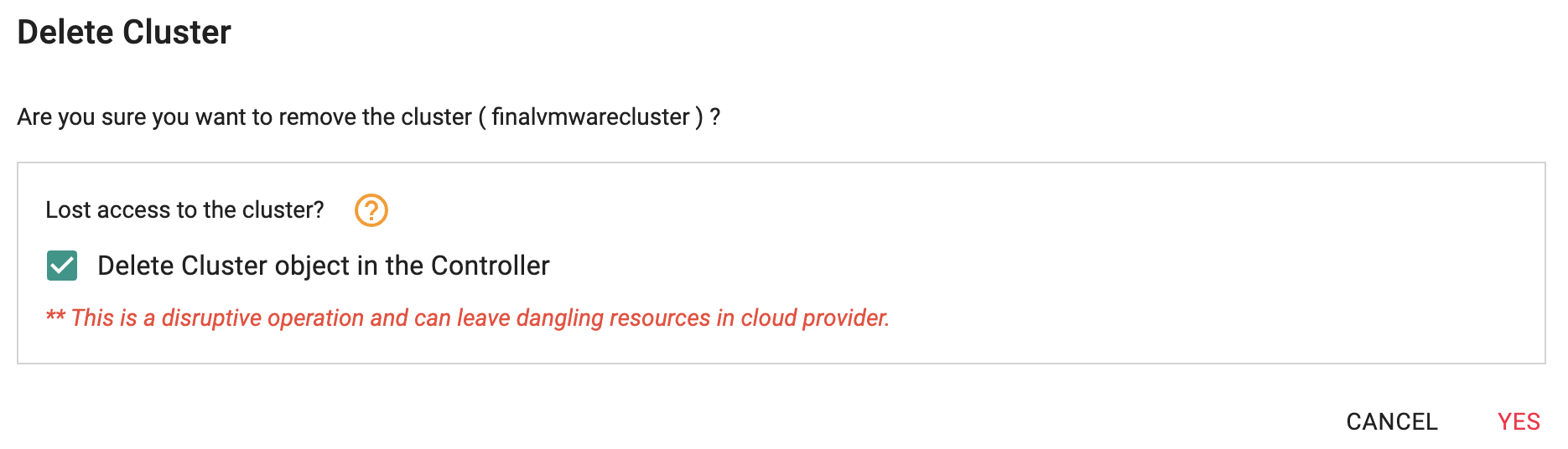

Force Delete¶

Admins can now use a force delete option to delete and clean up orphaned clusters from their projects.

Info

Learn more about this here.

Kubernetes for Bare Metal and VMs¶

Enhanced Declarative Specs¶

The schema for declarative specifications has been enhanced to cover all the functionality available for Upstream Kubernetes on Bare Metal and VM based environments.

Info

Learn more about the declarative specs here.

CLI Enhancements¶

The RCTL CLI now uses declarative cluster specifications for lifecycle management for clusters of this type.

Users can use the declarative cluster specification to provision clusters (Day-1 operations). For Day-2 changes, they only need to update the cluster specification YAML and apply it with RCTL. The controller automatically identifies the changes, maps them to the required actions and brings the cluster to the desired state.

```shell
rctl apply -f upstream_cluster_spec.yaml
```

Multi Minion¶

The RCTL CLI has been enhanced to support the configuration of multiple Salt minions on cluster nodes, allowing coexistence with the customer's existing Salt infrastructure.

Cluster Blueprints¶

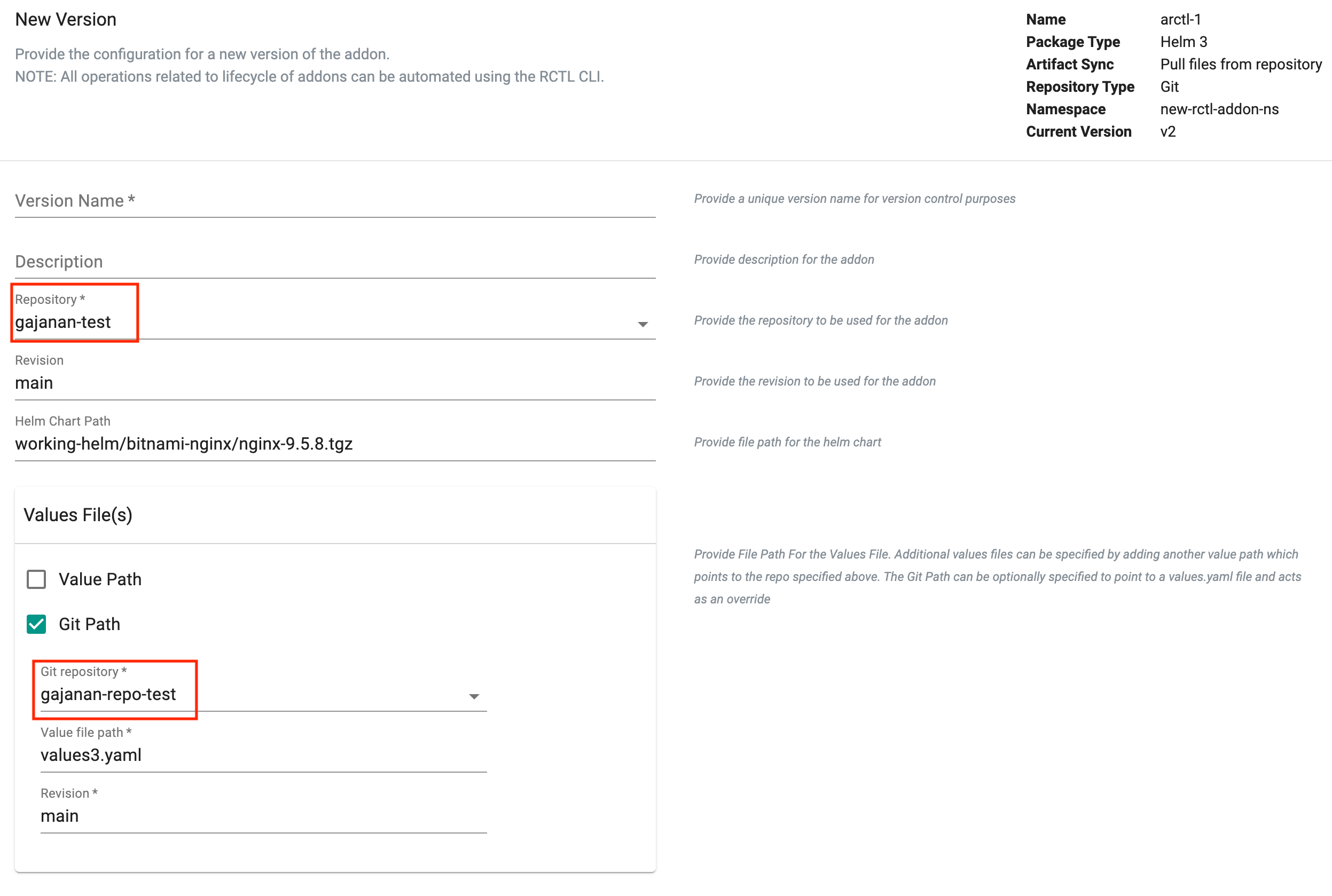

Git Repo based Values Override¶

For "addons" based on Helm charts from a Git repository, users can now specify the "values.yaml" override file from a "different" Git repository.

- Helm Chart -> Git Repo-1

- Values Override -> Uploaded File

- Values Override -> Git Repo-2

Important

When both an "uploaded" values.yaml and a separate Git repo based values.yaml override are specified, the Git repo based override takes precedence.
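A minimal Go sketch of that precedence rule follows. It assumes the two override files are layered the way `helm install -f uploaded.yaml -f git.yaml` layers values files, merging nested keys and letting the rightmost file win on conflicts. The release note only states that the Git repo based file is preferred, so the key-by-key merge is an assumption, and `mergeValues`/`effectiveValues` are hypothetical helpers rather than the controller's code.

```go
package main

import "fmt"

// mergeValues returns a new map with override layered on top of base.
// Nested maps are merged key by key; for any other value, override wins.
func mergeValues(base, override map[string]any) map[string]any {
	out := make(map[string]any, len(base)+len(override))
	for k, v := range base {
		out[k] = v
	}
	for k, v := range override {
		baseMap, baseIsMap := out[k].(map[string]any)
		overMap, overIsMap := v.(map[string]any)
		if baseIsMap && overIsMap {
			out[k] = mergeValues(baseMap, overMap)
			continue
		}
		out[k] = v
	}
	return out
}

// effectiveValues layers the uploaded override first and the Git repo based
// override last, so the Git repo based values take precedence on conflicts.
func effectiveValues(uploaded, fromGit map[string]any) map[string]any {
	return mergeValues(uploaded, fromGit)
}

func main() {
	uploaded := map[string]any{
		"replicaCount": 2,
		"image":        map[string]any{"tag": "1.0", "pullPolicy": "Always"},
	}
	fromGit := map[string]any{
		"image": map[string]any{"tag": "2.0"},
	}
	fmt.Println(effectiveValues(uploaded, fromGit))
}
```

Under this assumption, keys that appear only in the uploaded file (such as `replicaCount` above) survive, while conflicting keys (`image.tag`) take the Git repo based value.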

SSO¶

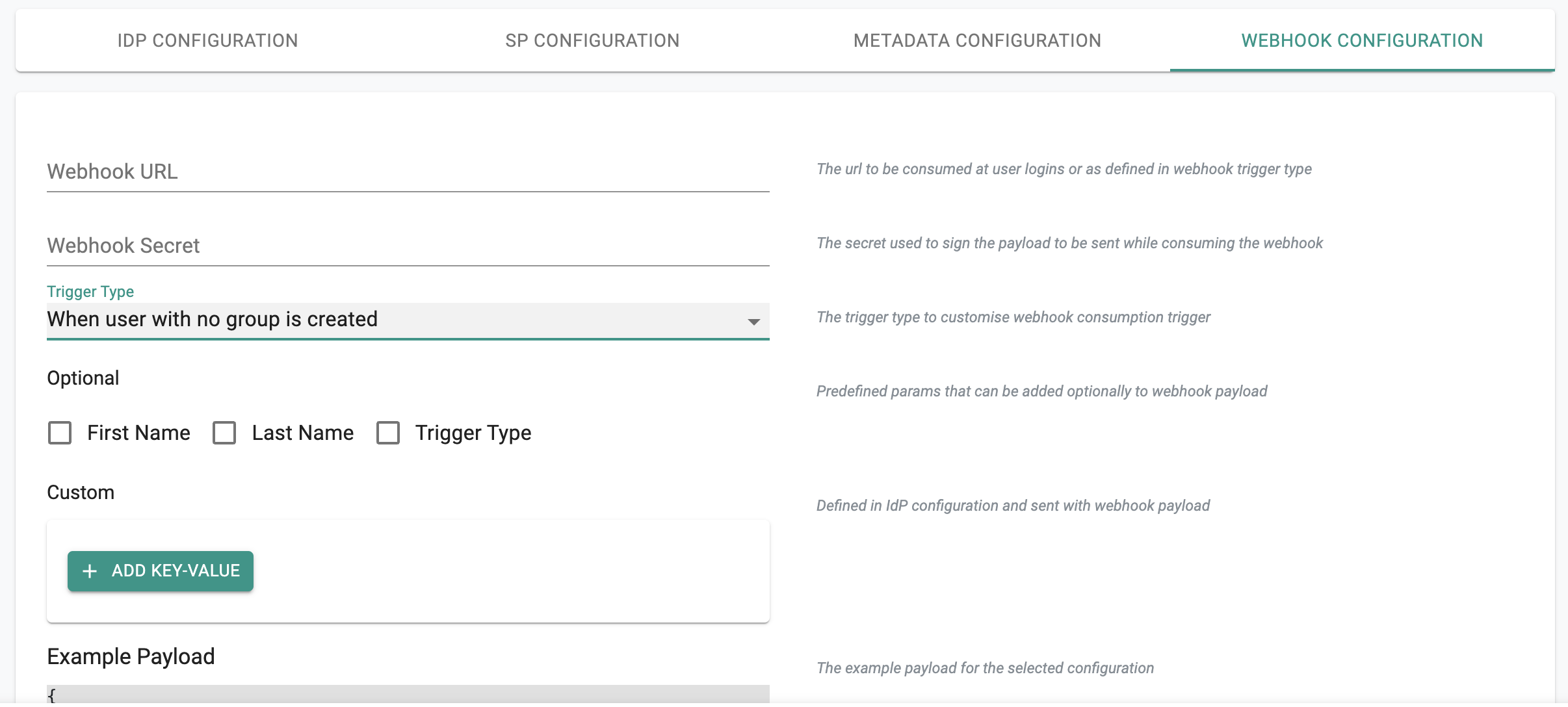

Webhooks Notifications¶

Webhook notifications can be configured to be sent to an external webhook receiver whenever an IdP user logs in. For example, this can be used to streamline the "new user onboarding experience", especially for IdP/SSO users that are not configured with any group information.

Info

Learn more about webhooks for IdP here.

Namespaces¶

Project Labels¶

Kubernetes namespaces created out of band of the platform through the "zero trust kubectl" channel (e.g., kubectl create ns acme) are automatically injected with a "label" identifying the name of the "project" in which the cluster is deployed.

Info

Learn more about automatic injection of "project" name to namespace labels here.

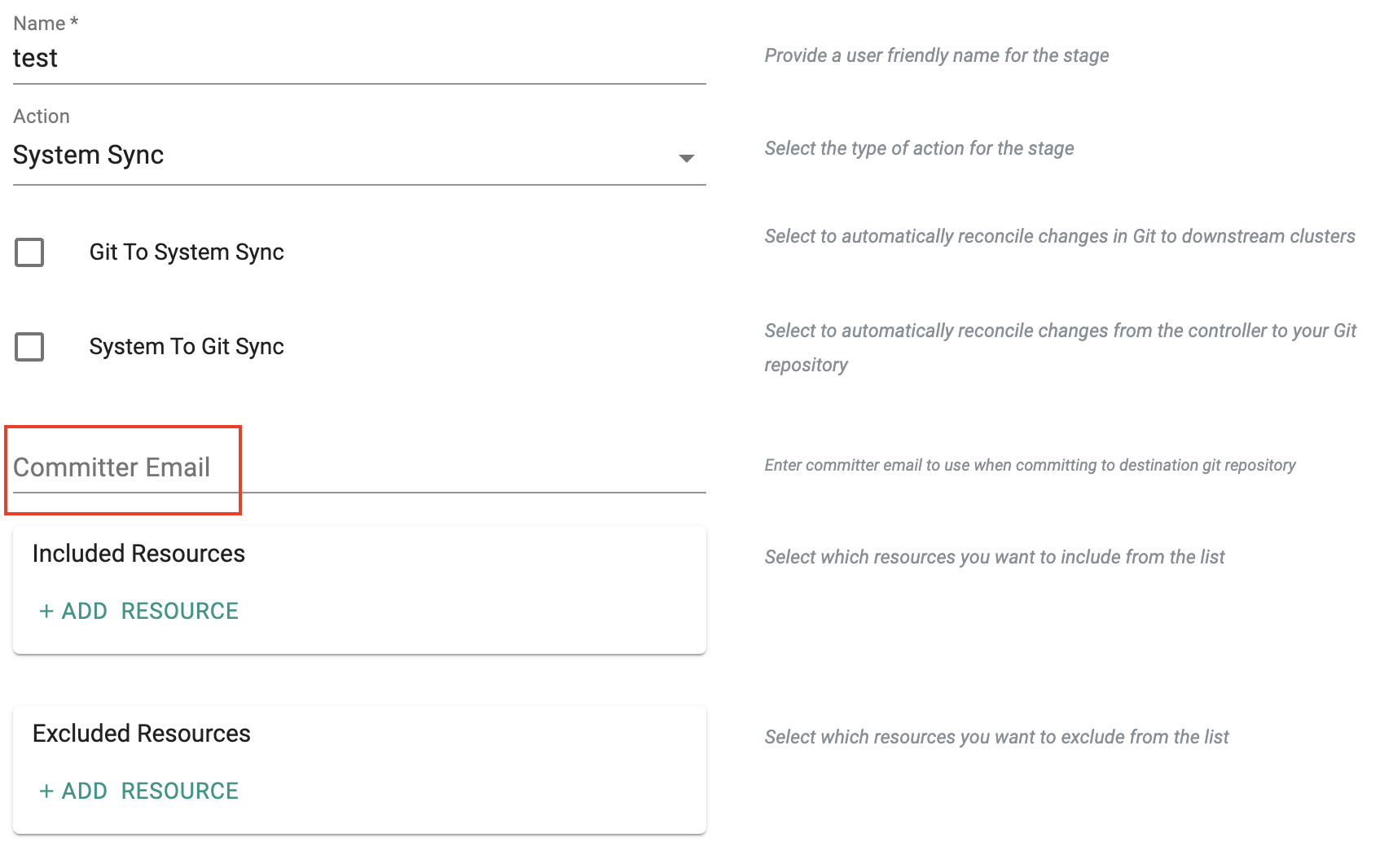

GitOps¶

GitOps pipelines with "Write Back to Git" enabled can now optionally include a "committer" email address for tracking and audit logging purposes.

Workloads¶

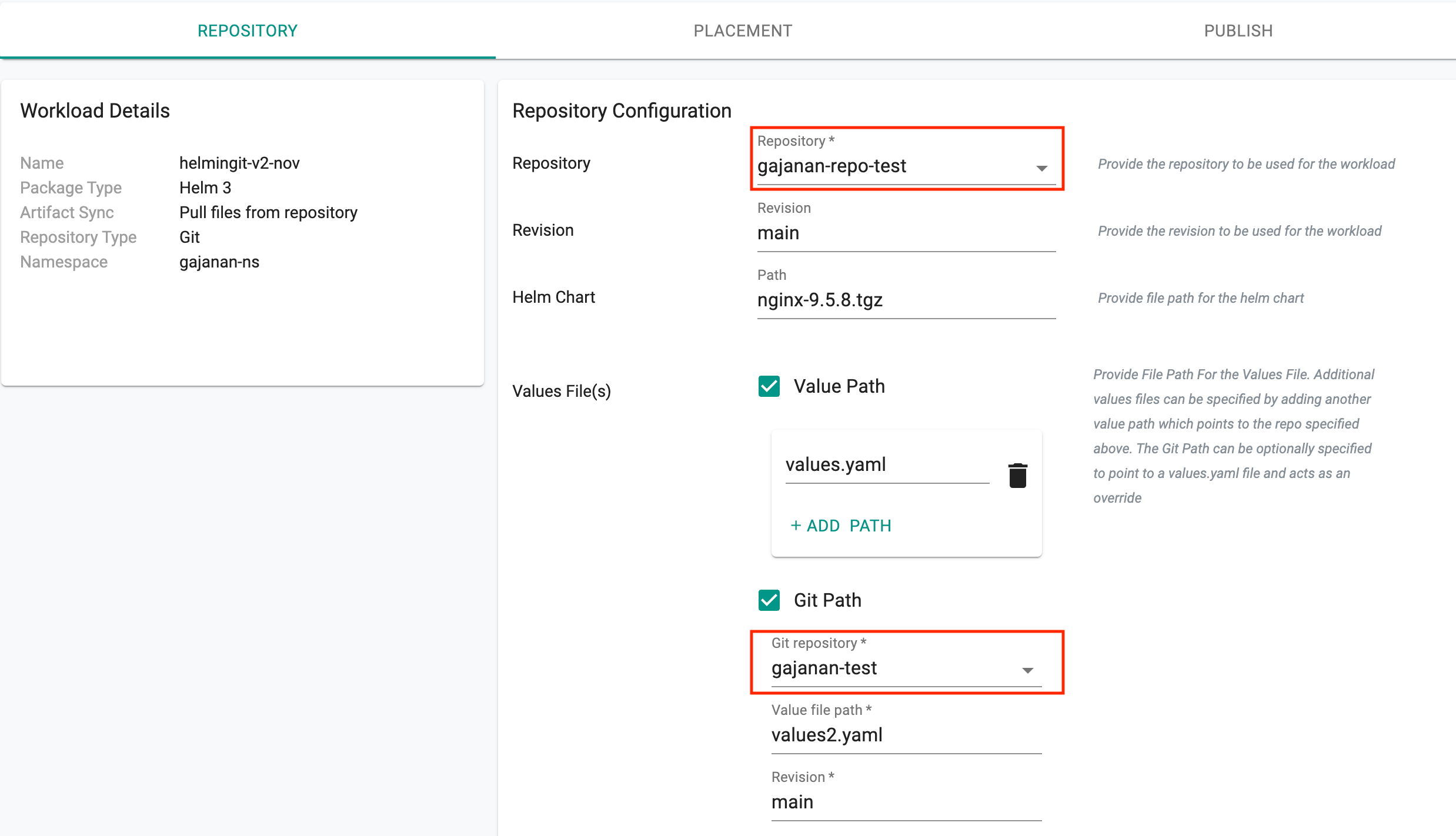

Git Repo based Values Override¶

For "workloads" based on Helm charts from a Git repository, users can now use both an "uploaded" values.yaml override file AND an override from a Git repository different from the one hosting the Helm chart.

- Helm Chart -> Git Repo-1

- Values Override -> Uploaded File

- Values Override -> Git Repo-2

Important

When both an "uploaded" values.yaml and a separate Git repo based values.yaml override are specified, the Git repo based override takes precedence.

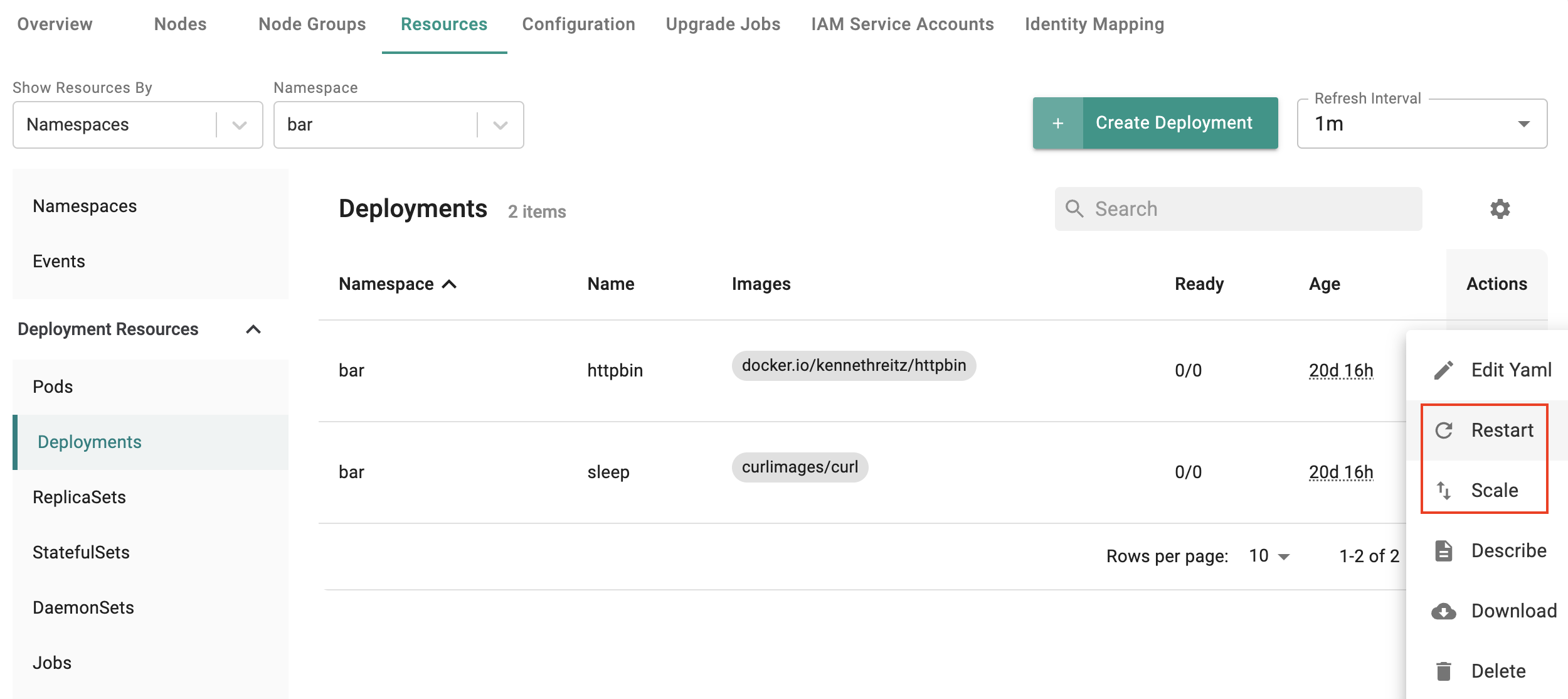

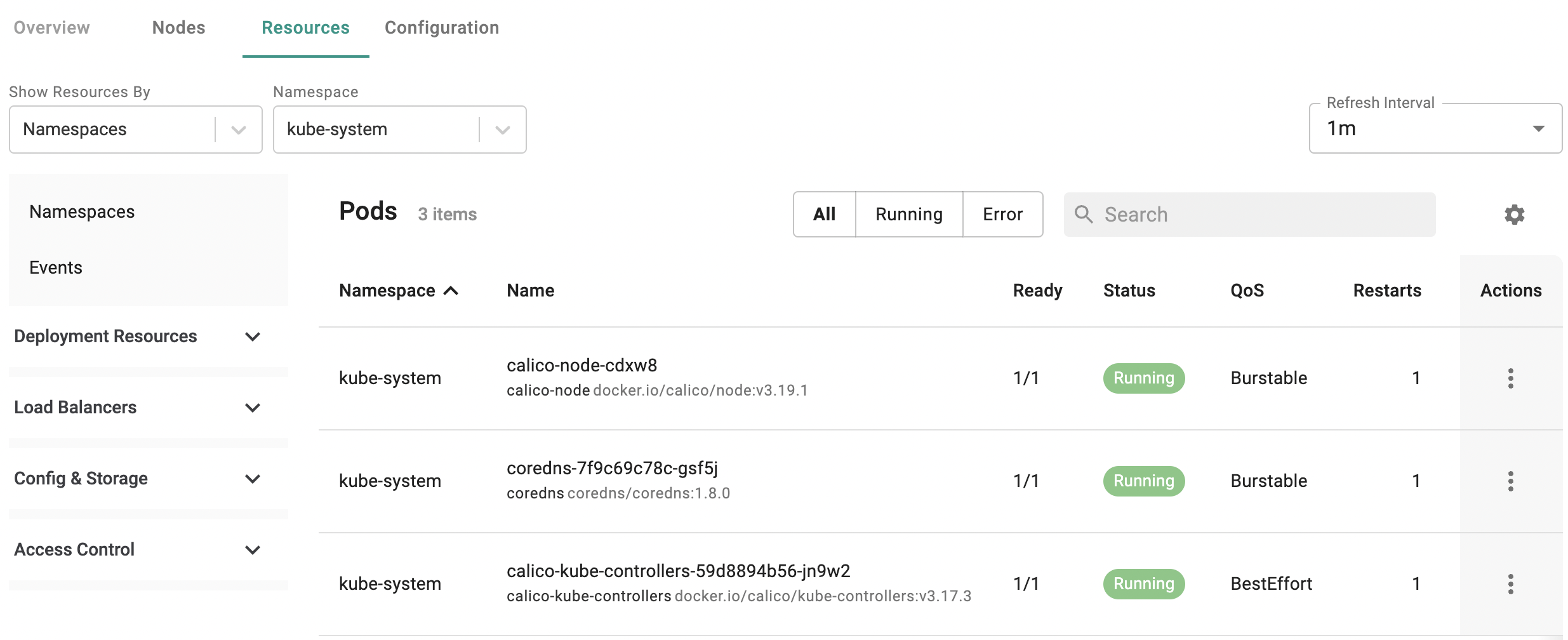

Kubernetes Resource Dashboards¶

Scale Deployments¶

Authorized users can now scale their deployments (up/down) directly from the integrated Kubernetes resources dashboard.

Redeploy Deployments¶

Authorized users can now redeploy/restart their deployments directly from the integrated Kubernetes resources dashboard.

Important

Both scaling and redeploying from the Kubernetes dashboard are blocked if the drift detection capability is enabled for the workload.

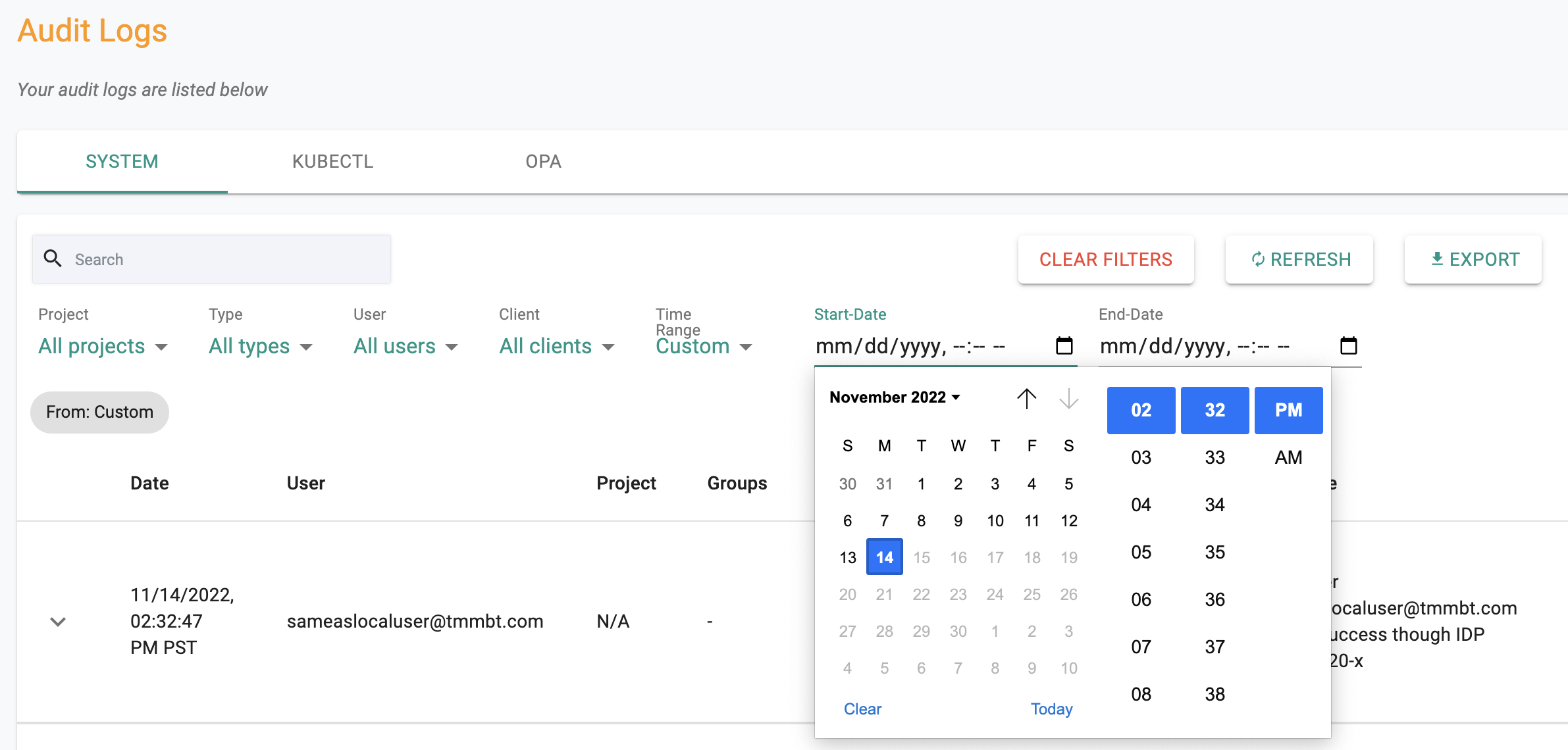

Audit Logs¶

Custom Date/Time Filter¶

Users can now use a custom date and time filter for search in the audit logs.

Catalog¶

Additions to System Catalog¶

The System Catalog has been updated to add support for the following repositories.

| Category | Repository |
|---|---|
| Identity Service | Dex |
| Security | Aqua |
| Certificate Management | Cert-Manager |

Terraform Provider¶

The Terraform provider has been updated and enhanced with support for additional IaC scenarios and resources (v1.1.1 and v1.1.2).

Highlights include new Terraform resources for:

- Cost Management

- Network Policy Manager

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-20646 | Vault: Secretstore webhook is unable to renew the token |
| RC-20106 | Vault: Secretstore webhook should return a user-friendly error when the image is not available in the registry |
| RC-19896 | Workloads view refreshing every few seconds causes search results to be lost |
| RC-18976 | Intermittent connection failures to the Salt master while provisioning nodes with Upstream K8s |

v1.19¶

28 Oct, 2022

Important

Customers must update their cluster blueprints to the latest base blueprint version (v1.19) to use many of the new features described below.

Cost Management Service¶

One Step Enablement¶

Administrators can enable cost visibility simply by enabling it in their cluster blueprint. All the required software is automatically installed and configured on the targeted clusters. Cost data is aggregated from all clusters and persisted on the controller for visualization and analytics.

Shared Monitoring Stack¶

The integrated "cost management" and "visibility and monitoring" services share the same Prometheus based monitoring stack, so there is no need for duplicate deployments consuming resources on the cluster.

IAM Roles for CUR Access¶

An IAM role can now be used for secure access to AWS Cost and Usage Report (CUR) files in the customer's AWS account. Organizations are strongly encouraged to use this instead of IAM users.

Integrated Cost Dashboards¶

Users with various roles (Org Admins, Project Admins etc.) in the platform are provided contextual and RBAC enabled visibility into costs associated with resources under their management.

Chargeback/Showback Workflows¶

Administrators can create and customize chargeback/showback groups to automate the generation of chargeback reports that can then be exported out of the controller.

Info

Learn more about this new service here

Upstream Kubernetes for Bare Metal and VMs¶

Kubernetes v1.25¶

New upstream clusters can be provisioned based on Kubernetes v1.25.x. Existing upstream Kubernetes clusters managed by the controller can be upgraded in-place to Kubernetes v1.25.

Info

Learn more about support for k8s v1.25 here.

CNCF Conformance¶

Upstream Kubernetes clusters based on Kubernetes v1.25 (and prior Kubernetes versions) are fully CNCF conformant.

Usability Enhancements¶

Admins no longer have to download and save the node activation credential files. Instead, they are now provided with a simplified copy/paste experience.

Kubernetes Patch Releases¶

Existing upstream Kubernetes clusters can be updated in-place to the latest Kubernetes patches for supported versions (v1.24.x, v1.23.x, v1.22.x, v1.21.x).

Important

Customers are strongly encouraged to update their existing clusters at least quarterly so that authentication certificates do not expire and cause unnecessary disruption.

Info

Learn more about support for k8s patch releases here.

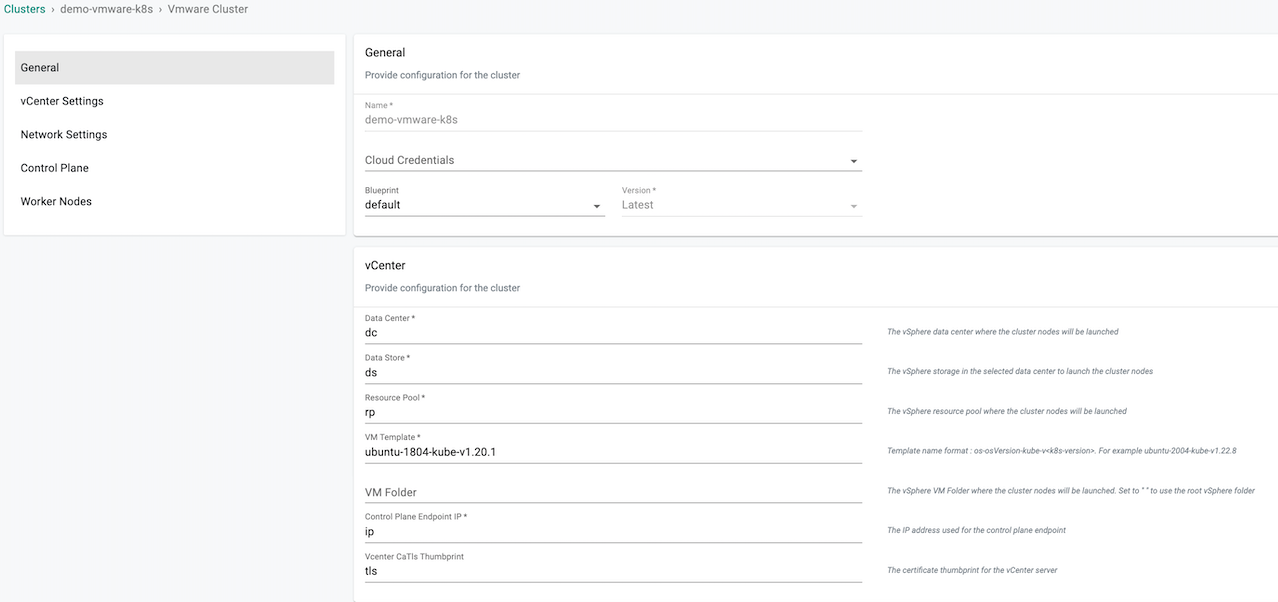

Upstream Kubernetes for vSphere¶

Auto Populated VM Templates¶

Users are now presented with a drop-down selector listing the supported VM templates, providing a much better user experience.

Cloud Credential Validation¶

Users can now validate the cloud credentials for VMware right from the web console.

Gateway Connectivity Status¶

Admins can now quickly identify and address network connectivity issues between the gateway and vCenter, and between the gateway and the controller. Connectivity status and health are now clearly displayed on the console.

Download Cluster Spec via CLI¶

Admins can now download the declarative cluster specification for the Kubernetes cluster programmatically using the RCTL CLI.

Info

Learn more about this here

Amazon EKS¶

Minimized IP Address¶

Many large enterprises have extremely limited IP address availability in their VPCs. The cluster provisioning process has been enhanced to minimize the use of IP addresses, especially when custom networking is used.

Proxy for Bottlerocket¶

AWS Bottlerocket based node groups can now be provisioned in environments where the use of an HTTP proxy is required.

Takeover Enhancements¶

The capability to take over brownfield EKS clusters has been enhanced. Existing IRSAs are now automatically detected and can be managed directly from the controller.

Info

Learn more about this here

Azure AKS¶

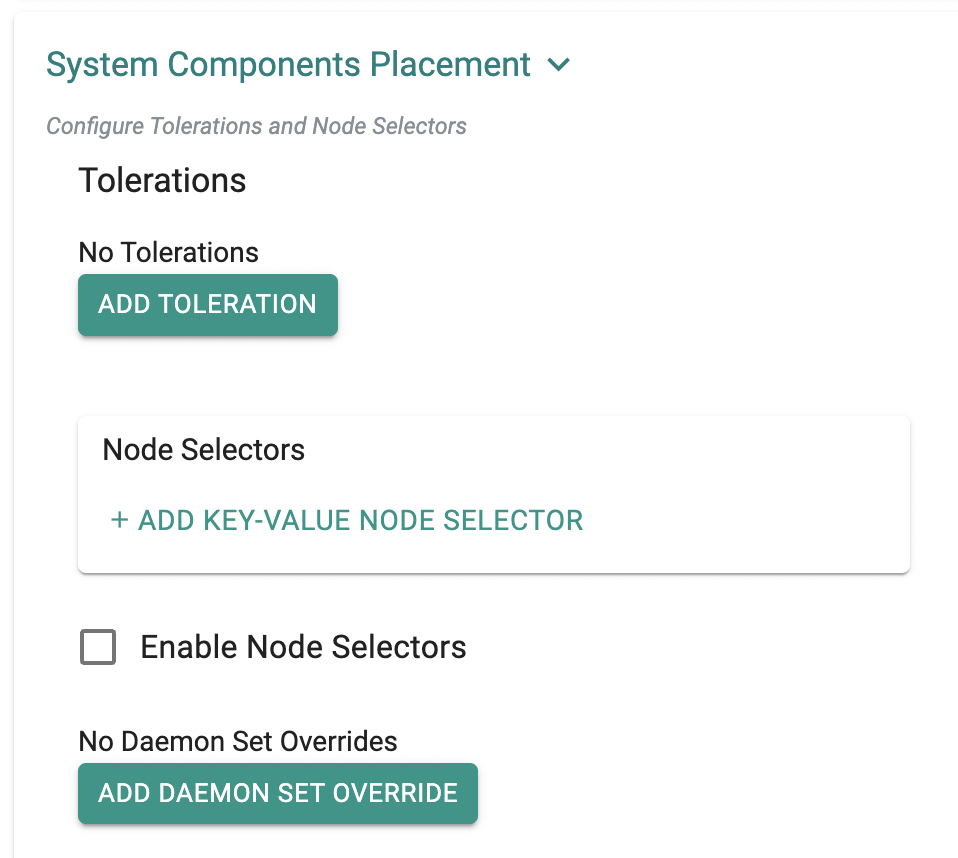

Taints/Tolerations for System Components¶

On managed AKS clusters, the system components can now be configured to be deployed on specific nodes based on taints and tolerations.

Info

Learn more about this here

Imported Clusters¶

Taints/Tolerations for System Components¶

On imported clusters, the system components can now be configured to be deployed on specific nodes based on taints and tolerations.

Info

Learn more about this here

Cluster Blueprints¶

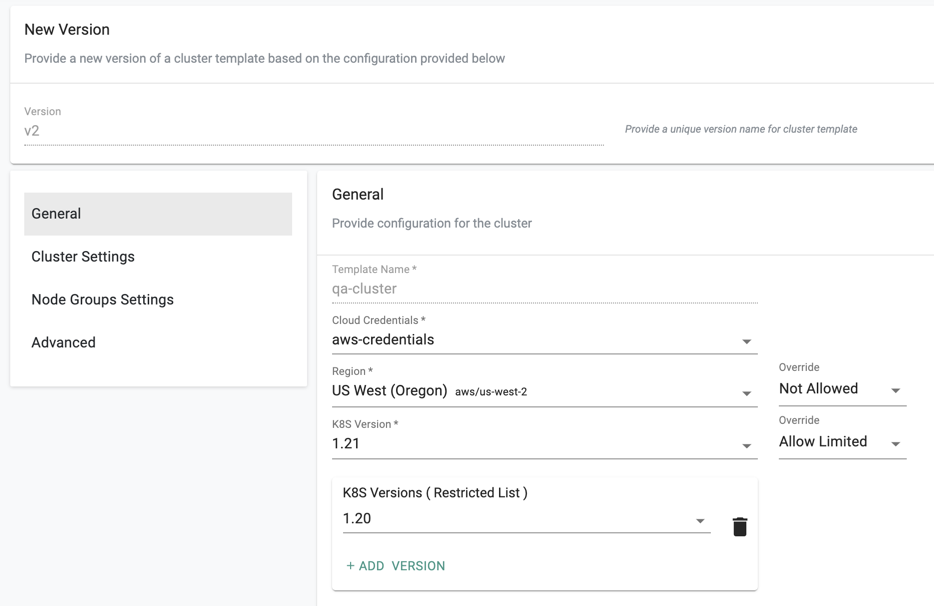

Usability¶

When creating a new version of a cluster blueprint, the details are now presented as logical sections in a vertical menu, making it easier for the user to configure.

Sorted Addons¶

The list of addons is now presented to the users as a sorted list making it easier to find items.

Info

Learn more about this here

RBAC¶

Project Switcher¶

A new auto-complete based project switcher is now available. Users with access to multiple projects in an Org can now find and switch to the desired project significantly faster. Just start typing the name of the desired project and auto-complete will list it for you.

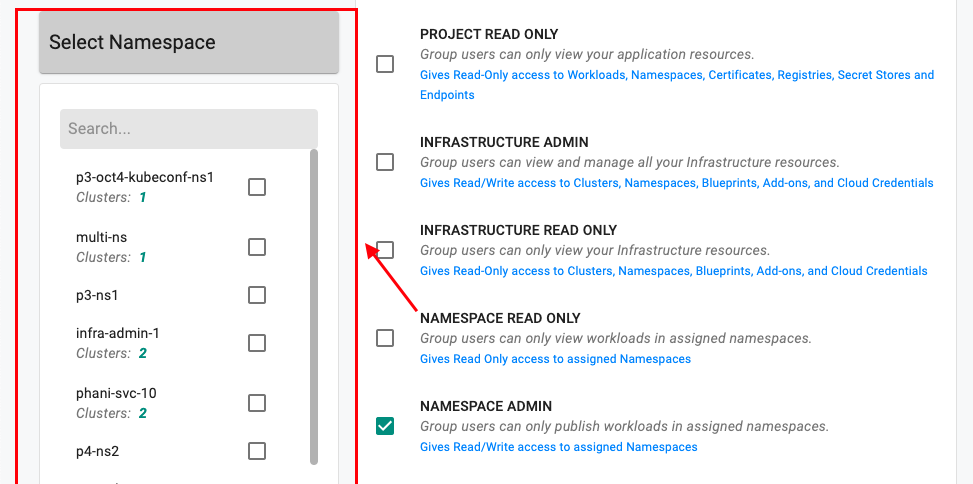

Read Only Workspace Role¶

A new "read only" role has been introduced for workspace admins.

Info

Learn more about this here

GitOps & Workloads¶

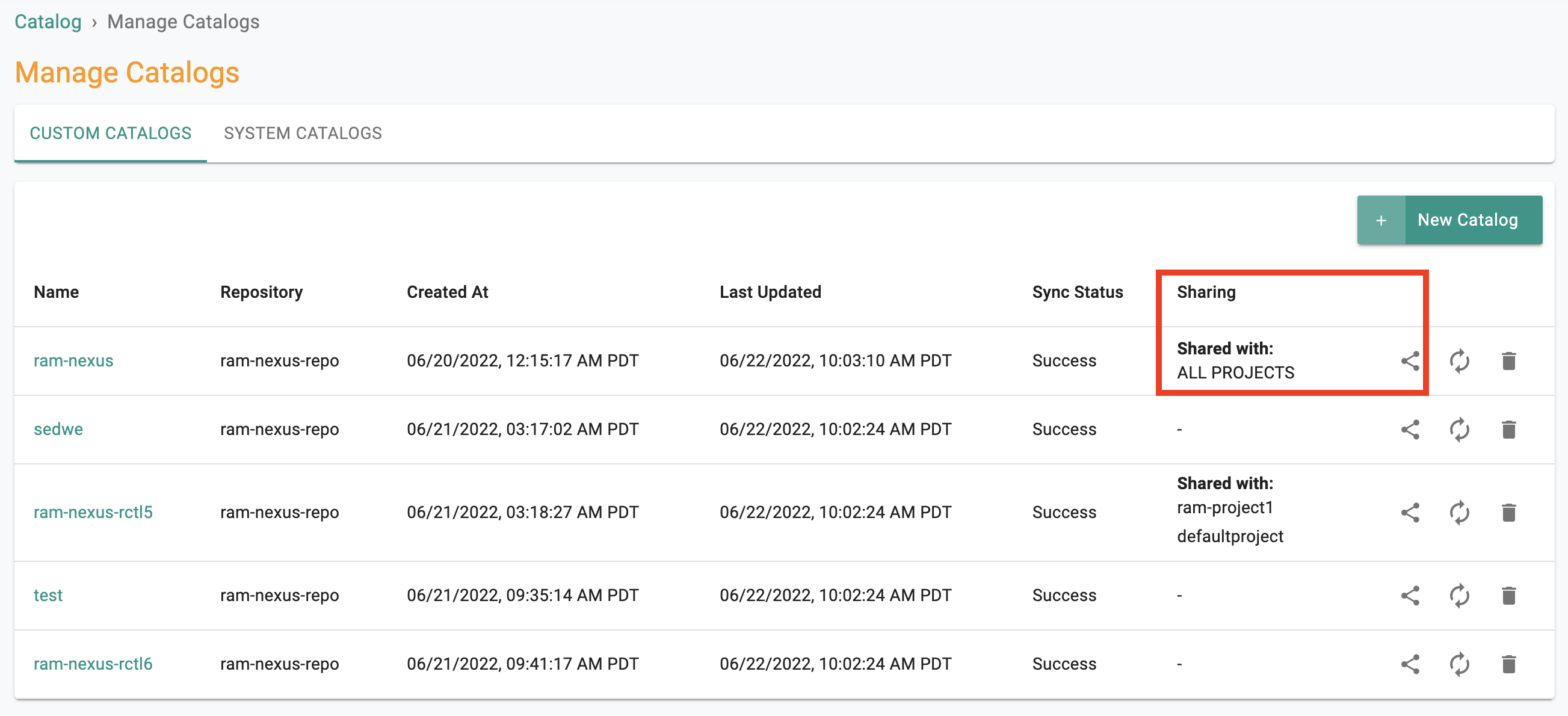

Sharing of Repositories¶

Git and Helm repositories can now be shared with All or Selected projects in an Org.

Sharing of Agents¶

Agents can now be shared with All or Selected projects in an Org.

Chart and Values from Different Git Repos¶

Workloads can now be configured to pull charts and values from different Git repositories.

Catalog¶

Additions to System Catalog¶

The System Catalog has been updated to add support for the following repositories.

| Category | Repository |
|---|---|
| Container Registry | Harbor |
| Container Registry | JFrog |
| Database | CockroachDB |
| Database | Cassandra |
| Database | MongoDB |
| Database | Postgres Operator |
| Monitoring | Dynatrace |
| Security | SonarQube |
| Security | Deepfence |
| Identity | Ping Identity |
| Machine Learning | Apache Airflow |

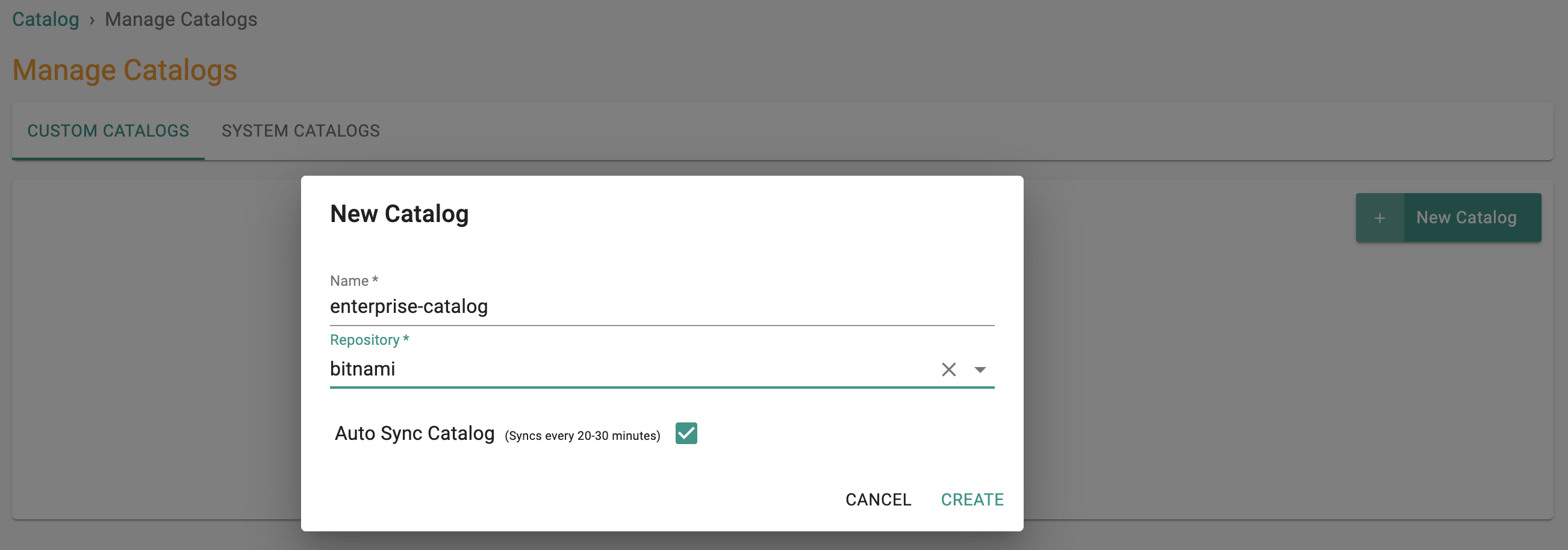

Deprecation (Bitnami Catalog)¶

The "default-bitnami" catalog has been deprecated and will be removed in the near future. Users should migrate to the equivalent "upstream" Helm charts in the default-helm catalog where available.

Terraform Provider¶

The Terraform provider has been updated and enhanced with support for additional IaC scenarios.

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-19363 | EKS nodegroup actions are not working in the Safari browser |
| RC-17541 | Can't update a blueprint when the cluster status is not CLUSTER PROVISION COMPLETE |
| RC-17544 | Cluster creation in the GUI aborts if Managed Node config is checked and the "Instance Profile" ARN is filled in |
| RC-19790 | UI: VMware validate gateway input is not enforced as mandatory for the credential add/update action |
| RC-19061 | System Sync: If the folder path is configured with "/" only, empty folders are created for each spec file |

v1.18.1¶

07 Oct, 2022

Important

Customers must update their cluster blueprints to the latest base blueprint version (v1.18) to use many of the new features described below.

Namespaces¶

Auto Reconciliation¶

Unmanaged Kubernetes namespaces created directly on clusters (using kubectl, etc.) can now be "automatically detected, reconciled with the controller and brought under centralized management". This ensures that there are no rogue, unmanaged, non-compliant namespaces on managed clusters.

Info

Learn more about this here

Cluster Sharing¶

The "cluster sharing" capability across projects has been enhanced in this release so that sharing can be performed declaratively using cluster specifications (i.e., rctl apply -f spec.yaml) and via IaC (i.e., the Terraform provider).

Info

Learn more about this here

RBAC¶

The Role Assignment page now shows the clusters that each namespace belongs to when a namespace admin role is selected.

Catalog¶

Additions to System Catalog¶

The System Catalog has been updated to add support for the following repositories.

| Category | Repository |
|---|---|
| Security | Araali |
| DevOps | GitLab |
| Backup | Cloudcasa |
| APM | Honeycomb |
| GitOps | FluxCD |

v1.18¶

30 September, 2022

Important

Customers must update their cluster blueprints to the latest base blueprint version (v1.18) to use many of the new features described below.

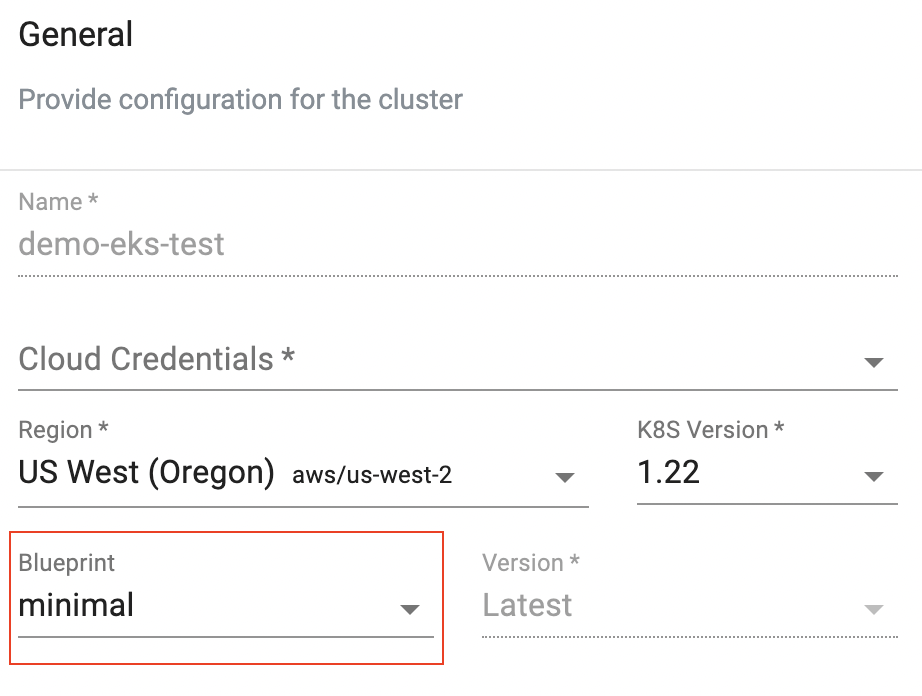

Blueprints¶

Minimal Blueprint as Default¶

The minimal blueprint is now automatically selected as the default blueprint for all new clusters (EKS, AKS, GKE and Upstream Kubernetes) provisioned or imported into the Controller.

Info

Learn more about this here

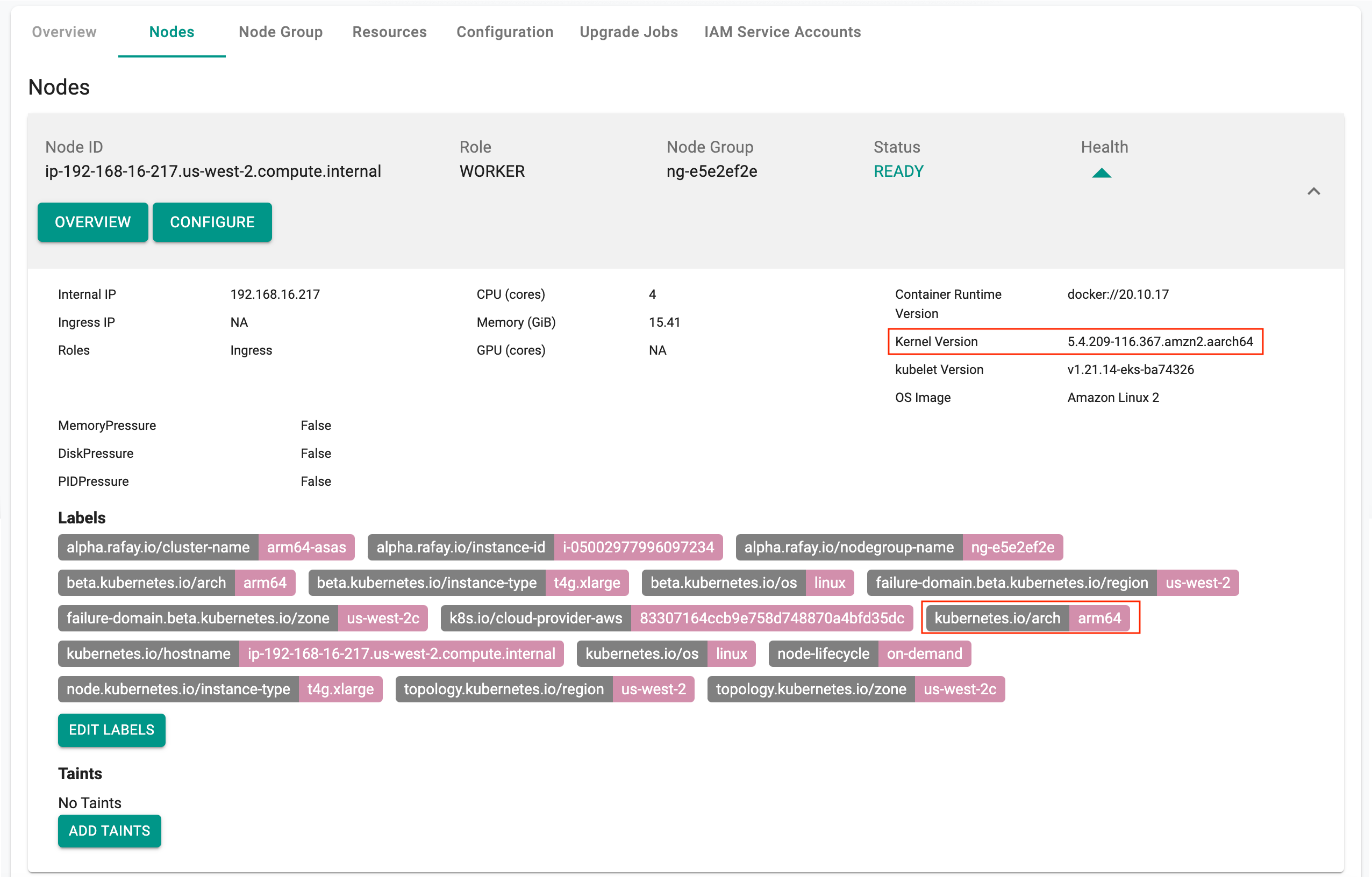

Multi Arch for Minimal Blueprint¶

The components deployed as part of the minimal blueprint are now cross-compiled. The minimal blueprint can now be deployed on both AMD64 and ARM64 architecture-based clusters.

Info

Learn more about this here

Dashboards¶

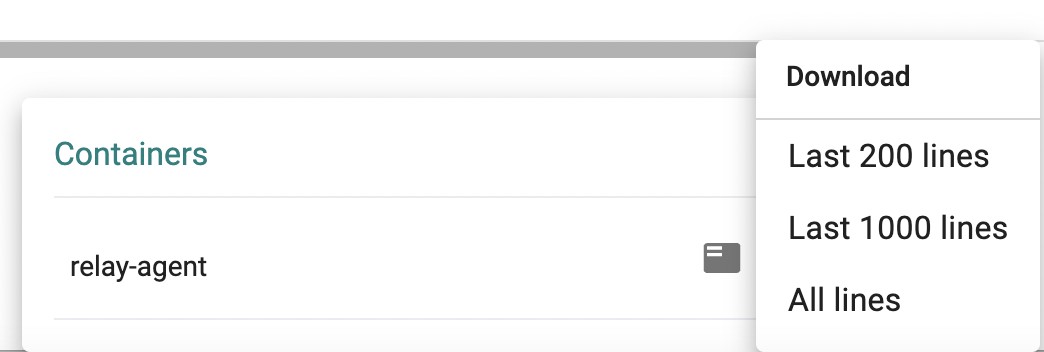

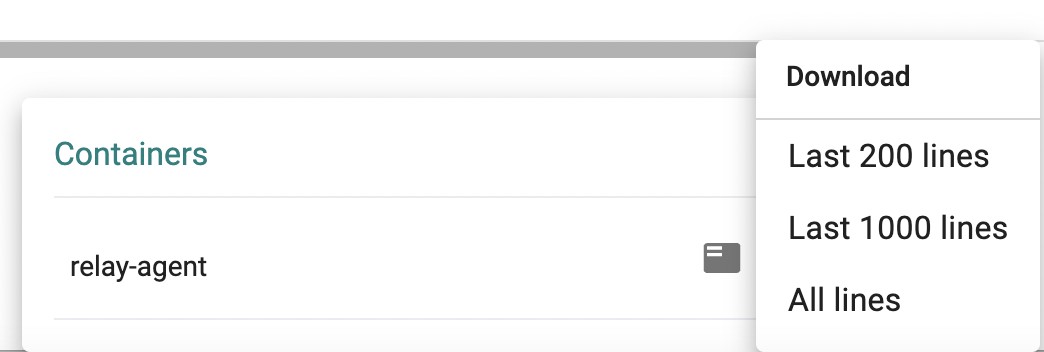

Download Container Logs¶

Users (developers and operations personnel) can now use the integrated Kubernetes resources dashboard to navigate to a specific pod and download the entire log for containers. This was previously limited to the last 200 lines.

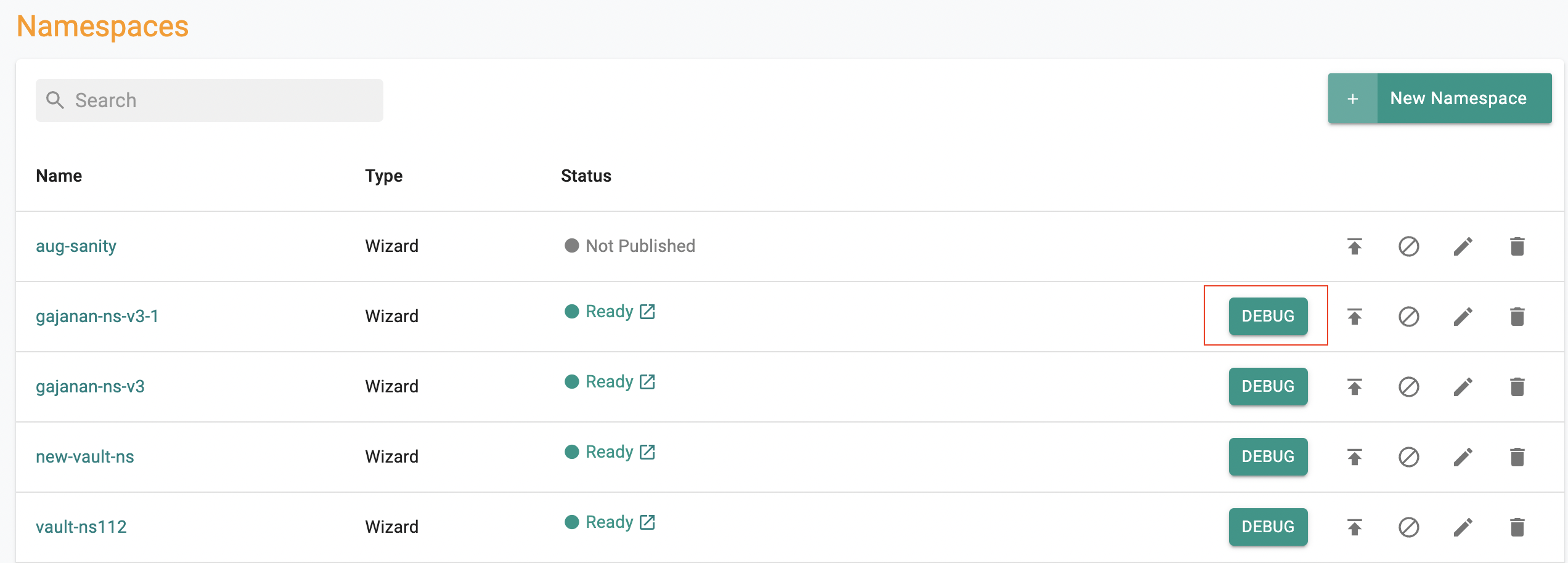

Namespace¶

Troubleshooting¶

Users now have the convenience of being able to troubleshoot and debug Kubernetes resources in a namespace directly from the namespace listing page.

Amazon EKS¶

Taints/Tolerations Day-2¶

Building on the Day-1 support for "taints/tolerations for system components" added in the prior release, users can now make Day-2 changes, for example, migrating the system components to a new or replacement node group.

Graviton 2 Only Clusters¶

With the minimal cluster blueprint, users can now provision and operate EKS clusters with only ARM64-based AWS Graviton worker nodes.

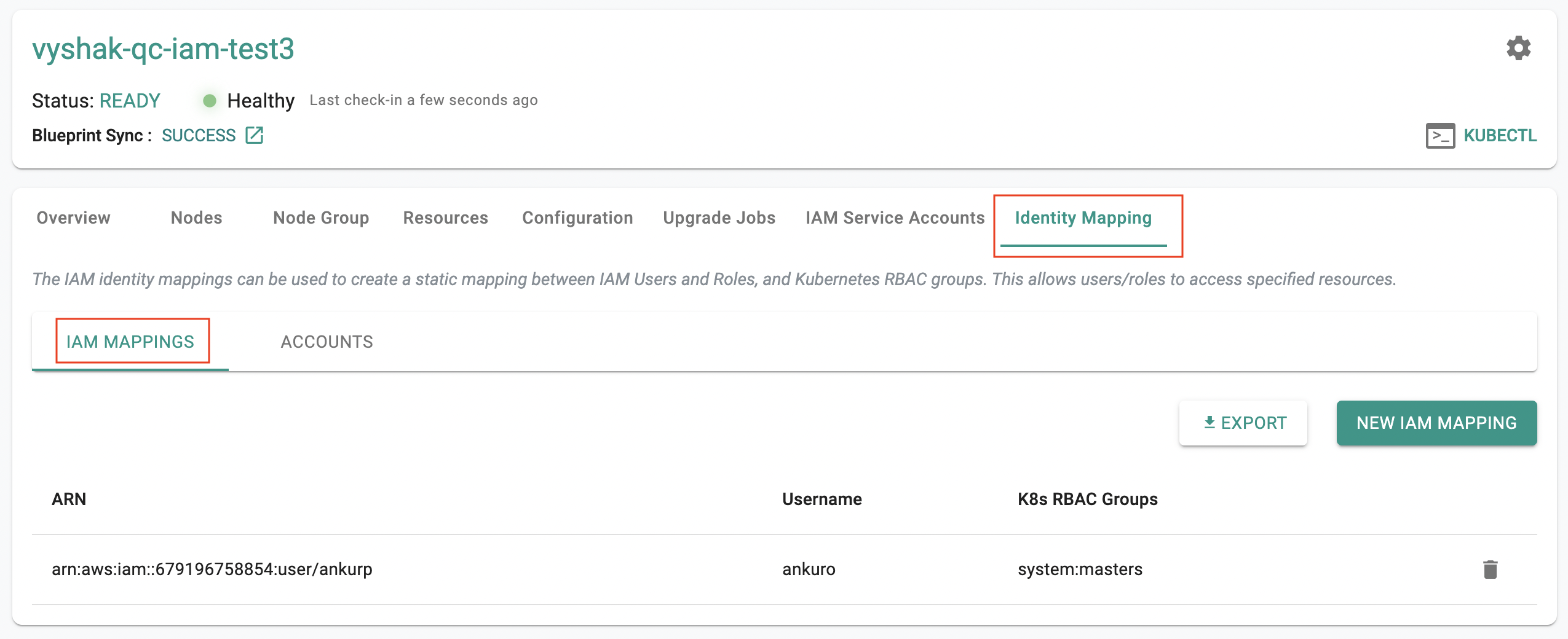

AWS Console Users¶

Users authorized to use the AWS Console can now be added to or removed from specific EKS clusters so that they can use the EKS dashboard to view k8s resources on those clusters.

Info

Learn more about this here

Secrets Manager Integration - GA¶

The beta program for the integration with AWS Secrets Manager is now complete and this feature is now Generally Available. The integration has also been enhanced to support the use of "annotations" to automate the injection of secrets for required resources.

Note

The annotations are agnostic to how applications are deployed, i.e., users can use the annotations for app deployments via kubectl, Helm or Workloads.

Info

Learn more about this here

Azure AKS¶

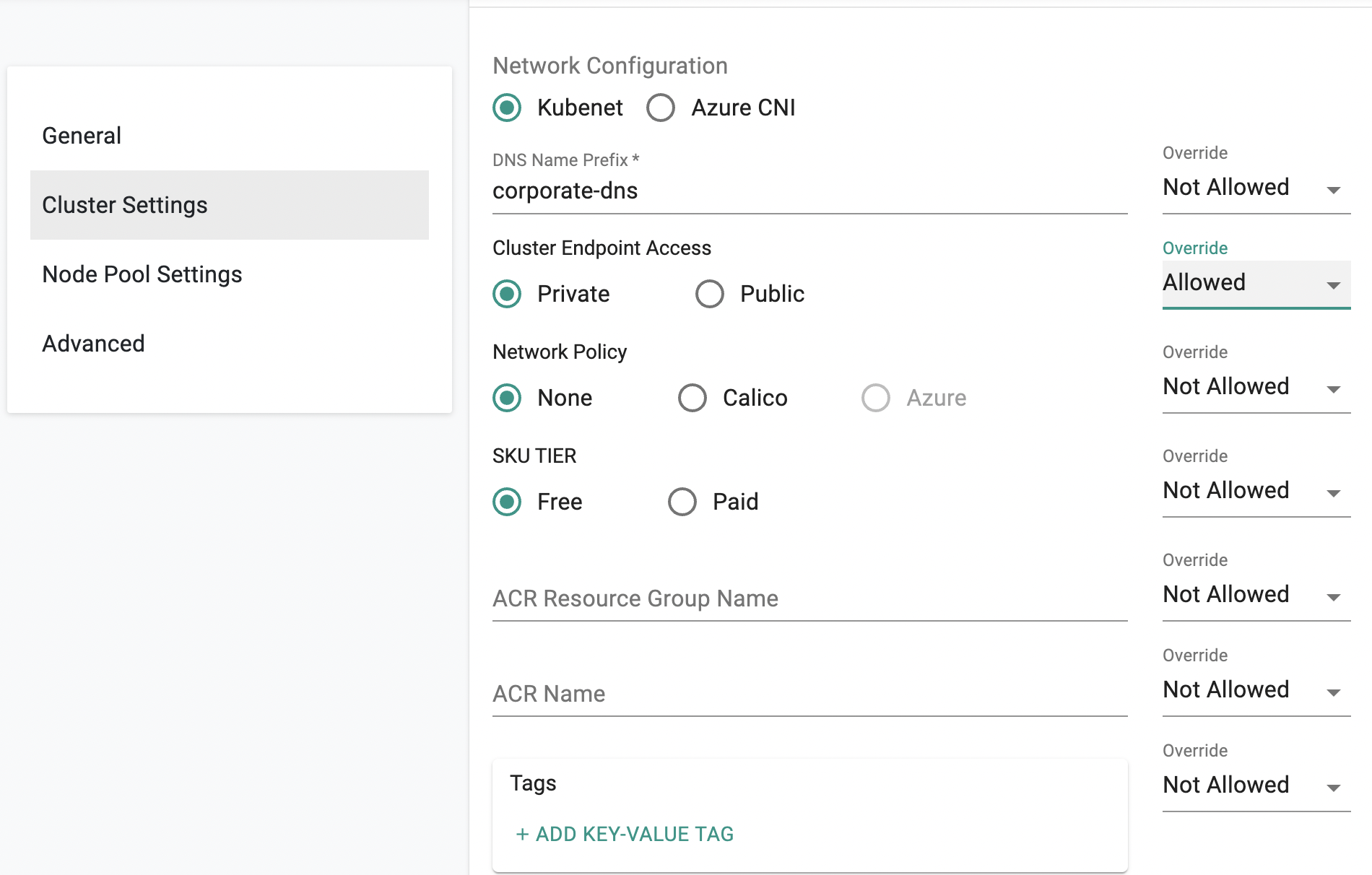

Cluster Templates¶

Cluster templates enable developer self-service for provisioning Azure AKS clusters without losing control over governance and policy. Infrastructure admins specify which aspects of infrastructure resource creation users are free to change and which are restricted, abstracting away the details of resource creation and exposing only a limited set of configuration options to the user.

Info

Learn more about this here

Upstream Kubernetes¶

Managed Storage¶

The OpenEBS based local provisioner (local storage) is now optional during provisioning of upstream Kubernetes clusters. It is available as a managed addon in either minimal or default-upstream base blueprints. Admins can add the OpenEBS based storage either on Day-1 or Day-2.

Info

Learn more about this here

Policy Management¶

Versioning for OPA Constraints¶

OPA Constraints can now be versioned.

Installation Profiles¶

Installation profiles are now supported as a set of parameters that can be used when setting up and installing the OPA Gatekeeper managed add-on.

Multiple Policies¶

It is now possible to attach multiple OPA policies in the same blueprint.

The specs for OPA Constraint, Installation Profile and Blueprint have been updated to reflect the enhancements listed above. Please refer to the CLI section for more information.

Info

Learn more about this here

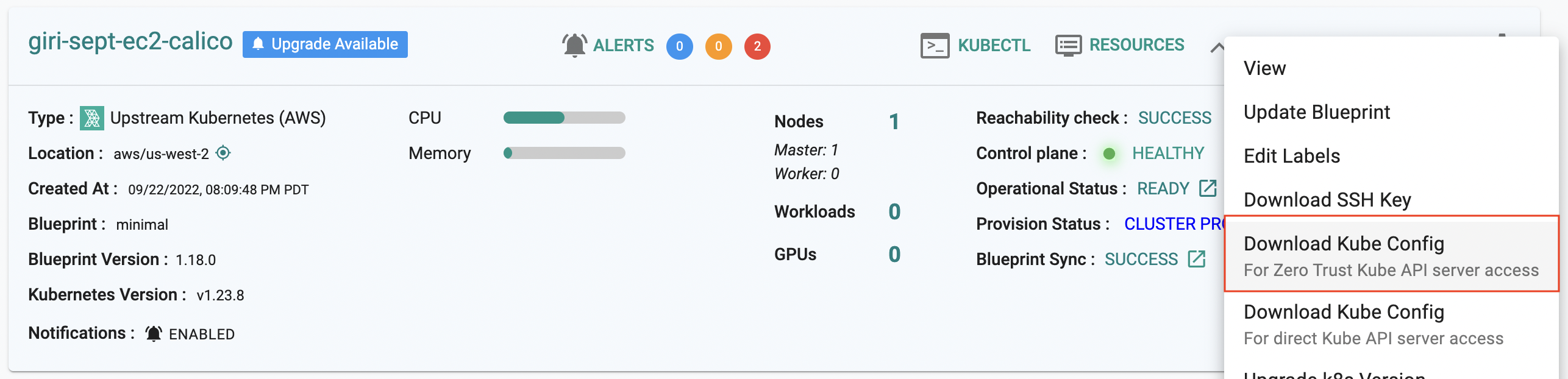

Zero Trust Kubectl¶

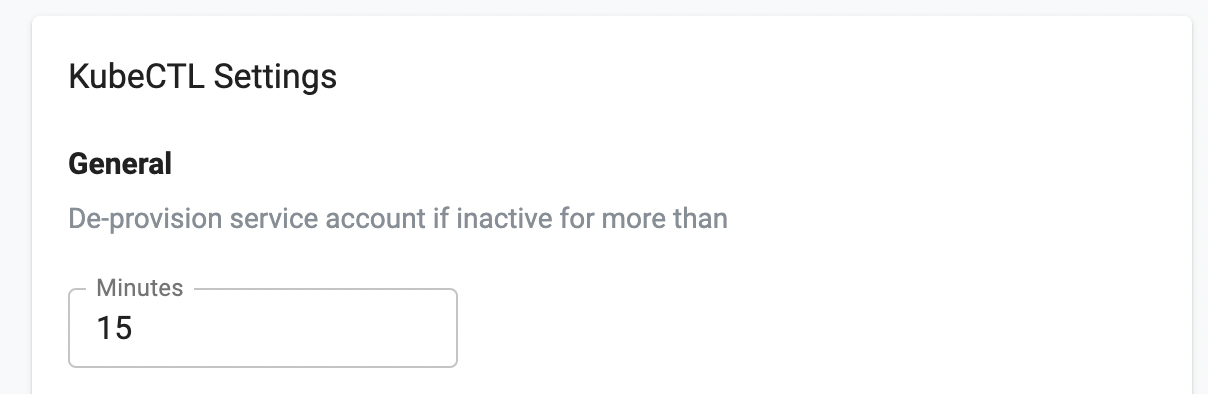

Configurable SA Lifetime¶

An Org wide setting is now available to specify the "idle time" for the ephemeral service account created on downstream, managed clusters. Once the ephemeral service account lifetime expires, it is immediately removed from the downstream clusters.

Single Cluster Kubeconfig¶

It is now possible for users to download the ZTKA kubeconfig file for only a single cluster (instead of the consolidated kubeconfig).

Info

Learn more about this here

GitOps & Workloads¶

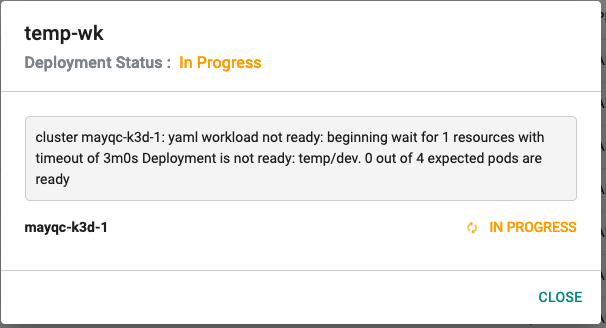

Better Error Messages¶

Users are now presented with more intuitive error messages, sooner, during workload publish operations.

Download Container Logs¶

Users (developers and operations personnel) can now use the integrated Kubernetes resources dashboard for their workloads, navigate to a specific pod and download the entire log for containers.

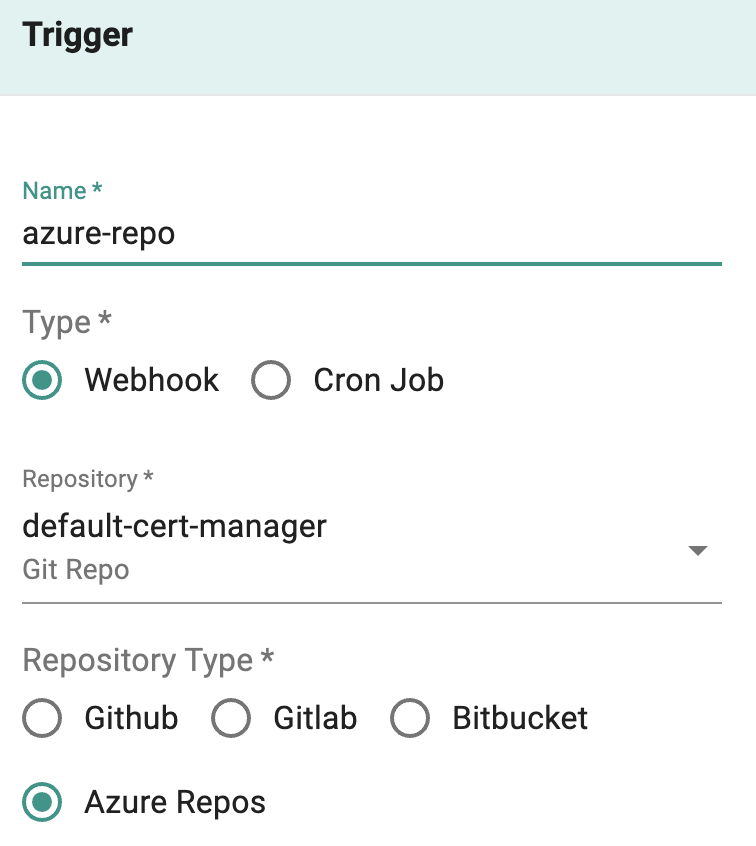

Webhook Triggers for Azure DevOps Repos¶

Users can now configure and receive webhook triggers from Azure DevOps Repositories for use with the integrated GitOps Pipelines.

Info

Learn more about this here

Network Policy¶

Support for AKS with Kubenet¶

The network policy service has been optimized to be compatible with the Kubenet CNI on Azure AKS clusters.

Visibility Dashboards by Role¶

Users with different roles now have contextual access to the required network policy dashboards seamlessly powered by RBAC.

Info

Learn more about this here

Catalog¶

Additions to System Catalog¶

The System Catalog has been updated to add support for the following repositories.

| Category | Repository |
|---|---|
| Policy | Kyverno |
| Secrets | External Secrets, Azure Secrets Store CSI Provider |
| Security | Snyk, Lacework, Calico |
| Scaling | Keda |
| Virtual Clusters | Loft.sh |
| Continuous Deployment | Armory |

Display Catalog Name¶

The source/name of the application is now displayed for every item. This makes it easier for the user to select the correct application chiclet without having to navigate one level further.

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-18163 | Connector pod OOM killed |
| RC-17365 | Audit logs not getting filtered properly |
| RC-17095 | Clusters created via the API do not have their state set |
| RC-16946 | Provide a drop-down option with both Ubuntu 18.04 and 20.04 when provisioning with qcow2 and upstream Kubernetes |
| RC-16871 | INFRA_READ_ONLY user can reset a cluster |
| RC-16617 | Delay in workload placement causing CircleCI pipelines to fail |
| RC-13967 | RCTL CLI config init connects to console.rafay.dev by default, causing issues with self-hosted controllers |
| RC-12179 | Search box is not working properly for audit log search |
| RC-9688 | Resources are displayed but not updated when the cluster is down |

v1.17¶

26 August, 2022

Important

Customers must update their cluster blueprints to the latest base blueprint version (v1.17) to use many of the new features described below.

Upstream Kubernetes¶

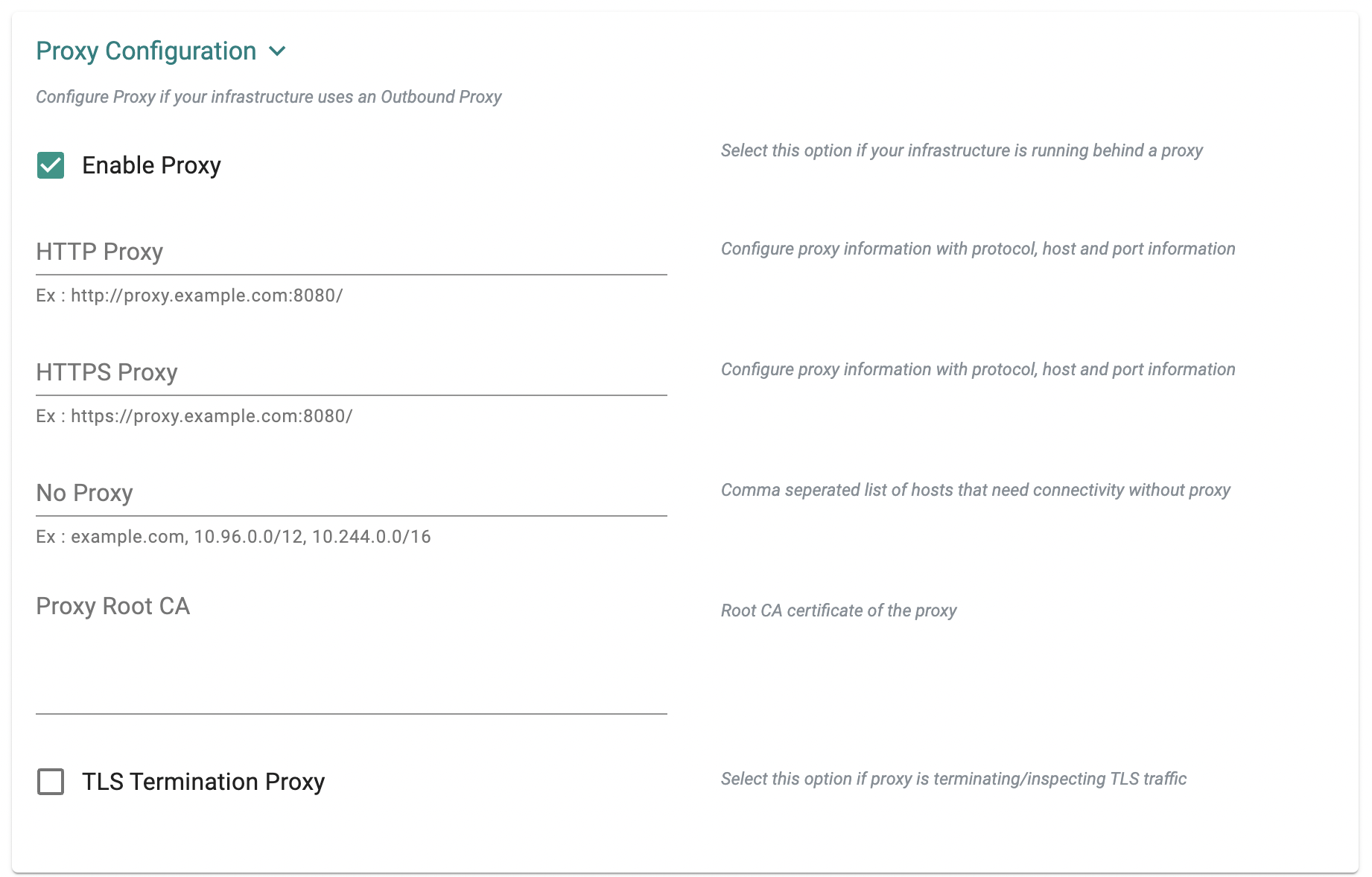

Proxy Support for vSphere Clusters¶

Users can now configure and use a network proxy for vSphere based Kubernetes clusters.

Info

Learn more about this here.

Infra GitOps for vSphere Clusters¶

Infra GitOps workflows are now supported for vSphere based upstream Kubernetes clusters. With this, users can use declarative cluster specs to perform both Day-1 (provisioning) and Day-2 (scaling, upgrades) operations on these clusters. The exact same RCTL CLI command can be used for both Day-1 and Day-2 operations.

Provision, Scale, Upgrade, Update Blueprint

```shell
rctl apply -f cluster.yaml
```

Amazon EKS¶

Taints & Tolerations for System Components¶

Administrators can configure taints and tolerations for the system components (i.e. k8s mgmt operator and managed services) so that the associated k8s resources are only provisioned to specific nodegroups.

Example EKS cluster specifications to enable this are available in our public Git repository for examples.

Info

Learn more about this here

Unified Cluster Spec YAML¶

Users can now use a "unified declarative cluster specification" both for Day-1 operations (i.e. provision EKS cluster) and Day-2 operations (i.e. scale, upgrade etc.).

Note

The legacy specification based on two YAML documents will continue to work in parallel until its deprecation is announced.

Custom AMI for Bottlerocket and Windows¶

Custom AMIs are now supported for Bottlerocket and Windows.

AWS Secrets Manager Integration (Beta)¶

The turnkey integration (beta) for Amazon EKS clusters with AWS Secrets Manager has been enhanced based on customer feedback.

- Admins now have an option to configure rotation interval for secrets

- Configuration wizard has been enhanced to add support for SecretProviderClass resource

- Support for annotating the service account with a role ARN, for scenarios where the application owner needs to create the service account based on an already created IRSA as part of the application deployment

Info

Learn more about the turnkey integration with AWS Secrets Manager here

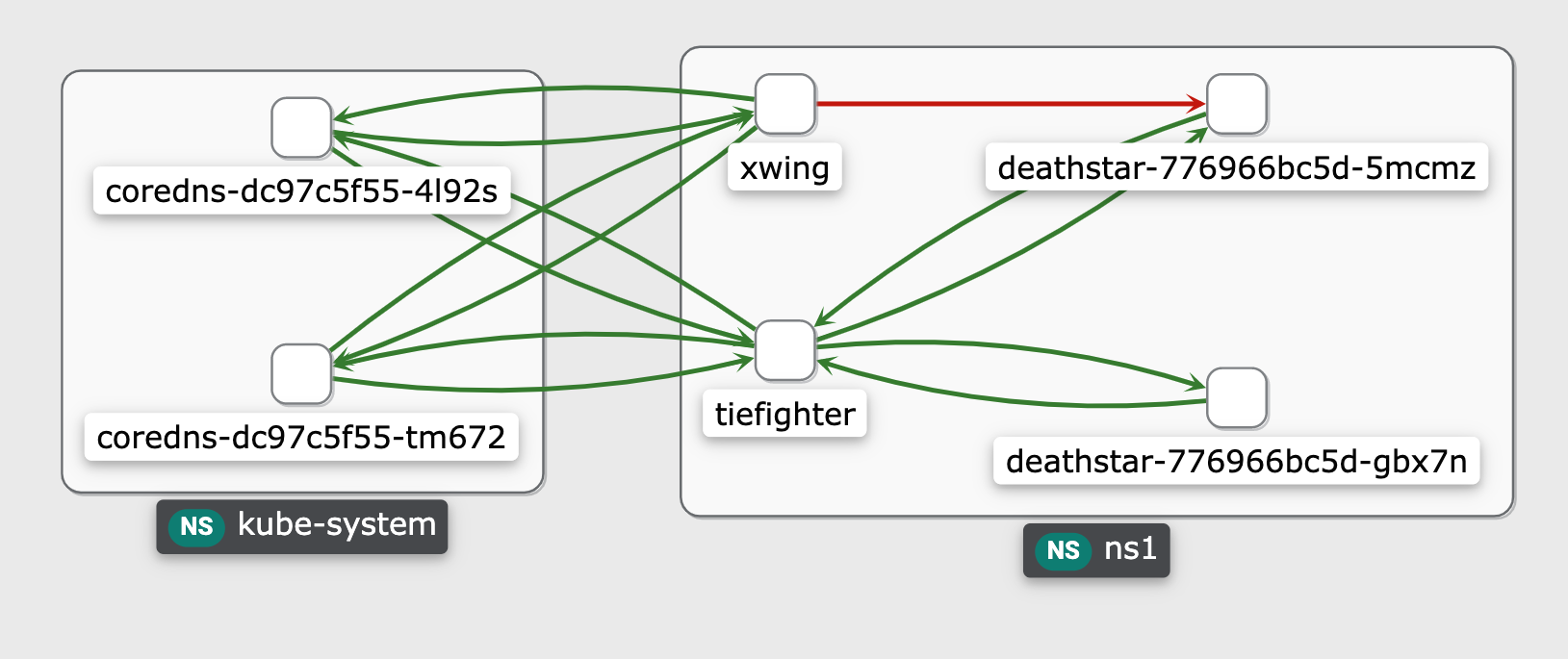

Network Policy Service¶

A new service focused on enabling "network policies" across a fleet of clusters is now available in the platform for all customers. Once enabled, Cilium is automatically installed and configured on the target clusters as a chained CNI using cluster blueprints.

Users have visibility into network flows for their clusters and applications directly in the web console (i.e. traffic into cluster, traffic between pods, traffic between namespaces, traffic out of cluster). Administrators can configure and enforce declarative network policies scoped to a cluster or a namespace.

Info

Learn more about this here.

Blueprints¶

Dashboard for Minimal Blueprint¶

The integrated Kubernetes resources dashboard no longer requires the Visibility & Monitoring addon. Users can now remotely and securely access the integrated k8s resources dashboard even if their clusters are configured to use the minimal blueprint or a custom blueprint with the visibility and monitoring addon disabled.

Golden Blueprint¶

Organizations can now create and manage a customized organization baseline blueprint (a.k.a. golden blueprint). Custom cluster blueprints can then be created on top of the golden blueprint, ensuring that the custom cluster blueprints used by downstream business units and teams are always guaranteed to include the required addons from the golden blueprint.

Info

Learn more about this here.
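The release note does not describe how conflicts between a golden blueprint and a custom blueprint are resolved. The Go sketch below shows one plausible model, assuming golden addons are always kept and take precedence over a same-named custom addon. `Addon` and `composeAddons` are hypothetical and do not reflect the controller's actual implementation.

```go
package main

import "fmt"

// Addon is a hypothetical, simplified model of a blueprint addon.
type Addon struct {
	Name    string
	Version string
}

// composeAddons builds the addon list for a custom blueprint layered on a
// golden blueprint. Every golden addon is always included with its golden
// version; a custom addon is appended only if it does not reuse a golden
// addon's name, so a team cannot drop or replace a required addon.
func composeAddons(golden, custom []Addon) []Addon {
	out := make([]Addon, 0, len(golden)+len(custom))
	required := make(map[string]bool, len(golden))
	for _, a := range golden {
		out = append(out, a)
		required[a.Name] = true
	}
	for _, a := range custom {
		if required[a.Name] {
			continue // the golden blueprint's version wins
		}
		out = append(out, a)
	}
	return out
}

func main() {
	golden := []Addon{{Name: "cert-manager", Version: "v1"}, {Name: "opa-gatekeeper", Version: "v1"}}
	custom := []Addon{{Name: "cert-manager", Version: "v2"}, {Name: "ingress-nginx", Version: "v1"}}
	for _, a := range composeAddons(golden, custom) {
		fmt.Println(a.Name, a.Version)
	}
}
```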

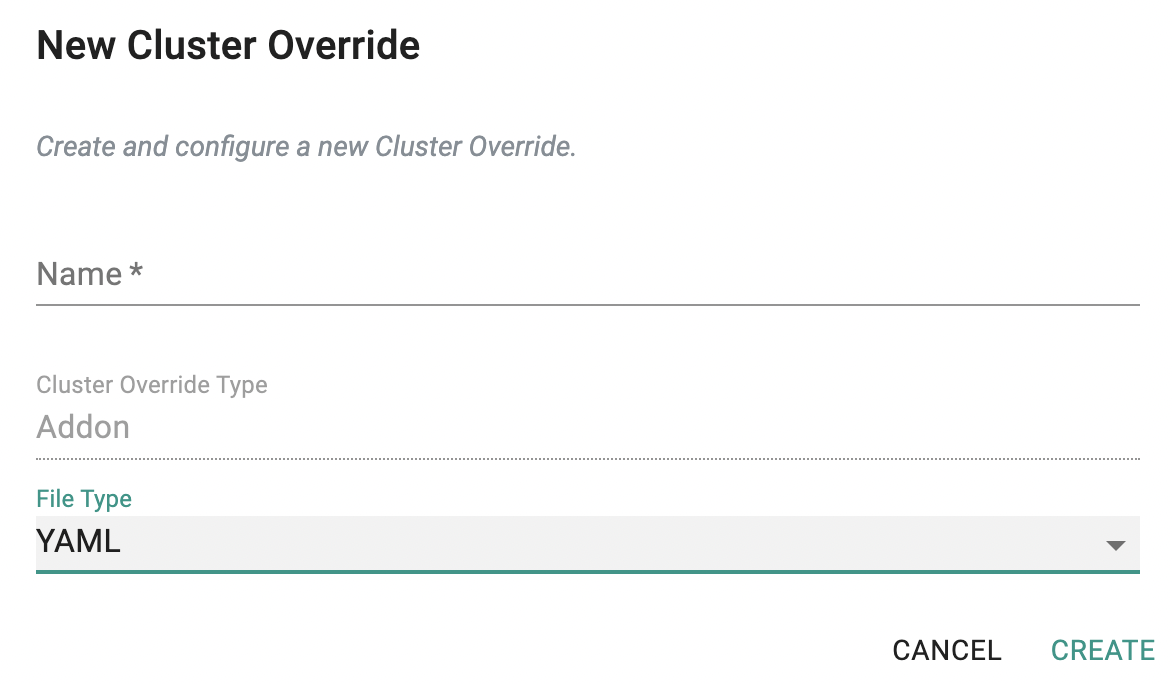

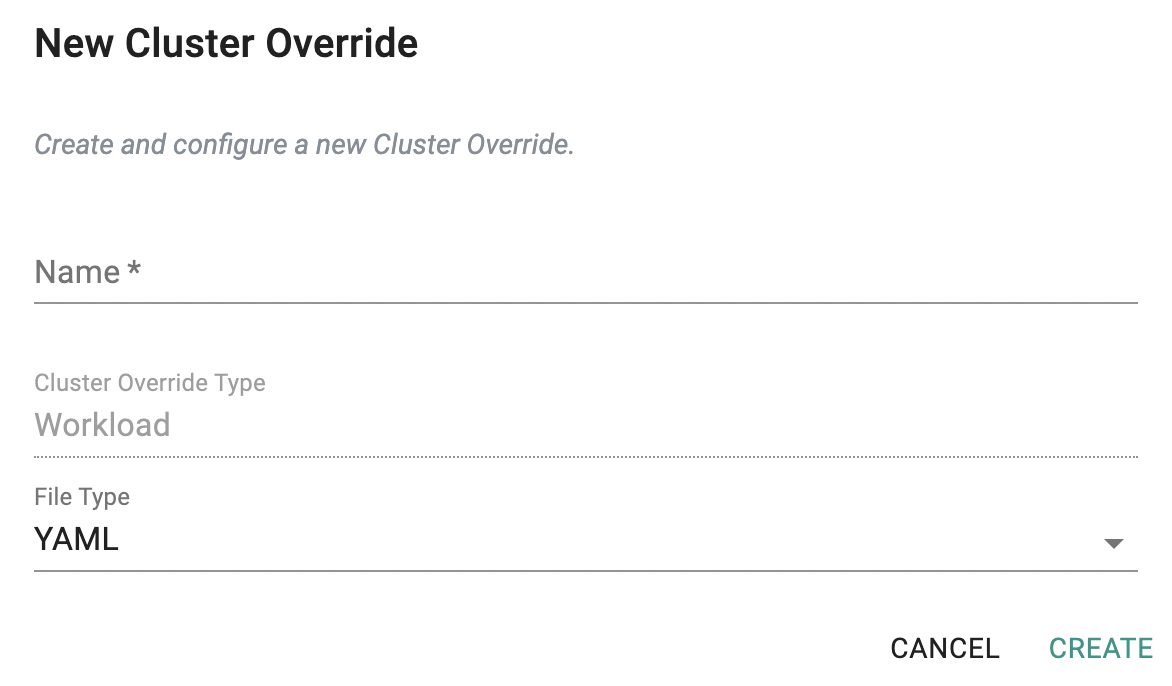

YAML Overrides for Addons¶

Users can now configure overrides for YAML based addons to dynamically customize blueprint deployments on different clusters.

Workloads¶

YAML Overrides¶

Users can now configure overrides for YAML based workloads to dynamically customize workload deployments on different clusters.

RCTL CLI Enhancements¶

Templating¶

Users of the declarative "rctl apply -f file.yaml" command can now use templates to dynamically generate spec files. This helps users avoid developing and maintaining complex, fragile scripts to parameterize input across hundreds of YAML files.

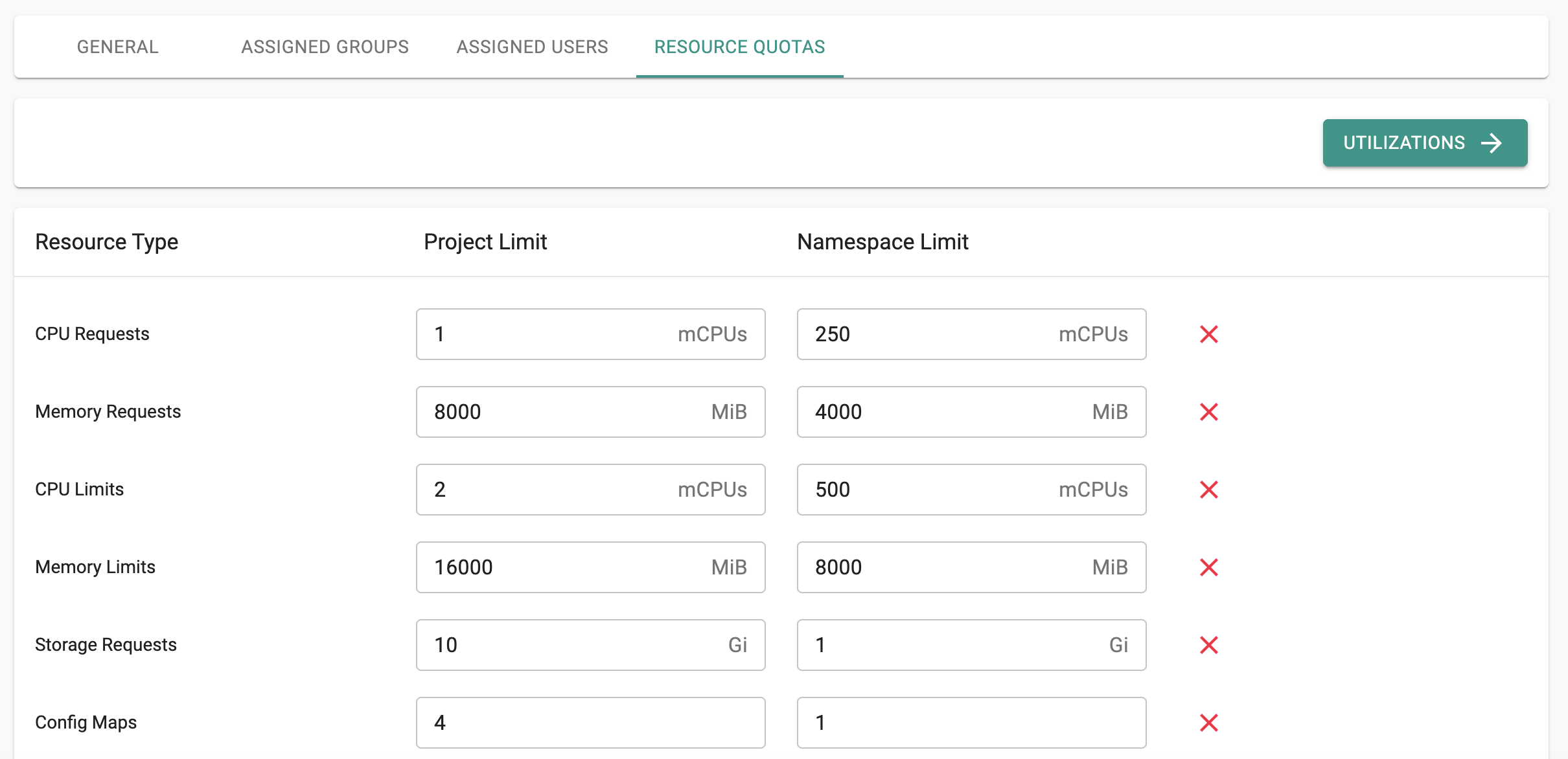

Here is an illustrative example of a template for a "project".

```yaml
# Generated: {{now.UTC.Format "2006-01-02T15:04:05UTC"}}
# With: {{command_line}}
{{ $p := . }}{{ range $count := IntSlice .StartIndex .Count }}
apiVersion: system.k8smgmt.io/v3
kind: Project
metadata:
  name: {{ $p.Name }}-{{ $count }}
spec:
  clusterResourceQuota:
    configMaps: "{{ $p.ClusterQuota.ConfigMaps }}"
    cpuLimits: {{ $p.ClusterQuota.CpuLimits }}
    cpuRequests: {{ $p.ClusterQuota.CpuRequests }}
    memoryLimits: {{ $p.ClusterQuota.MemoryLimits }}
    memoryRequests: {{ $p.ClusterQuota.MemoryRequests }}
    storageRequests: {{ $p.ClusterQuota.StorageRequests }}
  default: false
  defaultClusterNamespaceQuota:
    configMaps: "{{ $p.NamespaceQuota.ConfigMaps }}"
    cpuLimits: {{ $p.NamespaceQuota.CpuLimits }}
    cpuRequests: {{ $p.NamespaceQuota.CpuRequests }}
    memoryLimits: {{ $p.NamespaceQuota.MemoryLimits }}
    memoryRequests: {{ $p.NamespaceQuota.MemoryRequests }}
    storageRequests: "{{ $p.NamespaceQuota.StorageRequests }}"
---
{{end}}
```

Info

Learn more about this here.

Declarative Models¶

All supported resources can now be managed declaratively (i.e. rctl apply -f file.yaml). The controller automatically determines the changes relative to the current state and brings the resources to the specified state.

Note that customers can continue using the legacy imperative commands in addition to the recommended declarative approach.

```shell
rctl apply -f everything.yaml
```
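The Go sketch below illustrates the reconciliation step described above. It compares desired and current resources and plans the creates and updates needed to converge. This is a generic model, not the controller's implementation. Representing each resource as a name mapped to a serialized spec is an assumption. Deleting resources that are missing from the file is intentionally left out.

```go
package main

import (
	"fmt"
	"sort"
)

// Action is one change needed to move a resource to its desired state.
type Action struct {
	Op   string // "create" or "update"
	Name string
}

// plan compares desired and current resources (name -> serialized spec) and
// returns the creates and updates needed, sorted by name. Resources that
// already match are left alone; deletion/pruning is not modeled.
func plan(desired, current map[string]string) []Action {
	var actions []Action
	for name, spec := range desired {
		cur, exists := current[name]
		switch {
		case !exists:
			actions = append(actions, Action{Op: "create", Name: name})
		case cur != spec:
			actions = append(actions, Action{Op: "update", Name: name})
		}
	}
	sort.Slice(actions, func(i, j int) bool { return actions[i].Name < actions[j].Name })
	return actions
}

func main() {
	desired := map[string]string{"project-a": "quota=v2", "project-b": "quota=v1"}
	current := map[string]string{"project-a": "quota=v1"}
	for _, a := range plan(desired, current) {
		fmt.Println(a.Op, a.Name)
	}
}
```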

GitOps¶

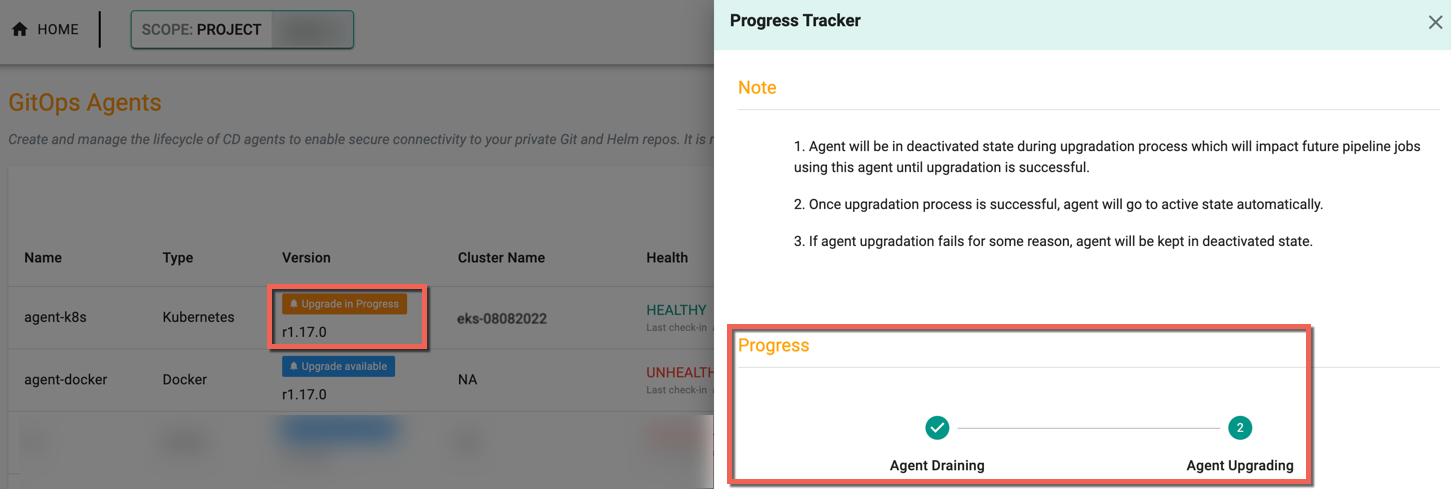

In-place Upgrades of Agent¶

Administrators can now perform one-click, in-place upgrades of GitOps agents running version 1.17 or later.

Info

Learn more about this here.

RBAC¶

Workspace Admin role¶

Workspace Admins can now create pipelines for deploying applications.

Catalog¶

Additions to System Catalog¶

The System Catalog has been updated to add support for the following repositories.

| Category | Description |
|---|---|
| Storage | Nutanix |
| Security | Sysdig |

Info

Learn more about the System Catalog here.

Terraform Provider¶

The Terraform provider has been updated with new resources, and existing resources have been enhanced. Click here for more details.

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-17638 | EKS: Cluster creation fails and does not use the private VPC defined in the template |
| RC-17353 | EKS: Windows nodes with custom AMIs fail to join the cluster |
| RC-10851 | Infra GitOps: Custom CNI configuration missing in the unified YAML created by System Sync |
| RC-17519 | UI: Kubeconfig for API+Console users not available for download from the console |

v1.16¶

29 July, 2022

Important

Customers must upgrade to the latest version of the base blueprint (v1.16) with their cluster blueprints to be able to use many of the new features described below.

Upstream Kubernetes¶

Lifecycle management on VMware vSphere¶

Users can now provision clusters on vSphere natively with Cluster API (CAPI), while the platform's core capabilities remain consistent with those available for other cluster types.

Info

Learn more about lifecycle management for VMware environments here.

Windows Worker nodes¶

Calico CNI support is now available for Windows worker nodes. Support for Consul has also been added so that Windows worker nodes are aware of master node expansion.

Storage¶

Encryption support is now available for Rook-Ceph based storage. An option has also been provided to clean up disks consumed by Ceph.

Amazon EKS¶

Lifecycle management for brownfield EKS clusters¶

Users can now manage both Rafay-provisioned and imported (brownfield) EKS clusters using the same APIs, RCTL apply, GitOps System Sync and Terraform scripts. The platform has been enhanced to enable the following capabilities for imported/brownfield clusters:

- Retrieval of information for the control plane and managed node groups

- Infrastructure expansion and scaling

- Post-provisioning operations such as upgrades

Info

Learn more about lifecycle management enhancements for imported EKS clusters here.

Custom AMIs¶

Users who manage node groups with the cluster specification file can now apply custom AMIs to node groups. This can be done via RCTL apply, Terraform and GitOps System Sync.

Resource tags for clusters and node groups¶

Users can now update cluster and node group level tags after provisioning.

IRSA¶

Users can now create service accounts using a cluster specification file, both during and after cluster provisioning. This can be done via RCTL apply, Terraform and GitOps.

Infra GitOps¶

Secrets for Terraform based Infrastructure Provisioner¶

Infrastructure Provisioners require secrets to run the Terraform scripts. A new first-class object called Pipeline Secret Groups is now available within the platform to make it simpler for users to securely attach secrets to the Infrastructure Provisioner.

Info

Learn more about Pipeline secret groups here.

RBAC and SSO¶

Using IDP with multiple orgs (tenants)¶

SSO integration within the platform has now been enhanced to allow customers to register and use the same IDP across multiple orgs (tenants). This allows customers to own and operate multiple orgs (tenants) as necessary while ensuring that they are able to satisfy their internal compliance requirements.

Catalog¶

Additions to System Catalog¶

The System Catalog has been updated to add support for the following:

| Category | Description |
|---|---|
| Ingress | Upstream NGINX, Citrix, Kong |
| Amazon EKS | Amazon EKS Charts |
| Secrets | HashiCorp |
| Cluster Scaling | K8s Cluster Autoscaler |
| Database | InfluxDB, Elasticsearch |
| Storage | MinIO, Ondat |
| Service Mesh | Tetrate |
| Monitoring | New Relic, Datadog, Splunk Connect, Grafana |
| Network Policy | Cilium |

Dashboard¶

Create k8s Resources¶

Administrators are now provided with intuitive workflows to quickly and efficiently create CronJob and Job resources directly from the inline Kubernetes resources dashboard.

Info

Learn more about how developers can create k8s resources here.

Audit Logs¶

Audit logs have been enhanced across the platform to provide additional contextual information to users.

Terraform Provider¶

The Terraform provider has been updated with new resources, and existing resources have been enhanced. Click here for more details.

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-12179 | UI: Preflight validation failures when upgrading EKS clusters are not displayed within the console in certain scenarios |
| RC-9642 | UI: Delete action on a cluster that's already in the process of being deleted displays an incorrect error message |
| RC-10851 | Nodegroup upgrade is not possible in certain scenarios when the control plane is upgraded outside of the platform |

Known Issues¶

Known issues are typically ephemeral and should be resolved in an upcoming patch.

| # | Description |
|---|---|
| 1 | UI: Deletion of resources via Cluster Resources dashboard for restricted namespaces (Ex: rafay-system) is not prevented for users with ClusterRoleBinding permissions (Ex: Org Admin, Project Admin, Infrastructure Admin) |

v1.15¶

24 Jun, 2022

Important

Customers must upgrade to the latest version of the base blueprint (v1.15) with their cluster blueprints to be able to use many of the new features described below.

Upstream Kubernetes¶

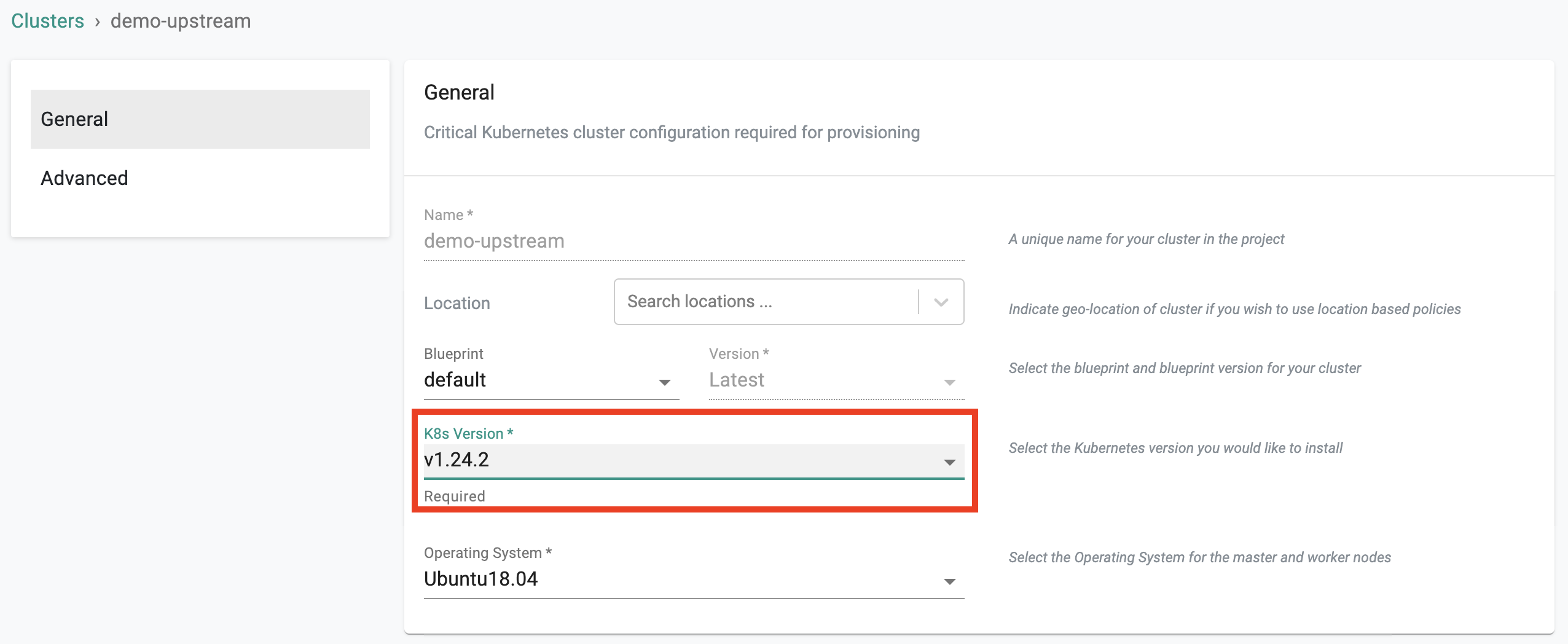

Kubernetes v1.24¶

New upstream clusters can be provisioned based on Kubernetes v1.24.x. Existing upstream Kubernetes clusters managed by the controller can be upgraded in-place to Kubernetes v1.24.2.

Info

Learn more about support for k8s v1.24 here.

CNCF Conformance¶

Upstream Kubernetes clusters based on Kubernetes v1.24 (and prior Kubernetes versions) are fully CNCF conformant.

Kubernetes Patch Releases¶

Existing upstream Kubernetes clusters managed by the controller can be updated in-place to the latest Kubernetes patches for supported versions (v1.23.8, v1.22.11, v1.21.14).

Info

Learn more about support for k8s patch releases here.

Reset Cluster¶

For bare metal and VM-based clusters (especially single-node clusters), administrators can reset the cluster's state. This workflow allows administrators to provision Kubernetes from scratch without having to delete and recreate the cluster in their project.

Info

Learn more about resetting cluster state here.

Managed Storage in HA Mode¶

For bare metal and VM-based clusters, administrators can now deploy and operate Rook/Ceph based managed storage in a Highly Available (HA) configuration.

Amazon EKS¶

Cluster Template Role¶

A new "cluster template user" role is now available that allows operations teams to enable a true self-service experience for internal users without sacrificing governance and control. Users with this role can only provision EKS clusters based on approved cluster templates in their project.

Info

Learn more about the new "cluster template user" role here.

Infra GitOps - Custom AMI Upgrades¶

The existing RCTL CLI based Infra GitOps capability has been enhanced to add support for custom AMI upgrades. With this, administrators can

- Replace the AMI ID in their EKS Cluster spec files with the new AMI ID and

- Perform "rctl apply -f clusterspec.yaml".

The controller will detect the user's intention and automatically upgrade the cluster's node groups to the new AMI ID.

Info

Learn more about using RCTL based Infra GitOps for Custom AMI Upgrades here.

Infra GitOps - k8s Upgrades for Specific Node Groups¶

The existing RCTL CLI based Infra GitOps capability has been enhanced to add support for performing k8s upgrades for specific node groups. With this, administrators can

- Update the k8s version for a specific node group in their EKS cluster spec files with the new version

- Perform "rctl apply -f clusterspec.yaml".

The controller will detect the user's intention and automatically upgrade the node group to the specified Kubernetes version.

Info

Learn more about using RCTL CLI based Infra GitOps to upgrade the k8s version of a specific node group here.
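Both Infra GitOps enhancements above depend on the controller noticing what changed in the spec file. The Go sketch below shows one minimal way to detect those changes. It assumes a hypothetical `NodeGroup` type that holds only a name, an AMI ID and a Kubernetes version. It is not the controller's code, and it only reports changes; it performs no upgrades.

```go
package main

import "fmt"

// NodeGroup is a hypothetical, minimal view of a node group in a cluster spec.
type NodeGroup struct {
	Name    string
	AMI     string
	Version string
}

// Upgrade describes one detected change for an existing node group.
type Upgrade struct {
	NodeGroup string
	Field     string // "ami" or "version"
	From, To  string
}

// detectUpgrades compares the desired node groups (from the spec file) with
// the current ones and reports AMI ID or Kubernetes version changes.
// Node groups that exist only in the spec are skipped in this sketch.
func detectUpgrades(desired, current []NodeGroup) []Upgrade {
	byName := make(map[string]NodeGroup, len(current))
	for _, ng := range current {
		byName[ng.Name] = ng
	}
	var ups []Upgrade
	for _, d := range desired {
		c, ok := byName[d.Name]
		if !ok {
			continue
		}
		if d.AMI != c.AMI {
			ups = append(ups, Upgrade{NodeGroup: d.Name, Field: "ami", From: c.AMI, To: d.AMI})
		}
		if d.Version != c.Version {
			ups = append(ups, Upgrade{NodeGroup: d.Name, Field: "version", From: c.Version, To: d.Version})
		}
	}
	return ups
}

func main() {
	current := []NodeGroup{{"ng-1", "ami-old", "1.21"}, {"ng-2", "ami-base", "1.21"}}
	desired := []NodeGroup{{"ng-1", "ami-new", "1.21"}, {"ng-2", "ami-base", "1.22"}}
	for _, u := range detectUpgrades(desired, current) {
		fmt.Printf("%s: %s %s -> %s\n", u.NodeGroup, u.Field, u.From, u.To)
	}
}
```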

Catalog¶

Additions to System Catalog¶

The System Catalog has been updated to add support for the following:

| Category | Description |
|---|---|
| Service Mesh | Istio, Linkerd |
| Progressive Deployments | Argo Rollouts |
| Backup | Velero |
| Cluster Scaling | AWS Karpenter |
| GPU | Nvidia GPU Operator |
| Storage | Rook/Ceph, NetApp Trident |
| Logging | Fluentbit |
| Observability | Open Telemetry |

Custom Catalogs¶

Administrators can now create and manage "custom catalogs" for workloads and add-ons allowing them to provide internal users a curated "Enterprise Catalog" experience.

Info

Learn more about custom catalogs here.

Sharing Custom Catalogs¶

Custom catalogs can be shared with All or Select projects in the Org. This enables a central architecture team to create and manage "enterprise catalogs" and share them with downstream projects.

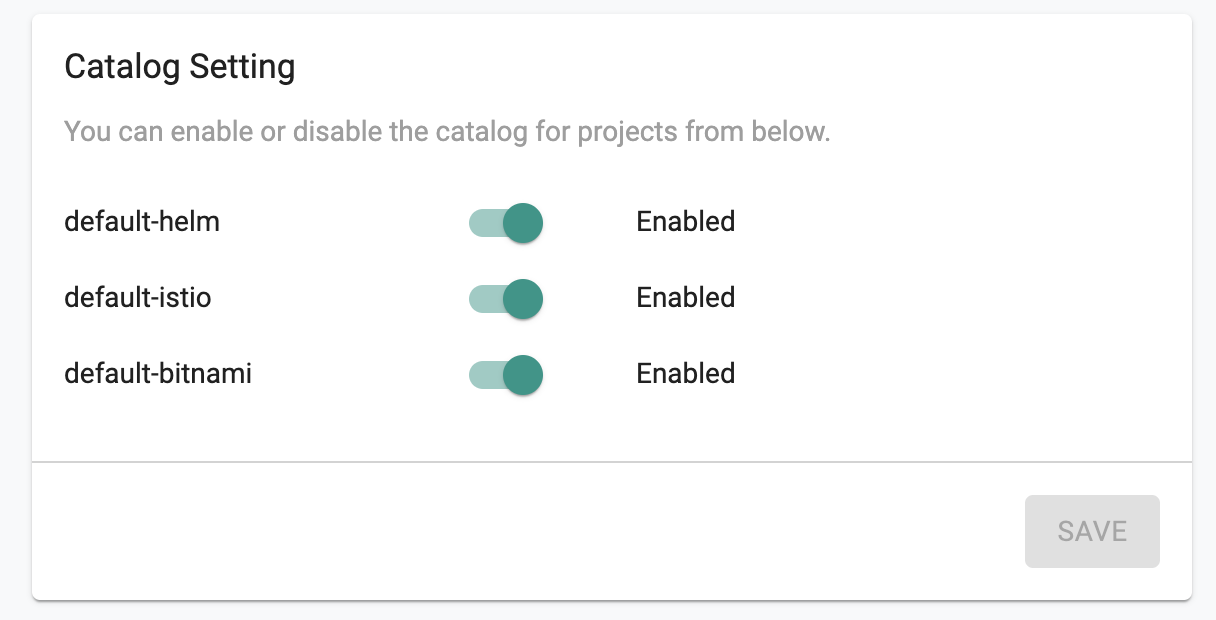

Disable System Catalog¶

Org Admins can optionally disable the default "system catalogs" ensuring that only applications from custom catalogs can be used.

Cluster Blueprint¶

Sync Performance for Minimal Blueprint¶

The cluster blueprint reconciliation process has been tuned and optimized for minimal blueprints and is now significantly faster.

Dashboard¶

Create k8s Resources¶

Administrators are now provided with intuitive workflows to quickly and efficiently create Deployment, DaemonSet and StatefulSet resources directly from the inline Kubernetes resources dashboard.

Info

Learn more about how developers can create k8s resources here.

GitOps¶

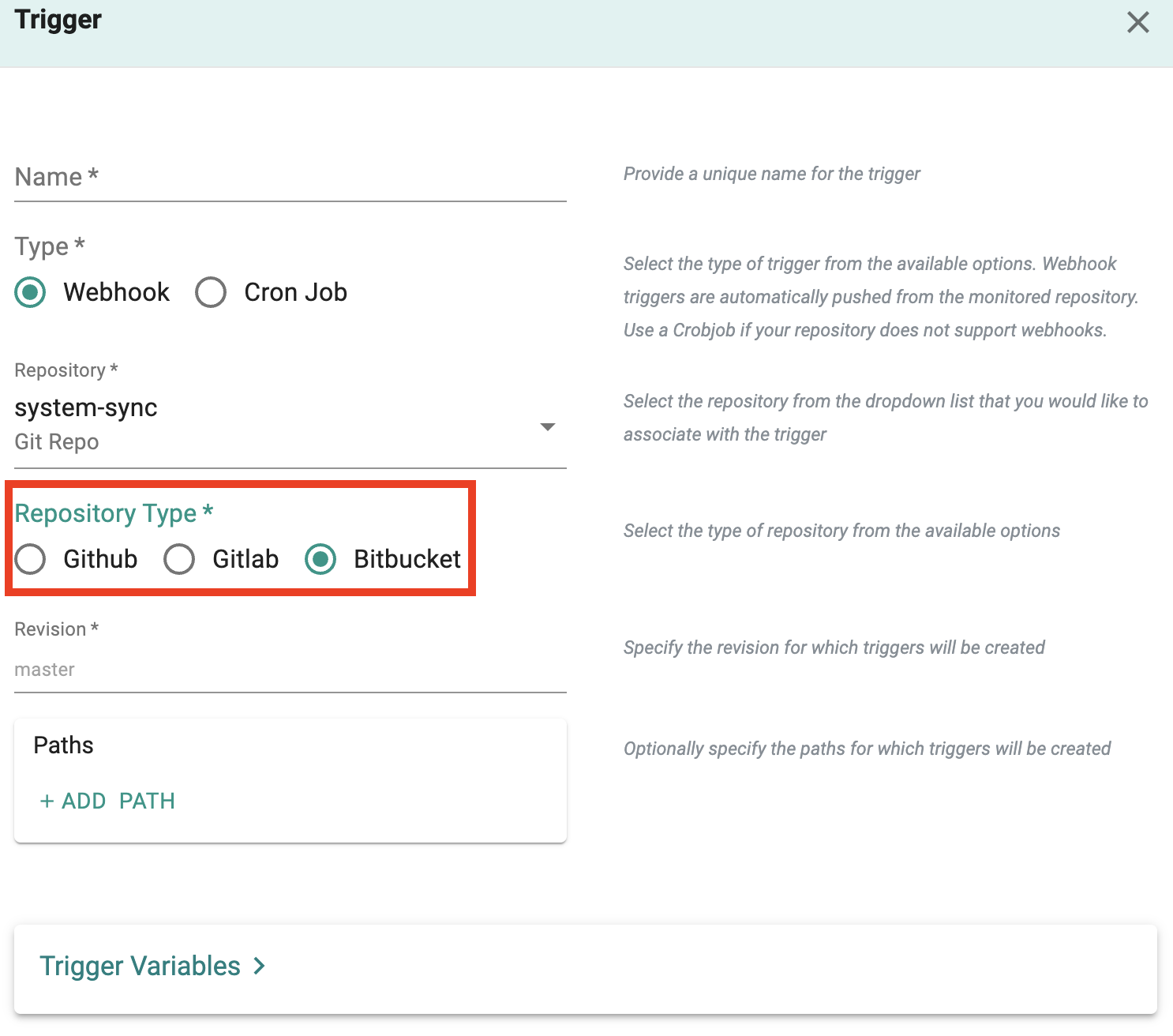

Webhook for Bitbucket¶

Automation pipelines can now be triggered using webhooks from Bitbucket (self-hosted or SaaS). This adds to the existing webhook support for GitHub and GitLab.

Info

Learn more about how webhooks can be configured for Bitbucket repositories here.

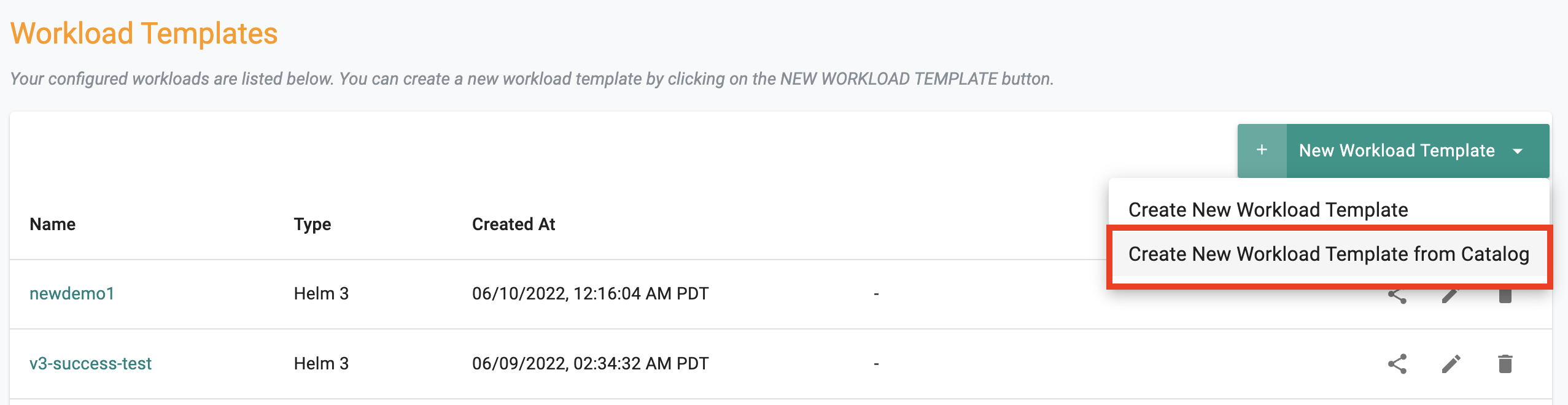

Catalog for Workload Templates¶

Workload templates can now be created based on applications from the system or custom catalogs.

Info

Learn more about how workload templates can be created using a catalog here.

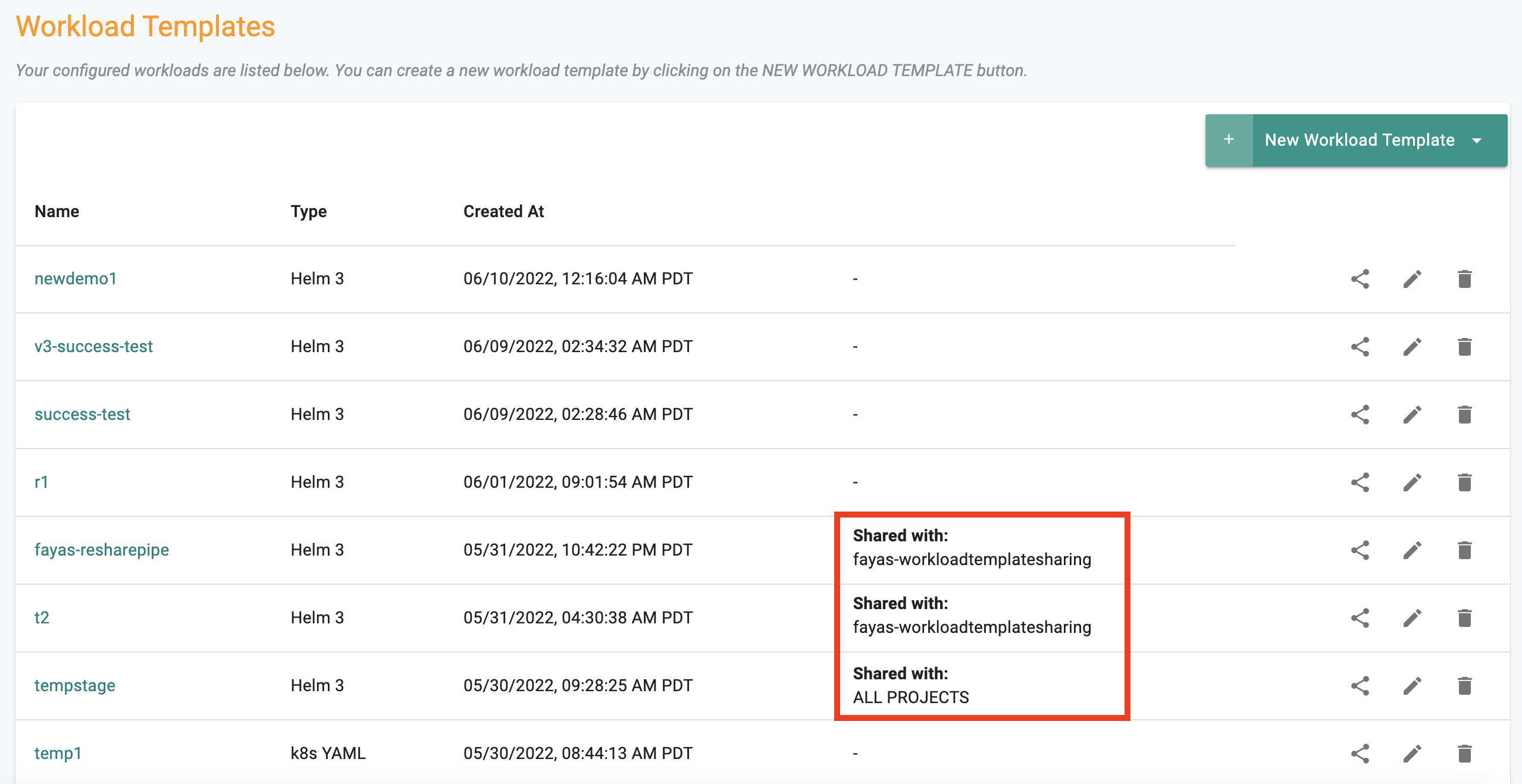

Share Workload Templates¶

Workload templates can now be shared across projects, improving developer productivity while maintaining governance.

Info

Learn more about how workload templates can be shared across projects here.

Terraform Provider¶

The Terraform provider has been updated with new resources, and existing resources have been enhanced. Click here for more details.

Secrets Management¶

AWS Secrets Manager Integration (Beta)¶

Users can leverage a new turnkey integration with AWS Secrets Manager for securing application secrets. This is available as a beta feature.

Info

Learn more about the turnkey integration with AWS Secrets Manager here.

Deprecation¶

PSP Support¶

In alignment with the upcoming end of support for PSPs in Kubernetes, turnkey support for PSPs has been deprecated. New clusters, blueprints and namespaces will no longer support the use of PSPs.

Customers are strongly encouraged to adopt compelling alternatives such as the OPA Gatekeeper integration. Turnkey Gatekeeper policies providing a superset of capabilities relative to PSP are available here.

Managed Log Aggregation Add-On¶

The managed log aggregation add-on (based on Fluentd) in the cluster blueprint has been deprecated and will be removed in the near future. Users should migrate to next-generation alternatives such as Fluentbit, available in the integrated catalog.

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-12177 | Audit log for namespace creation does not capture user or group information |
| RC-12179 | UI: Search box is not working for Audit logs -> System |
| RC-15406 | Project cannot be deleted in certain scenarios |
| RC-15823 | For a blueprint created with RCTL, Alert Manager as a managed add-on is not getting deployed appropriately |
| RC-15897 | Azure vnet list fails post cluster creation for kubenet case |

Known Issues¶

Known issues are typically ephemeral and should be resolved in an upcoming patch.

| # | Description |
|---|---|
| 1 | UI: Deletion of resources via Cluster Resources dashboard for restricted namespaces (Ex: rafay-system) is not prevented for users with ClusterRoleBinding permissions (Ex: Org Admin, Project Admin, Infrastructure Admin) |

v1.14¶

27 May, 2022

Important

Customers need to upgrade to the latest version of the base blueprint (v1.14) with their cluster blueprints to use many of the new features described below. They also need to upgrade to the latest version of the agent to use the newly introduced GitOps 2-way sync enhancements.

Upstream Kubernetes¶

Cluster Provisioning UX¶

An enhanced and updated user experience is now provided for the initial provisioning of upstream Kubernetes clusters. It gives users a detailed view into status and progress, along with inline debug and diagnostic capabilities for the configured cluster blueprint components.

Info

Learn more about the enhanced user experience for initial cluster provisioning here.

Coexist with Customer's Salt Minion¶

The controller managed salt minion on the upstream k8s cluster master and worker nodes can now operate side-by-side with a customer's existing salt stack infrastructure.

Info

Learn more about how the controller's salt minion can coexist with a customer's salt minion here.

Amazon EKS¶

GitOps for EKS Lifecycle Management¶

The lifecycle of Amazon EKS clusters can now be managed using GitOps with the integrated System Sync automation framework. This feature ensures that the state of the EKS cluster is "always in sync" with the declarative cluster spec in the configured Git repository. With this feature:

- Users can use the convenience of the web console to configure and provision an EKS cluster and have the controller automatically generate and bootstrap the configured Git repository with the EKS cluster's declarative cluster specification.

- Users can make changes to the EKS cluster using the convenience of the web console, and the changes to the cluster spec will be automatically written back to the configured Git repository.

Info

Learn by trying with this Get Started Guide.

Info

Watch a video of this feature below. Learn more here.

Important

Customers are required to upgrade their agents to the latest version before enabling this feature.

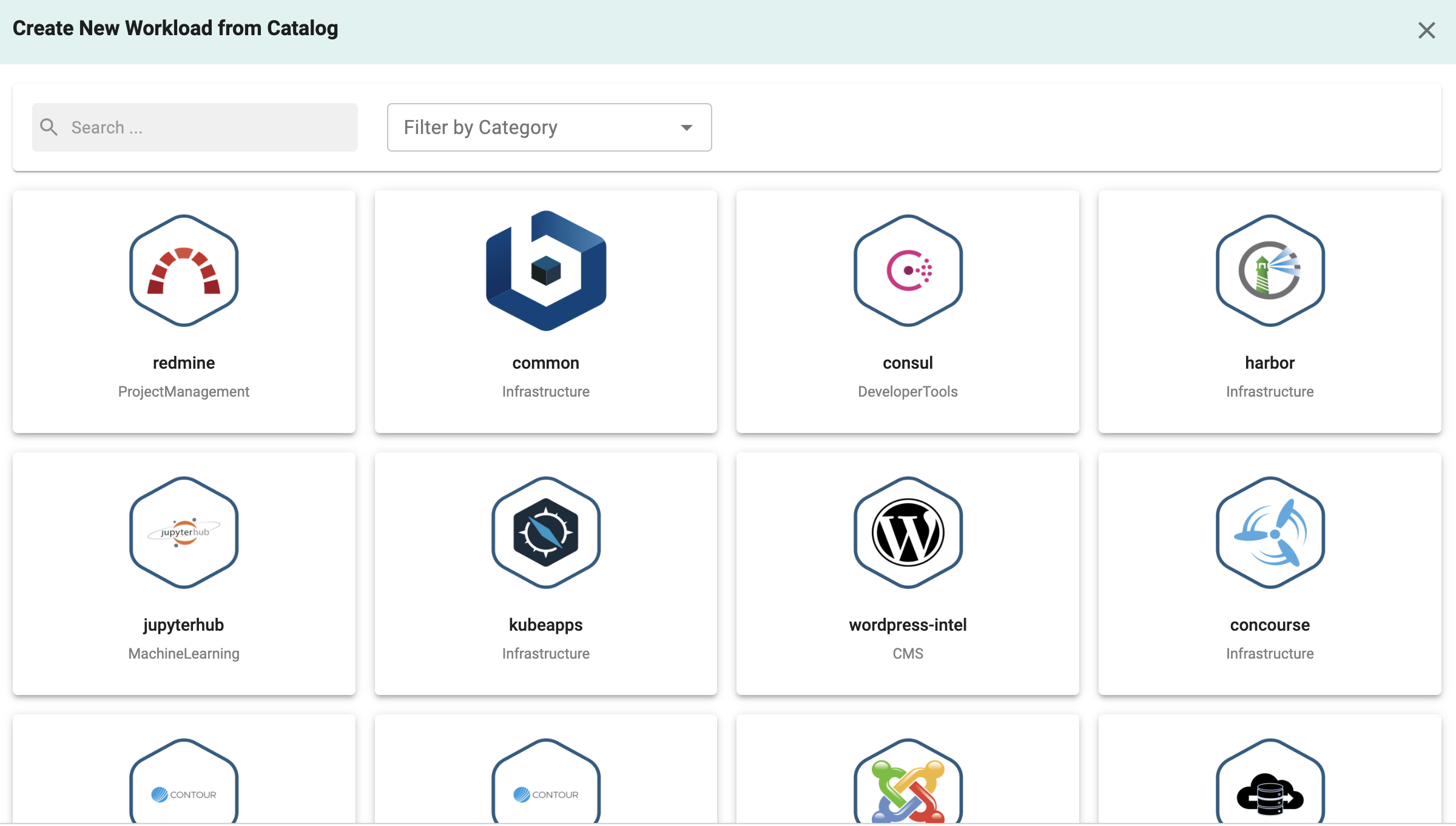

Catalog for Blueprint Addons¶

Addons for cluster blueprints can now be sourced from a curated system catalog of popular addons. The lifecycle of catalog-based addons can be managed using the console, RCTL CLI and GitOps System Sync.

Catalog for Workloads¶

Developers can configure and deploy workloads for popular applications (e.g. MySQL) via a curated system catalog. The lifecycle of catalog-based workloads can be managed using the console, RCTL CLI and GitOps System Sync.

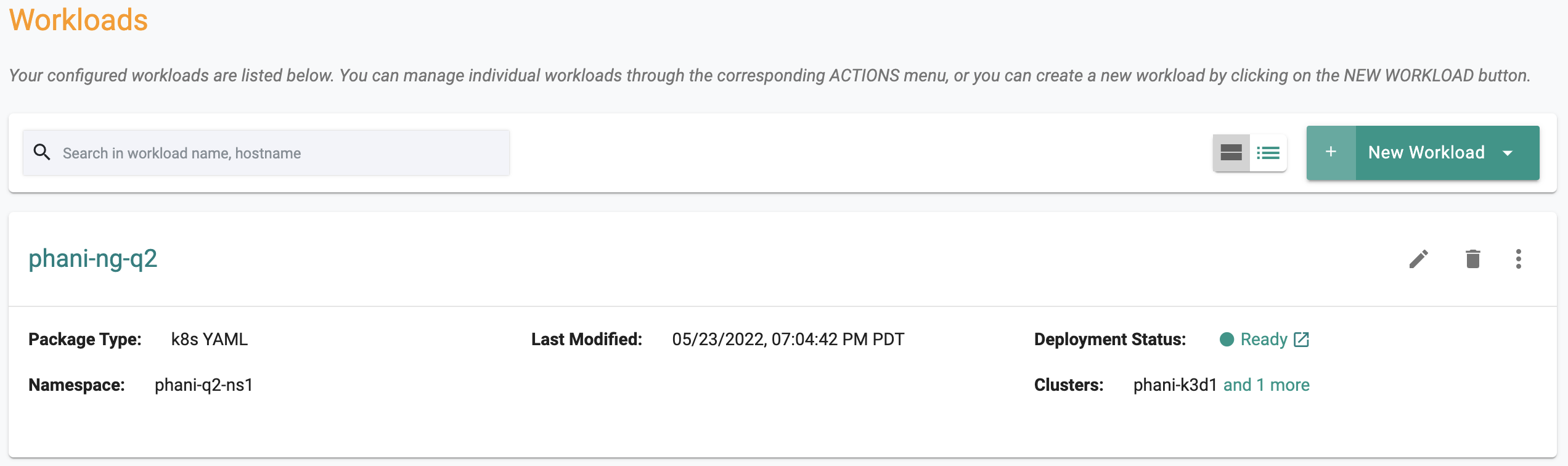

Workloads¶

Workloads by Cluster Name¶

The workload dashboard has been enhanced to display the name of the cluster where the workload is currently deployed. The user experience has been updated to support both "single cluster" and "multi cluster" deployment scenarios.

Security¶

Workspace Admin¶

A new role supporting an intermediate level of "soft multitenancy" is now available allowing administrators to offload certain administrative functionality to application users without losing control and governance.

The Workspace Admin feature enables "self service" for application teams by providing an intermediate level of multi-tenancy beyond Kubernetes namespaces.

Workspace admins:

- Can create and manage their own namespaces on clusters in a project

- Cannot access namespaces they do not manage or have access via any channel including zero trust kubectl

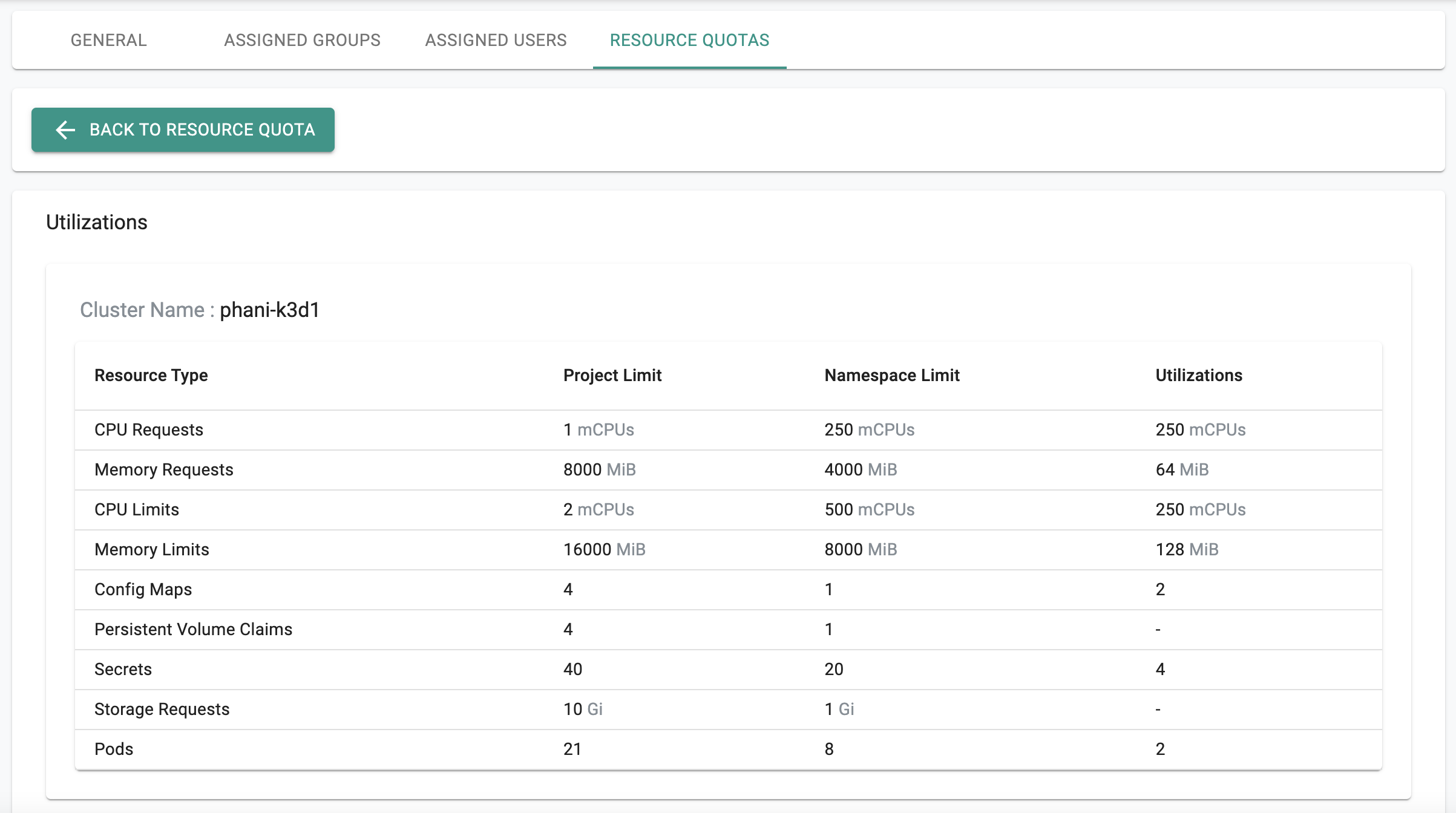

- Can be assigned resource quotas that are actively enforced

Info

Learn more about the workspace admin role here.

Watch a video showcasing the workspace administration feature

Policy Management¶

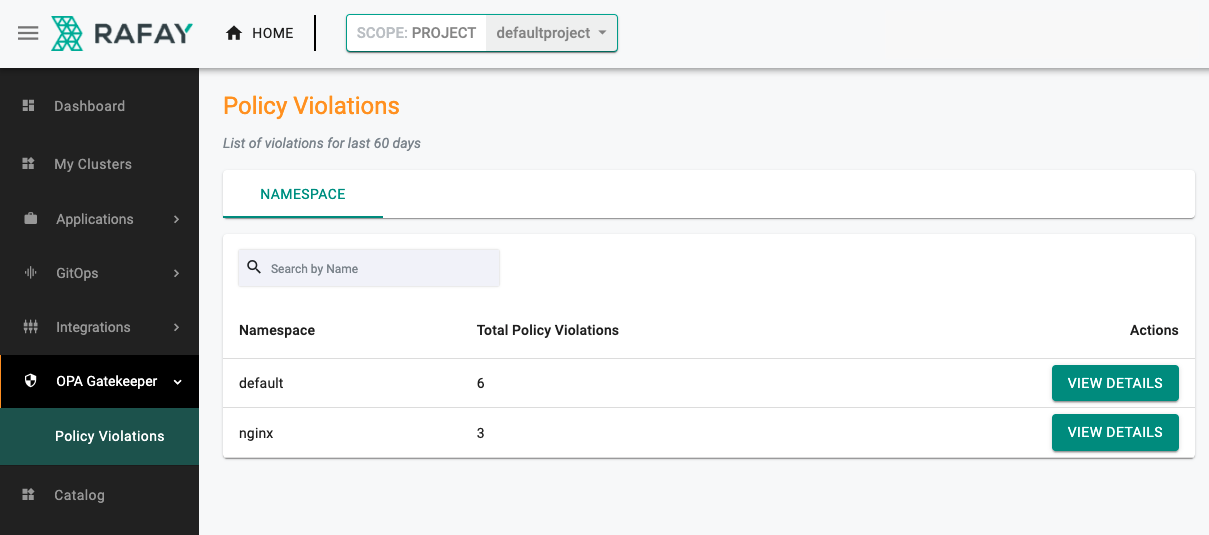

Users limited to specific namespaces (e.g. developers) are now provided centralized visibility and access to OPA Gatekeeper policy violations for resources in their namespaces.

Dashboards¶

Create k8s Resources¶

Administrators are now provided with intuitive workflows to quickly and efficiently create Ingress, Service and PVC resources directly from the inline Kubernetes resources dashboard.

Info

Learn more about how developers can create k8s resources here.

Cluster View for Project Admins¶

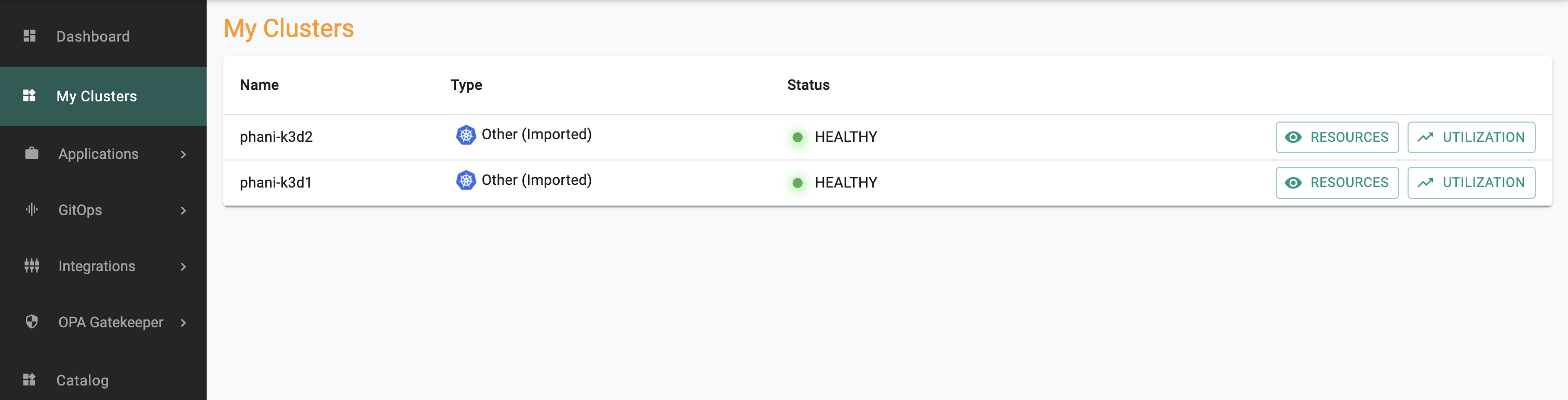

Users with a "Project Admin" role are now provided visibility into clusters in their project. Specifically, they have access to the following:

- Kubernetes Resources Dashboard

- Utilization details

Known Issues¶

| # | Description |
|---|---|
| 1 | System Sync from Git to system is not supported with prior versions of the agent. Customers must first upgrade to the latest version of the agent |
| 2 | Because EKS cluster deletion is destructive, it is currently not supported via Git -> System Sync. Users must delete clusters explicitly, with an explicit acknowledgement; this action is audited in the controller |

v1.13¶

29 April, 2022

Important

Customers need to upgrade to the latest version of the base blueprint (v1.13) with their cluster blueprints to use many of the new features described below.

Upstream Kubernetes¶

Windows Worker Nodes¶

Support for seamless addition and removal of Windows worker nodes, and for hitless, in-place Kubernetes upgrades of Windows worker nodes. With this enhancement, users can now provision and operate upstream Kubernetes clusters with worker nodes based on multiple architectures:

- Linux/amd64 and/or

- Linux/arm64 and/or

- Windows/amd64 architectures

This allows heterogeneous application types to be deployed and operated on the same Kubernetes cluster, enabling "consolidation of infrastructure". Users can containerize legacy Windows applications and deploy them to Kubernetes clusters, accelerating the migration of legacy applications to Kubernetes.

Click here for more details.

Watch a video showcasing the experience of adding a Windows worker node to an existing cluster. It also shows the user experience of deploying and operating a Windows workload on the remote Windows worker node.

Amazon EKS¶

CNI Custom Networking¶

Turnkey support for CNI custom networking for the AWS VPC CNI plugin enabling large enterprises to address CIDR block availability related issues for their AWS VPCs. More here and here.

Watch a video showcasing how to configure and provision an EKS cluster with custom networking.

Cluster Templates Enhancements¶

Cluster templates can now be shared across projects. Templates have been enhanced to support overrides for complex objects. Administrators can also now identify and list clusters based on a specific cluster template. More here.

k8s 1.22¶

New Amazon EKS clusters can be provisioned based on Kubernetes 1.22. Existing clusters can be upgraded in-place to Kubernetes 1.22.

Watch a video showcasing the user experience of performing in-place upgrades of Amazon EKS clusters from k8s 1.21.x to 1.22.x

Clusters and Node Groups by AMI ID¶

Organizations can now use a Swagger API to quickly identify EKS clusters and node groups based on a specified "AMI ID" across all projects spanning multiple AWS accounts. Click here for more details.

Blueprints¶

Minimal Blueprint¶

The AWS node termination handler is no longer automatically deployed to EKS clusters when the "Minimal Blueprint" is used as the base blueprint. This allows organizations to bring their own customized versions of the AWS node termination handler as part of their cluster blueprints.

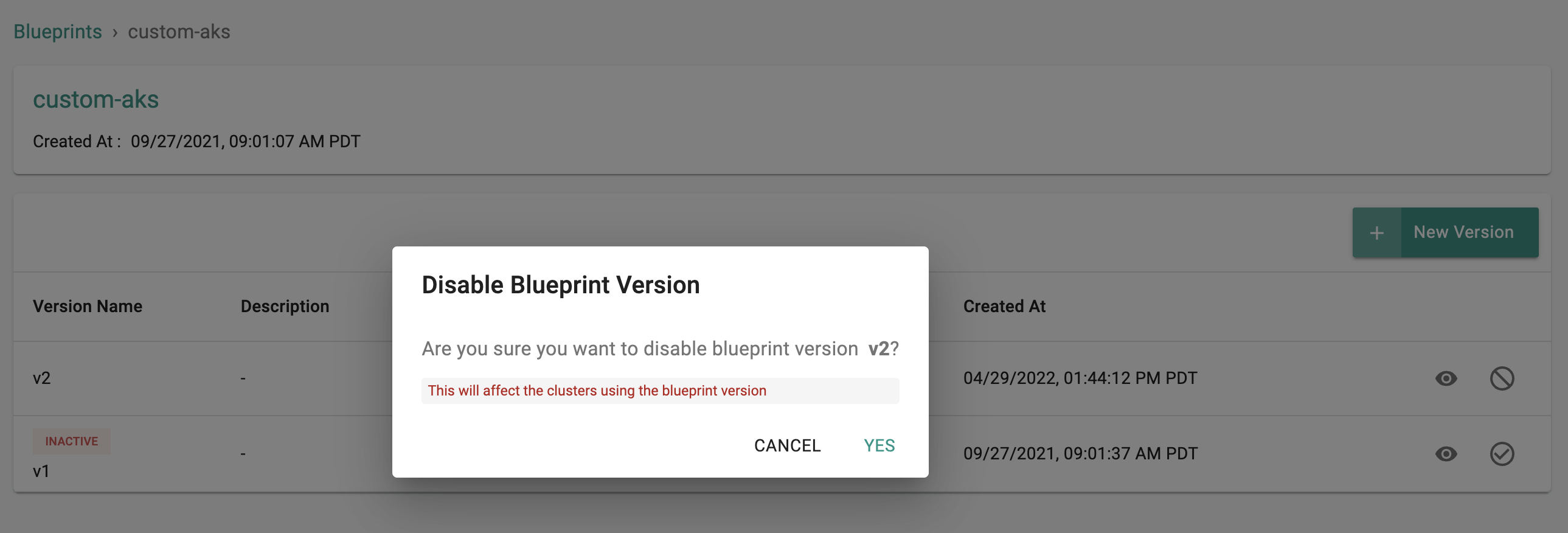

Disable Versions¶

Administrators can now enable/disable specific versions of a cluster blueprint. This prevents users from accidentally using outdated/deprecated versions of a blueprint. When a specific blueprint version is disabled, a visible warning and upgrade prompt is displayed on the cluster card for cluster administrators.

Dashboards¶

Create k8s Resources¶

Administrators are now provided with intuitive workflows to quickly and efficiently create ConfigMap and Secret resources directly from the inline Kubernetes resources dashboard.

Click here to learn more.

Zero Trust Kubectl¶

The web shell now shows users the status of the connection to the remote Kubernetes cluster (a) when they open it for the first time and (b) when the session expires.

GitOps¶

GitOps support has been added for the following resources: Role, Group and Cluster Overrides.

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-14431 | RCTL CLI: Adding a node pool to an AKS cluster with a custom identity is reported as a failure, but the node is created |
| RC-14351 | Expose flags related to private GKE clusters |
| RC-14428 | When creating new node groups in EKS, nodes do not join the cluster because the aws-auth ConfigMap is not updated with the IAM role |
| RC-14400 | Namespace admin user with “Infra ReadOnly” and/or “Cluster Admin” roles cannot exec into the pod |
| RC-14270 | When CloudWatch logging is configured for the EKS control plane in the spec, it is not enabled on the EKS cluster |
| RC-13660 | Labels not showing on dashboard for some clusters |

Known Issues¶

Known issues are typically ephemeral and should be resolved in an upcoming patch.

| Bug ID | Description |
|---|---|
| RC-14769 | UI: Disabled BP version should not appear in the drop-down list under Update BP -> Clusters |
| RC-14768 | Backend validation is incorrect when enabling/disabling a BP version on a shared BP |
| RC-14767 | UI: The enable/disable BP version option should not be available for shared BPs |
| RC-14492 | Upstream k8s upgrade from v1.21.8 to v1.22.5 fails |

v1.12¶

01 April, 2022

Important

Customers need to upgrade to the latest version of the base blueprint (v1.12) with their cluster blueprints to use many of the new features described below.

Upstream Kubernetes¶

EKS-D Provisioning¶

Amazon EKS-D based Kubernetes cluster provisioning workflows have been streamlined and optimized.

Managed Storage¶

In addition to existing Local PV and Distributed Storage (based on GlusterFS), customers can now use CNCF graduated Rook Ceph based distributed storage as a managed storage option. More here.

Important

Support for GlusterFS based distributed storage is now deprecated. It will be removed later in the year and users are encouraged to transition to the new Rook Ceph based managed storage option.

Amazon EKS¶

Wait Option for RCTL CLI based Infra Operations¶

A "blocking" wait option is now available in the RCTL CLI for "long running" infrastructure operations such as cluster provisioning, cluster scaling, addition and removal of nodes etc. More here.

Managed Node Group Upgrades with Custom AMI¶

Admins can now update managed node groups by specifying node-ami for custom AMIs.

Dashboards¶

Kubernetes Resources¶

The integrated Kubernetes resources dashboard (available for both cluster administrators and developers) now provides the means for authorized users to perform additional lifecycle operations for all k8s resources (Edit YAML, Download YAML, Describe) on remote clusters directly from the console.

Developers can use the "describe" option for their Ingress and PVCs to quickly verify if there are issues with the underlying Ingress Controller or PVs. More here.

Blueprints¶

Fleet Upgrades¶

Users can now perform controlled and automated upgrades of blueprints on a fleet of clusters. More here.

GitOps¶

Docker Form Factor for Agent¶

A Docker form factor for the agent (CD/Repository) is now available. This provides users the means to deploy the agent in their networks without the need for a k8s cluster. More here.

Policy Management¶

Centralized Aggregation of Violations¶

All policy (Managed OPA Gatekeeper) violations are automatically aggregated centrally at the controller and made available to administrators via intuitive dashboards, workflows and APIs. More here.

RCTL CLI¶

The RCTL CLI has been updated so that users can fully automate the entire lifecycle of policy management for the integrated OPA Gatekeeper service. More here.

Important

Ensure that the base blueprint for your cluster blueprints is updated to v1.12 or higher to use the new functionality.

RBAC and SSO¶

Group Assignment for IdP Users¶

Admins can now use the RCTL CLI to programmatically assign IdP users to a Group, allowing them to fully automate workflows. More here.

Integrations¶

HashiCorp Vault¶

In addition to using the web console, customers can now automate integration with Vault on managed clusters using the RCTL CLI. More here.

Terraform Provider¶

The Terraform provider has been updated with intuitive examples and improved documentation.

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-14026 | Modify the self-heal script on CentOS/RHEL to ensure the "nameserver IP" is the first nameserver entry in /etc/resolv.conf without forcing it to be the first line of the file |
| RC-13755 | Upstream Kubernetes cluster upgrades trying to pull from the dev registry |
| RC-13746 | Clusters page in the web console fails to load in the Safari browser |
| RC-13660 | Labels not showing on dashboard for some clusters |
| RC-13515 | Cannot select a custom AMI from UI |
| RC-11968 | Slack alerts sent from the customized, managed alert manager have links pointing to localhost |

Known Issues¶

| # | Description |
|---|---|
| 1 | Volume expansion to add an additional storage device is currently not possible without a restart of the VM or the Ceph operator |
| 2 | Ensure that all required storage devices are attached to the VM before cluster provisioning so that they are discovered and usable |

v1.11¶

25 February, 2022

Upstream Kubernetes¶

Kubernetes Versions¶

New upstream Kubernetes clusters can be provisioned based on Kubernetes v1.23. Existing upstream Kubernetes clusters on older versions can be upgraded to Kubernetes v1.23. Latest patch releases for Kubernetes v1.22, v1.21 and v1.20 are also available.

Important

We strongly encourage customers to upgrade their existing clusters to the latest Kubernetes patch releases.

Updated OVA and QCOW Images¶

Refreshed OVA and QCOW2 images for the pre-packaged clusters are now available with security updates and latest software images. More on OVA and on QCOW2.

Amazon EKS¶

Bottlerocket - Managed Node Groups¶

Support for provisioning and ongoing operations of managed node groups based on Bottlerocket AMIs.

List of In-Use AMI ID¶

Across the organization or individual projects, authorized users can list the AMI images associated with the node groups of EKS clusters. This helps them quickly detect outdated AMI IDs and prioritize upgrades. More here.
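Once the in-use AMI IDs have been retrieved, grouping node groups by AMI and filtering out approved images highlights which node groups need upgrading. The Go sketch below shows one way to do this. It assumes a hypothetical `NodeGroupRef` record and an approved-AMI set supplied by the user, and it does not call the platform's API.

```go
package main

import (
	"fmt"
	"sort"
)

// NodeGroupRef identifies the AMI used by one node group (hypothetical type).
type NodeGroupRef struct {
	Cluster, NodeGroup, AMI string
}

// outdatedByAMI groups node groups by AMI ID, keeping only AMIs that are not
// in the approved set. Each list holds sorted "cluster/nodegroup" entries.
func outdatedByAMI(refs []NodeGroupRef, approved map[string]bool) map[string][]string {
	out := make(map[string][]string)
	for _, r := range refs {
		if approved[r.AMI] {
			continue
		}
		out[r.AMI] = append(out[r.AMI], r.Cluster+"/"+r.NodeGroup)
	}
	for _, list := range out {
		sort.Strings(list) // sorts in place; the map shares the backing array
	}
	return out
}

func main() {
	refs := []NodeGroupRef{
		{"prod", "ng-1", "ami-old"},
		{"dev", "ng-2", "ami-old"},
		{"dev", "ng-3", "ami-new"},
	}
	approved := map[string]bool{"ami-new": true}
	for ami, ngs := range outdatedByAMI(refs, approved) {
		fmt.Println(ami, ngs)
	}
}
```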

Cluster Blueprints¶

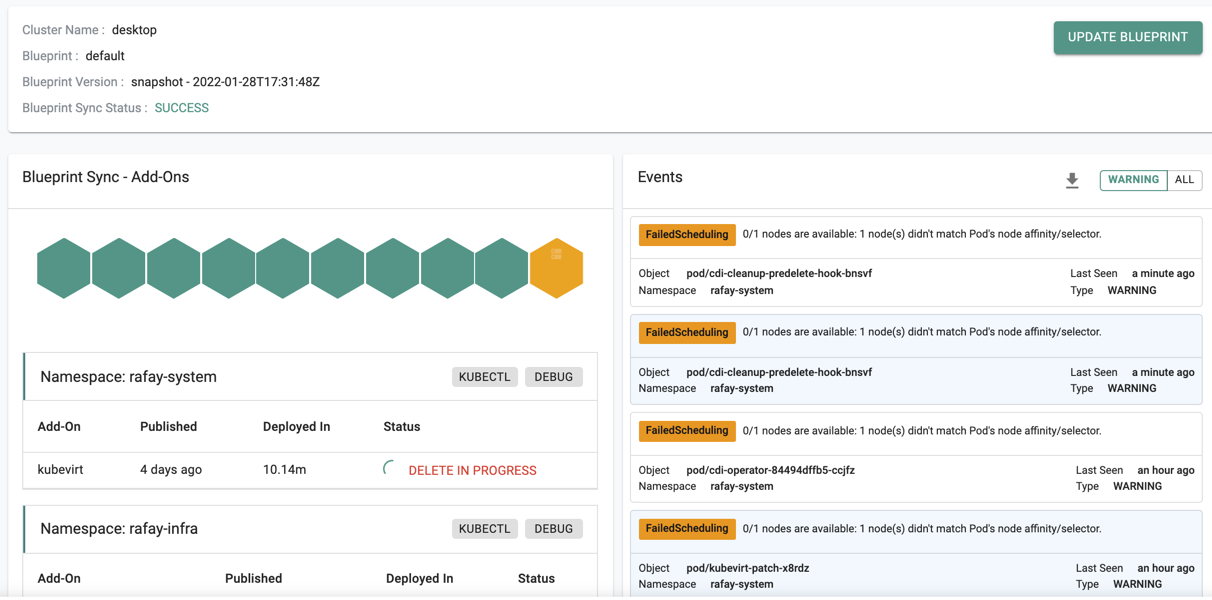

Status and Progress¶

Decoupled status and progress for infrastructure and blueprint/addon during cluster provisioning for Amazon EKS and Azure AKS clusters (coming soon for upstream k8s provisioning!). This provides users with a detailed, fine-grained view into how things are progressing and allows them to quickly zero in on the specific issue causing failures. More here.

Decoupled Lifecycle for Managed Add-ons¶

Customers now have fine-grained control over how/when managed add-ons in custom blueprints are updated.

Change Log for Managed Add-on Versions¶

Customers have visibility into the change log of managed addons in base blueprint versions. This allows them to understand which add-on changed and when.
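At its core, a change log of this kind is a diff of two addon-to-version maps. The Go sketch below shows one way to produce such a diff. The addon names and versions are placeholders, and the output format is an assumption, not the one the console uses.

```go
package main

import (
	"fmt"
	"sort"
)

// changeLog lists addon changes between two base blueprint versions, given
// each version's addon -> version map. Lines are sorted for stable output.
func changeLog(prev, next map[string]string) []string {
	var lines []string
	for name, nv := range next {
		pv, ok := prev[name]
		switch {
		case !ok:
			lines = append(lines, fmt.Sprintf("%s: added %s", name, nv))
		case pv != nv:
			lines = append(lines, fmt.Sprintf("%s: %s -> %s", name, pv, nv))
		}
	}
	for name, pv := range prev {
		if _, ok := next[name]; !ok {
			lines = append(lines, fmt.Sprintf("%s: removed %s", name, pv))
		}
	}
	sort.Strings(lines)
	return lines
}

func main() {
	prev := map[string]string{"addon-a": "1.0.0", "addon-b": "3.0.0"}
	next := map[string]string{"addon-a": "1.1.0", "addon-c": "2.0.0"}
	for _, line := range changeLog(prev, next) {
		fmt.Println(line)
	}
}
```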

CLI for Charts and Values from Different Repos¶

The RCTL CLI has been enhanced to support addons with Helm charts and values.yaml files from different repositories. More here.

Important

Download the latest RCTL CLI to use the updated functionality

Dashboards¶

k8s Resource Dashboards¶

The embedded, inline k8s resource dashboards for both clusters (used by Ops/SRE users) and workloads (used by developers) have been streamlined for near real-time retrieval and presentation of data to users. More here and here.

Workloads¶

Status and Progress¶

The RCTL CLI has been enhanced with an “option” to provide detailed "status" and "progress updates" for the k8s resources associated with a workload. Customers that embed RCTL CLI in their automation pipelines can now retrieve and present detailed workload deployment status and progress updates as part of their pipeline output to developers. More here.

Important

Download the latest RCTL CLI to use the updated functionality

Per Container Resource Sizing in Workload Wizard¶

The workload wizard has been enhanced to provide support for separate sizing options for container requests and limits. More here.
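Separate requests and limits must still be consistent with each other: Kubernetes rejects a container whose request for a resource exceeds its limit for that resource. The Go sketch below models that check with plain integers (millicores and bytes). The `Resources` type and `validate` function are hypothetical, and parsing Kubernetes quantity strings is out of scope.

```go
package main

import "fmt"

// Resources holds one container's CPU in millicores and memory in bytes.
// Zero means "not set". Parsing quantities such as "500m" or "1Gi" is out
// of scope for this sketch.
type Resources struct {
	CPUMilli    int64
	MemoryBytes int64
}

// validate returns an error when a request exceeds the corresponding limit,
// the same rule Kubernetes enforces for container resources.
func validate(container string, req, lim Resources) error {
	if lim.CPUMilli > 0 && req.CPUMilli > lim.CPUMilli {
		return fmt.Errorf("%s: cpu request %dm exceeds limit %dm", container, req.CPUMilli, lim.CPUMilli)
	}
	if lim.MemoryBytes > 0 && req.MemoryBytes > lim.MemoryBytes {
		return fmt.Errorf("%s: memory request %d exceeds limit %d bytes", container, req.MemoryBytes, lim.MemoryBytes)
	}
	return nil
}

func main() {
	const mi = 1 << 20
	fmt.Println(validate("web", Resources{250, 128 * mi}, Resources{500, 256 * mi}))
	fmt.Println(validate("worker", Resources{1000, 0}, Resources{500, 0}))
}
```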

Mount same Volume to Multiple Paths¶

The workload wizard has been enhanced to support mounting the same volume to multiple paths as part of same workload. More here.

CLI for Charts and Values from Different Repos¶

The RCTL CLI has been enhanced to support Helm 3 workloads with charts and values.yaml files from different repositories. More here.

Important

Download the latest RCTL CLI to use the updated functionality

Integrations¶

Self-Signed Cert for Vault Integration¶

Customers can now use self-signed certificates for integration with HashiCorp Vault. More here.

Partner Operations Console¶

Create Org¶

In addition to programmatic ways to create and manage Orgs, Partner Admins can now also create and approve new Orgs directly using a workflow in the Partner Ops Console. More here.

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-11020 | Support mounting same volume to multiple paths as part of same workload |
| RC-11968 | Slack alerts sent from the customized alert manager have links pointing to localhost |
| RC-12120 | Swagger API: Add an API to provision nodegroup |
| RC-13229 | Use of keyword "dev" in the cluster name is causing issues with the auto heal script on MKS clusters |
| RC-13277 | Metrics server addon is not compatible with k8s 1.22 |
| RC-13370 | Make the client secret in Azure Credentials a protected string |
| RC-13416 | OPA Template is missing when creating constraint |
| RC-13419 | OPA: Not able to upload new Constraint or Template |
| RC-13501 | Not able to add the EKS nodegroup with RCTL |
| RC-13545 | Not able to deploy data agents on imported EKS clusters with AWS Credentials |

v1.10¶

28 January, 2022

Amazon EKS¶

Cluster Templates¶

For non-production environments, it can be extremely effective to "empower and enable" developers to provision and use infrastructure resources such as compute, network and clusters for testing and deploying their applications. However, Operations and Security teams typically also need control over "which infrastructure resources are created" and "where they are created" for reasons such as cost management, security policies and governance.

A cluster template for Amazon EKS:

- Enables Ops/SRE teams to offer "self service" cluster lifecycle operations to developers (cluster admin role) without losing control over governance and policy.

- Allows infrastructure admins to specify and encapsulate what users may and may not do when creating infrastructure resources.

- Abstracts the details of resource creation by exposing only a limited set of configuration options to the user.

- Is a preset configuration that can be used to replicate infrastructure resources.
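The template idea described above (admin-locked settings plus a small set of user-facing options) can be sketched as follows. This is a conceptual illustration only: the template structure, field names, and values are hypothetical and do not reflect the product's template format.

```python
# Hypothetical template: field names and values are illustrative only.
TEMPLATE = {
    "locked": {          # set by Ops/SRE; developers cannot change these
        "region": "us-west-2",
        "instance_types": ["t3.large"],
        "max_nodes": 5,
    },
    "overridable": {     # defaults that developers may change
        "cluster_name": None,
        "node_count": 2,
    },
}

def render_cluster_config(template, user_input):
    """Merge developer input into a template, rejecting fields it does not expose."""
    allowed = template["overridable"]
    unknown = set(user_input) - set(allowed)
    if unknown:
        raise ValueError(f"fields not exposed by template: {sorted(unknown)}")
    # user_input can only contain overridable keys, so locked values always win.
    config = {**template["locked"], **allowed, **user_input}
    if config["cluster_name"] is None:
        raise ValueError("cluster_name is required")
    if config["node_count"] > config["max_nodes"]:
        raise ValueError("node_count exceeds the template's max_nodes")
    return config

print(render_cluster_config(TEMPLATE, {"cluster_name": "dev-1", "node_count": 3}))
```

The developer supplies only a name and a node count, while the region, instance types, and node ceiling stay under the control of the template author.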

Note

Once a cluster is provisioned, organizations can use cluster blueprints to enforce and govern organizational policies for cluster wide software addons "inside" the Kubernetes cluster.

More here

RCTL for AWS Wavelength¶

The RCTL CLI now supports full lifecycle management of AWS Wavelength node groups using declarative cluster specs.

More here

Upstream Kubernetes¶

Hard Failure for DNS Preflight¶

Conjurer-based provisioning now blocks provisioning if the DNS preflight checks detect collisions/conflicts on the node.

Dashboards¶

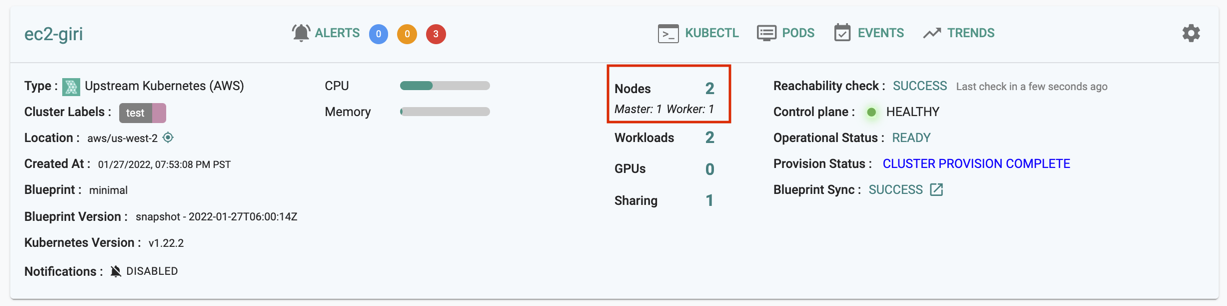

Cluster Dashboard¶

The cluster card in the web console has been enhanced to also display the number of nodes "by type" (master, worker).
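How the console classifies nodes is not described here. A common Kubernetes convention is the `node-role.kubernetes.io/control-plane` label, or the older `node-role.kubernetes.io/master` label. The sketch below assumes that convention and counts nodes from a list shaped like the `items` array of `kubectl get nodes -o json`. The sample node names and labels are made up.

```python
# Assumed convention: control-plane ("master") nodes carry one of these labels.
ROLE_LABELS = (
    "node-role.kubernetes.io/control-plane",
    "node-role.kubernetes.io/master",  # older clusters
)

def count_nodes_by_type(nodes):
    """Count nodes as 'master' or 'worker' based on their role labels."""
    counts = {"master": 0, "worker": 0}
    for node in nodes:
        labels = node.get("metadata", {}).get("labels", {})
        is_master = any(label in labels for label in ROLE_LABELS)
        counts["master" if is_master else "worker"] += 1
    return counts

sample = [
    {"metadata": {"name": "cp-1", "labels": {"node-role.kubernetes.io/control-plane": ""}}},
    {"metadata": {"name": "w-1", "labels": {"kubernetes.io/os": "linux"}}},
    {"metadata": {"name": "w-2", "labels": {}}},
]
print(count_nodes_by_type(sample))  # {'master': 1, 'worker': 2}
```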

Workloads¶

For Helm 3 workloads, developers can source chart and values.yaml files from different repositories. For example, the chart can be sourced from a public Bitnami repository and the "custom" values.yaml file can be sourced from a private Git repository.
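The controller handles the fetching itself. For comparison, the equivalent manual steps with the Helm 3 and Git CLIs look roughly like the following sketch, which builds the command lines without running them. The release name, the private Git URL, the values path, and the `values-src` working directory are hypothetical; the Bitnami repository URL is the public one.

```python
import shlex

def helm_install_commands(release, chart_repo_name, chart_repo_url, chart,
                          values_git_url, values_path, workdir="values-src"):
    """Return commands that install a chart from one repository using a
    values.yaml file fetched from a separate Git repository."""
    return [
        ["helm", "repo", "add", chart_repo_name, chart_repo_url],
        ["helm", "repo", "update"],
        # Shallow clone of the (private) repo that holds the custom values file.
        ["git", "clone", "--depth", "1", values_git_url, workdir],
        ["helm", "install", release, f"{chart_repo_name}/{chart}",
         "-f", f"{workdir}/{values_path}"],
    ]

for cmd in helm_install_commands(
        release="my-web",
        chart_repo_name="bitnami",
        chart_repo_url="https://charts.bitnami.com/bitnami",
        chart="nginx",
        values_git_url="git@github.com:example-org/app-config.git",  # hypothetical
        values_path="nginx/values.yaml"):
    print(shlex.join(cmd))
```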

Cluster Blueprints¶

Multiple Repos for Addons¶

For Helm 3 addons in a cluster blueprint, administrators can source the chart and values.yaml files from different repositories. For example, the chart can be sourced from a public Bitnami repository and the "custom" values.yaml file can be sourced from a private Git repository.

Status and Progress Enhancements¶

The user experience for cluster blueprint updates has been significantly enhanced across all cluster types.

Imported Clusters¶

Admins now have access to rich and detailed status and progress information during the initial import of a cluster into the controller.

Zero Trust Kubectl¶

Users with "API only" privileges cannot log in to the web console; they can download their kubeconfig file programmatically using the RCTL CLI. Users with Org Admin privileges can now also download the kubeconfig file for users with "API only" privileges from the web console.

Vault Integration¶

The integration with HashiCorp Vault has been enhanced to support retrieving "all secrets from the configured path" and either (a) rendering them to a file or (b) creating environment variables from them. Because all secrets are retrieved in a single pass, developer workflows are dramatically simplified.
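Conceptually, the two output modes look like the following sketch. It assumes that the secrets at the configured path have already been read into a flat dict (for example, the inner `data` map of a KV v2 read). The function names and the dotenv-style `KEY=value` file format are assumptions, not the integration's actual output format.

```python
import os
import shlex

def format_secrets(secrets):
    """Render every key/value pair as a shell-quoted KEY=value line."""
    return "".join(f"{key}={shlex.quote(str(secrets[key]))}\n" for key in sorted(secrets))

def render_secrets_to_file(secrets, path):
    """Mode (a): write all secrets to a file in a single pass.

    A new file is created with mode 0o600 (owner read/write only);
    an existing file keeps its current mode.
    """
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w", encoding="utf-8") as fh:
        fh.write(format_secrets(secrets))

def export_secrets_to_env(secrets, env=None):
    """Mode (b): expose every secret as an environment variable."""
    target = os.environ if env is None else env
    for key, value in secrets.items():
        target[key] = str(value)
    return target

# Hypothetical secrets, as if read from one configured Vault path.
secrets = {"DB_PASSWORD": "s3cr3t", "API_KEY": "abc 123"}
print(format_secrets(secrets), end="")
```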

RCTL CLI Enhancements¶

Users of the RCTL CLI now have the option to wait and block on long-running operations such as "namespace publish" and "workload publish". Waiting and blocking can help simplify the logic in a customer's automation pipeline.
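Without a blocking option, a pipeline has to implement its own polling loop, roughly like the sketch below. A built-in wait removes the need for this code. `get_status` is a hypothetical callable (for example, one that wraps a status query), and the status strings, timeout, and interval are assumptions.

```python
import time

def wait_until_done(get_status, timeout_s=600.0, interval_s=5.0,
                    done=("success",), failed=("failed",),
                    clock=time.monotonic, sleep=time.sleep):
    """Poll get_status() until a terminal state is reached or the timeout expires."""
    deadline = clock() + timeout_s
    while True:
        status = get_status()
        if status in done:
            return status
        if status in failed:
            raise RuntimeError(f"operation failed with status {status!r}")
        if clock() >= deadline:
            raise TimeoutError(f"still {status!r} after {timeout_s}s")
        sleep(interval_s)

# Usage with a fake status source; sleep is stubbed out so the example runs instantly.
statuses = iter(["pending", "running", "success"])
print(wait_until_done(lambda: next(statuses), sleep=lambda s: None))
```

The `clock` and `sleep` parameters exist only so that the loop can be tested without real waiting.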

Partner Operations Console¶

Disable Self Service Sign Up¶

White-labeled partners that do not have an in-house process to handle self-service sign-up workflows can now optionally request that self-service sign-up be disabled.

Bug Fixes¶

| Bug ID | Description |
|---|---|
| RC-12863 | Email notifications are not sent for the approval stage |
| RC-12815 | Use non-standard ports (other than 8080 and 8081) when running with hostNetwork: true to avoid port conflicts |
| RC-12807 | For EKS Spot nodegroups, no default instance type is specified in the UI |
| RC-12668 | Regression in Vault integration when mounting vault-cacert for a self-signed TLS Vault server |
| RC-12572 | [EKS] Calico CNI: Prometheus API services rafay-prometheus-adapter and rafay-prometheus-metrics-server failed the discovery check (requires hostNetwork: true) |
| RC-11075 | Deploying the backup agent fails when the same cluster name exists in different orgs |
| RC-10838 | For Helm 3 workloads, the republish button is grayed out when only the values file is modified and uploaded |