CLI

RCTL can be used to manage the end-to-end lifecycle of a workload from an external CI system such as Jenkins. The table below lists the workload operations that can be automated using RCTL.

| Resource | Create | Get | Update | Delete | Publish | Unpublish |
|---|---|---|---|---|---|---|
| Workload | YES | YES | YES | YES | YES | YES |

Important

It is strongly recommended that customers version control their workload definition files in their Git repositories.

Create and apply Workload

Create a new workload using a definition file as the input. The workload definition is a YAML file that captures all the necessary details required for the Controller to manage the lifecycle of the workload.

Important

- When uploading an artifact file, the file path should be relative to the config YAML file (applicable for all types).

- With the apply command, --v3 is not required because the API version is included in the YAML file. The apply command creates and publishes the workload.

./rctl apply -f config.yaml
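
When the apply step runs from a CI system such as Jenkins, it can be wrapped in a small script. The following is a minimal sketch, not an official integration: the helper names `build_apply_command` and `apply_workload` and the injectable `runner` argument are hypothetical, and the check on exit code 0 is an assumption about how rctl reports success. Only the `./rctl apply -f <file>` invocation comes from this page.

```python
import subprocess
from typing import Callable, List, Sequence


def build_apply_command(config_path: str, rctl: str = "./rctl") -> List[str]:
    """Return the argv for `rctl apply -f <config>` (no --v3 flag is needed with apply)."""
    return [rctl, "apply", "-f", config_path]


def apply_workload(
    config_path: str,
    runner: Callable[[Sequence[str]], int] = subprocess.call,
) -> bool:
    """Run the apply command and report success based on the exit code.

    Assumption: rctl exits with 0 on success, as is conventional for CLIs.
    """
    return runner(build_apply_command(config_path)) == 0
```

Passing a fake `runner` makes the step easy to dry-run in a pipeline before pointing it at a real controller.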

Helm Chart Workloads

For Helm3 type workloads, the controller acts as the Helm client.

Note

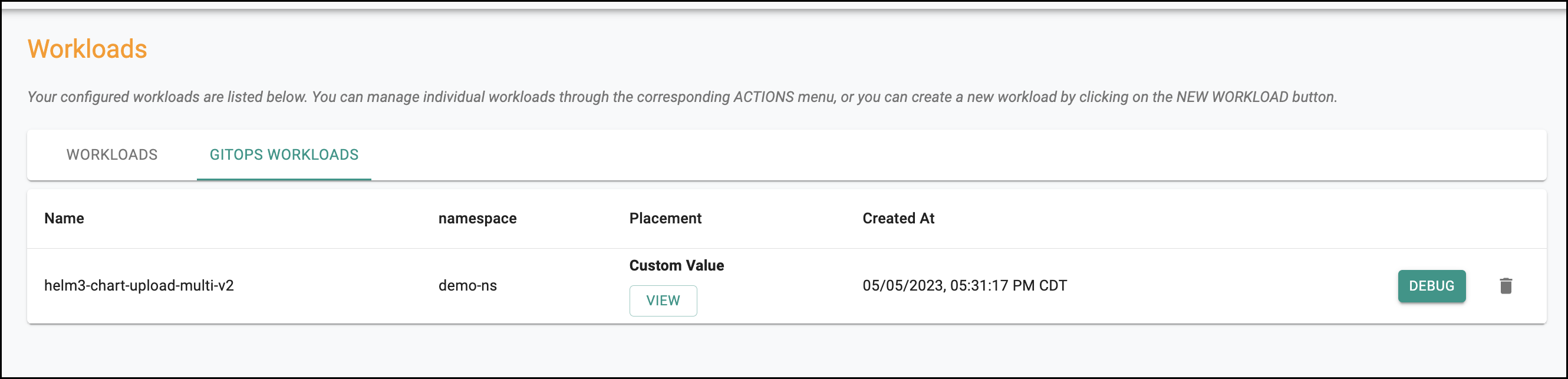

When using apply to create a workload, the workload is published to the cluster if the placement selector is configured (see example below). The workload is added to the GitOps Workload tab in the Console.

An illustrative example of a Helm3 type workload definition is shown below:

    apiVersion: apps.k8smgmt.io/v3
    kind: Workload
    metadata:
      name: helm3-chart-upload-multi-v2
      project: prod-test
    spec:
      artifact:
        artifact:
          chartPath:
            name: file://artifacts/helm3-chart-upload-multi-v2/nginx-8.2.0-rafay.tgz
          valuesPaths:
          - name: file://artifacts/helm3-chart-upload-multi-v2/nginx-values-1-with-rauto-automation-ecr-registry.yaml
          - name: file://artifacts/helm3-chart-upload-multi-v2/nginx-values-2-with-rauto-automation-ecr-registry.yaml
          - name: file://artifacts/helm3-chart-upload-multi-v2/nginx-values-3-with-rauto-automation-ecr-registry.yaml
        options:
          maxHistory: 10
          timeout: 5m0s
        type: Helm
      namespace: demo-ns
      placement:
        selector: rafay.dev/clusterName in (prod-test-mks-1,prod-test-eks-1)
      version: helm3-chart-upload-multi-v2-v3

Example of artifact path when the workload and artifact files are in the same directory:

    spec:
      artifact:
        artifact:
          paths:
          - name: file://demo.yaml
        options: {}
        type: Yaml

Example of artifact path when the workload and artifact files are in different directories:

    spec:
      artifact:
        artifact:
          paths:
          - name: file://./directory/demo.yaml
        options: {}
        type: Yaml
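
The relative-path rule in the two examples above can be sketched in a few lines. This is an illustration only: `resolve_artifact_path` is a hypothetical helper, and it assumes that "relative to the config YAML file" means relative to the directory containing that file. It is not a description of how RCTL resolves paths internally.

```python
from pathlib import PurePosixPath

FILE_SCHEME = "file://"


def resolve_artifact_path(config_file: str, artifact_name: str) -> PurePosixPath:
    """Resolve a `file://` artifact reference against the config file's directory."""
    if not artifact_name.startswith(FILE_SCHEME):
        raise ValueError(f"not a file:// artifact reference: {artifact_name!r}")
    relative = artifact_name[len(FILE_SCHEME):]
    # Assumption: the reference is interpreted relative to the directory
    # that holds the workload definition YAML.
    return PurePosixPath(config_file).parent / relative


same_dir = resolve_artifact_path("/repo/workloads/config.yaml", "file://demo.yaml")
sub_dir = resolve_artifact_path("/repo/workloads/config.yaml", "file://./directory/demo.yaml")
```

A check like this can run in CI to confirm that every referenced artifact exists in the repository before the definition is applied.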

Helm Workloads from different Repos

Below is an example config file that creates a workload whose Helm chart and values file come from different repositories:

    apiVersion: apps.k8smgmt.io/v3
    kind: Workload
    metadata:
      name: workload-v3-different-repo
      project: test
    spec:
      artifact:
        artifact:
          chartPath:
            name: nginx-9.5.8.tgz
          repository: repo-test
          revision: main
          valuesRef:
            repository: repo-test2
            revision: main
            valuesPaths:
            - name: values.yaml
        options:
          maxHistory: 10
          timeout: 5m0s
        type: Helm
      drift:
        enabled: false
      namespace: demo-ns
      placement:
        selector: rafay.dev/clusterName=demo-stage-eks-10
      version: workload-helmingit-v2
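
The examples on this page use two selector forms: `key in (a,b)` and `key=value`. To review which clusters a definition targets before applying it, the selector string can be parsed. The sketch below handles only those two forms. `clusters_from_selector` is a hypothetical helper, and the full selector grammar accepted by the controller may be richer than what is covered here.

```python
import re
from typing import List

_IN_FORM = re.compile(r"^\s*(?P<key>[^\s=]+)\s+in\s+\((?P<values>[^)]*)\)\s*$")
_EQ_FORM = re.compile(r"^\s*(?P<key>[^\s=]+)\s*=\s*(?P<value>\S+)\s*$")


def clusters_from_selector(selector: str, key: str = "rafay.dev/clusterName") -> List[str]:
    """Return the cluster names a placement selector targets via the given label key."""
    match = _IN_FORM.match(selector)
    if match and match.group("key") == key:
        return [v.strip() for v in match.group("values").split(",") if v.strip()]
    match = _EQ_FORM.match(selector)
    if match and match.group("key") == key:
        return [match.group("value")]
    # Anything else is outside the two forms shown on this page.
    raise ValueError(f"unsupported selector: {selector!r}")
```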

K8s Yaml Workloads

An illustrative example for a k8s YAML type workload definition is shown below

    apiVersion: apps.k8smgmt.io/v3
    kind: Workload
    metadata:
      name: nativeyamlupload-v2
      project: prod-test
    spec:
      artifact:
        artifact:
          paths:
          - name: file://artifacts/nativeyamlupload-v2/nativeyamlupload-v2.yaml
        options: {}
        type: Yaml
      namespace: demo-ns
      placement:
        selector: rafay.dev/clusterName in (prod-test-mks-1,prod-test-eks-1)
      version: nativeyamlupload-v2-v1

On successful creation, the workload is published.

Note

Version is mandatory to publish the workload.
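
Because a missing version blocks publishing, a CI job can lint the definition before calling apply. The sketch below works on a definition that has already been loaded into a Python dict (for example with a YAML parser). `missing_fields` is a hypothetical helper. Only the version requirement is stated on this page. The other checked fields and their nesting are assumptions taken from the layout of the examples above.

```python
from typing import Any, Dict, List

# Dotted paths this sketch expects in a workload definition.
EXPECTED_FIELDS = [
    ("apiVersion",),
    ("kind",),
    ("metadata", "name"),
    ("metadata", "project"),
    ("spec", "namespace"),
    ("spec", "version"),  # documented as mandatory for publishing
]


def missing_fields(definition: Dict[str, Any]) -> List[str]:
    """Return the dotted paths of expected fields that are absent or empty."""
    missing = []
    for path in EXPECTED_FIELDS:
        node: Any = definition
        for key in path:
            node = node.get(key) if isinstance(node, dict) else None
        if node in (None, ""):
            missing.append(".".join(path))
    return missing
```

An empty result means the definition passes this lint. It does not guarantee that the controller will accept it.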

List Workloads

Use the command below to retrieve all workloads:

./rctl get workload --v3

Use the command below to list all workloads in a specified project.

In this example, the command lists all the workloads in the "qa" project.

./rctl get workload --project qa --v3

    NAME     NAMESPACE   TYPE         STATE   ID
    apache   apache      NativeHelm   READY   2d0zjgk
    redis    redis       NativeHelm   READY   k5xzdw2

The command will return all the workloads with metadata similar to that in the Web Console.

- Name

- Namespace

- Type

- State

- ID
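
In a pipeline, the tabular output is often easier to work with as structured records. The sketch below splits each row on whitespace and keys the cells by the header names. `parse_workload_table` is a hypothetical helper, and it assumes that no cell contains spaces, which holds for the sample above but is not guaranteed for every field.

```python
from typing import Dict, List


def parse_workload_table(output: str) -> List[Dict[str, str]]:
    """Parse whitespace-separated `rctl get workload` output into one dict per row."""
    lines = [line for line in output.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    rows = []
    for line in lines[1:]:
        cells = line.split()
        if len(cells) != len(header):
            # A cell with embedded spaces would break the simple split.
            raise ValueError(f"unexpected row: {line!r}")
        rows.append(dict(zip(header, cells)))
    return rows


SAMPLE = """\
NAME     NAMESPACE   TYPE         STATE   ID
apache   apache      NativeHelm   READY   2d0zjgk
redis    redis       NativeHelm   READY   k5xzdw2
"""

ready = [row["NAME"] for row in parse_workload_table(SAMPLE) if row["STATE"] == "READY"]
```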

Unpublish Workload

Use RCTL to unpublish a workload.

./rctl unpublish workload <workload name> --v3

In the example below, the "apache" workload will be unpublished in the "qa" project

./rctl unpublish workload apache --project qa --v3

Delete Workload

Use RCTL to delete a workload identified by name. Note that a delete operation will unpublish the workload first.

./rctl delete workload <workload name> --v3

Status

Use this command to check the status of a workload. A single command reports the status on all clusters where the workload is deployed.

./rctl status workload <workload name>

If the workload has not yet been published, the command returns "Status = Not Ready". While a publish is in progress, it returns "Status = Pending". Once the publish succeeds, it returns "Status = Ready". Status is reported per cluster for all configured clusters. The workload states transition as follows: "Not Ready -> Pending -> Ready".

An illustrative example is shown below. In this example, the publish status of the workload is listed by cluster.

./rctl status workload apache --project qa

    CLUSTER      PUBLISH STATUS   MORE INFO
    qa-cluster   Published
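
A CI job usually needs to block until the workload reaches "Ready". The polling loop below is a sketch of that pattern built on the "Not Ready -> Pending -> Ready" transition described above. `wait_until_ready` and its `get_status` callback are hypothetical: the callback is expected to run the status command and map its output to one of the three state names. The strings actually printed by rctl may differ (the sample output shows "Published"), so that mapping is left to the caller.

```python
import time
from typing import Callable

TERMINAL_STATE = "Ready"
KNOWN_STATES = ("Not Ready", "Pending", "Ready")


def wait_until_ready(
    get_status: Callable[[], str],
    timeout_s: float = 300.0,
    interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll get_status() until it reports Ready or the timeout elapses."""
    deadline = clock() + timeout_s
    while True:
        status = get_status()
        if status not in KNOWN_STATES:
            raise ValueError(f"unexpected workload status: {status!r}")
        if status == TERMINAL_STATE:
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

The `sleep` and `clock` parameters exist so the loop can be exercised in tests without real waiting.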

Use the command below to fetch the real-time detailed status of a workload, including K8s object names, each object's latest condition, and cluster events.

./rctl status workload <WORKLOAD-NAME> --detailed --cluster=<CLUSTER-NAME>,<CLUSTER NAME 2>..<CLUSTER NAME N>

Note

The --cluster flag is optional. Helm2 type workloads are currently not supported.

Example

./rctl status workload test-helminhelm --detailed --clusters=oci-cluster-1

Output

    +---------------+----------------------------------------------------------+----------------------+-------------------------------------------------------------------------------------------------------------+
    | CLUSTER NAME | K8S OBJECT NAME | K8S OBJECT NAMESPACE | K8S OBJECT LATEST CONDITION |
    +---------------+----------------------------------------------------------+----------------------+-------------------------------------------------------------------------------------------------------------+
    | oci-cluster-1 | test-helminhelm-nginx-ingress-controller | default-rafay-nikhil | - |
    | | (Service) | | |
    +---------------+----------------------------------------------------------+----------------------+-------------------------------------------------------------------------------------------------------------+
    | oci-cluster-1 | test-helminhelm-nginx-ingress-controller-default-backend | default-rafay-nikhil | - |
    | | (Service) | | |
    +---------------+----------------------------------------------------------+----------------------+-------------------------------------------------------------------------------------------------------------+
    | oci-cluster-1 | test-helminhelm-nginx-ingress-controller | default-rafay-nikhil | {"lastTransactionTime":"0001-01-01T00:00:00Z","lastUpdateTime":"2022-02-08T07:49:18Z","message":"ReplicaSet |
    | | (Deployment) | | \"test-helminhelm-nginx-ingress-controller-568dd8fdb\" is |
    | | | | progressing.","reason":"ReplicaSetUpdated","status":"True","type":"Progressing"} |
    +---------------+----------------------------------------------------------+----------------------+-------------------------------------------------------------------------------------------------------------+
    | oci-cluster-1 | test-helminhelm-nginx-ingress-controller-default-backend | default-rafay-nikhil | {"lastTransactionTime":"0001-01-01T00:00:00Z","lastUpdateTime":"2022-02-08T07:49:19Z","message":"Deployment |
    | | (Deployment) | | has minimum availability.","reason":"MinimumReplicasAvailable","status":"True","type":"Available"} |
    +---------------+----------------------------------------------------------+----------------------+-------------------------------------------------------------------------------------------------------------+

    EVENTS:
    +-------------------------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
    | CLUSTER NAME | EVENTS |
    +-------------------------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
    | oci-cluster-1(default-rafay-nikhil) | LAST SEEN TYPE REASON OBJECT MESSAGE |
    | | 66s Normal Killing pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-5648j Stopping container controller |
    | | 21s Warning Unhealthy pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-5648j Readiness probe failed: Get "http://10.244.0.171:10254/healthz": context deadline exceeded (Client.Timeout exceeded while awaiting headers) |
    | | 61s Warning Unhealthy pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-5648j Liveness probe failed: Get "http://10.244.0.171:10254/healthz": dial tcp 10.244.0.171:10254: i/o timeout (Client.Timeout exceeded while awaiting headers) |
    | | 41s Warning Unhealthy pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-5648j Liveness probe failed: Get "http://10.244.0.171:10254/healthz": context deadline exceeded (Client.Timeout exceeded while awaiting headers) |
    | | 11s Warning Unhealthy pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-5648j Readiness probe failed: Get "http://10.244.0.171:10254/healthz": dial tcp 10.244.0.171:10254: i/o timeout (Client.Timeout exceeded while awaiting headers) |
    | | 9s Normal Scheduled pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-n7flz Successfully assigned default-rafay-nikhil/test-helminhelm-nginx-ingress-controller-568dd8fdb-n7flz to nikhil-28-jan-02 |
    | | 8s Normal Pulled pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-n7flz Container image "docker.io/bitnami/nginx-ingress-controller:1.1.0-debian-10-r34" already present on machine |
    | | 8s Normal Created pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-n7flz Created container controller |
    | | 7s Normal Started pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-n7flz Started container controller |
    | | 6s Normal RELOAD pod/test-helminhelm-nginx-ingress-controller-568dd8fdb-n7flz NGINX reload triggered due to a change in configuration |
    | | 9s Normal SuccessfulCreate replicaset/test-helminhelm-nginx-ingress-controller-568dd8fdb Created pod: test-helminhelm-nginx-ingress-controller-568dd8fdb-n7flz |
    | | 9s Normal SuccessfulCreate replicaset/test-helminhelm-nginx-ingress-controller-default-backend-c57d46575 Created pod: test-helminhelm-nginx-ingress-controller-default-backend-ckk29z |
    | | 8s Normal Scheduled pod/test-helminhelm-nginx-ingress-controller-default-backend-ckk29z Successfully assigned default-rafay-nikhil/test-helminhelm-nginx-ingress-controller-default-backend-ckk29z to nikhil-28-jan-02 |
    | | 8s Normal Pulled pod/test-helminhelm-nginx-ingress-controller-default-backend-ckk29z Container image "docker.io/bitnami/nginx:1.21.4-debian-10-r53" already present on machine |
    | | 8s Normal Created pod/test-helminhelm-nginx-ingress-controller-default-backend-ckk29z Created container default-backend |
    | | 7s Normal Started pod/test-helminhelm-nginx-ingress-controller-default-backend-ckk29z Started container default-backend |
    | | 66s Normal Killing pod/test-helminhelm-nginx-ingress-controller-default-backend-cljz9z Stopping container default-backend |
    | | 9s Normal ScalingReplicaSet deployment/test-helminhelm-nginx-ingress-controller-default-backend Scaled up replica set test-helminhelm-nginx-ingress-controller-default-backend-c57d46575 to 1 |
    | | 9s Normal ScalingReplicaSet deployment/test-helminhelm-nginx-ingress-controller Scaled up replica set test-helminhelm-nginx-ingress-controller-568dd8fdb to 1 |
    | | |
    +-------------------------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
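
The "K8S OBJECT LATEST CONDITION" column carries a JSON object, or "-" when no condition is reported, so it can be decoded for automated health checks. The sketch below is illustrative: `condition_summary` and `is_available` are hypothetical helpers, and they assume the cell text has already been re-joined into a single line (the table wraps long values across rows).

```python
import json
from typing import Optional


def condition_summary(cell: str) -> Optional[dict]:
    """Decode a latest-condition cell; '-' means no condition was reported."""
    text = cell.strip()
    if text == "-":
        return None
    condition = json.loads(text)
    return {
        "type": condition.get("type"),
        "status": condition.get("status"),
        "reason": condition.get("reason"),
    }


def is_available(cell: str) -> bool:
    """True when the condition is type=Available with status=True."""
    summary = condition_summary(cell)
    return bool(summary) and summary["type"] == "Available" and summary["status"] == "True"


# Condition of the default-backend Deployment from the output above, re-joined.
SAMPLE_CELL = (
    '{"lastTransactionTime":"0001-01-01T00:00:00Z",'
    '"lastUpdateTime":"2022-02-08T07:49:19Z",'
    '"message":"Deployment has minimum availability.",'
    '"reason":"MinimumReplicasAvailable","status":"True","type":"Available"}'
)
```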

Update Workload Config

Use this when you need to update the "Workload Definition" for an existing workload. For example, you may want to add a new cluster location where the workload needs to be deployed.

A workload definition can be updated even if the workload is already published and operational on clusters. After updating the definition, follow up with a "publish" operation so that the updated definition is applied.

./rctl update workload <path-to-workload-definition-json-file> [flags] --v3
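
Since an update must be followed by a publish, a script can generate both commands together so that the second step is not forgotten. The sketch below only builds the argument lists. `update_and_publish_commands` is a hypothetical helper, and the form of the publish command is an assumption modeled on the unpublish command shown earlier on this page. Confirm it against the RCTL help output before relying on it.

```python
from typing import List


def update_and_publish_commands(
    definition_path: str, workload_name: str, rctl: str = "./rctl"
) -> List[List[str]]:
    """Return the two commands to run, in order: update the definition, then publish."""
    return [
        [rctl, "update", "workload", definition_path, "--v3"],
        # Assumption: publish mirrors `unpublish workload <name> --v3`.
        [rctl, "publish", "workload", workload_name, "--v3"],
    ]
```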

Templating

Users can also create multiple workloads from a set of defined configurations. The template file contains a list of objects from which multiple workloads are created.

Below is an example of a workload config template

    # Generated: {{now.UTC.Format "2006-01-02T15:04:05UTC"}}
    # With: {{command_line}}
    {{ $envName := environment "PWD" | basename}}
    {{ $glbCtx := . }}{{ range $i, $project := .ProjectNames }}
    apiVersion: apps.k8smgmt.io/v3
    kind: Workload
    metadata:
      name: node
      project: {{$envName}}-{{$project}}
    spec:
      artifact:
        artifact:
          catalog: default-bitnami
          chartName: node
          chartVersion: {{$glbCtx.NodeChartVersion}}
        options:
          maxHistory: 10
          timeout: 5m0s
        type: Helm
      drift:
        enabled: false
      namespace: ns-frontend
      placement:
        labels:{{$c := $glbCtx}}{{range $l, $cluster := $glbCtx.ClusterNames}}
        - key: rafay.dev/clusterName
          value: {{$envName}}-{{$project}}-{{ $cluster }}{{end}}
    ---
    apiVersion: apps.k8smgmt.io/v3
    kind: Workload
    metadata:
      name: phpbb
      project: {{$envName}}-{{$project}}
    spec:
      artifact:
        artifact:
          catalog: default-bitnami
          chartName: phpbb
          chartVersion: {{$glbCtx.PHPbbChartVersion}}
        options:
          maxHistory: 10
          timeout: 5m0s
        type: Helm
      drift:
        enabled: false
      namespace: ns-backend
      placement:
        labels:{{$c := $glbCtx}}{{range $l, $cluster := $glbCtx.ClusterNames}}
        - key: rafay.dev/clusterName
          value: {{$envName}}-{{$project}}-{{ $cluster }}{{end}}
    ---
    apiVersion: apps.k8smgmt.io/v3
    kind: Workload
    metadata:
      name: mysql
      project: {{$envName}}-{{$project}}
    spec:
      artifact:
        artifact:
          catalog: default-bitnami
          chartName: mysql
          chartVersion: {{$glbCtx.MySqlChartVersion}}
        options:
          maxHistory: 10
          timeout: 5m0s
        type: Helm
      drift:
        enabled: false
      namespace: ns-database
      placement:
        labels:{{$c := $glbCtx}}{{range $l, $cluster := $glbCtx.ClusterNames}}
        - key: rafay.dev/clusterName
          value: {{$envName}}-{{$project}}-{{ $cluster }}{{end}}
    ---
    {{end}}

Users can create one or more workloads with the required configuration defined in the template file. Below is an example of a workload value file. This file supplies the values used to create the workloads with the specified objects.

NodeChartVersion: 19.0.2

PHPbbChartVersion: 12.2.16

MySqlChartVersion: 9.2.6

Important

Only the objects defined in the template must be present in the value files

Use the command below to create workload(s) with the specified configuration once the value file(s) are prepared with the necessary objects

./rctl apply -t workload.tmpl --values values.yaml

where,

- workload.tmpl: template file

- values.yaml: value file
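
To see what the template's nested loops produce, the expansion can be mirrored in plain Python. This is a sketch for reasoning about the output, not a reimplementation of RCTL's template engine: `expand_workloads` is a hypothetical helper, and the environment, project, and cluster names below are made-up inputs. The chart versions are the ones from the value file above.

```python
from typing import Dict, List


def expand_workloads(
    env_name: str,
    project_names: List[str],
    cluster_names: List[str],
    chart_versions: Dict[str, str],
) -> List[Dict[str, object]]:
    """Mirror the template's loops: one workload per chart, per project."""
    workloads = []
    for project in project_names:  # outer range over .ProjectNames
        for chart, version in chart_versions.items():
            workloads.append({
                "name": chart,
                "project": f"{env_name}-{project}",
                "chartVersion": version,
                # inner range over $glbCtx.ClusterNames builds one label value per cluster
                "clusters": [f"{env_name}-{project}-{c}" for c in cluster_names],
            })
    return workloads


result = expand_workloads(
    env_name="dev",                      # hypothetical; the template derives it from PWD
    project_names=["team-a", "team-b"],  # hypothetical ProjectNames
    cluster_names=["eks-1"],             # hypothetical ClusterNames
    chart_versions={"node": "19.0.2", "phpbb": "12.2.16", "mysql": "9.2.6"},
)
```

With two projects and three charts, the expansion yields six workload definitions, each placed on the clusters named after its environment and project.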

Refer to Templating for more details on templating flags and examples.

Refer here for the deprecated RCTL commands