Releases - Jan 2024

v2.3 Update 1 - SaaS

30 Jan, 2024

The section below provides a brief description of changes in this release.

Environment Manager

UX improvements

This release includes a number of improvements to the workflow experience for both Platform Administrators and end users.

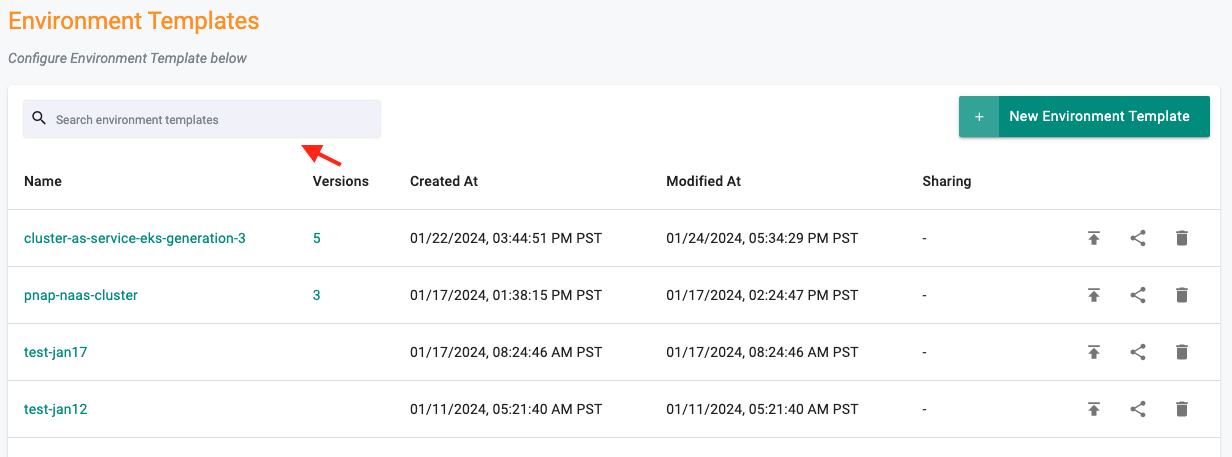

- 'Search by name' capability for Environment Manager Resources

-

'Upload file' support for Config Contexts

-

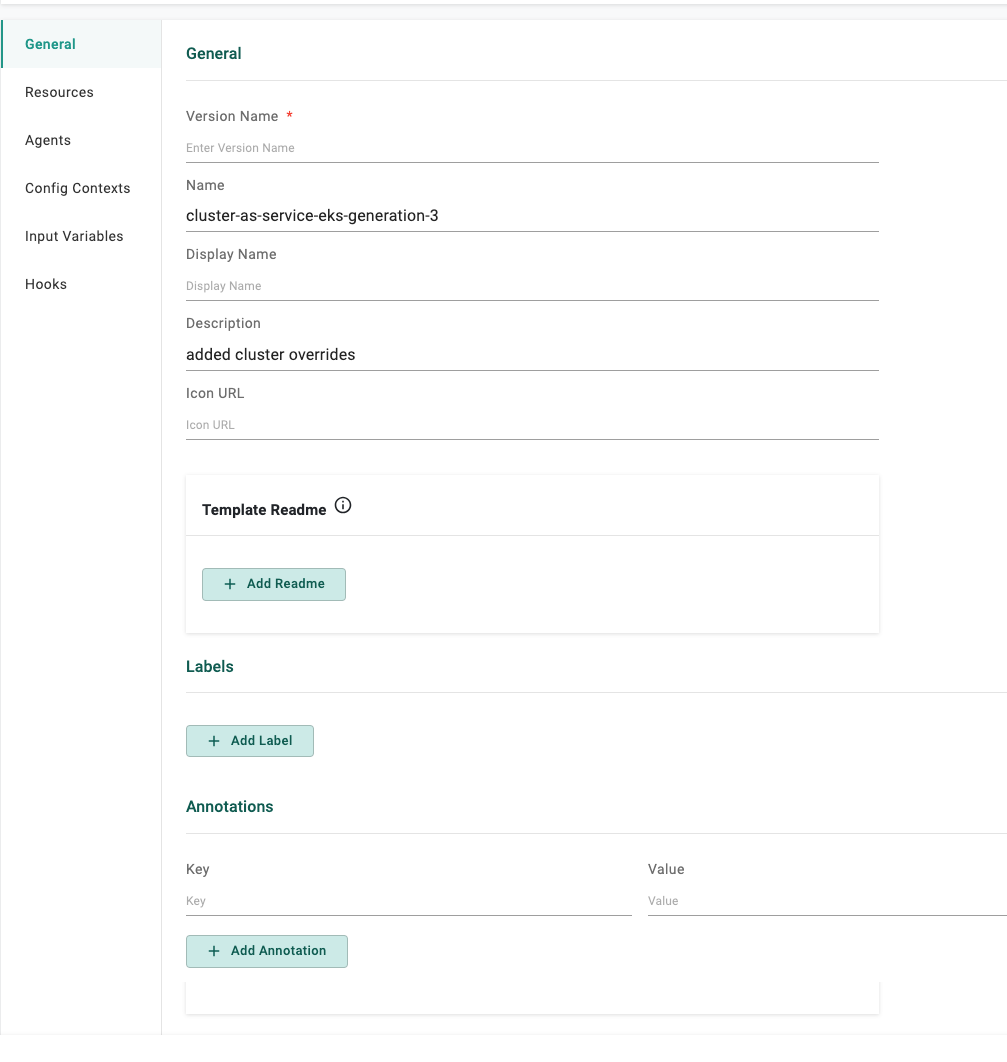

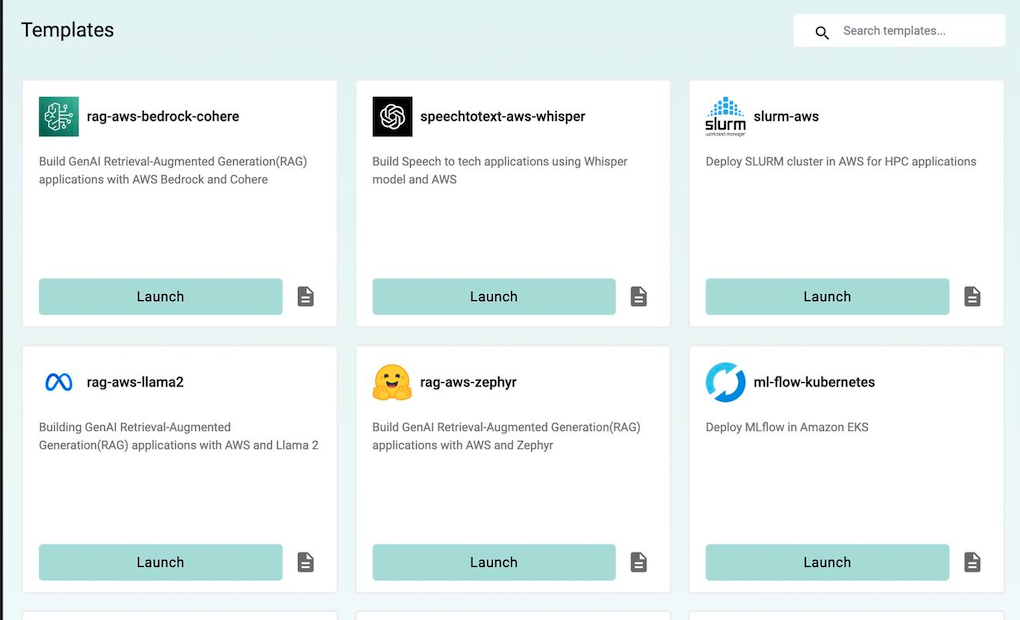

Ability for Platform Admins to add a display name, icon, category (from a pre-canned list) and README for the templates

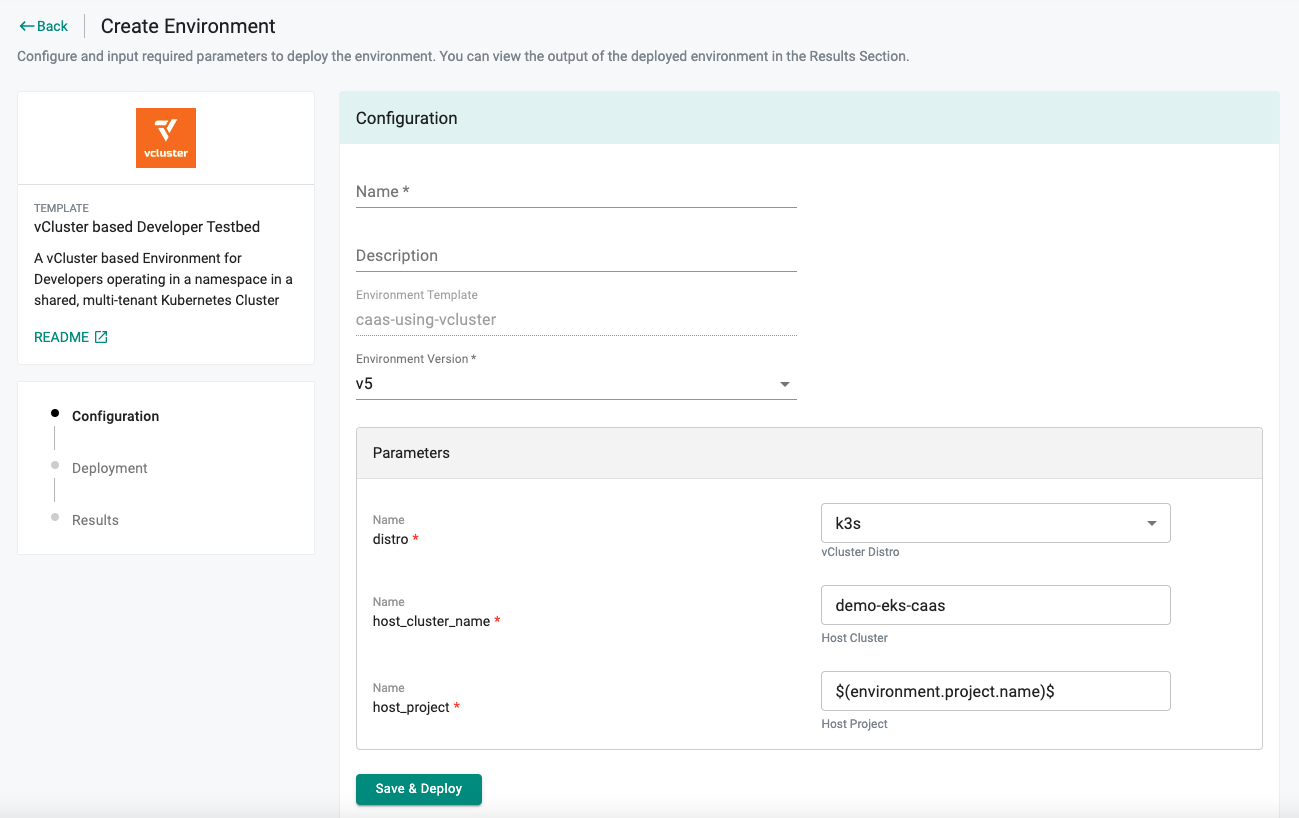

- Improved end user experience which makes it extremely simple for developers/data scientists to configure necessary input parameters and deploy environments based on Templates

v1.1.23 - Terraform Provider

25 Jan, 2024

An updated version of the Terraform provider is now available.

This release includes enhancements to the resources listed below:

Existing Resources

- resource_aks_cluster: System components placement support has been added
- resource_aks_cluster_v3: System components placement support has been added to the v3 resource
- rafay_namespace: GPU Resource Quota (requests and limits) support has been added to this resource
- rafay_project: GPU Resource Quota (requests and limits) support has been added to this resource
- rafay_blueprint: CSI secret store configuration support has been added to this resource
- rafay_blueprint: sync type support (Managed or Unmanaged) has been added to this resource

v2.3 - SaaS

19 Jan, 2024

The section below provides a brief description of the new functionality and enhancements in this release.

Environment Manager¶

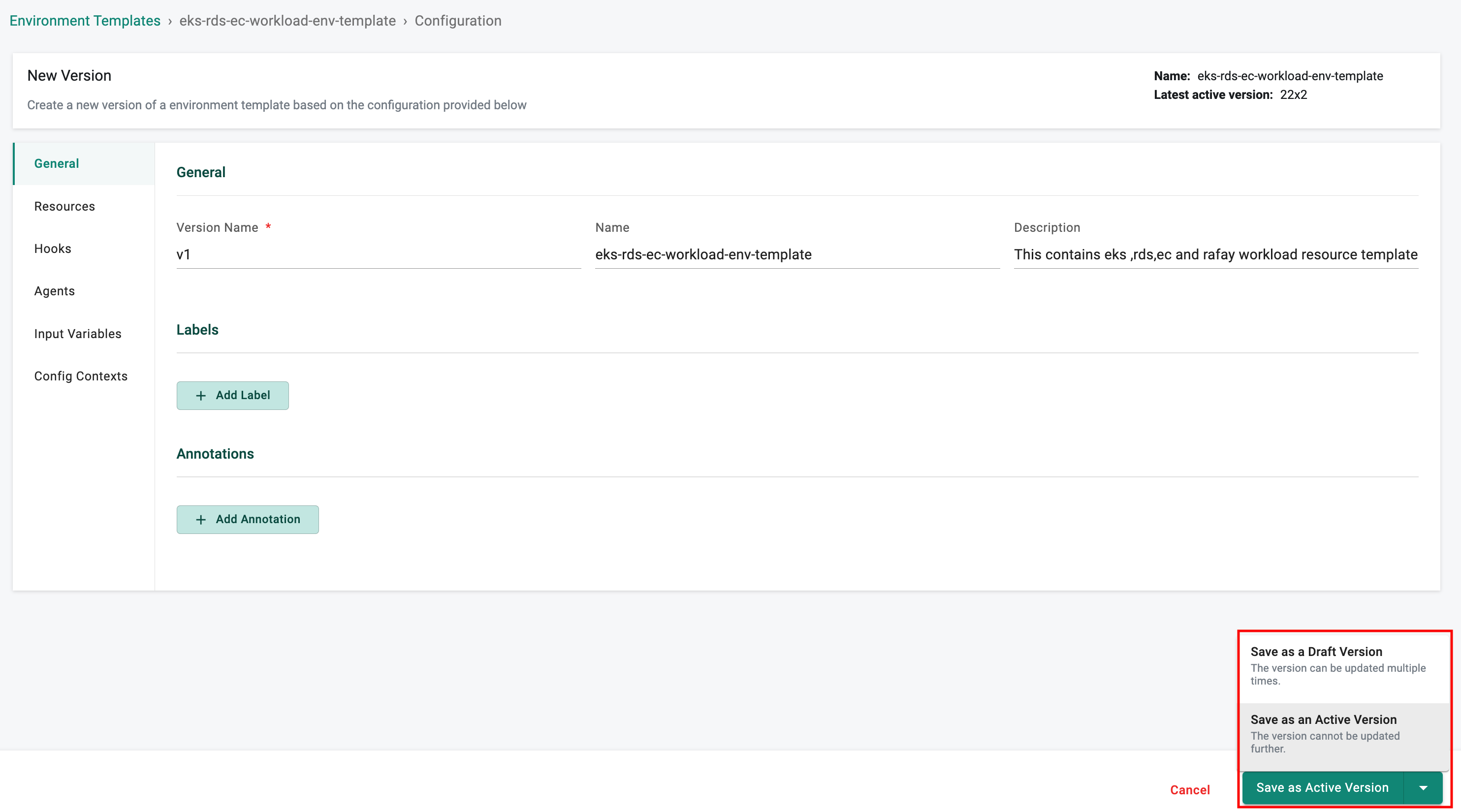

Version Control: Resource Templates and Environment Templates¶

Versions of resource templates and environment templates can now be marked as 'Draft' or 'Disabled'.

- Draft Versions: This feature has two benefits. First, it lets the platform team modify a template multiple times during the testing/validation phase without necessarily having to create a new version; once all the required changes have been made, the version can be marked as 'Active'. Second, Draft versions are project scoped, which means they are not shared with downstream projects. This ensures that only fully vetted templates explicitly marked as Active are available to downstream projects/users.
- Disable Versions: This helps platform teams ensure that downstream users create new environments only from the currently blessed versions of templates, while older versions can be retired/made unavailable.

More information on this feature can be found here.
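The Draft/Active/Disabled lifecycle described above can be pictured as a small state model. The Python sketch below is illustrative only: `TemplateVersion`, `VersionState` and the project names are hypothetical and are not a Rafay API. It also assumes two things the release note does not state: that Active versions are no longer edited in place, and that "shared with downstream projects" can be simplified to "usable by every project".

```python
from dataclasses import dataclass
from enum import Enum


class VersionState(Enum):
    DRAFT = "draft"        # editable, visible only in the owning project
    ACTIVE = "active"      # vetted and shared with downstream projects
    DISABLED = "disabled"  # retired: not offered for new environments


@dataclass
class TemplateVersion:
    """Hypothetical model of a resource/environment template version."""
    name: str
    owner_project: str
    spec: str = ""
    state: VersionState = VersionState.DRAFT

    def edit(self, new_spec: str) -> None:
        # Assumption: only drafts are modified in place during testing/validation.
        if self.state is not VersionState.DRAFT:
            raise ValueError(f"{self.name} is {self.state.value}; only drafts are editable")
        self.spec = new_spec

    def activate(self) -> None:
        if self.state is not VersionState.DRAFT:
            raise ValueError("only a draft version can be marked active")
        self.state = VersionState.ACTIVE

    def disable(self) -> None:
        self.state = VersionState.DISABLED

    def usable_by(self, project: str) -> bool:
        """Can `project` create a new environment from this version?"""
        if self.state is VersionState.DRAFT:
            return project == self.owner_project  # drafts are project scoped
        return self.state is VersionState.ACTIVE  # simplification: active = shared everywhere


v = TemplateVersion("v2", owner_project="platform")
v.edit("add GPU node pool")   # iterate on the draft without creating a new version
print(v.usable_by("team-a"))  # False: drafts are not shared downstream
v.activate()
print(v.usable_by("team-a"))  # True
v.disable()
print(v.usable_by("team-a"))  # False: retired
```

The key design point is that visibility depends on state: a draft is only reachable from its owning project, so downstream users never see a version that is still being iterated on.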

Amazon EKS¶

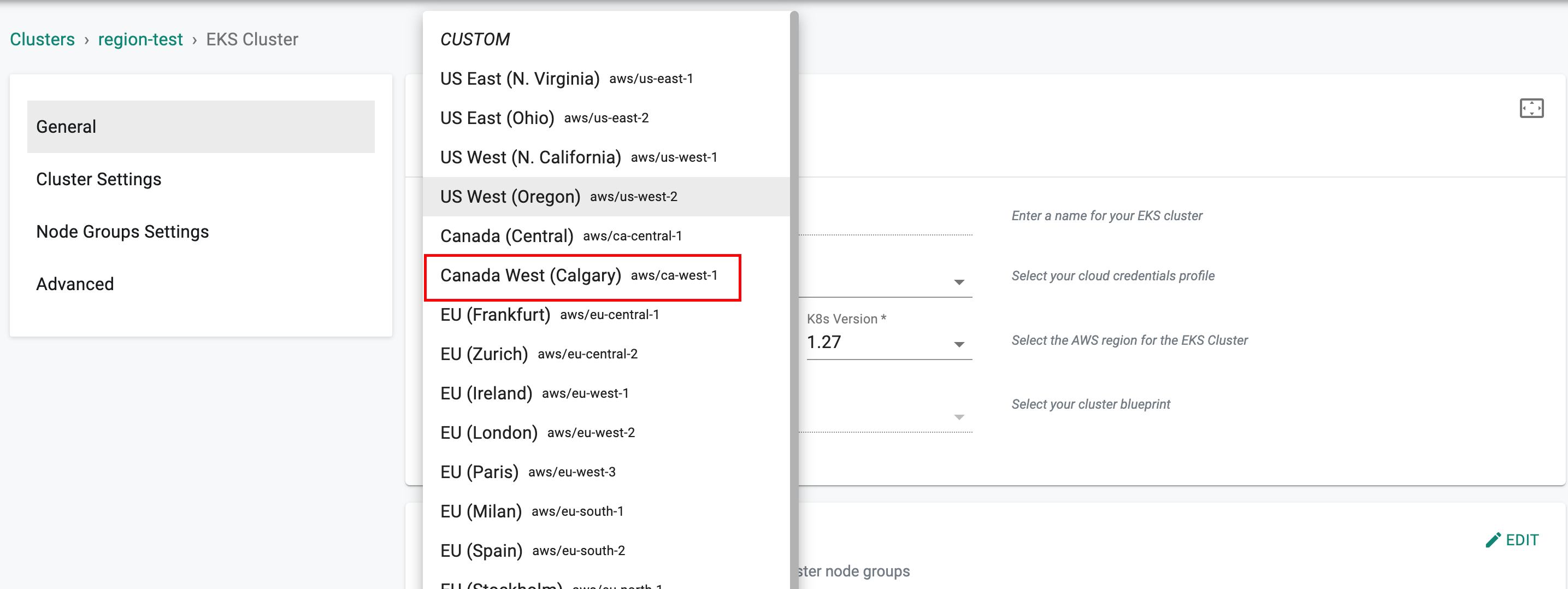

Support for additional region¶

Support for Canada West (Calgary) region has been added with this release.

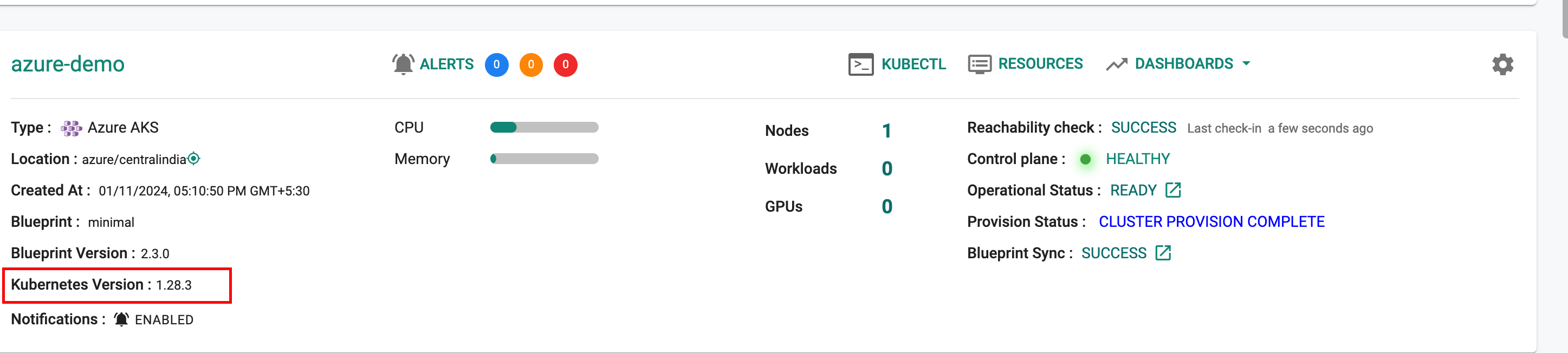

Azure AKS

Kubernetes v1.28

New AKS clusters can now be provisioned based on Kubernetes v1.28. Existing clusters managed by the controller can be upgraded "in-place" to Kubernetes v1.28.

For deeper insights into running AKS v1.28 clusters with Rafay, see our blog post here.

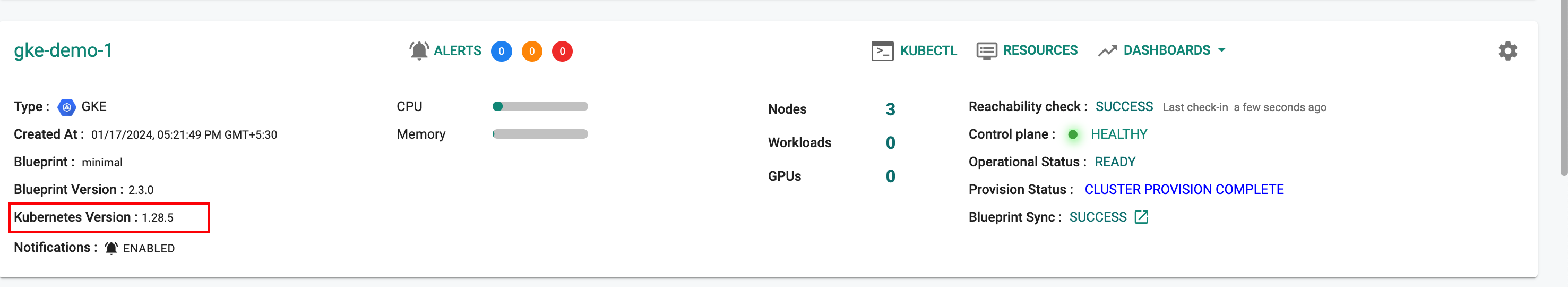

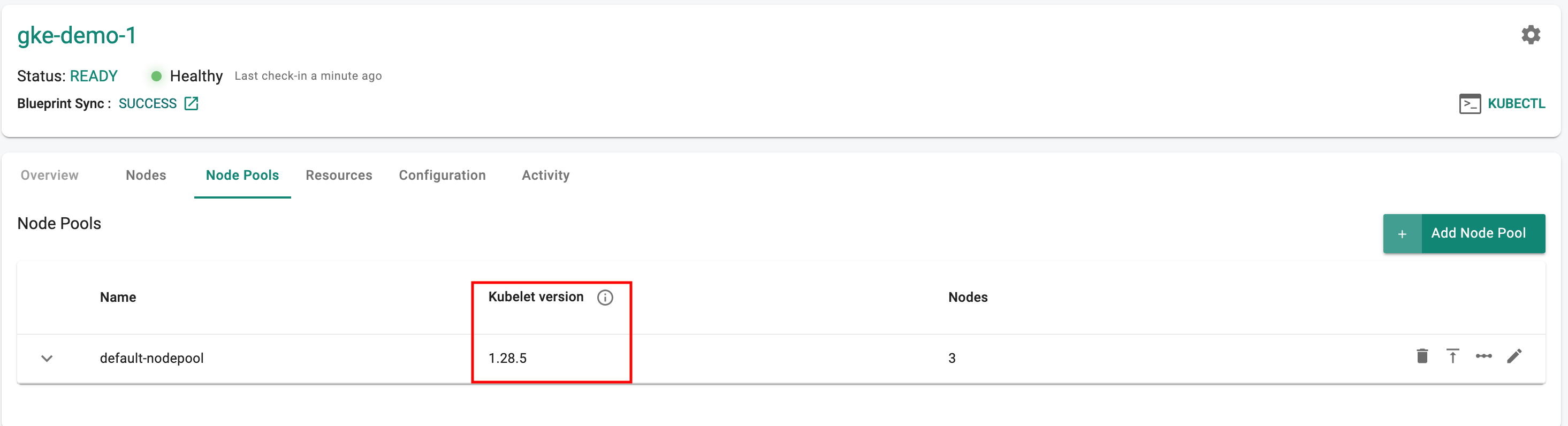

Google GKE

Kubernetes v1.28

New GKE clusters can now be provisioned based on Kubernetes v1.28. Existing clusters managed by the controller can be upgraded "in-place" to Kubernetes v1.28.

For deeper insights into running GKE v1.28 clusters with Rafay, see our blog post here.

Upstream Kubernetes

Retry Provisioning for Failed Nodes

This feature addresses provisioning challenges in large clusters with tens of worker nodes. If certain worker nodes fail to provision during cluster setup (Day 0), you can trigger a retry for only those worker nodes.

For example, in a cluster with 20 nodes (1 master and 19 workers), if some worker nodes fail during the initial setup, re-triggering provisioning retries only the worker nodes that previously failed.

Note that this enhancement targets worker node provisioning failures only. It does not extend to master node provisioning failures, including those in a High Availability (HA) setup with multiple master nodes. We hope this improvement enhances your experience with Upstream Kubernetes.
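The retry rule above amounts to a simple selection: re-provision failed workers, and refuse when a master failed. The Python sketch below models that rule under stated assumptions; the `Node` record, its `role`/`status` values and `nodes_to_retry` are hypothetical names, not Rafay's implementation or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    hostname: str
    role: str    # assumed values: "master" or "worker"
    status: str  # assumed values: "ready" or "failed"


def nodes_to_retry(nodes: list[Node]) -> list[str]:
    """Return the worker nodes a retry would re-provision.

    Raises if any master failed, because the retry does not cover
    master node provisioning failures.
    """
    failed_masters = [n.hostname for n in nodes if n.role == "master" and n.status == "failed"]
    if failed_masters:
        raise RuntimeError(f"master provisioning failed on {failed_masters}; worker retry does not apply")
    return [n.hostname for n in nodes if n.role == "worker" and n.status == "failed"]


# The example from the text: 1 master and 19 workers, two of which failed.
cluster = [Node("master-1", "master", "ready")] + [
    Node(f"worker-{i}", "worker", "failed" if i in (7, 13) else "ready")
    for i in range(1, 20)
]
print(nodes_to_retry(cluster))  # ['worker-7', 'worker-13']
```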

VMware vSphere

UI improvements

This release adds a search bar to the dropdown list on the vSphere Cluster Configuration page for a more streamlined UX.

Blueprints

Audit Logs

A number of improvements have been made to audit logs for Blueprints. Examples include:

- Tagging a blueprint update event on a cluster appropriately

- Capturing version details as part of a new blueprint creation event

- Capturing addition of labels to an add-on as part of the audit event

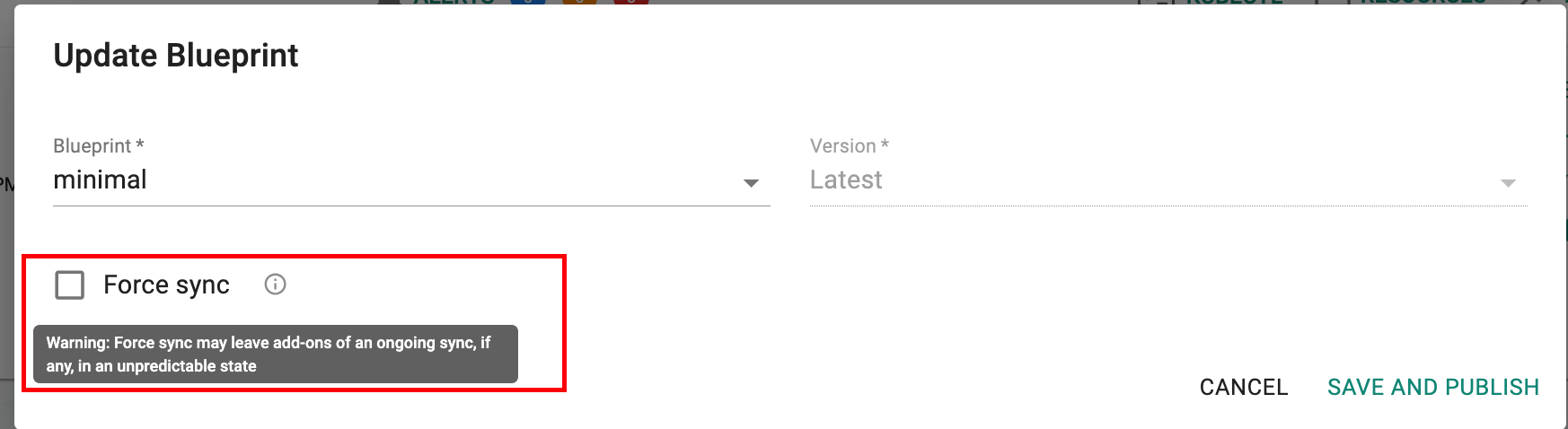

Force Sync

A Force Sync option is now available that lets users re-trigger a blueprint sync on a cluster even while a previous blueprint sync is still in progress. For example, this is useful when a user discovers an incorrect configuration in the blueprint being applied and wants to apply a new blueprint without waiting for the previous sync operation to complete.
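The difference between a regular sync and Force Sync can be shown with a toy state model. This Python sketch is a hypothetical illustration (`BlueprintSync` and the blueprint names are invented); it assumes that a forced sync simply supersedes the running one, and it does not describe how the controller actually cancels or reconciles an in-flight sync.

```python
from typing import Optional


class BlueprintSync:
    """Toy model of blueprint sync on one cluster (hypothetical, not a Rafay API)."""

    def __init__(self) -> None:
        self.in_progress: Optional[str] = None  # blueprint currently being applied
        self.superseded: list[str] = []         # syncs replaced by a Force Sync

    def sync(self, blueprint: str, force: bool = False) -> None:
        if self.in_progress is not None:
            if not force:
                # A regular sync has to wait for the running one to finish.
                raise RuntimeError(f"sync of {self.in_progress!r} is still in progress")
            # Force Sync: stop waiting on the running sync and apply the new blueprint.
            self.superseded.append(self.in_progress)
        self.in_progress = blueprint

    def complete(self) -> None:
        self.in_progress = None


cluster = BlueprintSync()
cluster.sync("bp-v1")              # contains an incorrect configuration
try:
    cluster.sync("bp-v2")          # rejected while bp-v1 is still syncing
except RuntimeError as err:
    print(err)
cluster.sync("bp-v2", force=True)  # re-triggered without waiting
print(cluster.in_progress, cluster.superseded)  # bp-v2 ['bp-v1']
```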

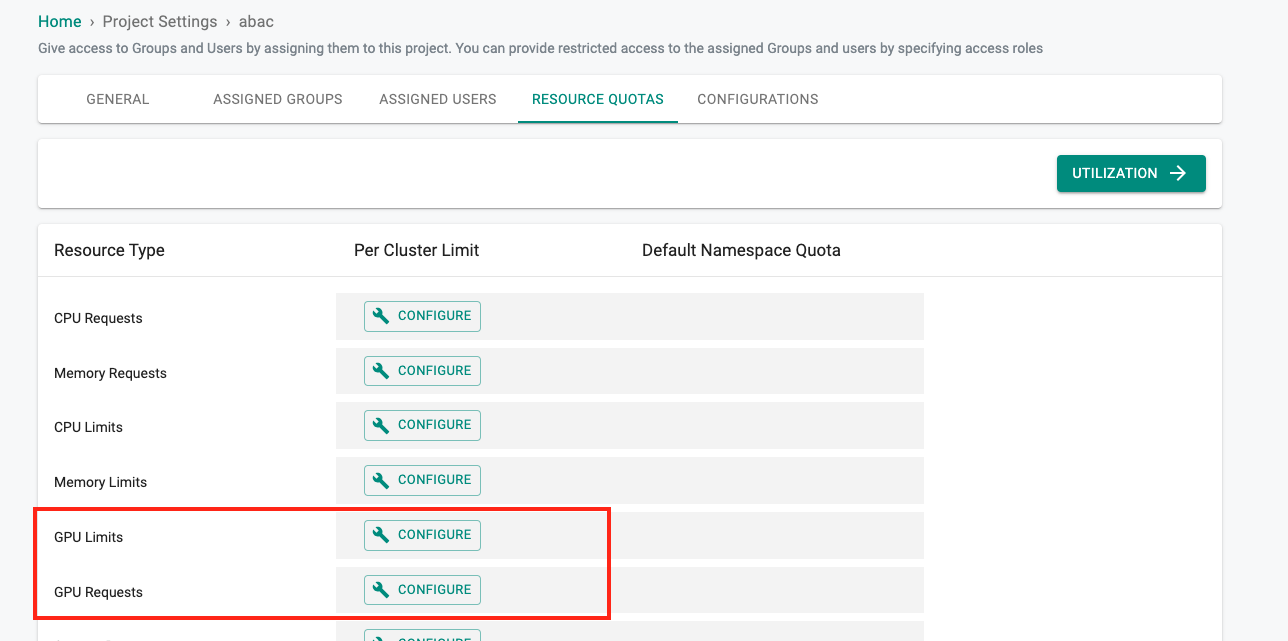

Multi-tenancy

Resource Quotas - GPU

With this release, GPU requests and limits can be specified at both the project and namespace level. This lets the Platform team apply the same guardrails to GPUs as to other resources when providing 'Workspace as a Service' or 'Namespace as a Service' to downstream teams.
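One way to reason about project-level and namespace-level GPU quotas is as a consistency check. The Python sketch below assumes, without confirmation from the release note, that the namespace quotas in a project must add up to no more than the project quota and that each namespace's requests must not exceed its limits. `GpuQuota`, `check_gpu_quotas` and the team names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuQuota:
    requests: int  # GPUs that workloads may request in total
    limits: int    # ceiling on total GPU limits


def check_gpu_quotas(project: GpuQuota, namespaces: dict[str, GpuQuota]) -> list[str]:
    """Return guardrail violations; an empty list means the quotas are consistent."""
    problems = []
    for name, q in namespaces.items():
        if q.requests > q.limits:
            problems.append(f"{name}: GPU requests ({q.requests}) exceed limits ({q.limits})")
    total_requests = sum(q.requests for q in namespaces.values())
    total_limits = sum(q.limits for q in namespaces.values())
    # Assumption: namespace quotas in a project must fit inside the project quota.
    if total_requests > project.requests:
        problems.append(f"namespace GPU requests ({total_requests}) exceed project quota ({project.requests})")
    if total_limits > project.limits:
        problems.append(f"namespace GPU limits ({total_limits}) exceed project quota ({project.limits})")
    return problems


project = GpuQuota(requests=8, limits=8)
namespaces = {"team-a": GpuQuota(4, 4), "team-b": GpuQuota(6, 4)}
for problem in check_gpu_quotas(project, namespaces):
    print(problem)
```

Here team-b is flagged for requesting more than its limit, and the namespace requests together (10) exceed the project's 8 GPUs; the combined limits (8) fit exactly.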

Namespaces¶

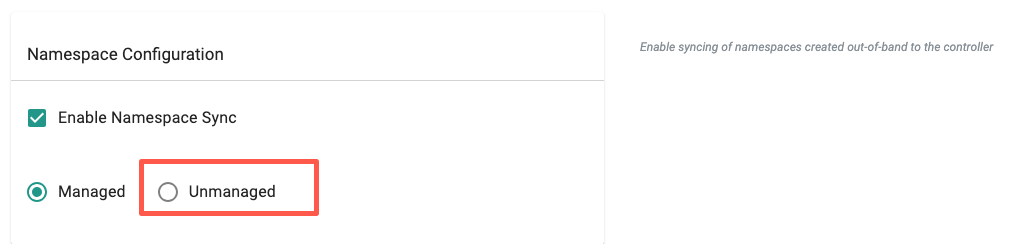

Unmanaged Mode¶

Namespace Sync capability allows syncing of pre-existing namespaces or namespaces created out-of-band to the controller. Any namespaces synced back to the controller were previously treated as "Managed namespaces" i.e. no out of band operations (e.g. delete) are allowed on those namespaces.

This release adds support for syncing namespaces in Unmanaged mode. This allows management of lifecycle of these namespaces out of band while being able to reference these namespaces for operations such as role assignment/Zero-Trust Access, add-on deployment.

Important

Upgrading the blueprint to base version 2.3 or later is a prerequisite for this feature.

More information on this feature can be found here.
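The distinction between the two sync modes, plus the base-version prerequisite, can be summarized in a few lines. This Python sketch is illustrative: `NamespaceMode`, `CAPABILITIES` and the helper functions are hypothetical, and the version parser assumes a plain numeric "major.minor[.patch]" string.

```python
from enum import Enum


class NamespaceMode(Enum):
    MANAGED = "managed"      # lifecycle owned by the controller
    UNMANAGED = "unmanaged"  # lifecycle managed out of band


# What each sync mode permits, per the description above.
CAPABILITIES = {
    NamespaceMode.MANAGED: {"out_of_band_lifecycle": False, "referenceable": True},
    NamespaceMode.UNMANAGED: {"out_of_band_lifecycle": True, "referenceable": True},
}


def unmanaged_sync_supported(blueprint_base_version: str) -> bool:
    """Prerequisite: blueprint base version 2.3 or later (assumes 'major.minor[.patch]')."""
    major, minor = (int(part) for part in blueprint_base_version.split(".")[:2])
    return (major, minor) >= (2, 3)


def can_delete_out_of_band(mode: NamespaceMode) -> bool:
    # e.g. deleting the namespace directly on the cluster instead of via the controller
    return CAPABILITIES[mode]["out_of_band_lifecycle"]


print(unmanaged_sync_supported("2.2"), unmanaged_sync_supported("2.10"))  # False True
print(can_delete_out_of_band(NamespaceMode.MANAGED))    # False
print(can_delete_out_of_band(NamespaceMode.UNMANAGED))  # True
```

Comparing versions as integer tuples rather than strings avoids the common bug where "2.10" sorts before "2.3".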

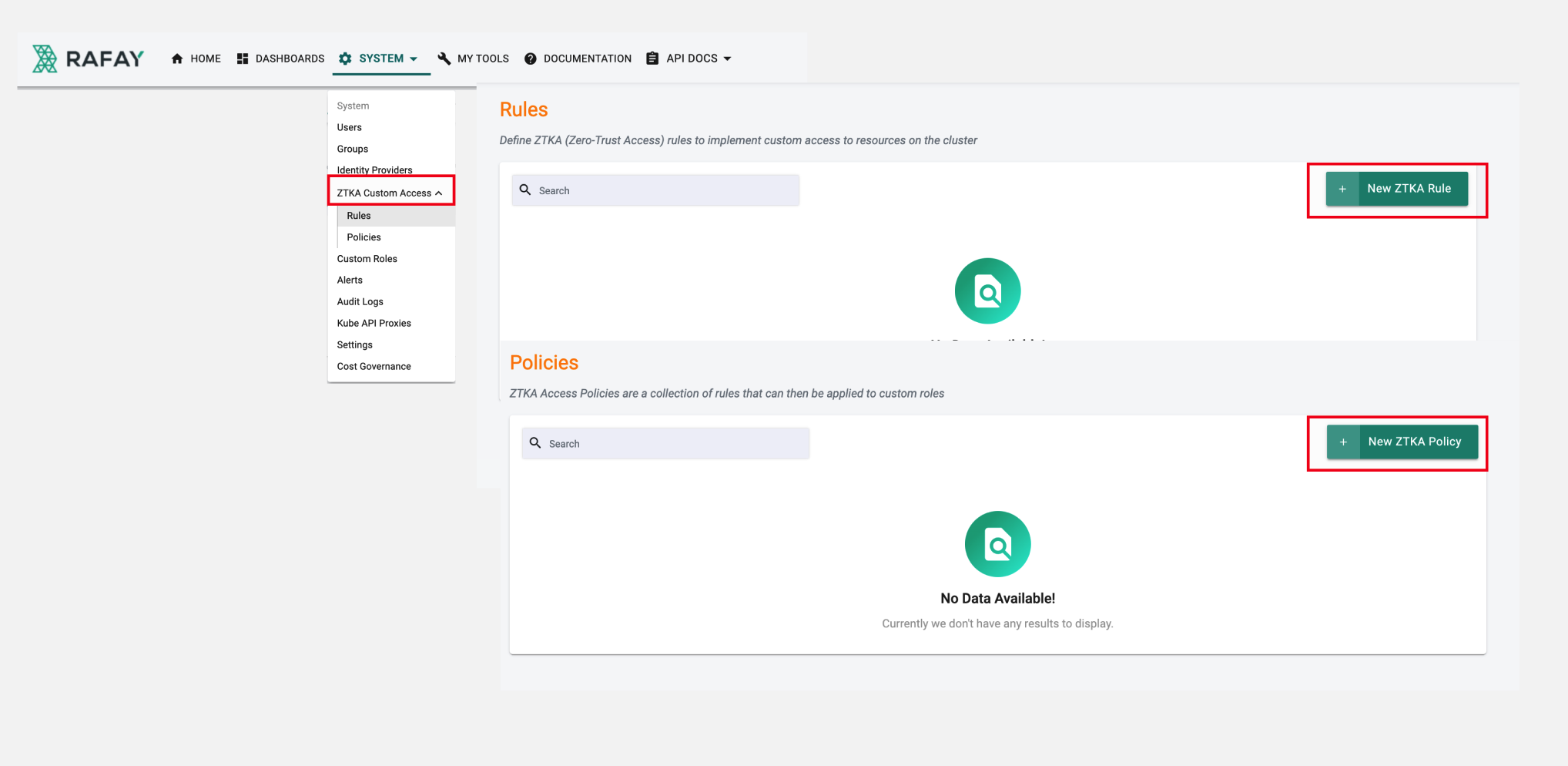

Zero-Trust Access

Custom RBAC Support

In some scenarios, users need access policies that are more fine-grained than the platform's base roles provide. ZTKA (Zero-Trust) Custom Access enables customers to define custom RBAC definitions that control the access users have to the clusters in their organization.

For example, users could be restricted to read-only access (get, list, watch verbs) for certain resources (e.g., pods, secrets) in a specific namespace.

More information on this feature can be found here.
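The read-only example above corresponds to a standard Kubernetes RBAC `Role`. The Python sketch below only builds that standard manifest shape (printed as JSON, which Kubernetes tooling such as `kubectl apply -f` accepts); the role name `pod-secret-reader` and namespace `payments` are hypothetical, and how ZTKA Custom Access ingests custom definitions is not covered here. In plain Kubernetes, a `RoleBinding` would also be needed to grant the role to users.

```python
import json


def read_only_role(name: str, namespace: str, resources: list[str]) -> dict:
    """Build a Kubernetes RBAC Role granting read-only verbs on the given resources."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",  # namespaced; a ClusterRole would apply cluster-wide
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # core API group, where pods and secrets live
                "resources": resources,
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


role = read_only_role("pod-secret-reader", "payments", ["pods", "secrets"])
print(json.dumps(role, indent=2))
```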

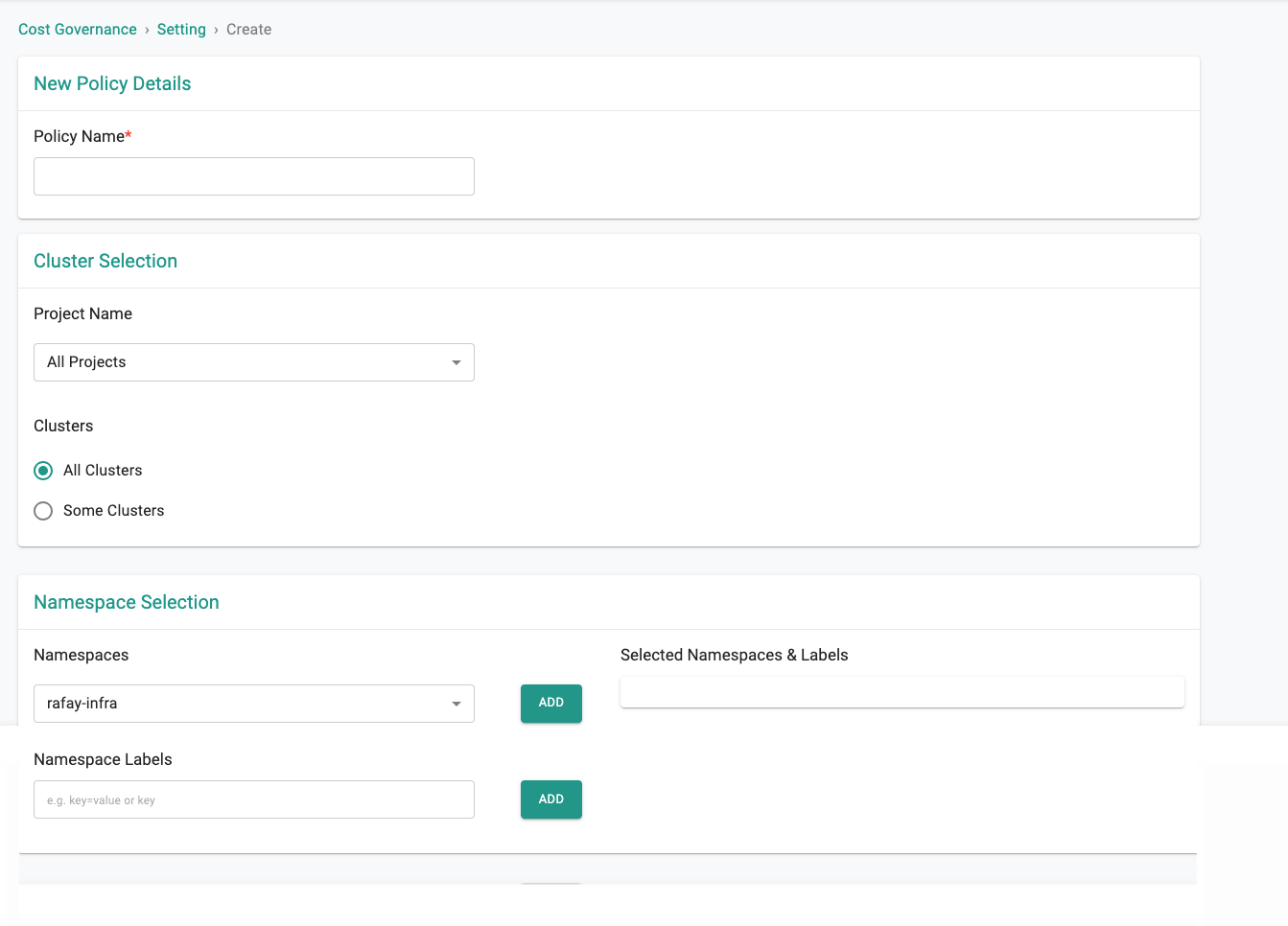

Cost Management

Chargeback Improvements

A previous release added the ability to identify common services by configuring a namespace list/labels, and to share the cost of those common services among teams/applications when generating chargeback/showback reports through RCTL, the Swagger API and the Terraform Provider. This release adds the same capability to the UI.

More information on this feature can be found here.
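To make "sharing the cost of common services" concrete, the Python sketch below identifies common-service namespaces from an explicit list or a label selector and folds their cost into the remaining namespaces. The proportional split is an assumption chosen for illustration (the release note does not say which sharing strategy the platform applies), and the `cost-share: common` label and namespace names are hypothetical.

```python
def select_common(ns_labels: dict[str, dict[str, str]], listed: set[str],
                  selector: dict[str, str]) -> set[str]:
    """Common-service namespaces: those listed explicitly, plus those whose labels match the selector."""
    matched = {
        ns for ns, labels in ns_labels.items()
        if selector and all(labels.get(k) == v for k, v in selector.items())
    }
    return listed | matched


def share_common_costs(costs: dict[str, float], common: set[str]) -> dict[str, float]:
    """Spread common-service cost over the other namespaces in proportion to their own cost."""
    pool = sum(c for ns, c in costs.items() if ns in common)
    direct = {ns: c for ns, c in costs.items() if ns not in common}
    total = sum(direct.values())
    if total == 0:
        # No basis for a proportional split: divide the pool evenly instead.
        even = pool / len(direct) if direct else 0.0
        return {ns: c + even for ns, c in direct.items()}
    return {ns: c + pool * c / total for ns, c in direct.items()}


labels = {"ingress": {"cost-share": "common"}, "team-a": {}, "team-b": {}, "monitoring": {}}
costs = {"team-a": 300.0, "team-b": 100.0, "monitoring": 40.0, "ingress": 60.0}
common = select_common(labels, listed={"monitoring"}, selector={"cost-share": "common"})
print(share_common_costs(costs, common))  # {'team-a': 375.0, 'team-b': 125.0}
```

The shared pool here is 100 (monitoring plus ingress); team-a carries 75% of the direct cost, so it absorbs 75 of the pool, and the total across teams still equals the original 500.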

OpenCost

The OpenCost version installed with blueprint base version 2.3 has been updated to v1.106.3.

v2.3 Bug Fixes

| Bug ID | Description |
|---|---|
| RC-24688 | Network policy dashboard fails to load when a namespace is in a failed state |
| RC-31154 | Incorrect Day2 Operation Computation affecting Blueprint sync update |
| RC-30951 | Applying EKS cluster spec with changes to cluster labels causes injection of blueprint rafay.dev labels into cluster config |
| RC-29710 | OpenCost reports $0 as Node Hourly Cost for AWS Clusters |
| RC-29448 | Pre-flight check failed error during AKS upgrade is not communicated to the user |
| RC-22368 | UI: "Select all" under placement doesn't publish namespace to non-provisioned clusters |
| RC-31753 | DaemonSet aws-node-termination-handler-v3 and rafay-prometheus-node-exporter updateStrategy maxUnavailable set to 1 |
| RC-31983 | Nil pointer error occurs when downloading the kubeconfig |
| RC-27381 | RCTL: No error message when using "rctl convert2managed cluster eks" even when the cluster fails to convert due to errors like IRSA account check failure |