Built-in variables

Some deployments need a cluster name or other cluster-specific values (based on defined cluster labels) injected into a manifest dynamically as it is deployed to the cluster. The controller's built-in variables serve this purpose. The syntax below can be used either in a cluster override or in the manifest itself.

Cluster name

{{{ .global.Rafay.ClusterName }}}

For example, deploying the AWS Load Balancer Controller Helm chart requires "clusterName" to be configured. The following cluster override sets it.

clusterName: {{{ .global.Rafay.ClusterName }}}

Cluster labels

{{{ .global.Rafay.ClusterLabels.<label_key> }}}

For example, to configure "region" dynamically from a pre-defined cluster label (e.g. "awsRegion"), use the following cluster override.

region: {{{ .global.Rafay.ClusterLabels.awsRegion }}}
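To make the substitution concrete, the sketch below previews locally what an override would look like once the variables are resolved. It only mimics the behavior with `sed`; it is not how the controller implements it. The helper name `render_override`, its arguments, and the sample label value `us-west-1` are assumptions for illustration.

```shell
#!/bin/sh
# Local preview only: imitates built-in variable substitution with sed.
# render_override is a hypothetical helper, not part of any CLI.
# Assumes values contain no "|", "&" or backslash characters.
#
# usage: render_override <cluster-name> <label-key> <label-value> < override.yaml
render_override() {
  cluster_name=$1
  label_key=$2
  label_value=$3
  sed \
    -e "s|{{{ *\.global\.Rafay\.ClusterName *}}}|${cluster_name}|g" \
    -e "s|{{{ *\.global\.Rafay\.ClusterLabels\.${label_key} *}}}|${label_value}|g"
}

# Example: render the two overrides shown above for one cluster.
printf '%s\n' \
  'clusterName: {{{ .global.Rafay.ClusterName }}}' \
  'region: {{{ .global.Rafay.ClusterLabels.awsRegion }}}' |
  render_override demo-eks-mango awsRegion us-west-1
```

Because the same override text resolves differently on each cluster, a single override can be shared across many clusters without hard-coding per-cluster values.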

Illustrative example

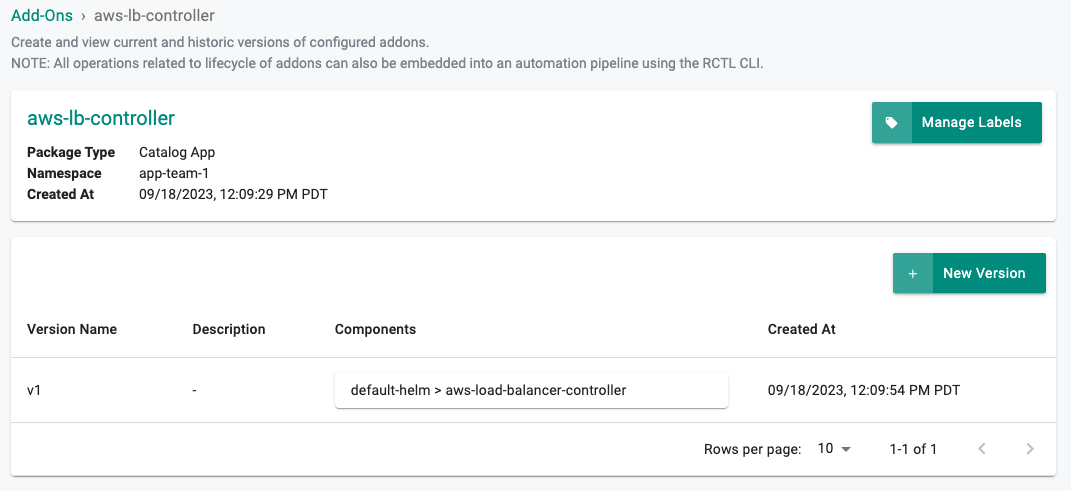

This example uses the AWS Load Balancer Controller Helm chart. The add-on named "aws-lb-controller" is configured with the Helm chart provided by AWS. The chart requires "clusterName" to be set before it is deployed; by default, the value is left blank inside the chart. A cluster override sets the value when the Helm chart is deployed.

Step 1: Create Add-on

As an Admin in the console,

- Navigate to the Project

- Click on Add-Ons under Infrastructure. Select Create New Add-On from Catalog

- Search for "aws-load-balancer-controller"

- Click Create Add-On

- Provide a name for the add-on (e.g. aws-lb-controller) and select the namespace

- Click Create

- Provide a version name (e.g. v1) and click Save Changes

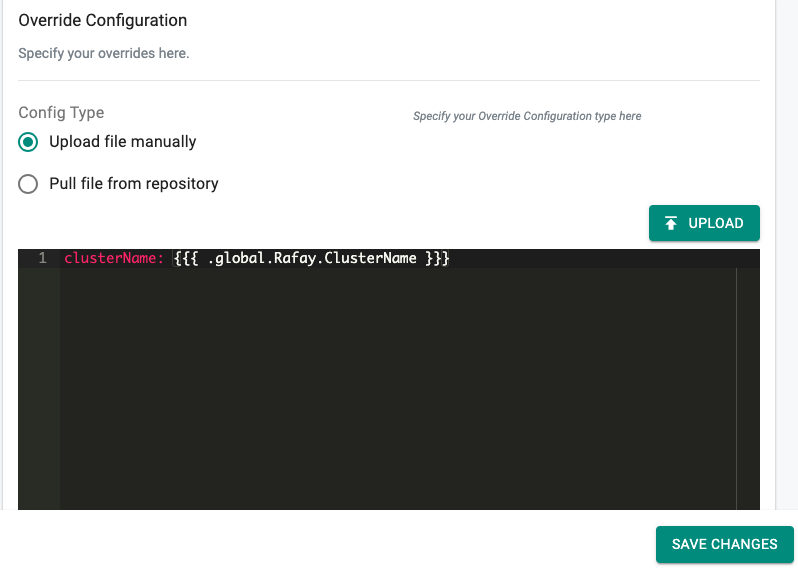

Step 2: Create Cluster Override

- Navigate to the Project

- Click on Cluster Overrides under Infrastructure. The Cluster Overrides page appears

- Click New Override and provide a name (e.g. aws-lb)

- Select the required File Type (Helm) and click Create

- For the Resource Selector, select the add-on for which the cluster override will be applied (e.g. aws-lb-controller)

- Select Specific Clusters as Type and select the required cluster(s) for which the cluster override will be applied

- Add the Override Value directly in the config screen, for example clusterName: {{{ .global.Rafay.ClusterName }}}

- Click Save Changes

Step 3: Deploy the Add-on

Deploy the blueprint containing the add-on to the cluster so that the newly created cluster override is applied.

Step 4: Verify the cluster override has been applied to the deployment

    kubectl describe pod -n kube-system aws-lb-controller-aws-load-balancer-controller-f5f6d6b47-9kjkl

    Name:         aws-lb-controller-aws-load-balancer-controller-f5f6d6b47-9kjkl
    Namespace:    kube-system
    Priority:     0
    Node:         ip-172-31-114-123.us-west-1.compute.internal/172.31.114.123
    Start Time:   Mon, 18 Sep 2023 19:17:04 +0000
    Labels:       app.kubernetes.io/instance=aws-lb-controller
                  app.kubernetes.io/name=aws-load-balancer-controller
                  envmgmt.io/workload-type=ClusterSelector
                  k8smgmt.io/project=mango
                  pod-template-hash=f5f6d6b47
                  rep-addon=aws-lb-controller
                  rep-cluster=pk0d152
                  rep-drift-reconcillation=enabled
                  rep-organization=d2w714k
                  rep-partner=rx28oml
                  rep-placement=k69rynk
                  rep-project=lk5rdw2
                  rep-project-name=mango
                  rep-workloadid=kv6p0vm
    Annotations:  kubernetes.io/psp: rafay-kube-system-psp
                  prometheus.io/port: 8080
                  prometheus.io/scrape: true
    Status:       Running
    IP:           172.31.103.206
    IPs:
      IP:           172.31.103.206
    Controlled By:  ReplicaSet/aws-lb-controller-aws-load-balancer-controller-f5f6d6b47
    Containers:
      aws-load-balancer-controller:
        Container ID:  docker://e115a56b7444ea55bda8f2503b9b046d6fd84dbffd3cbf77090f35f35c2657ef
        Image:         602401143452.dkr.ecr.us-west-2.amazonaws.com/amazon/aws-load-balancer-controller:v2.1.3
        Image ID:      docker-pullable://602401143452.dkr.ecr.us-west-2.amazonaws.com/amazon/aws-load-balancer-controller@sha256:c7981cc4bb73a9ef5d788a378db302c07905ede035d4a529bfc3afe18b7120ef
        Ports:         9443/TCP, 8080/TCP
        Host Ports:    0/TCP, 0/TCP
        Command:
          /controller
        Args:
          --cluster-name=demo-eks-mango
          --ingress-class=alb
        State:          Running
          Started:      Mon, 18 Sep 2023 19:17:36 +0000
        Ready:          True
        Restart Count:  0
        Liveness:       http-get http://:61779/healthz delay=30s timeout=10s period=10s #success=1 #failure=2
        Environment:    <none>
        Mounts:
          /tmp/k8s-webhook-server/serving-certs from cert (ro)
          /var/run/secrets/kubernetes.io/serviceaccount from aws-lb-controller-aws-load-balancer-controller-token-dllmd (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      cert:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  aws-load-balancer-tls
        Optional:    false
      aws-lb-controller-aws-load-balancer-controller-token-dllmd:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  aws-lb-controller-aws-load-balancer-controller-token-dllmd
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                     node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type    Reason     Age    From               Message
      ----    ------     ----   ----               -------
      Normal  Scheduled  3m27s  default-scheduler  Successfully assigned kube-system/aws-lb-controller-aws-load-balancer-controller-f5f6d6b47-9kjkl to ip-172-31-114-123.us-west-1.compute.internal
      Normal  Pulling    3m26s  kubelet            Pulling image "602401143452.dkr.ecr.us-west-2.amazonaws.com/amazon/aws-load-balancer-controller:v2.1.3"
      Normal  Pulled     3m22s  kubelet            Successfully pulled image "602401143452.dkr.ecr.us-west-2.amazonaws.com/amazon/aws-load-balancer-controller:v2.1.3" in 4.252275657s
      Normal  Created    3m21s  kubelet            Created container aws-load-balancer-controller
      Normal  Started    3m21s  kubelet            Started container aws-load-balancer-controller
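
The line to look for in the output above is `--cluster-name=demo-eks-mango` under Args, which shows that the override resolved to the cluster's name. As a minimal sketch, the hypothetical helper below (`extract_cluster_name` is not part of kubectl) pulls just that value out of the describe output so it can be checked without scanning the full listing.

```shell
#!/bin/sh
# Reads `kubectl describe pod` output on stdin and prints the value passed
# to the controller's --cluster-name argument (prints nothing if absent).
# extract_cluster_name is a hypothetical helper name.
extract_cluster_name() {
  sed -n 's/^[[:space:]]*--cluster-name=//p'
}

# Example against a live cluster (pod name taken from the output above;
# substitute the name of your own pod):
#   kubectl describe pod -n kube-system \
#     aws-lb-controller-aws-load-balancer-controller-f5f6d6b47-9kjkl |
#     extract_cluster_name
```

If the printed value matches the name of the target cluster, the built-in variable was substituted as intended; an empty result suggests the override was not applied to that cluster.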