Part 4: Expand

What You Will Do

This is Part 4 of a multi-part, self-paced quick start exercise. In this part, you will attach additional raw storage devices to the storage nodes and confirm that the managed storage addon automatically detects them and provisions them as part of the Rook Ceph cluster.

Confirm Storage Devices

First, you will confirm the storage devices attached to your storage nodes.

- Open a shell on each storage node in the cluster

- Execute the following command to list all block devices

```shell
lsblk -a
```

The output will be similar to the following. In this example, `sdb` and `sdc` are already in use by Ceph: each carries an OSD logical volume (`lvm`) with an encrypted (`crypt`) device on top.

```
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
loop0 7:0 0 55.6M 1 loop /snap/core18/2667
loop1 7:1 0 55.6M 1 loop /snap/core18/2697
loop2 7:2 0 63.3M 1 loop /snap/core20/1778
loop3 7:3 0 63.3M 1 loop /snap/core20/1822
loop4 7:4 0 111.9M 1 loop /snap/lxd/24322
loop5 7:5 0 50.6M 1 loop /snap/oracle-cloud-agent/48
loop6 7:6 0 52.2M 1 loop /snap/oracle-cloud-agent/50
loop7 7:7 0 49.6M 1 loop /snap/snapd/17883
loop8 7:8 0 49.8M 1 loop /snap/snapd/18357
loop9 7:9 0 0B 0 loop
sda 8:0 0 46.6G 0 disk
├─sda1 8:1 0 46.5G 0 part /var/lib/kubelet/pods/1270387a-9525-4286-854d-0ab5bca5df87/volume-subpaths/kubernetesconf/reloader/1
│                         /var/lib/kubelet/pods/1270387a-9525-4286-854d-0ab5bca5df87/volume-subpaths/kubernetesconf/fluentd/4
│                         /
├─sda14 8:14 0 4M 0 part
└─sda15 8:15 0 106M 0 part /boot/efi
sdb 8:16 0 1T 0 disk
└─ceph--ba1c0a57--d96e--467a--a3b9--1b61ec70ab7c-osd--block--c3ab1b1d--cd3f--470b--ad2e--1da594a0e424 253:0 0 1024G 0 lvm
  └─GLCh4v-svKt-SuOc-5r2n-ft8V-Q1ef-lqdD9P 253:3 0 1024G 0 crypt
sdc 8:32 0 1T 0 disk
└─ceph--3bf147eb--98c4--4c7c--b1ff--b4598b55d195-osd--block--c2262605--d314--4cce--9cac--ef3d0131d98c 253:1 0 1024G 0 lvm
  └─qDlkR4-qRbD-UncQ-Ytcs-EMDS-rOjG-rQIKHb 253:2 0 1024G 0 crypt
nbd0 43:0 0 0B 0 disk
nbd1 43:32 0 0B 0 disk
nbd2 43:64 0 0B 0 disk
nbd3 43:96 0 0B 0 disk
nbd4 43:128 0 0B 0 disk
nbd5 43:160 0 0B 0 disk
nbd6 43:192 0 0B 0 disk
nbd7 43:224 0 0B 0 disk
nbd8 43:256 0 0B 0 disk
nbd9 43:288 0 0B 0 disk
nbd10 43:320 0 0B 0 disk
nbd11 43:352 0 0B 0 disk
nbd12 43:384 0 0B 0 disk
nbd13 43:416 0 0B 0 disk
nbd14 43:448 0 0B 0 disk
nbd15 43:480 0 0B 0 disk
```
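Rook only consumes raw devices, that is, disks without partitions or a formatted filesystem. The following POSIX shell sketch lists such disks on a node, which can help you confirm the starting state before attaching a new device. It assumes a util-linux `lsblk` that supports `-P` (key="value" pairs), `-b` (sizes in bytes) and the `PKNAME` (parent kernel name) column; `list_raw_disks` and `raw_disks_on_this_node` are hypothetical helper names, not part of the managed storage addon. Rook applies additional selection rules of its own, so treat this only as a rough check.

```shell
#!/bin/sh
# Hypothetical helper: read `lsblk -P -b -o NAME,TYPE,SIZE,FSTYPE,PKNAME`
# output on stdin and print whole disks that look unused (raw).
list_raw_disks() {
  # Splitting on double quotes puts the values of the five columns in
  # fields $2, $4, $6, $8 and $10 (the order must match the -o list).
  awk -F'"' '
    {
      name = $2; type = $4; size = $6 + 0; fstype = $8; parent = $10
      # Candidate: a non-empty disk with no filesystem signature on it.
      if (type == "disk" && size > 0 && fstype == "") disks[name] = 1
      # Any device that has a parent (partition, LVM volume, ...) marks
      # that parent as in use.
      if (parent != "") used[parent] = 1
    }
    END {
      for (d in disks) if (!(d in used)) print d
    }' | sort
}

# Run lsblk on this node and feed its output to the parser.
raw_disks_on_this_node() {
  lsblk -P -b -o NAME,TYPE,SIZE,FSTYPE,PKNAME | list_raw_disks
}
```

For the listing above, `raw_disks_on_this_node` would print nothing: `sda` has partitions, `sdb` and `sdc` carry Ceph volumes, and the `nbd*` disks are empty. Once a new raw device is attached, it should appear in the output.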

Add Storage Device

Next, you will add a raw storage device to the storage nodes.

- Attach a new raw storage device (one with no partitions and no filesystem) to the storage nodes

- Open a shell on each storage node in the cluster

- Execute the following command to list all block devices

```shell
lsblk -a
```

The newly attached device (`sdd` in this example) appears as a raw disk with no partitions or logical volumes:

```
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
loop0 7:0 0 55.6M 1 loop /snap/core18/2667
loop1 7:1 0 55.6M 1 loop /snap/core18/2697
loop2 7:2 0 63.3M 1 loop /snap/core20/1778
loop3 7:3 0 63.3M 1 loop /snap/core20/1822
loop4 7:4 0 111.9M 1 loop /snap/lxd/24322
loop5 7:5 0 50.6M 1 loop /snap/oracle-cloud-agent/48
loop6 7:6 0 52.2M 1 loop /snap/oracle-cloud-agent/50
loop7 7:7 0 49.6M 1 loop /snap/snapd/17883
loop8 7:8 0 49.8M 1 loop /snap/snapd/18357
loop9 7:9 0 0B 0 loop
sda 8:0 0 46.6G 0 disk
├─sda1 8:1 0 46.5G 0 part /var/lib/kubelet/pods/1270387a-9525-4286-854d-0ab5bca5df87/volume-subpaths/kubernetesconf/reloader/1
│                         /var/lib/kubelet/pods/1270387a-9525-4286-854d-0ab5bca5df87/volume-subpaths/kubernetesconf/fluentd/4
│                         /
├─sda14 8:14 0 4M 0 part
└─sda15 8:15 0 106M 0 part /boot/efi
sdb 8:16 0 1T 0 disk
└─ceph--ba1c0a57--d96e--467a--a3b9--1b61ec70ab7c-osd--block--c3ab1b1d--cd3f--470b--ad2e--1da594a0e424 253:0 0 1024G 0 lvm
  └─GLCh4v-svKt-SuOc-5r2n-ft8V-Q1ef-lqdD9P 253:3 0 1024G 0 crypt
sdc 8:32 0 1T 0 disk
└─ceph--3bf147eb--98c4--4c7c--b1ff--b4598b55d195-osd--block--c2262605--d314--4cce--9cac--ef3d0131d98c 253:1 0 1024G 0 lvm
  └─qDlkR4-qRbD-UncQ-Ytcs-EMDS-rOjG-rQIKHb 253:2 0 1024G 0 crypt
sdd 8:48 0 1T 0 disk
nbd0 43:0 0 0B 0 disk
nbd1 43:32 0 0B 0 disk
nbd2 43:64 0 0B 0 disk
nbd3 43:96 0 0B 0 disk
nbd4 43:128 0 0B 0 disk
nbd5 43:160 0 0B 0 disk
nbd6 43:192 0 0B 0 disk
nbd7 43:224 0 0B 0 disk
nbd8 43:256 0 0B 0 disk
nbd9 43:288 0 0B 0 disk
nbd10 43:320 0 0B 0 disk
nbd11 43:352 0 0B 0 disk
nbd12 43:384 0 0B 0 disk
nbd13 43:416 0 0B 0 disk
nbd14 43:448 0 0B 0 disk
nbd15 43:480 0 0B 0 disk
```

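- Give the managed storage addon time to detect and provision the new device, then execute the following command to list all block devices again

```shell
lsblk -a
```

You will see that the newly added device (`sdd`) now carries a Ceph OSD logical volume with an encrypted (`crypt`) device on top, just like `sdb` and `sdc`.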

```
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
loop0 7:0 0 55.6M 1 loop /snap/core18/2667
loop1 7:1 0 55.6M 1 loop /snap/core18/2697
loop2 7:2 0 63.3M 1 loop /snap/core20/1778
loop3 7:3 0 63.3M 1 loop /snap/core20/1822
loop4 7:4 0 111.9M 1 loop /snap/lxd/24322
loop5 7:5 0 50.6M 1 loop /snap/oracle-cloud-agent/48
loop6 7:6 0 52.2M 1 loop /snap/oracle-cloud-agent/50
loop7 7:7 0 49.6M 1 loop /snap/snapd/17883
loop8 7:8 0 49.8M 1 loop /snap/snapd/18357
loop9 7:9 0 0B 0 loop
sda 8:0 0 46.6G 0 disk
├─sda1 8:1 0 46.5G 0 part /var/lib/kubelet/pods/1270387a-9525-4286-854d-0ab5bca5df87/volume-subpaths/kubernetesconf/reloader/1
│                         /var/lib/kubelet/pods/1270387a-9525-4286-854d-0ab5bca5df87/volume-subpaths/kubernetesconf/fluentd/4
│                         /
├─sda14 8:14 0 4M 0 part
└─sda15 8:15 0 106M 0 part /boot/efi
sdb 8:16 0 1T 0 disk
└─ceph--ba1c0a57--d96e--467a--a3b9--1b61ec70ab7c-osd--block--c3ab1b1d--cd3f--470b--ad2e--1da594a0e424 253:0 0 1024G 0 lvm
  └─GLCh4v-svKt-SuOc-5r2n-ft8V-Q1ef-lqdD9P 253:3 0 1024G 0 crypt
sdc 8:32 0 1T 0 disk
└─ceph--3bf147eb--98c4--4c7c--b1ff--b4598b55d195-osd--block--c2262605--d314--4cce--9cac--ef3d0131d98c 253:1 0 1024G 0 lvm
  └─qDlkR4-qRbD-UncQ-Ytcs-EMDS-rOjG-rQIKHb 253:2 0 1024G 0 crypt
sdd 8:48 0 1T 0 disk
└─ceph--1df7f6fe--784e--4232--93f7--b9b80b54455c-osd--block--8d9343c5--ddf1--4365--a830--3c7947c4559d 253:4 0 1024G 0 lvm
  └─bwEHAd-rAw7-GEtq-LmiF-4T4O-yVTX-2tYHx7 253:5 0 1024G 0 crypt
nbd0 43:0 0 0B 0 disk
nbd1 43:32 0 0B 0 disk
nbd2 43:64 0 0B 0 disk
nbd3 43:96 0 0B 0 disk
nbd4 43:128 0 0B 0 disk
nbd5 43:160 0 0B 0 disk
nbd6 43:192 0 0B 0 disk
nbd7 43:224 0 0B 0 disk
nbd8 43:256 0 0B 0 disk
nbd9 43:288 0 0B 0 disk
nbd10 43:320 0 0B 0 disk
nbd11 43:352 0 0B 0 disk
nbd12 43:384 0 0B 0 disk
nbd13 43:416 0 0B 0 disk
nbd14 43:448 0 0B 0 disk
nbd15 43:480 0 0B 0 disk
```
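Provisioning is not instantaneous, as the two listings of `sdd` above show, so you may need to check more than once. The sketch below polls `lsblk` until a given disk has a child LVM volume whose name starts with `ceph--` and contains `osd--block`, which is the naming visible in the output above. The helper names `has_ceph_osd_lv` and `wait_for_ceph_osd`, and the default of 60 attempts at 10-second intervals, are assumptions for illustration only.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit 0) if the disk named in $1 has a child
# LVM volume that looks like a Ceph OSD block volume.
# Reads `lsblk -P -o NAME,TYPE,PKNAME` output on stdin.
has_ceph_osd_lv() {
  awk -F'"' -v disk="$1" '
    # Fields after splitting on quotes: $2 = NAME, $4 = TYPE, $6 = PKNAME
    $4 == "lvm" && $6 == disk && $2 ~ /^ceph--/ && $2 ~ /osd--block/ { found = 1 }
    END { exit(found ? 0 : 1) }'
}

# Poll until the disk is consumed by Ceph or the attempt limit is reached.
# Usage: wait_for_ceph_osd DISK [ATTEMPTS] [INTERVAL_SECONDS]
# POSIX sh has no `local`, so these variables are global.
wait_for_ceph_osd() {
  disk="$1"; attempts="${2:-60}"; interval="${3:-10}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if lsblk -P -o NAME,TYPE,PKNAME | has_ceph_osd_lv "$disk"; then
      echo "$disk now has a Ceph OSD logical volume"
      return 0
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "timed out waiting for a Ceph OSD on $disk" >&2
  return 1
}
```

On a storage node, `wait_for_ceph_osd sdd` would return once `sdd` looks like it does in the listing above.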

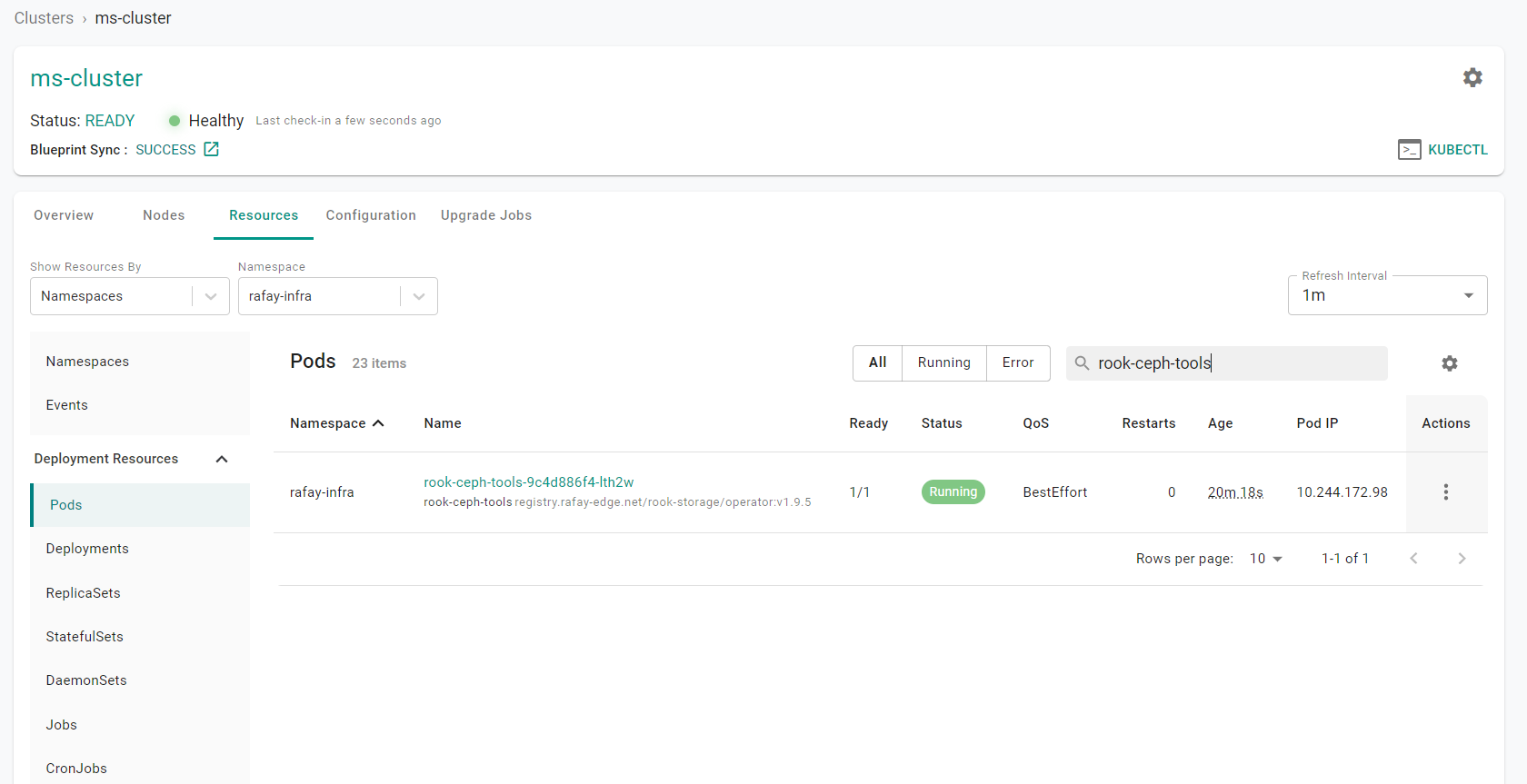

- In the console, navigate to your project

- Select Infrastructure -> Clusters

- Click the cluster name on the cluster card

- Click the "Resources" tab

- Select "Pods" in the left-hand pane

- Select "rafay-infra" from the "Namespace" dropdown

- Enter "rook-ceph-tools" into the search box

- Click the "Actions" button for the rook-ceph-tools pod

- Select "Shell and Logs"

- Click the "Exec" icon to open a shell in the container

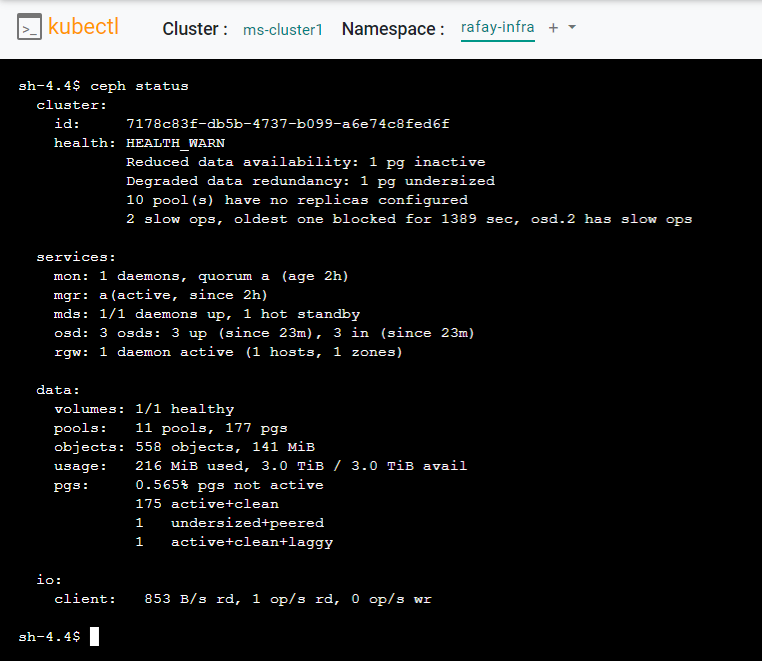
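If you have `kubectl` access to the cluster, a possible alternative to the console steps above is to run Ceph commands in the toolbox directly. This sketch assumes the toolbox runs as a Deployment named `rook-ceph-tools` in the `rafay-infra` namespace, inferred from the pod name and namespace used above; check the actual name with `kubectl -n rafay-infra get deploy` before relying on it. `ceph_tools`, `CEPH_NAMESPACE` and `CEPH_TOOLS_DEPLOY` are hypothetical names.

```shell
#!/bin/sh
# Assumed namespace and Deployment name (inferred from the console steps);
# override them with environment variables if they differ in your cluster.
CEPH_NAMESPACE="${CEPH_NAMESPACE:-rafay-infra}"
CEPH_TOOLS_DEPLOY="${CEPH_TOOLS_DEPLOY:-rook-ceph-tools}"

# Run a ceph subcommand inside the toolbox, e.g. `ceph_tools status`.
# `kubectl exec deploy/<name>` executes in one pod of that Deployment.
ceph_tools() {
  kubectl -n "$CEPH_NAMESPACE" exec "deploy/$CEPH_TOOLS_DEPLOY" -- ceph "$@"
}
```

With this defined, `ceph_tools status` runs the same `ceph status` command used in the next step, without opening an interactive shell.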

- Enter the following command in the shell to check the status of the Ceph cluster

```shell
ceph status
```

In the `usage` line of the output, you will see that the total storage capacity of the cluster has increased to 3.0 TiB now that the Ceph cluster is using the newly added storage device.
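To pick the total capacity out of the `ceph status` report in a script, the sketch below reads the report on stdin and prints the value after the `/` on the `usage:` line (the total, as opposed to the available amount before it). It relies on the human-readable layout `usage: <used> used, <avail> / <total> avail`, which is not a stable interface; for anything beyond a quick check, `ceph df --format json` is a more robust source. `ceph_total_capacity` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the total capacity from `ceph status` text,
# i.e. the "<value> <unit>" pair that follows "/" on the usage: line.
ceph_total_capacity() {
  awk '/usage:/ {
    for (i = 1; i < NF; i++)
      if ($i == "/") { print $(i + 1), $(i + 2); exit }
  }'
}
```

For example, in a shell where the function is defined and `awk` is available, `ceph status | ceph_total_capacity` should print `3.0 TiB` for the expanded cluster in this exercise.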

Recap

At this point, you have successfully expanded the storage capacity of your managed storage cluster.