Overview

Although namespaces provide a way to logically partition a cluster, they do not enforce strict isolation. Without additional controls, users or workloads operating in the same Kubernetes cluster may be able to access resources in other namespaces.

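Even if compensating controls such as Network Policies are implemented to block or control "namespace-to-namespace" communication, the "noisy neighbor" problem remains: workloads in one namespace share nodes and the control plane with workloads in other namespaces and can compete with them for CPU, memory, and other resources.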
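For reference, a Network Policy that only admits ingress traffic from pods in the same namespace might look like the following. This is a minimal Python sketch that builds the manifest as a dictionary and prints it as JSON. The policy name `allow-same-namespace` and the namespace `team-a` are hypothetical, and the policy only takes effect on a cluster whose network plugin enforces NetworkPolicy. It restricts traffic only; it does not address resource contention.

```python
import json


def same_namespace_only_policy(namespace: str) -> dict:
    """Build a NetworkPolicy that admits ingress only from pods in the same namespace.

    In a NetworkPolicy "from" clause, a podSelector without a namespaceSelector
    matches pods in the policy's own namespace; an empty selector matches all of them.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            # Empty podSelector: the policy applies to every pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # Only traffic from pods in this same namespace is allowed in.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


print(json.dumps(same_namespace_only_policy("team-a"), indent=2))
```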

Where these limitations are unacceptable, the only practical solution is to use "dedicated" Kubernetes clusters to guarantee true separation across operational boundaries.

A commonly used term for this single-tenant model is Cluster as a Service.

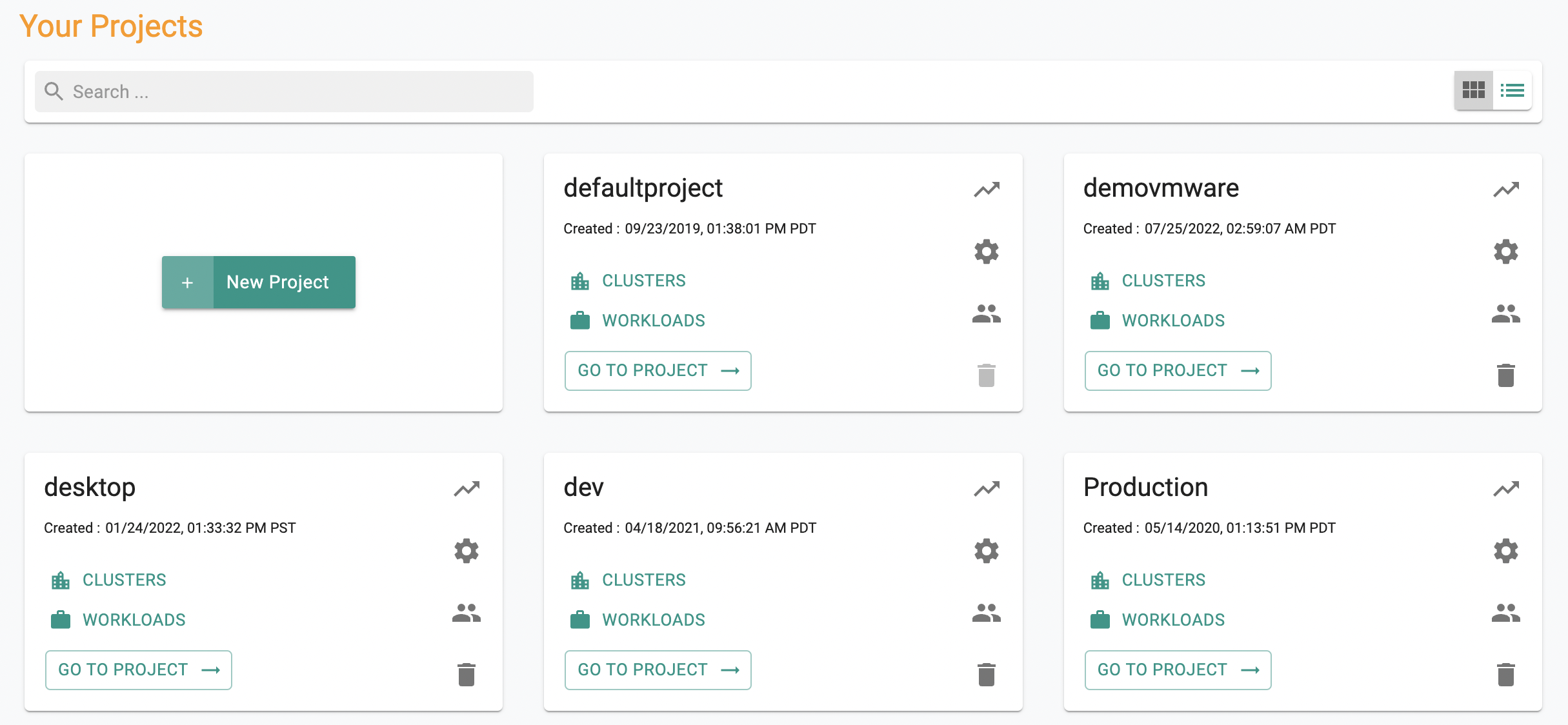

A common example is the use of dedicated clusters for "Production" and "Staging". Users can use "Projects" to achieve this isolation. An illustrative example is shown below.

| Environment | Project |
|---|---|
| Production | "Production" Project with a dedicated Kubernetes cluster. Project configured with least privilege RBAC so that only required users can access the project |
| Staging | "Staging" Project with a dedicated Kubernetes cluster. Project configured with RBAC so that only required users can access the project |

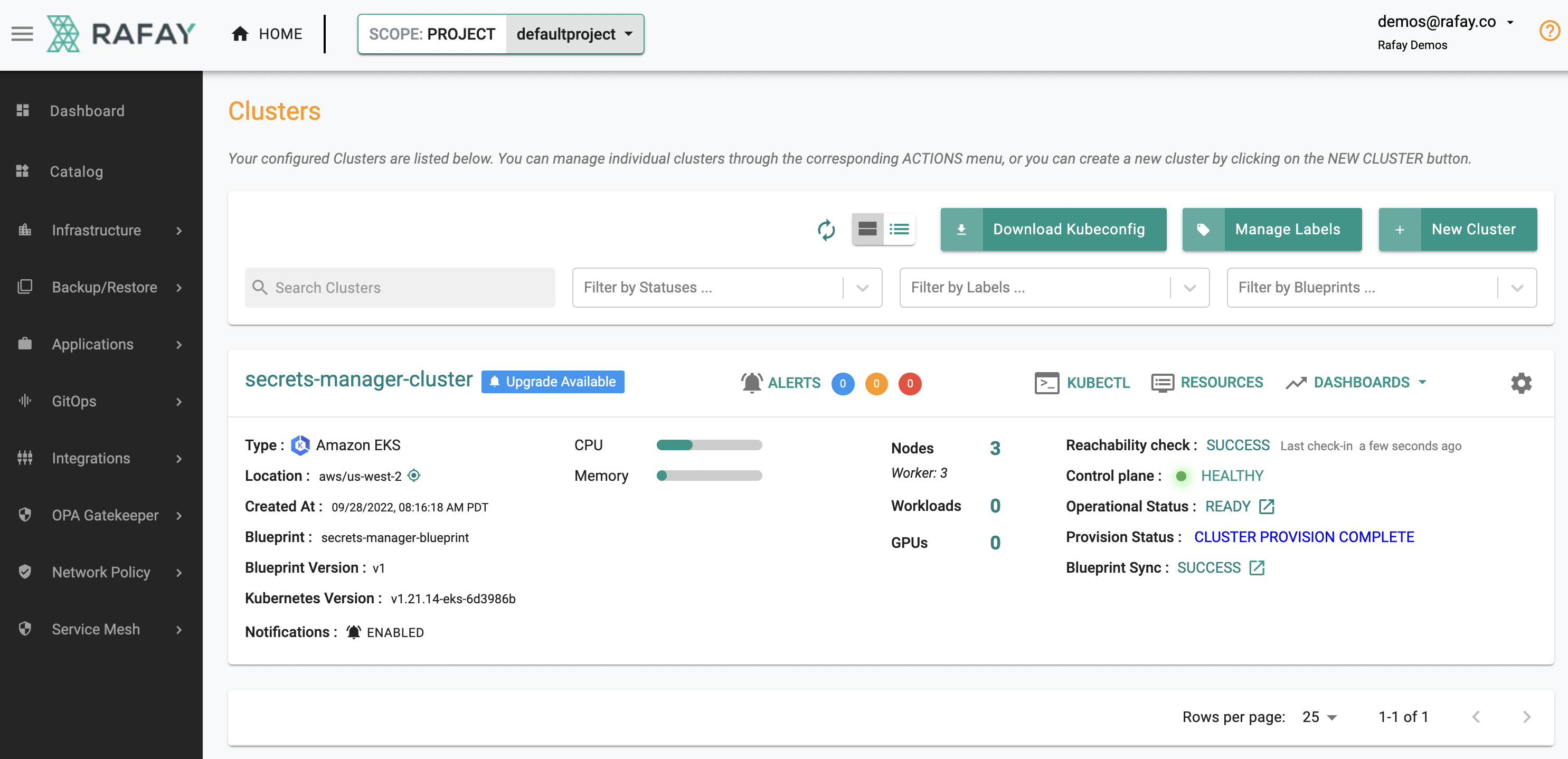

One or more Kubernetes clusters can exist in a Project. In the example below, an Amazon EKS cluster has been provisioned in the Project.

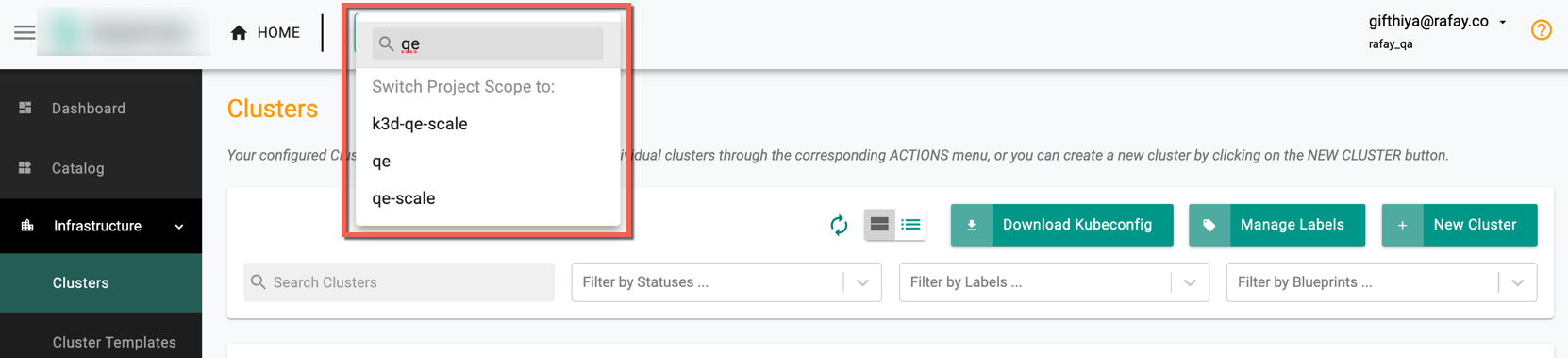
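Because each environment in this model gets its own dedicated cluster, one simple consistency check is that no cluster is assigned to more than one project. The sketch below is a hypothetical Python illustration: the `projects` mapping, the `shared_clusters` helper, and the cluster names are invented for the example and do not reflect any product API.

```python
from collections import defaultdict


def shared_clusters(projects: dict[str, list[str]]) -> dict[str, list[str]]:
    """Return clusters that appear in more than one project, with the projects using them."""
    owners: dict[str, list[str]] = defaultdict(list)
    for project, clusters in projects.items():
        for cluster in clusters:
            owners[cluster].append(project)
    # A dedicated cluster has exactly one owning project.
    return {cluster: ps for cluster, ps in owners.items() if len(ps) > 1}


projects = {
    "Production": ["prod-eks-1"],
    "Staging": ["staging-eks-1", "staging-eks-2"],
}
print(shared_clusters(projects))  # {}
```

A project may hold several clusters (as "Staging" does here); the check only flags a cluster that is shared across projects.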

Users within a project can switch to another project using the search bar. When a user types one or more letters (for example, qe), the search autocompletes and lists all project names that match the entered text (qe), as shown in the example below.
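The filtering behind the project search can be approximated as follows. This is a sketch only: it assumes case-insensitive substring matching with results sorted alphabetically, which may differ from how the product actually matches or ranks names. The `match_projects` helper and the sample project names are hypothetical.

```python
def match_projects(project_names: list[str], query: str) -> list[str]:
    """Return project names containing the query text, case-insensitively, sorted A to Z."""
    q = query.strip().lower()
    if not q:
        return []  # nothing typed yet: no suggestions
    return sorted((name for name in project_names if q in name.lower()), key=str.lower)


names = ["qe-regression", "QE-perf", "production", "staging", "techqe"]
print(match_projects(names, "qe"))  # ['QE-perf', 'qe-regression', 'techqe']
```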

Deployment Options

The matrix below shows which deployment methods support creating, updating, and deleting projects: the interactive UI, declarative RCTL commands (CLI), API-driven automation, and Terraform.

| Action | UI | CLI | API | Terraform |
|---|---|---|---|---|
| Create | Yes | Yes | Yes | Yes |
| Update | Yes | Yes | Yes | Yes |
| Delete | Yes | Yes | Yes | Yes |

Access Control

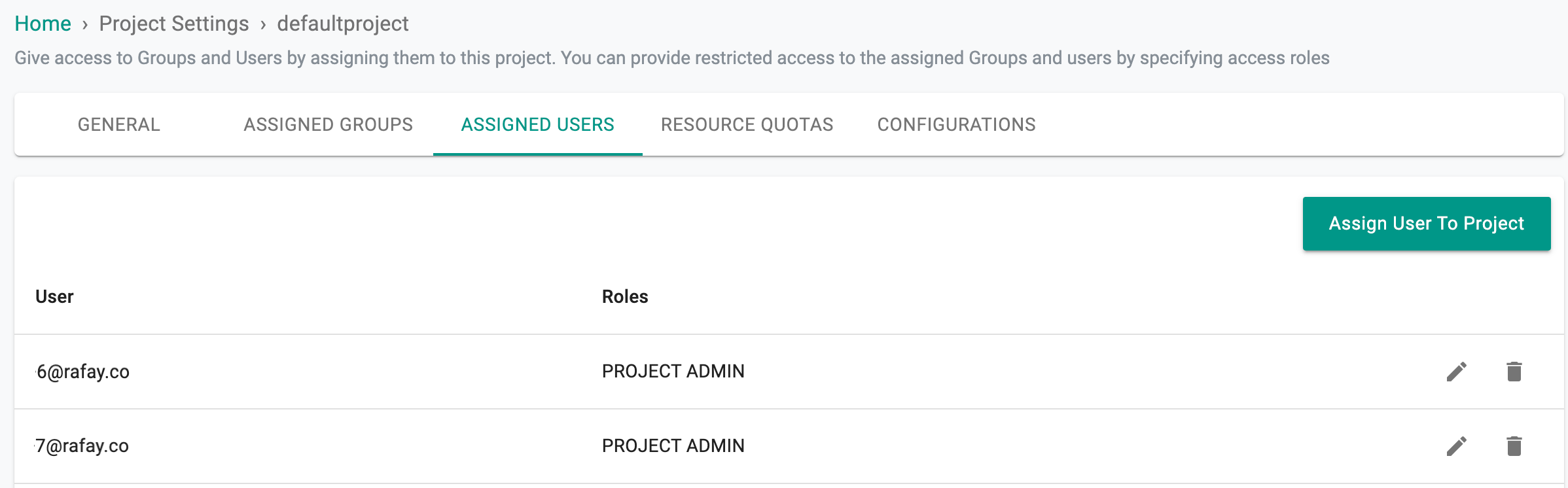

Organizations can use role-based access control (RBAC) to restrict which users can access a particular project and with what privileges (for example, read-only or read/write).

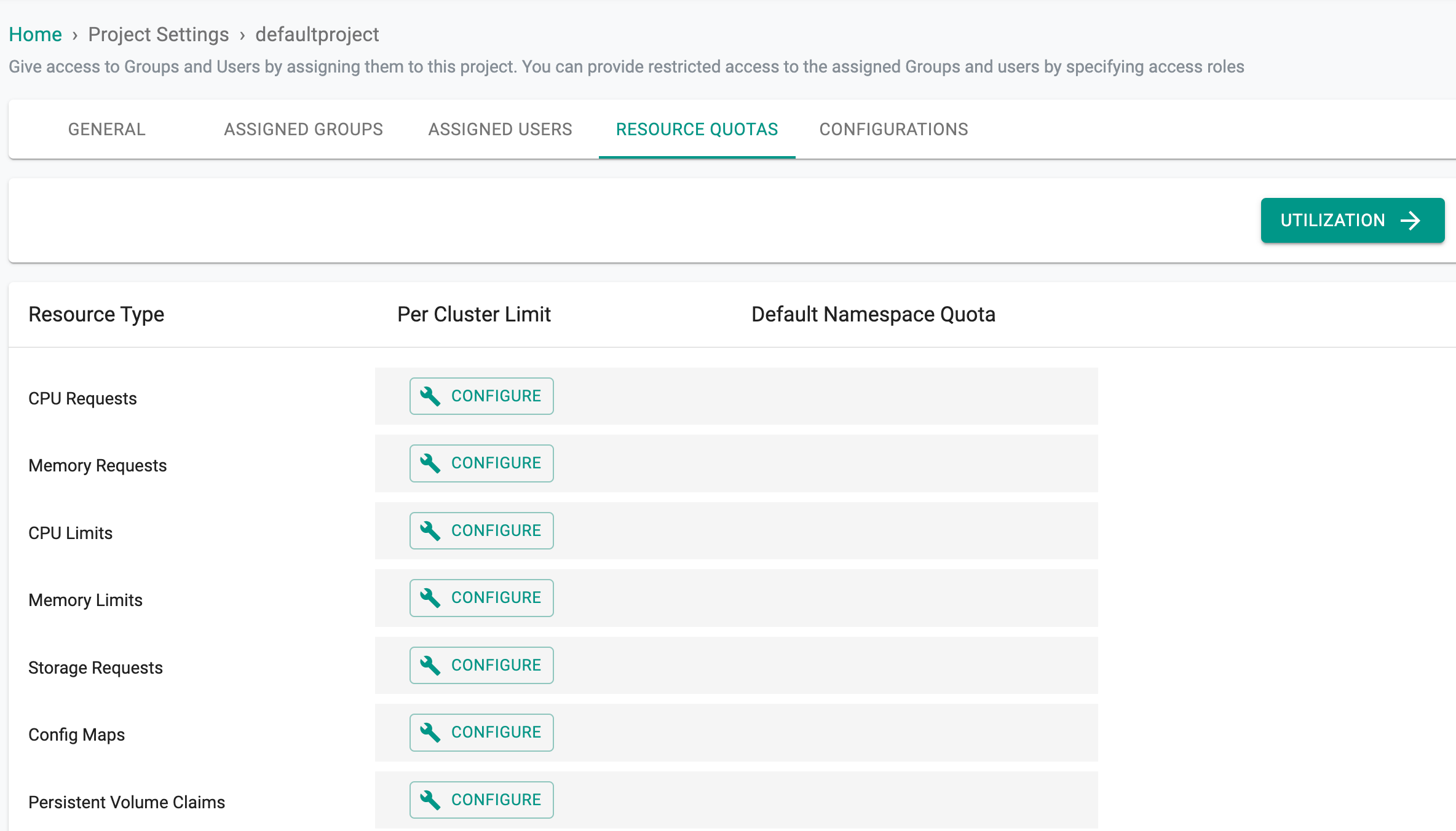
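A minimal sketch of how per-project, role-based checks might be evaluated, assuming two hypothetical access levels (read and read/write) and an in-memory table of bindings. The names `Access`, `BINDINGS`, and `can` are illustrative and not part of any product API. A user with no binding for a project is denied, in line with least-privilege access.

```python
from enum import Enum


class Access(Enum):
    READ = "read"
    READ_WRITE = "read/write"


# Hypothetical per-project role bindings: project -> {user: access level}.
BINDINGS: dict[str, dict[str, Access]] = {
    "Production": {"alice": Access.READ_WRITE, "bob": Access.READ},
    "Staging": {"bob": Access.READ_WRITE},
}


def can(user: str, project: str, action: str) -> bool:
    """Return True if the user may perform the action ("read" or "write") in the project."""
    access = BINDINGS.get(project, {}).get(user)
    if access is None:
        return False  # least privilege: no binding means no access
    if action == "read":
        return True  # both access levels include read
    if action == "write":
        return access is Access.READ_WRITE
    raise ValueError(f"unknown action: {action!r}")


print(can("bob", "Production", "read"))   # True
print(can("bob", "Production", "write"))  # False
print(can("carol", "Staging", "read"))    # False
```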

Resource Quotas

Organizations can use "Resource Quotas" to restrict how much of a cluster's resources a namespace can consume.
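Resource Quotas are a standard Kubernetes object (`ResourceQuota`, API version `v1`). The Python sketch below builds one as a dictionary and prints it as JSON. The quota name `compute-quota`, the namespace, and the limit values are hypothetical and chosen only for illustration.

```python
import json


def resource_quota(namespace: str, cpu: str, memory: str, pods: int) -> dict:
    """Build a Kubernetes ResourceQuota capping total CPU/memory requests and pod count."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {
            "hard": {
                # Sum of CPU and memory requests across all pods in the namespace.
                "requests.cpu": cpu,
                "requests.memory": memory,
                # Maximum number of pods that may exist in the namespace.
                "pods": str(pods),
            }
        },
    }


print(json.dumps(resource_quota("team-a", "4", "8Gi", 20), indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f <file>`, since kubectl accepts JSON as well as YAML manifests. Once the quota is in place, requests to create pods that would push the namespace past any `hard` limit are rejected.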

Configurations

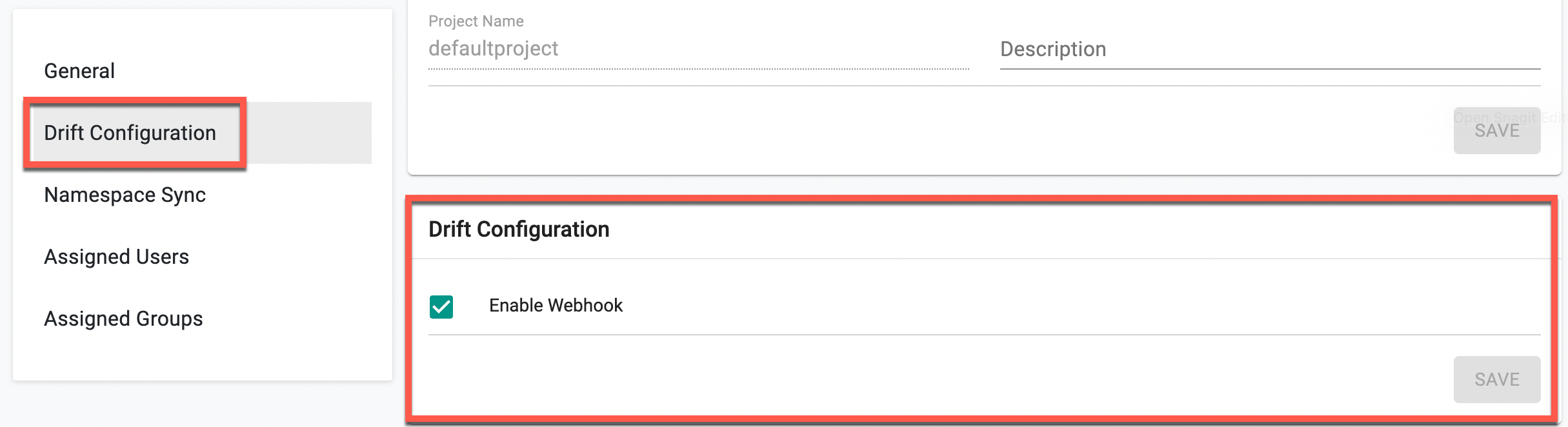

On this configuration page, users can enable the webhook and Namespace Sync at the project level.

Enable Webhook

Enabling the drift configuration within a project lets users deploy the drift webhook to all clusters in that project using blueprints, provided the webhook is also enabled at the organization level. If the webhook is disabled at the project level, users cannot deploy the drift webhook to any cluster in that project, even if it remains enabled at the organization level. This option is enabled by default.

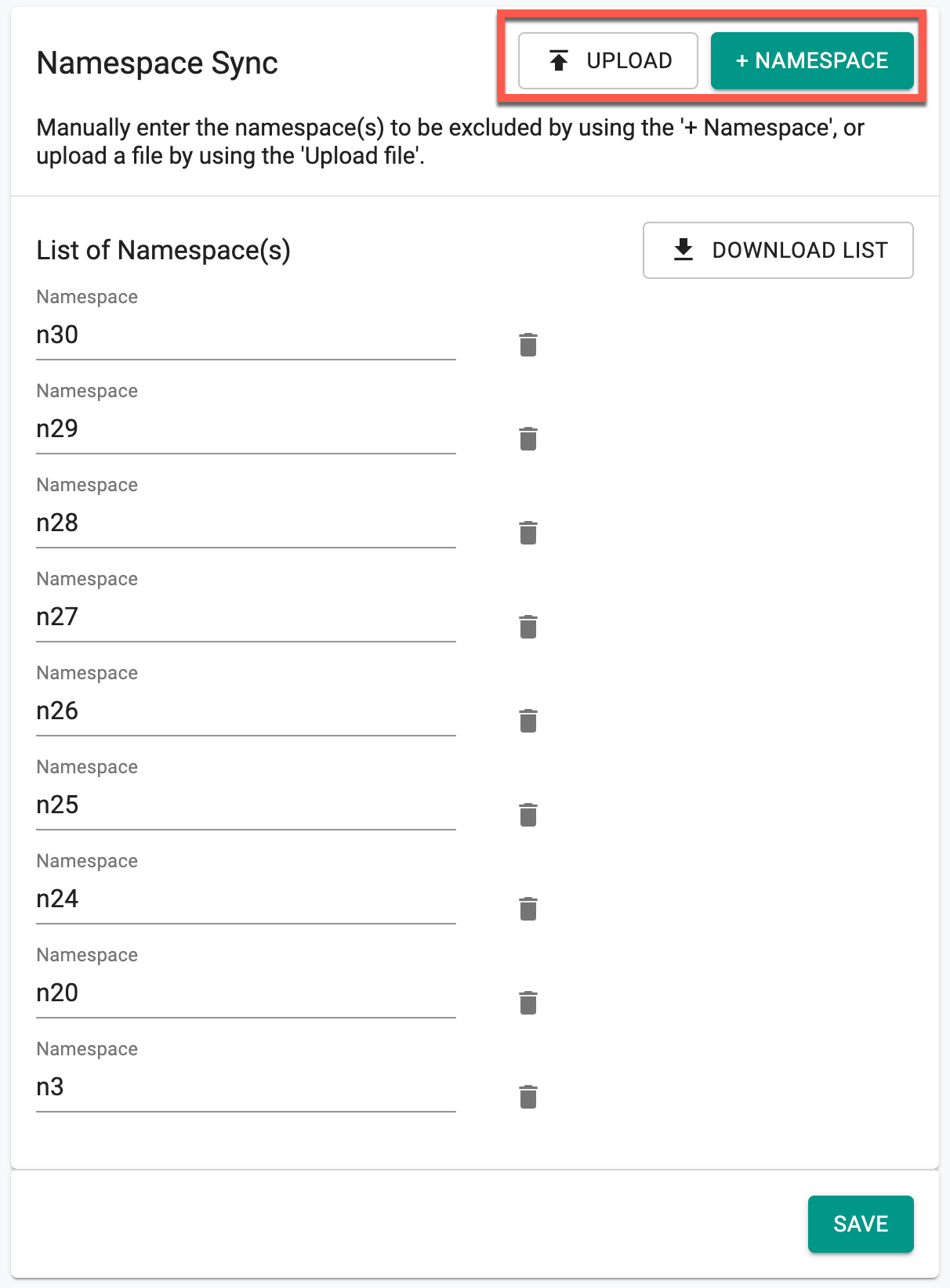
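The webhook rule described above reduces to a logical AND of the organization-level and project-level settings, with the project-level setting defaulting to enabled. A minimal Python sketch; the function and parameter names are hypothetical.

```python
def can_deploy_drift_webhook(org_webhook_enabled: bool, project_webhook_enabled: bool = True) -> bool:
    """Return True if blueprints in this project may deploy the drift webhook.

    Both levels must be enabled; the project-level setting defaults to enabled.
    """
    return org_webhook_enabled and project_webhook_enabled


print(can_deploy_drift_webhook(True))         # True (project setting left at its default)
print(can_deploy_drift_webhook(True, False))  # False: disabled at the project level
print(can_deploy_drift_webhook(False, True))  # False: disabled at the organization level
```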

Namespace Exclusion

At the project level, a list of namespaces can be defined to exclude from synchronization. This helps prevent unintended changes while preserving flexibility in managing namespaces. Only an Org Admin can add namespaces to or remove namespaces from the exclusion list.

- Click +Namespace to manually add namespaces to exclude from synchronization, or click Upload to upload a file containing a list of namespaces. Only .txt files are supported for upload, and multiple files can be uploaded

- Click Download List to download the excluded namespace list configured here

- Click Save once the required namespaces have been added for exclusion
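The upload behavior can be sketched as merging several files into one exclusion list. This sketch assumes each .txt file holds one namespace name per line (the exact file layout is not specified here), skips files with any other extension, and keeps only the first occurrence of each name. `merge_exclusions` is a hypothetical helper that takes file names and contents rather than reading from disk.

```python
from typing import Optional


def merge_exclusions(uploads: list[tuple[str, str]], existing: Optional[list[str]] = None) -> list[str]:
    """Merge namespaces from uploaded (filename, content) pairs into an exclusion list.

    Files not ending in .txt are skipped. Each file is assumed to hold one namespace
    per line; blank lines are ignored. Duplicates keep their first position.
    """
    # A dict preserves insertion order and ignores repeated keys.
    merged: dict[str, None] = dict.fromkeys(existing or [])
    for filename, content in uploads:
        if not filename.endswith(".txt"):
            continue  # unsupported format: skip the whole file
        for line in content.splitlines():
            ns = line.strip()
            if ns:
                merged.setdefault(ns, None)
    return list(merged)


uploads = [
    ("team-a.txt", "kube-system\nmonitoring\n"),
    ("more.txt", "monitoring\n\nlogging\n"),
    ("list.csv", "ignored\n"),
]
print(merge_exclusions(uploads))  # ['kube-system', 'monitoring', 'logging']
```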

Important

- Files in formats other than .txt are ignored

- If the same namespace name appears more than once in a project's exclusion list, it is counted once and the duplicates are ignored

- Adding a namespace that has already been synchronized from the cluster to the exclusion list has no effect on that namespace. However, if the namespace is later recreated, it will be excluded from synchronization
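The timing rule in the last note can be modeled as follows: the exclusion list is consulted only when a namespace is created, so excluding a namespace that is already synchronized changes nothing until it is recreated. This toy `NamespaceSync` class is hypothetical and assumes that deleting a namespace removes it from the synchronized set. Storing exclusions in a set also collapses duplicate names, mirroring the duplicate rule.

```python
class NamespaceSync:
    """Toy model: the exclusion list is checked only when a namespace is created."""

    def __init__(self) -> None:
        self.exclusions: set[str] = set()  # a set stores each name once
        self.synced: set[str] = set()

    def exclude(self, name: str) -> None:
        # Adding to the list does not touch namespaces that are already synced.
        self.exclusions.add(name)

    def on_namespace_created(self, name: str) -> None:
        # Exclusion is evaluated at creation time only.
        if name not in self.exclusions:
            self.synced.add(name)

    def on_namespace_deleted(self, name: str) -> None:
        # Assumption: a deleted namespace is no longer tracked as synced.
        self.synced.discard(name)


s = NamespaceSync()
s.on_namespace_created("monitoring")
s.exclude("monitoring")
print("monitoring" in s.synced)  # True: already synced, so the exclusion has no effect yet
s.on_namespace_deleted("monitoring")
s.on_namespace_created("monitoring")
print("monitoring" in s.synced)  # False: recreated, so it is now excluded
```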

Any changes to the namespace exclusion list, including additions, removals, and modifications, are recorded in the Audit Log along with details such as the time of the change and the user who made it.
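One way to picture what the Audit Log captures is to diff the exclusion list before and after a change and record one entry per affected namespace, stamped with the time and the acting user. This is a hypothetical Python sketch, not the product's audit format; `AuditEntry` and `audit_exclusion_change` are illustrative names. In this model, a modification such as a rename appears as one removal plus one addition.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    timestamp: datetime
    user: str
    action: str  # "added" or "removed"
    namespace: str


def audit_exclusion_change(user: str, before: list[str], after: list[str]) -> list[AuditEntry]:
    """Compare two exclusion lists and record one entry per added or removed namespace."""
    now = datetime.now(timezone.utc)
    old, new = set(before), set(after)
    entries = [AuditEntry(now, user, "added", ns) for ns in sorted(new - old)]
    entries += [AuditEntry(now, user, "removed", ns) for ns in sorted(old - new)]
    return entries


for e in audit_exclusion_change("alice", ["kube-system", "monitoring"], ["kube-system", "logging"]):
    print(e.timestamp.isoformat(), e.user, e.action, e.namespace)
```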