Provision

Cloud Credentials¶

The controller needs to be configured with credentials in order to programmatically create and configure the required AKS infrastructure on Azure in your account. These credentials are securely managed as part of a cloud credential in the Controller.

Creating a cloud credential is a one-time task. Once created, the credential can be reused to create clusters whenever required. Review Microsoft AKS Credentials for additional instructions on how to configure this.

Important

To guarantee complete isolation across Projects (e.g. BUs, teams, environments), cloud credentials are associated with a specific project. These can be shared with other projects if necessary.

Self Service Wizard¶

This approach is ideal for users who need to quickly provision and manage AKS clusters without having to become experts in Microsoft AKS tooling and best practices, or write bespoke Infrastructure as Code (IaC).

The wizard prompts the user to provide critical cluster configuration details organized into logical sections:

- General (mandatory)

- Cluster Settings

- Node Pool Settings

- Advanced

Only the General section is mandatory. Out-of-the-box defaults are provided for the remaining sections.

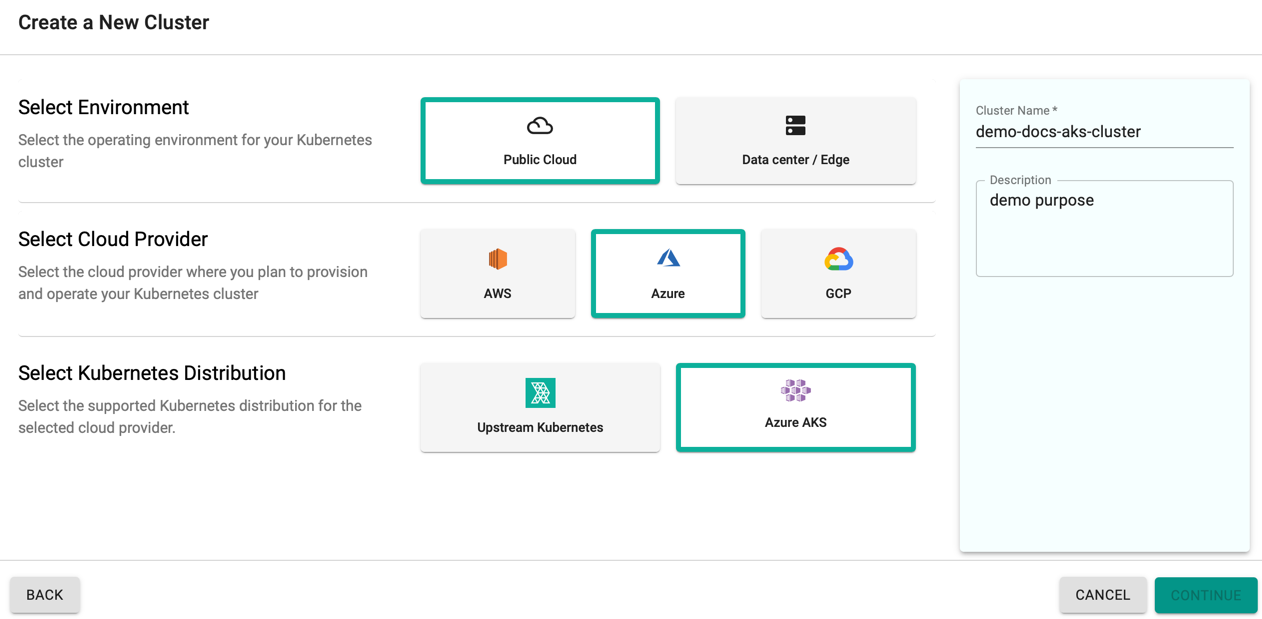

Create Cluster¶

- Click Clusters on the left panel and the Clusters page appears

- Click New Cluster

- Select Create a New Cluster and click Continue

- Select the Environment Public Cloud

- Select the Cloud Provider Azure

- Select the Kubernetes Distribution Azure AKS

- Provide a cluster name and click Continue

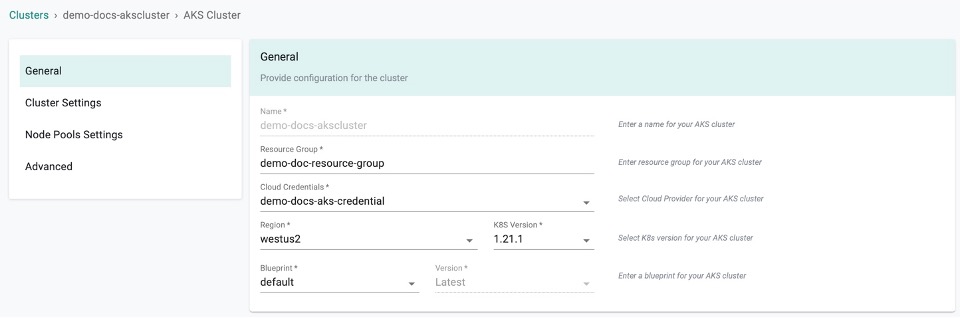

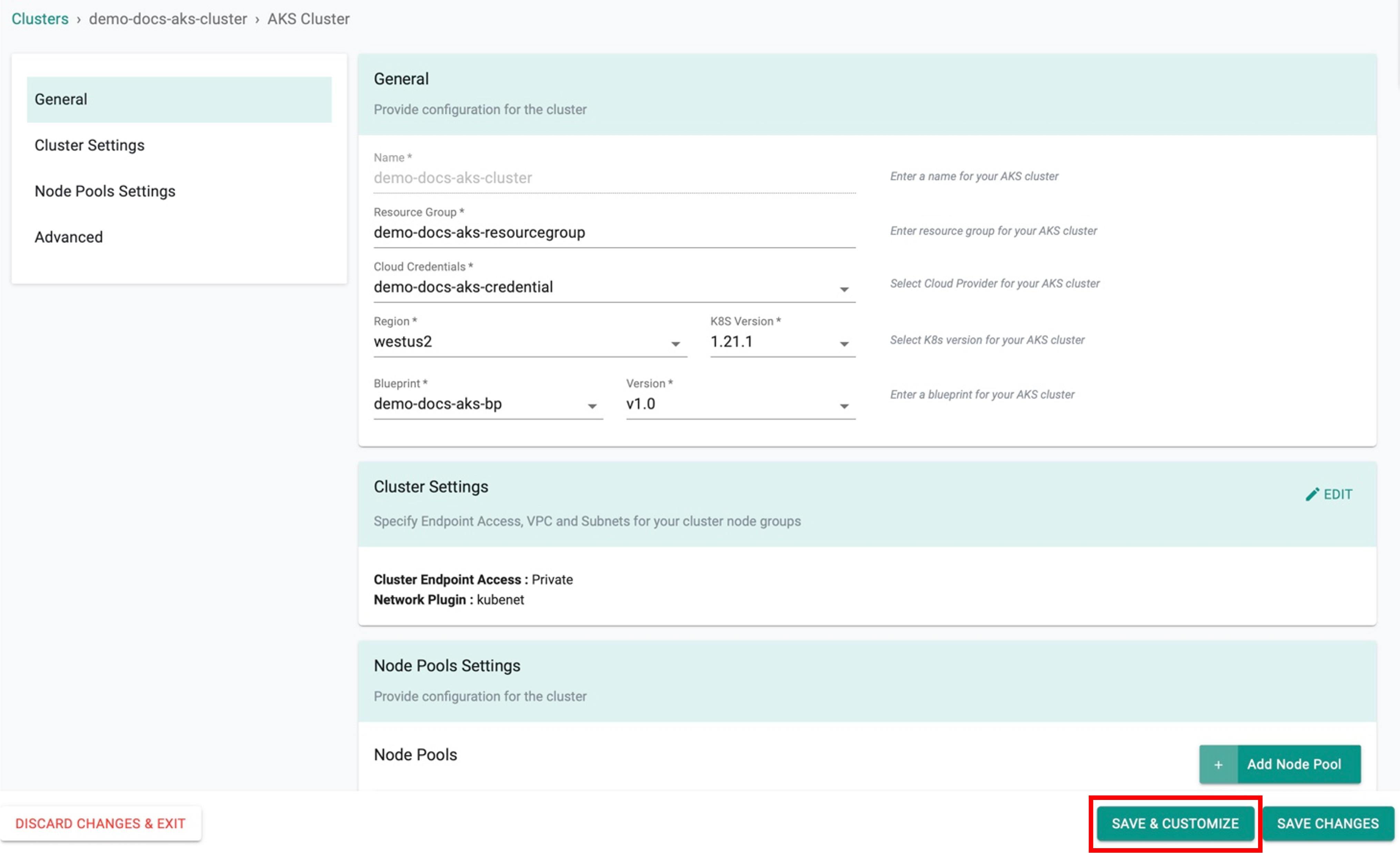

General (Mandatory)¶

The General section is mandatory for creating a cluster

- Enter the Resource Group for the AKS cluster

- From the drop-down, select the Cloud Credential that was created with your Azure credentials

- Select a region and version

- Select a Blueprint and version. default-aks is the system default blueprint, available to all AKS clusters for users with the roles Org Admin, Infra Admin and Cluster Admin. A customized blueprint can also be selected from the drop-down if required

- Click SAVE & CUSTOMIZE to customize the cluster configuration or SAVE CHANGES to proceed with the cluster provisioning

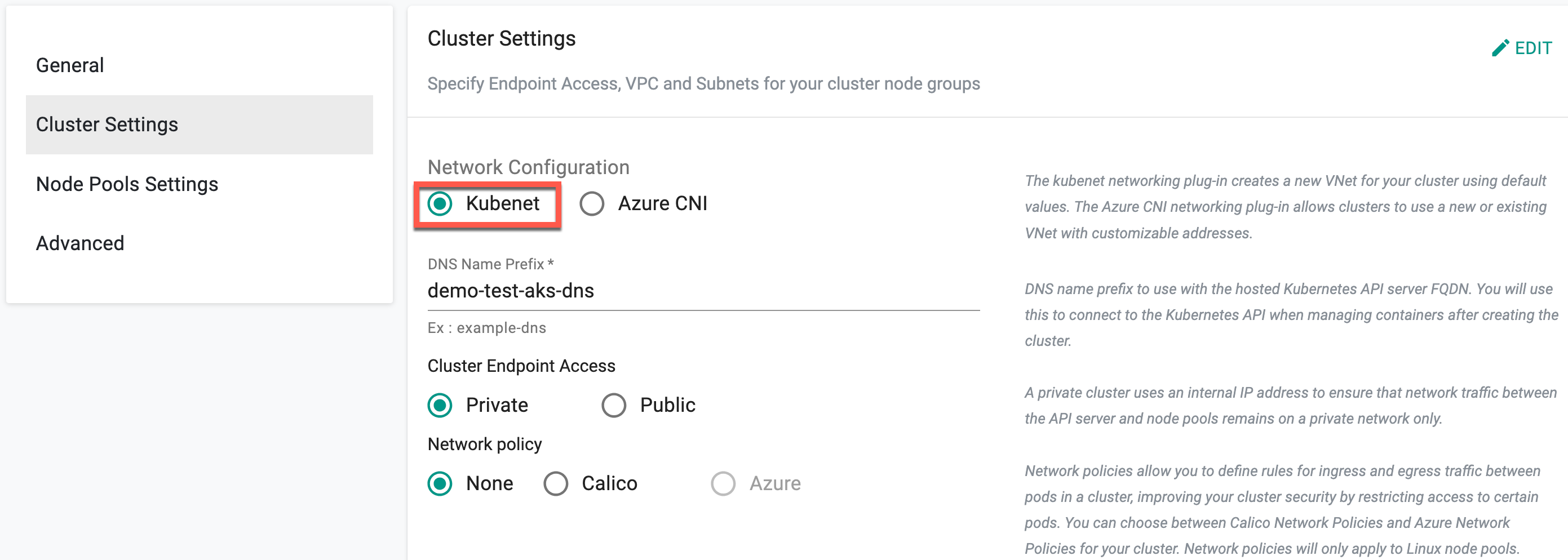

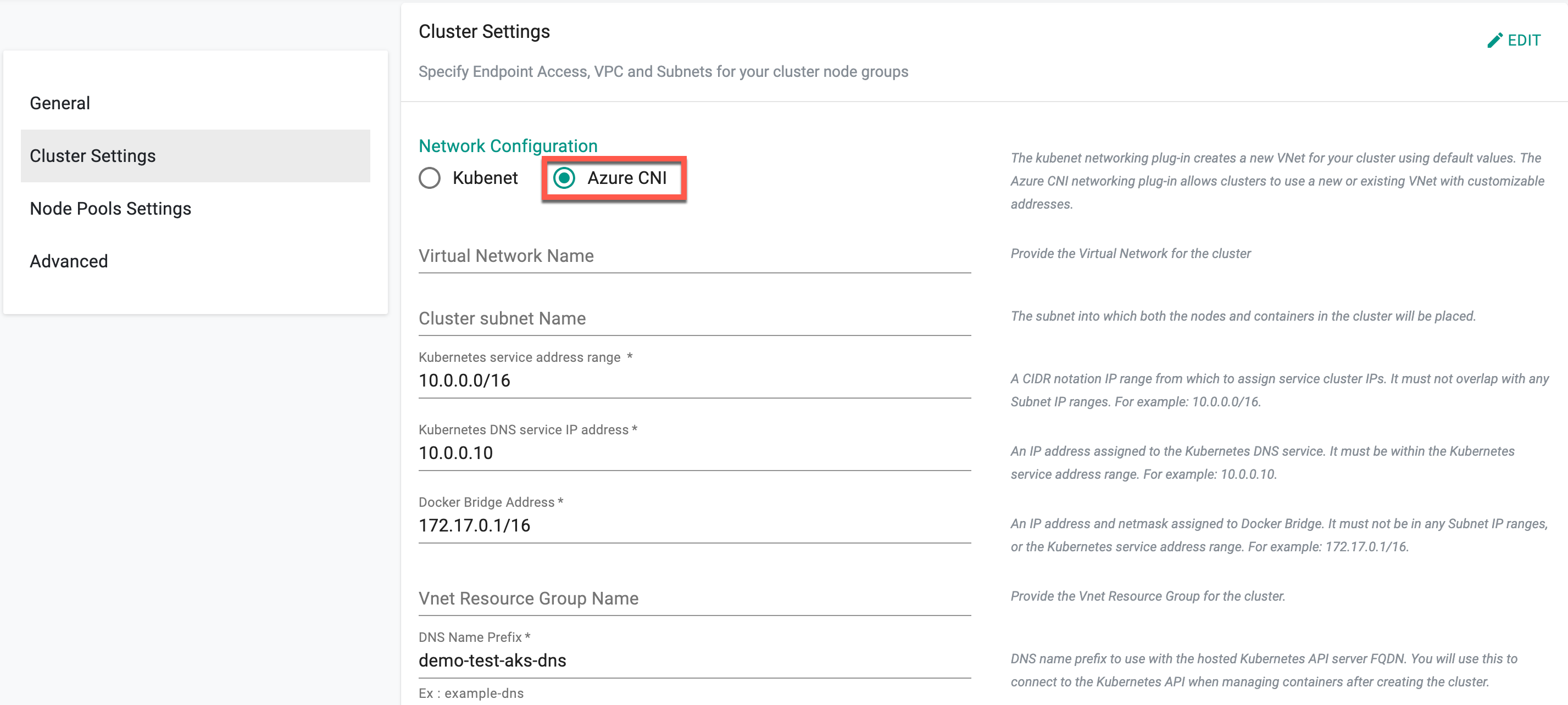

Cluster Settings (Optional)¶

Cluster Settings allows users to customize the network settings. Click Edit next to the Cluster Settings section

Network Configuration

By default, Network Configuration is set to Kubenet. Selecting Kubenet automatically creates a Virtual Network (VNet) for the cluster with default values.

- Users who do not require a new network and want to use an existing network can select Azure CNI. Selecting Azure CNI allows users to enter the existing Virtual Network Name, Cluster Subnet Name, IP address range, VNet Resource Group Name, etc.

Users can leverage Azure CNI Overlay as an alternative networking solution that addresses challenges inherent in traditional Azure CNI. With Azure CNI Overlay, IP addresses are assigned to pods from a separate CIDR range rather than from the VNet, which conserves VNet addresses and lets the cluster scale without exhausting them. Refer to Azure CNI Overlay for more information
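As a rough illustration of the "separate CIDR range" idea, the sketch below checks that a proposed pod CIDR does not overlap the VNet address space before the values are entered in the wizard. The function name and the example ranges are hypothetical and are not part of the controller or of any Azure tooling; it uses only the Python standard library.

```python
import ipaddress


def overlay_cidr_is_separate(pod_cidr: str, vnet_cidr: str) -> bool:
    """Return True when the pod CIDR does not overlap the VNet address space."""
    pod_net = ipaddress.ip_network(pod_cidr)
    vnet_net = ipaddress.ip_network(vnet_cidr)
    return not pod_net.overlaps(vnet_net)


# Hypothetical example values, not defaults taken from the wizard.
print(overlay_cidr_is_separate("192.168.0.0/16", "10.0.0.0/8"))  # True
print(overlay_cidr_is_separate("10.244.0.0/16", "10.0.0.0/8"))   # False
```

A check like this is only a local sanity test; the authoritative validation happens when Azure provisions the cluster.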

- Select SKU Tier Paid to enable a financially backed, higher SLA for the AKS clusters or Free, if not required

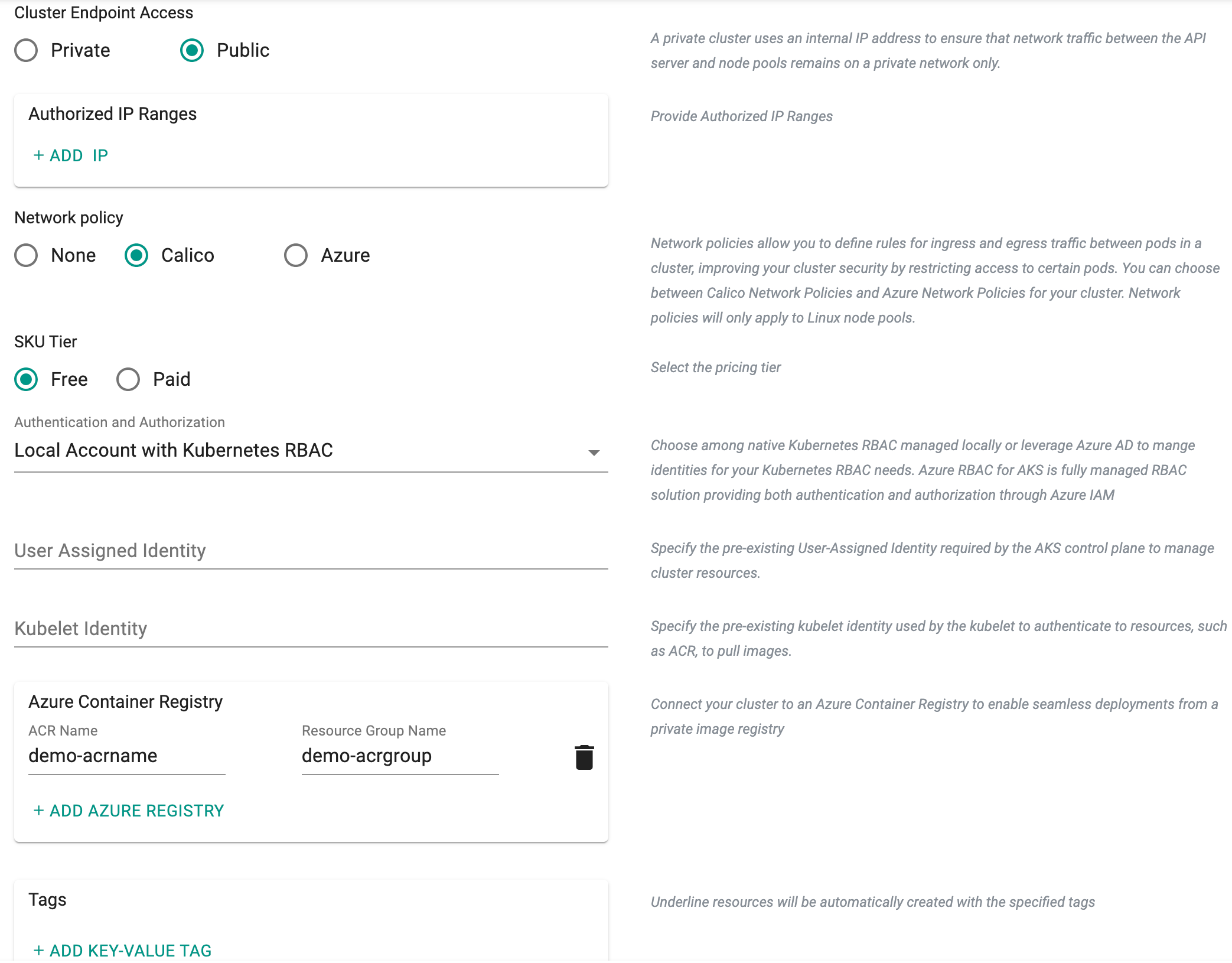

Cluster Endpoint Access

The following cluster endpoint options are available:

A Private cluster uses an internal IP address to ensure that network traffic between the API server and node pools remains on a private network only

A Public cluster endpoint is accessible from external (public) networks. Selecting Public enables the Authorized IP Ranges field, which limits cluster access to the specified IP addresses. Multiple IP ranges are allowed
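To make the effect of Authorized IP Ranges concrete, here is a minimal sketch of how a client address can be tested against a list of ranges. The helper name and the sample ranges (taken from the reserved documentation address blocks) are assumptions for illustration; the actual enforcement is done by Azure, not by this code.

```python
import ipaddress
from typing import Sequence


def is_authorized(client_ip: str, authorized_ranges: Sequence[str]) -> bool:
    """Return True when client_ip falls inside at least one authorized range."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in authorized_ranges)


# Hypothetical ranges: one office network and one single host.
ranges = ["203.0.113.0/24", "198.51.100.7/32"]

print(is_authorized("203.0.113.45", ranges))  # True
print(is_authorized("192.0.2.10", ranges))    # False
```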

Network Policy

Network Policies allow users to define rules for ingress and egress traffic between pods in a cluster, improving cluster security by restricting access to certain pods. Choose one of the three options for the cluster: None, Calico or Azure. Network policies are applicable only to Linux node pools

Authentication and Authorization

Authentication and Authorization offers three options for managing user identities and access permissions within the Kubernetes environment: local accounts with native Kubernetes RBAC, Azure Active Directory (AD) authentication with Kubernetes RBAC, or Azure AD authentication with Azure RBAC

User Assigned Identity

Provide the User Assigned Identity required by the AKS control plane to manage cluster resources. This identity serves as an authentication mechanism for the control plane to interact with Azure services

Kubelet Identity

Provide the kubelet identity to authenticate with external resources, such as Azure Container Registry (ACR), enabling the kubelet to securely pull images required for running containers within the Kubernetes cluster. Refer to this page for more information on Kubelet Identity

ACR Settings

Click on + Add Azure Registry and enter the ACR Name and Resource Group name. This action enables the controller to interface with the Azure Container Registry, facilitating the retrieval of stored container images from the existing Azure Container Registry Service. Users can add multiple ACRs for a cluster

Important

Effective May 1, 2023, Microsoft has removed all Windows Server 2019 Docker images from the registry. Consequently, the Docker container runtime for Windows node pools has been retired. Although existing deployed node pools will continue to function, scaling operations on existing Windows node pools are no longer supported. To maintain ongoing support, it is recommended to create new Windows node pools instead of attempting to scale the existing ones.

For more information, you can refer to the AKS release notes.

Node Pools Settings (Optional)¶

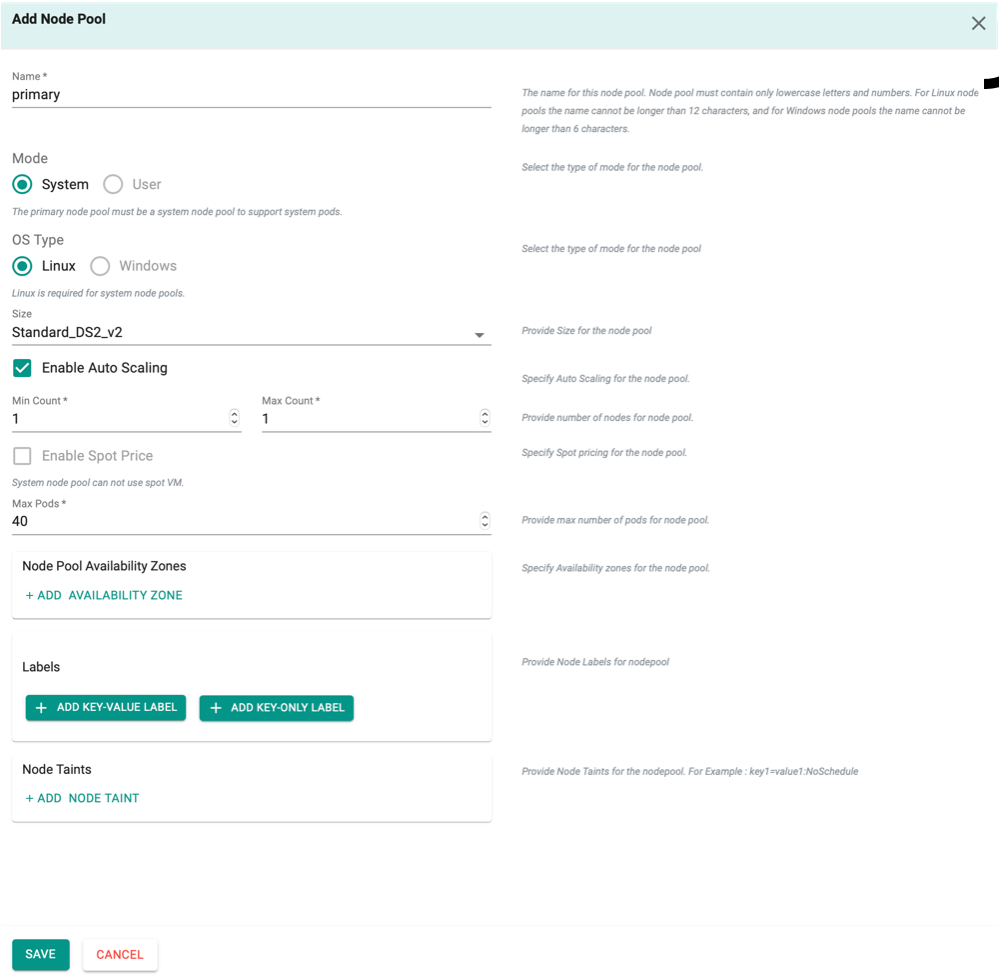

A primary node pool is created by default when creating a cluster. Every cluster must have one primary node pool. To change the existing node pool, click Edit. The primary node pool must always use the System Mode and the Linux OS Type.

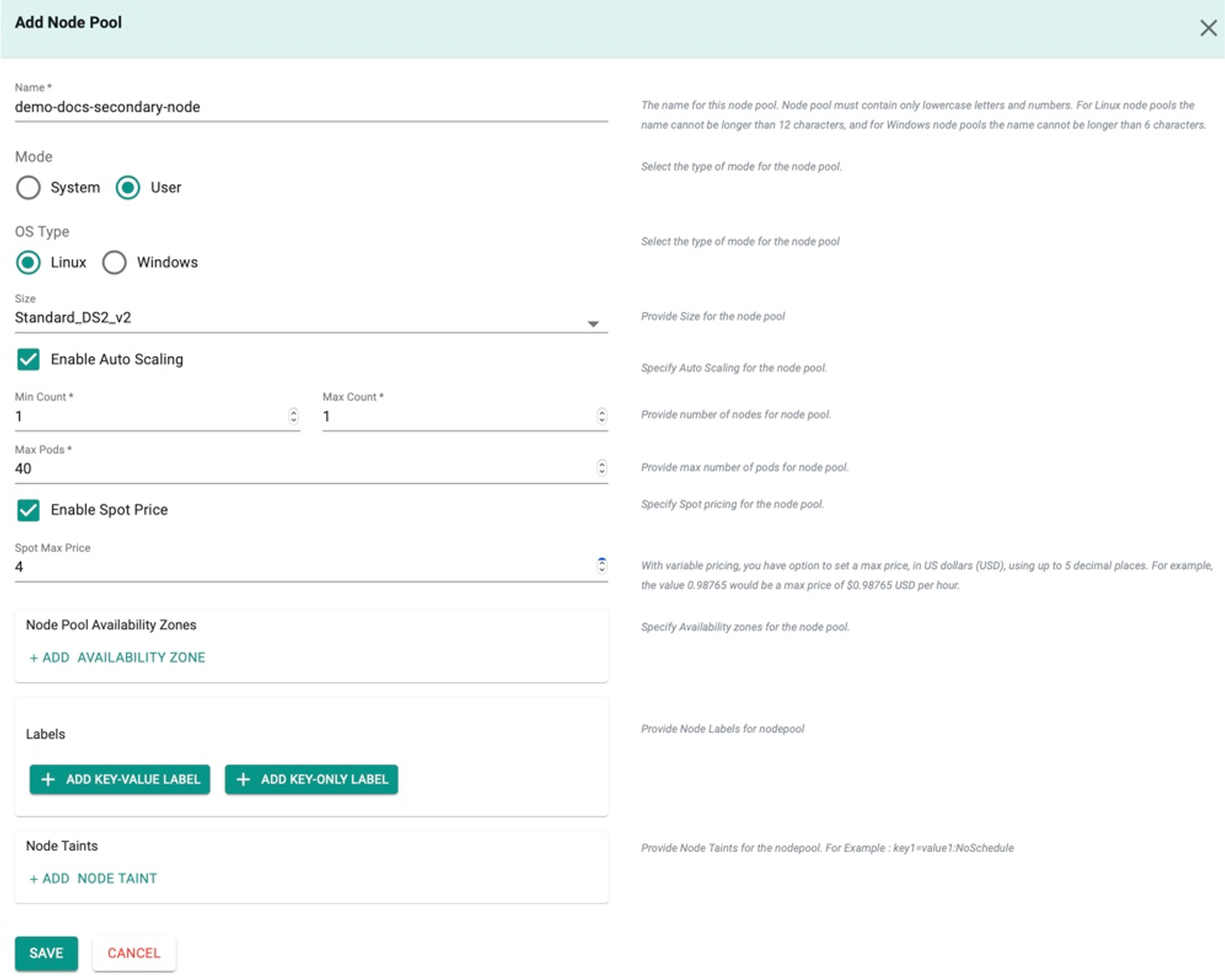

Secondary Node Pool

Click Add Node Pool to add a secondary node pool and provide the required details.

Important

If Kubenet is selected as the Network Configuration under Cluster Settings, Azure does not support the Windows OS Type for the secondary node pool. If Azure CNI is selected as the Network Configuration under Cluster Settings, Azure allows both Linux and Windows OS Types for the User Mode.
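The node pool rules described in this section can be summarized as a small pre-flight check. The sketch below is only an illustration of those rules: the function name, argument names and string values are hypothetical and do not correspond to fields in the controller's API or cluster specification.

```python
from typing import List


def validate_node_pool(network_config: str, mode: str, os_type: str) -> List[str]:
    """Return a list of rule violations; an empty list means the pool is acceptable."""
    errors = []
    # The primary (System mode) node pool must run Linux.
    if mode == "System" and os_type != "Linux":
        errors.append("System mode node pools must use the Linux OS type")
    # Windows node pools are not supported with Kubenet networking.
    if os_type == "Windows" and network_config == "Kubenet":
        errors.append("Windows node pools require Azure CNI, not Kubenet")
    return errors


print(validate_node_pool("Kubenet", "User", "Windows"))
# ['Windows node pools require Azure CNI, not Kubenet']
print(validate_node_pool("Azure CNI", "User", "Windows"))
# []
```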

Linux Node Pools¶

- Provide a Node Pool name

- Select Mode User and OS Type Linux

- Provide the required details

Spot Price

Enabling Spot Price allows the user to set a price for the required instances. If instances are available at the provided bid price, users can use them for their clusters at significant cost savings. This check-box is enabled only for the combination of User Mode and Linux OS Type.

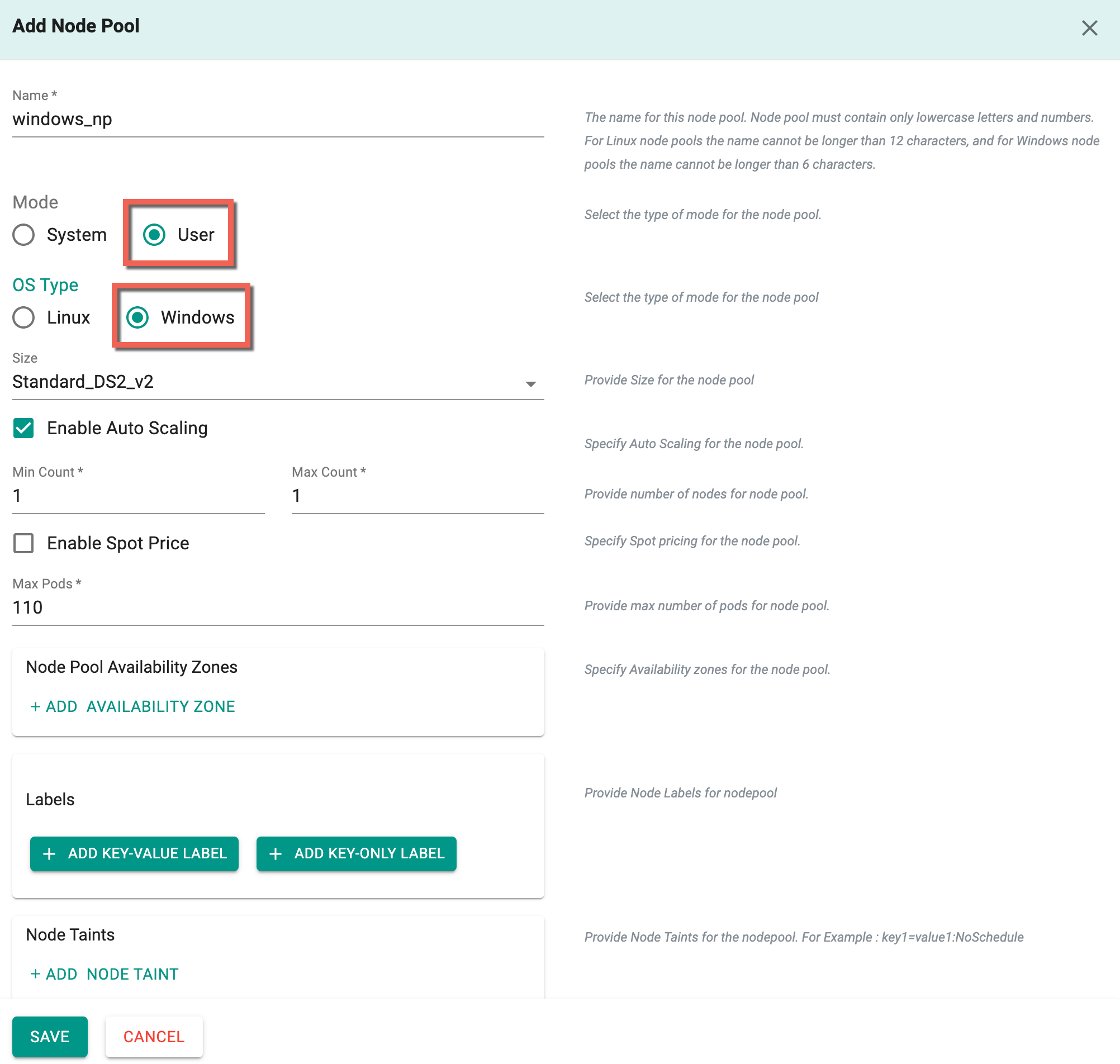

Windows Node Pools¶

Azure AKS supports Windows Nodes that allow running Windows containers.

Pre-Requisites

- Choose Azure CNI as the network configuration in the Cluster Settings section.

- Ensure the existence of the primary system node pool

Add Windows Node Pool

- Provide a Node Pool name

- Select Mode User and OS Type Windows

- Provide the required details

Click Save

Once all the required configuration details are provided, perform the following steps

- Click Save Changes and proceed to cluster provisioning

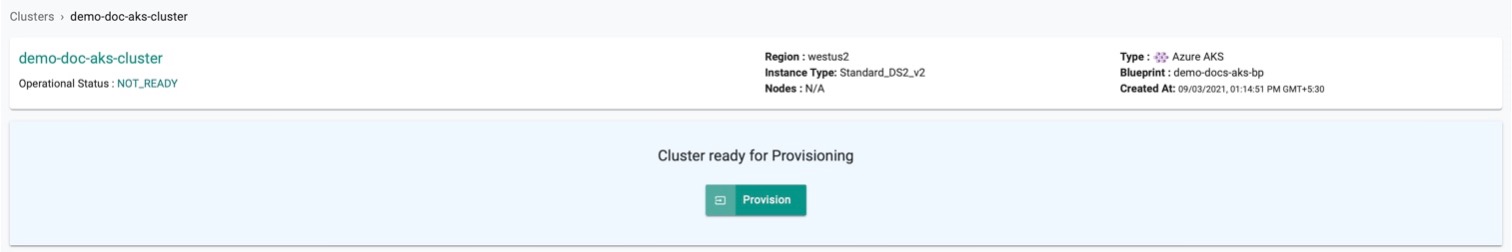

- The cluster is ready for provisioning. Click Provision

Important

As part of Azure AKS provisioning, a Standard_B1ms virtual machine is spun up and used for bootstrapping system components. Once the bootstrap is completed, this virtual machine is deleted.

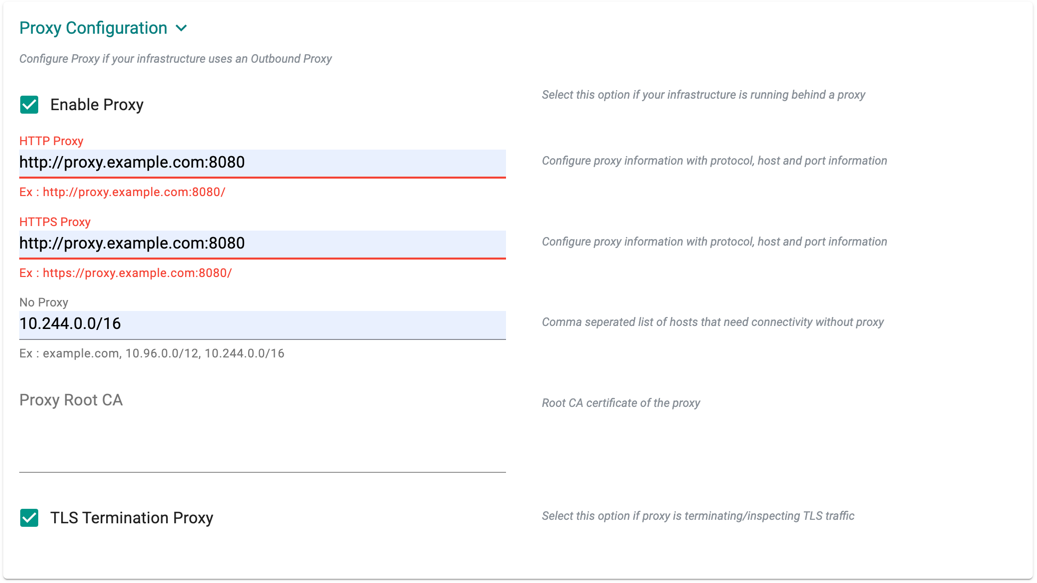

Proxy Configuration

- Select Enable Proxy if the cluster is behind a forward proxy.

- Configure the HTTP proxy with the proxy information (e.g. http://proxy.example.com:8080)

- Configure the HTTPS proxy with the proxy information (e.g. https://proxy.example.com:8080)

- Configure No Proxy with a comma-separated list of hosts that need connectivity without the proxy. The Kubernetes Service IP (from the default namespace) must be included

- Configure the Root CA certificate of the proxy if the proxy is terminating non-mTLS traffic

- Enable TLS Termination Proxy if the proxy is terminating non-mTLS traffic and it is not possible to provide the Root CA certificate of the proxy
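Since the No Proxy value must be a comma-separated list that includes the Kubernetes Service IP, a small helper can assemble it and guard against forgetting that entry. This is a sketch under assumptions: the function name is hypothetical, and the address `10.0.0.1` is only an example value. The real Service IP should be read from the cluster, for instance with `kubectl get svc kubernetes -n default`.

```python
from typing import Iterable


def build_no_proxy(hosts: Iterable[str], kubernetes_service_ip: str) -> str:
    """Join hosts into a comma-separated No Proxy value that always
    contains the Kubernetes Service IP, without duplicates."""
    entries = []
    for host in list(hosts) + [kubernetes_service_ip]:
        host = host.strip()
        if host and host not in entries:
            entries.append(host)
    return ",".join(entries)


# Example values only; replace them with the hosts and Service IP of your cluster.
print(build_no_proxy(["localhost", "127.0.0.1", ".svc"], "10.0.0.1"))
# localhost,127.0.0.1,.svc,10.0.0.1
```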

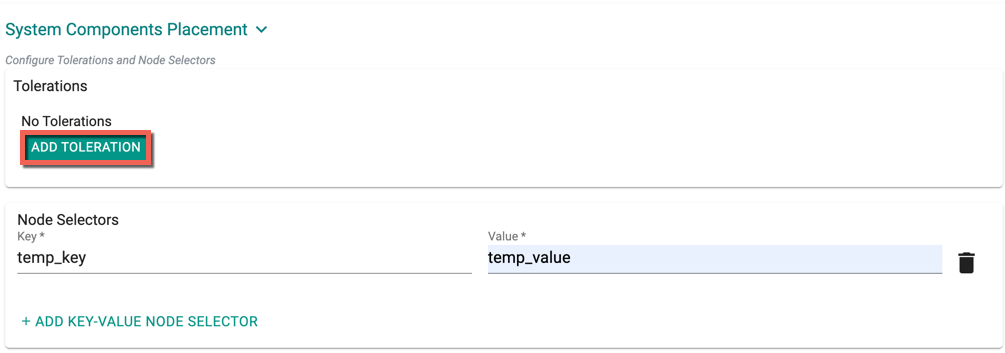

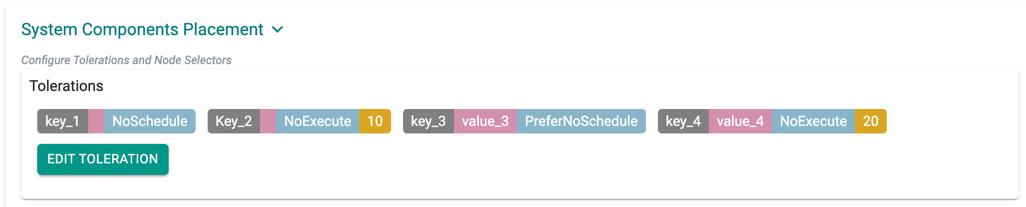

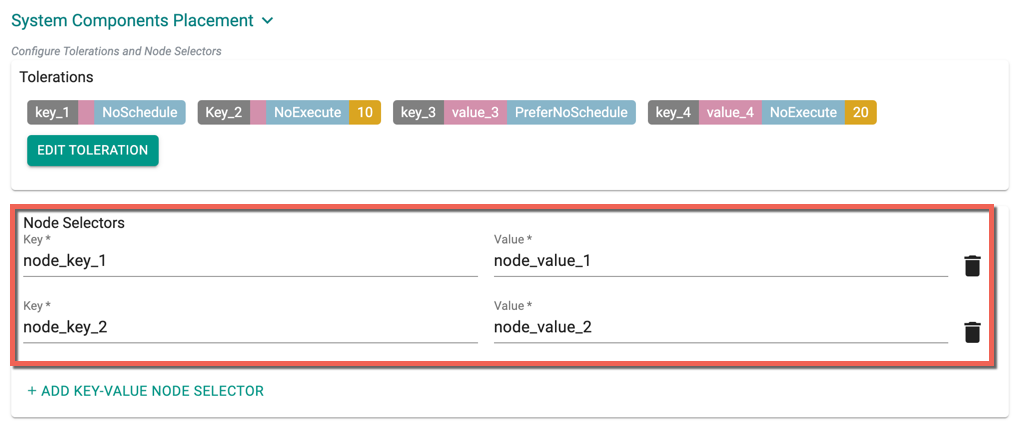

System Components Placement

Users can configure Kubernetes tolerations and a nodeSelector in the advanced settings. Tolerations allow the Kubernetes scheduler to schedule pods onto nodes with matching taints. Users can apply one or more taints to a node, and that node will not accept any pods that do not tolerate the taints.

If nodes without taints exist in the cluster, use a nodeSelector to ensure the pods are scheduled to the desired nodes. These tolerations and the nodeSelector are configured at the cluster level and are applied to the managed add-ons and core components. All the pods that are part of managed add-ons and components contain the tolerations and nodeSelector in their YAML spec. Users can set corresponding taints (node pool taints) and labels (node pool labels) on the node pool

System Components Placement can contain a list of tolerations and nodeSelector
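The way a toleration is matched against a node taint can be sketched in a few lines. The code below follows the general Kubernetes matching rules (an empty effect on the toleration matches any effect, the `Exists` operator ignores the value, and `Equal` compares values); the class and function names are illustrative only and are not part of the controller or of any Kubernetes client library.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Taint:
    key: str
    effect: str          # e.g. "NoSchedule" or "NoExecute"
    value: str = ""


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # an empty key with operator "Exists" matches every key
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # an empty effect matches every effect


def tolerates(toleration: Toleration, taint: Taint) -> bool:
    """Return True when the toleration matches the given taint."""
    if toleration.effect and toleration.effect != taint.effect:
        return False
    if toleration.key and toleration.key != taint.key:
        return False
    if toleration.operator == "Exists":
        return True
    return toleration.operator == "Equal" and toleration.value == taint.value


def pod_can_schedule(tolerations: Sequence[Toleration], taints: Sequence[Taint]) -> bool:
    """A pod fits a node only if every hard taint is tolerated by some toleration."""
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in tolerations) for taint in hard)


node_taints = [Taint(key="dedicated", value="system", effect="NoSchedule")]
key_value_effect = [Toleration(key="dedicated", operator="Equal", value="system", effect="NoSchedule")]
key_effect = [Toleration(key="dedicated", operator="Exists", effect="NoSchedule")]

print(pod_can_schedule(key_value_effect, node_taints))  # True
print(pod_can_schedule(key_effect, node_taints))        # True
print(pod_can_schedule([], node_taints))                # False
```

The two toleration shapes in the example correspond loosely to the Key-Value-Effect and Key-Effect forms offered in the wizard.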

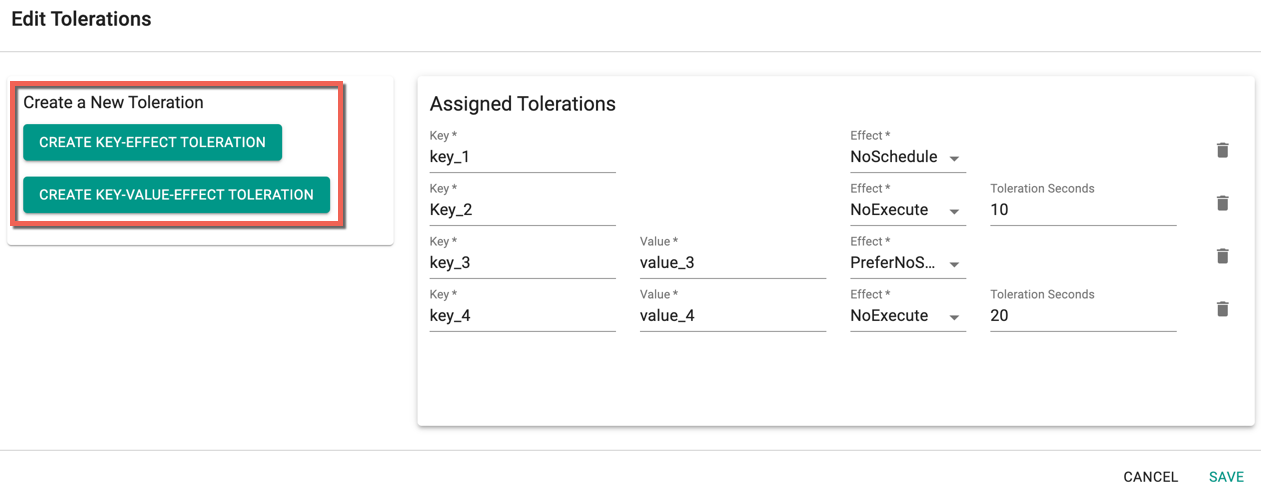

- Click Add Toleration to add one or more tolerations at cluster level

- Create one or more Key-Effect and Key-Value-Effect tolerations

- Click Save

You can view the list of tolerations created, as shown in the example below

Important

Managed add-ons and core components are placed on nodes with matching taints. If none of the nodes have taints, the add-ons and core components are spread across all available nodes

- Click Add Key-Value Node Selector to add one or more node selectors

Important

The node selector key-value pairs are matched against the labels defined at the node pool level

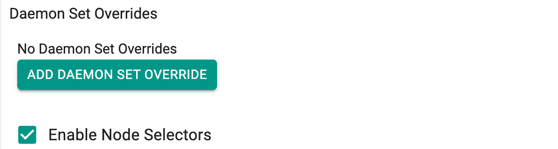

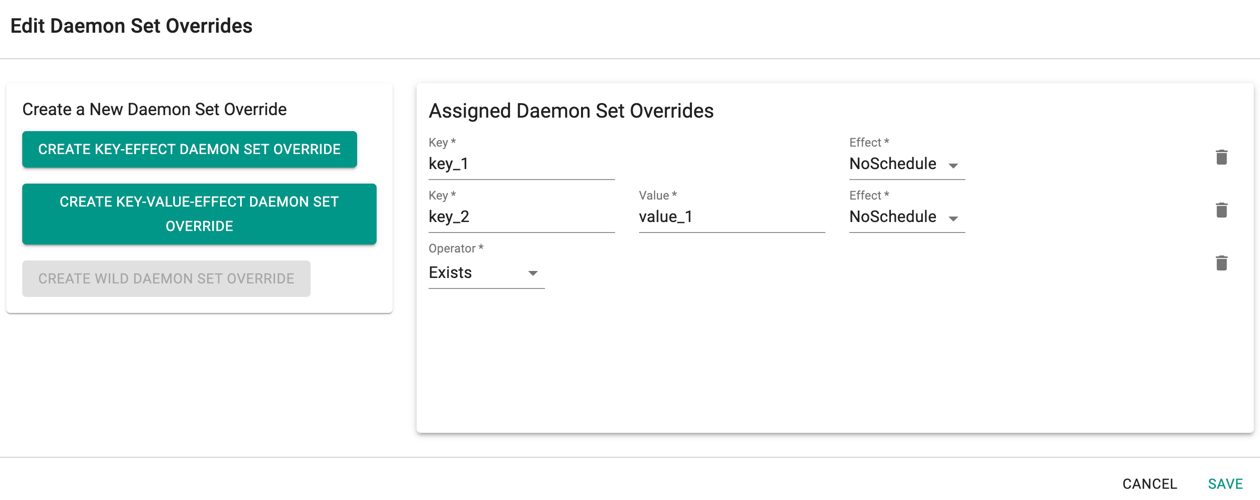

- Enabling Daemon Set Overrides allows users to add extra tolerations to the Rafay daemon sets so that they match the taints present on the nodes.

Recommendation

Use the tolerations in daemon set overrides to ensure that the daemon sets run on every node pool

- Click Add Daemon Set Override to create one or more Key-Effect and Key-Value-Effect tolerations. If the daemon set tolerations match the taints already present on the nodes, the daemon sets are deployed on those nodes

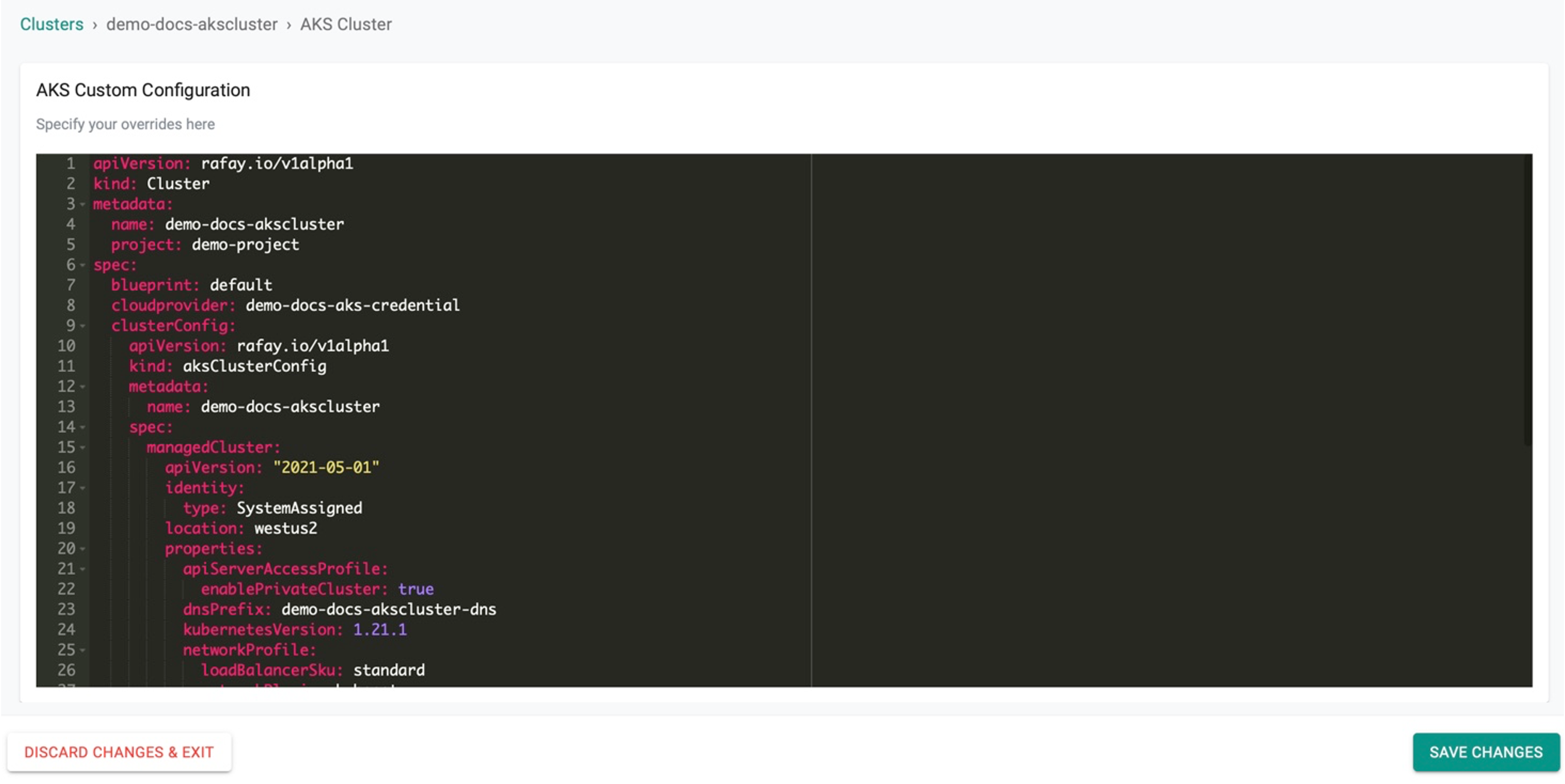

Customize Cluster¶

Click SAVE & CUSTOMIZE to customize the cluster. Users can also use the self service wizard to create a "baseline cluster configuration", view the YAML based specification, update/save, and use the updated configuration to provision an AKS cluster. This can be very useful for advanced cluster customization or for advanced features that are only supported via the "cluster configuration file"

Step 1¶

Click SAVE & CUSTOMIZE

Step 2¶

This will present the user with the baseline cluster configuration in a YAML viewer. The user has two options for customizing the cluster configuration before provisioning using the self service wizard.

- (a) Copy the configuration, make changes offline, paste the updated configuration and Save, OR

- (b) Make the required changes inline in the YAML viewer and Save

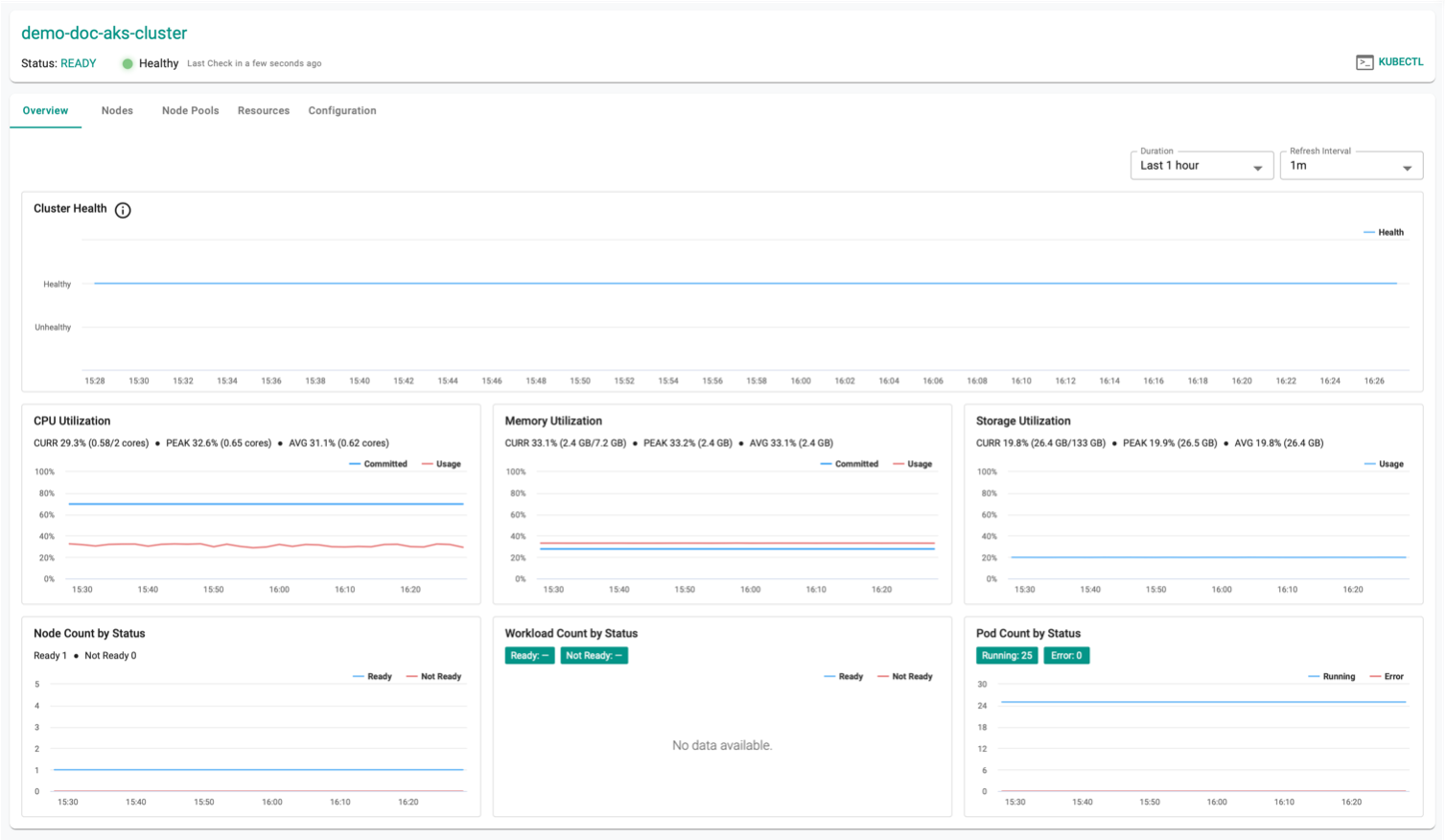

Successful Provisioning¶

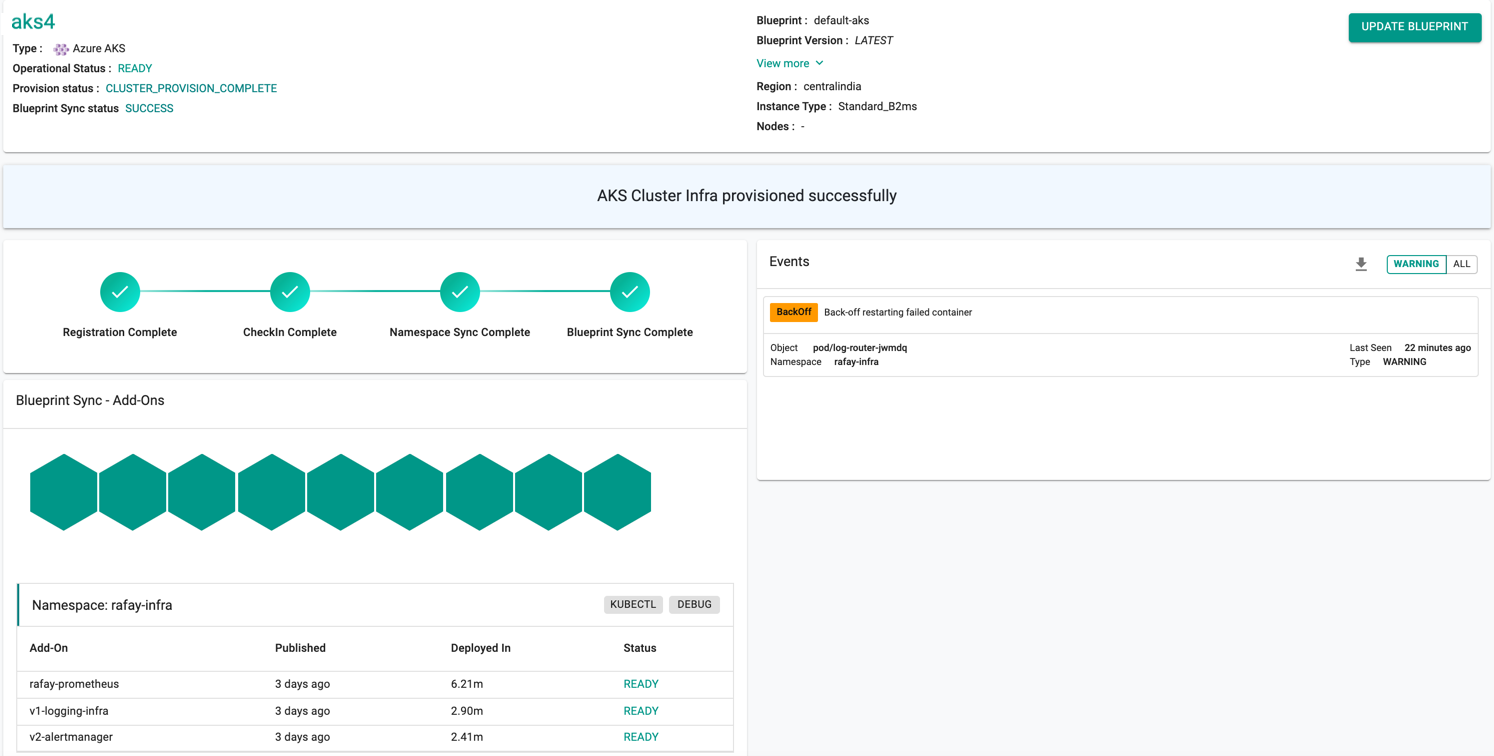

Once all the steps are complete, the cluster is provisioned as per the specified configuration. Users can now view and manage the Azure AKS Cluster in the specified Project in the Controller. After successful provisioning, the user can view the dashboards

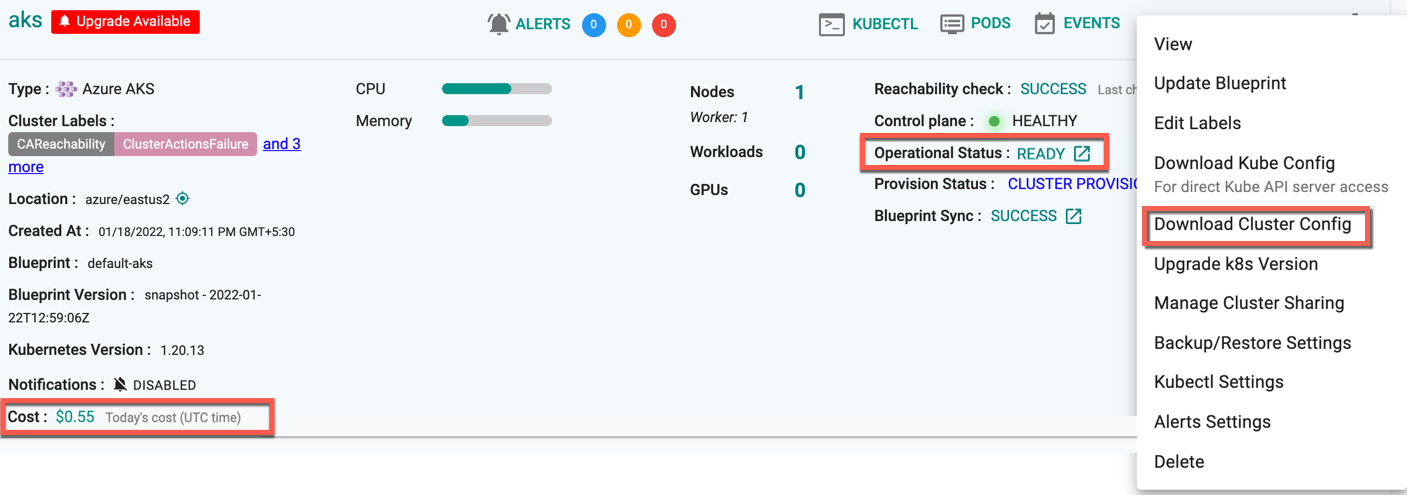

Download Config¶

Administrators can download the AKS Cluster's configuration either from the console or using the RCTL CLI

On successful cluster provisioning, users can view the detailed operations and workflow of the cluster by clicking the Operation Status Ready icon. The screen shows all the stages that occurred during cluster deployment. Click View more to see the Region, Instance Type, and number of Nodes

AAD Cluster Config

When using the Azure-provided Kubeconfig with Azure role-based access control (RBAC) enabled, the user needs to have one of four Azure roles assigned to them, depending on specific requirements.

- Azure Kubernetes Service RBAC Reader

- Azure Kubernetes Service RBAC Writer

- Azure Kubernetes Service RBAC Admin

- Azure Kubernetes Service RBAC Cluster Admin

For more information on each role, refer to this page

Failed Provisioning¶

Cluster provisioning can fail if the user has misconfigured the cluster (e.g. wrong cloud credentials) or has hit soft limits for resources in their Azure account. When this occurs, the user is presented with an intuitive error message. Users can edit the configuration and retry provisioning

Note: The Region field in the General settings is disabled when the user modifies the configuration of a failed cluster

Refer to Troubleshooting for the different scenarios where troubleshooting is needed.

Automated Cluster Creation¶

Users can also automate the cluster creation process without any form of inbound access to their VNet. Clusters can be created from a config file.